“Containerized services must be stateless” was a widely held doctrine in the early days of containerization, which arrived hand in hand with microservices. Statelessness makes elasticity easy, but these days we containerize many types of services, such as databases, which cannot be stateless without losing their purpose. This is where the Kubernetes container storage interface (CSI) comes in.

Docker, initially released in 2013, brought containerized applications to the vast majority of users (outside of the Solaris and BSD world), making them a commodity for the masses. Kubernetes, in turn, eased the process of orchestrating complex container-based systems. Both systems offer data storage options, either ephemeral (temporary) or persistent. Let’s dive into the concepts of container-attached storage and Kubernetes CSI.

What is Container Attached Storage (CAS)?

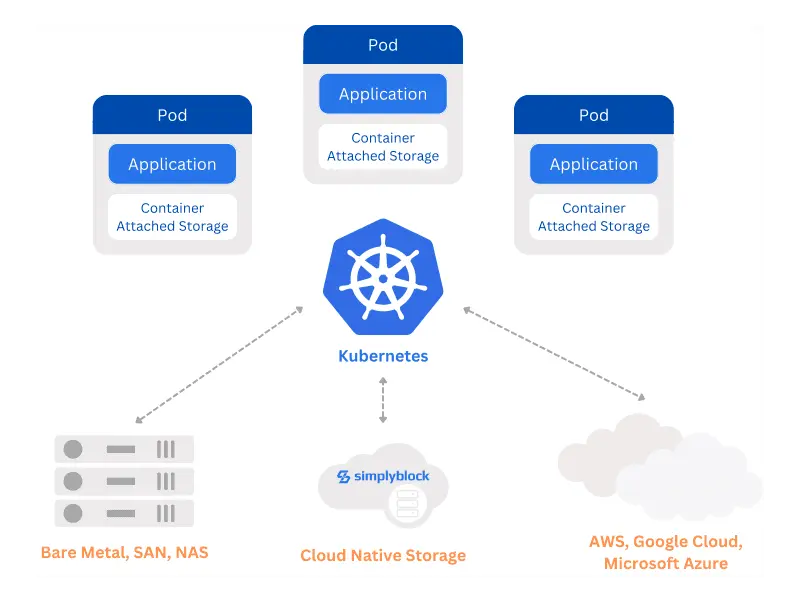

When containerized services need disk storage, whether ephemeral or persistent, container-attached storage (or CAS) provides the requested “virtual disk” to the container.

The CAS resources are managed alongside other container resources and are directly tied to the container’s own lifecycle. That means that storage resources are automatically provisioned and, where appropriate, de-provisioned. To achieve this functionality, the management of container-attached storage resources isn’t provided by the host operating system but is directly integrated into the container runtime environment, that is, into systems such as Kubernetes, Docker, and others.

Since the storage resource is attached to the container, it isn’t used by the host operating system or other containers. Separating storage from compute resources provides one of the building blocks of loosely coupled services, which small and independent development teams can easily manage.

From my perspective, five main principles are important to CAS:

- Native: Storage resources are first-class citizens of containerized environments. Therefore, the overall container runtime environment seamlessly integrates with and fully manages them.

- Dynamic: Storage resources are (normally) coupled to their container’s lifecycle. This allows for on-demand provisioning of storage volumes whose size and performance profile are tailored to the application’s needs. The dynamic nature and automatic resource management remove the need for manual management of volumes and devices.

- Decoupled: Storage resources are decoupled from the underlying infrastructure, meaning the container doesn’t know (or care) where the provided storage comes from. That makes it easy to provide different storage options, like high-performance or highly resilient storage, to different containers. For super-high performance but ephemeral storage, even RAM disks would be an option.

- Efficient: By eliminating the need for traditional storage, e.g., local storage, it is easy to optimize resource utilization using special storage clusters, thin provisioning, and over-commitment. It also makes it easy to provide multi-regional backups and enables immediate re-attachment in case the container needs to be rescheduled on another cluster node.

- Agnostic: The storage provider can be easily exchanged due to the decoupling of storage resources and container runtime. This prevents vendor lock-in and provides the option to utilize multiple different storage options, depending on the needs of specific applications. A database running in a container will have very different storage requirements from a normal REST API service.

Given the five principles above, we have the chance to provide each and every container with the exact storage option it needs. Some may need only ephemeral storage, that is, temporary storage that can be discarded when the container itself stops. Others need persistent storage, which either lives until the container is deleted or, in specific cases, even survives the container to be reattached to a new one (for example, in the case of container migration).
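To make the difference between ephemeral and persistent storage more concrete, here is a minimal sketch that builds Kubernetes manifests as plain Python dictionaries. The Pod names, image names, claim name, and the 10Gi size are made up for illustration; the `emptyDir` and `persistentVolumeClaim` volume types are standard Kubernetes fields.

```python
import json


def pod_with_volume(name: str, image: str, volume: dict) -> dict:
    """Build a minimal Pod manifest that mounts one volume at /data."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "app",
                    "image": image,  # hypothetical image name
                    "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                }
            ],
            "volumes": [{"name": "data", **volume}],
        },
    }


# Ephemeral: an emptyDir volume is created with the Pod and removed with it.
cache_pod = pod_with_volume("cache", "example/cache:1.0", {"emptyDir": {}})

# Persistent: the claim is its own resource and outlives the Pod using it.
claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "db-data"},  # hypothetical claim name
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "10Gi"}},
    },
}
db_pod = pod_with_volume(
    "db", "example/db:1.0", {"persistentVolumeClaim": {"claimName": "db-data"}}
)

if __name__ == "__main__":
    for manifest in (cache_pod, claim, db_pod):
        print(json.dumps(manifest, indent=2))
```

Since kubectl accepts JSON as well as YAML, the printed manifests could be saved to files and applied to a test cluster, although the image names would need to be replaced with real ones first.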

What is a Container Storage Interface (Kubernetes CSI)?

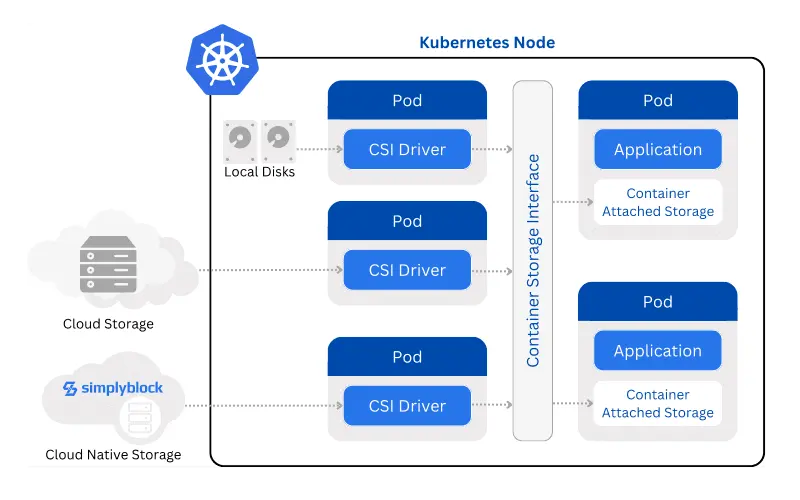

Like everything in Kubernetes, the container-attached storage functionality is provided by a set of microservices orchestrated by Kubernetes itself, making it modular by design. Services provided internally by Kubernetes and extended by vendors make up the container storage interface (or Kubernetes CSI). Together, they create a well-defined interface for any type of storage option to be plugged into Kubernetes.

The container storage interface defines a standard set of functionalities, some mandatory and some optional, to be implemented by the Kubernetes CSI drivers. Those drivers are commonly provided by the different vendors of storage systems.

Hence, the CSI drivers build bridges between Kubernetes and the actual storage implementation, which can be physical, software-defined, or fully virtual (like an implementation sending all data “stored” to /dev/null). At the same time, the interface allows vendors to implement their storage solution as efficiently as possible, since they only need to provide a minimal set of operations for provisioning and general management. That way, vendors can choose how to implement storage, with the two main categories being hyperconverged, where compute and storage share the same cluster nodes, and disaggregated, where the actual storage environment is fully separated from the Kubernetes workloads using it, bringing a clear separation of storage and compute resources.
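The idea of a driver sitting behind a small, well-defined contract can be sketched in a few lines of Python. This is a toy model, not the real CSI API (which is a set of gRPC services): the class names, method names, and the split into mandatory and optional operations are all assumptions chosen for illustration. The `NullDriver` mimics the “fully virtual” case by discarding everything written to it.

```python
from abc import ABC, abstractmethod


class UnsupportedOperation(Exception):
    """Raised when a driver does not implement an optional operation."""


class StorageDriver(ABC):
    """Hypothetical, simplified driver contract (not the real CSI gRPC API)."""

    @abstractmethod
    def create_volume(self, name: str, size_bytes: int) -> str:
        """Provision a volume and return its ID."""

    @abstractmethod
    def delete_volume(self, volume_id: str) -> None:
        """Release a volume."""

    def expand_volume(self, volume_id: str, new_size_bytes: int) -> int:
        """Optional: drivers that can resize volumes override this."""
        raise UnsupportedOperation("expand_volume")


class NullDriver(StorageDriver):
    """Fully virtual backend: tracks volumes but discards everything written."""

    def __init__(self) -> None:
        self.volumes = {}  # volume ID -> size in bytes

    def create_volume(self, name, size_bytes):
        volume_id = f"null-{name}"
        self.volumes[volume_id] = size_bytes
        return volume_id

    def delete_volume(self, volume_id):
        self.volumes.pop(volume_id, None)

    def write(self, volume_id, data):
        if volume_id not in self.volumes:
            raise KeyError(volume_id)
        return len(data)  # report success, store nothing


def provision_and_grow(driver, name, size_bytes, new_size_bytes):
    """Orchestrator-side logic that only relies on the driver contract."""
    volume_id = driver.create_volume(name, size_bytes)
    try:
        size = driver.expand_volume(volume_id, new_size_bytes)
    except UnsupportedOperation:
        size = size_bytes  # fall back to the original size
    return volume_id, size


if __name__ == "__main__":
    print(provision_and_grow(NullDriver(), "scratch", 1024, 4096))
```

The orchestrator-side function never needs to know which backend it talks to. Swapping `NullDriver` for a driver backed by real disks would not change `provision_and_grow`, which is the point of a standardized interface.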

Just like Kubernetes, the container storage interface is developed as a collaborative effort inside the Cloud Native Computing Foundation (better known as CNCF) by members from all sides of the industry, vendors and users alike.

The main goal of Kubernetes CSI is to deliver on the promise of being fully vendor-neutral. In addition, it enables parallel deployment of multiple different drivers, offering storage classes for each of them. This provides us, as users, with the ability to choose the best storage technology for each container, even in the same Kubernetes cluster.
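As a rough sketch of how several drivers can coexist in one cluster, the following Python snippet builds two StorageClass manifests and two claims that each pick a different class. The provisioner names (`fast.csi.example.com`, `resilient.csi.example.com`), class names, and sizes are hypothetical; real drivers document their own provisioner name and parameters.

```python
import json


def storage_class(name: str, provisioner: str) -> dict:
    """Build a StorageClass that routes volume requests to one CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,  # identifies the CSI driver to use
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }


def claim(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim bound to a specific StorageClass."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class_name,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


# Two hypothetical drivers deployed side by side in the same cluster.
classes = [
    storage_class("fast", "fast.csi.example.com"),
    storage_class("resilient", "resilient.csi.example.com"),
]

# Each workload picks the class that matches its needs.
claims = [
    claim("db-data", "fast", "100Gi"),
    claim("archive-data", "resilient", "1Ti"),
]

if __name__ == "__main__":
    for manifest in classes + claims:
        print(json.dumps(manifest, indent=2))
```

The claims only name a storage class, never a driver. Which backend actually provisions the volume is decided by the class, so the backend can change without touching the workloads.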

As mentioned, the Kubernetes CSI driver interface provides a standard set of storage (or volume) operations. These include creation or provisioning, resizing, snapshotting, cloning, and volume deletion. The operations can either be performed directly or through Kubernetes resources, including custom resource definitions (CRDs), integrating into the consistent approach to managing container resources.
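The operations listed above map onto Kubernetes resources roughly as sketched below, again using plain Python dictionaries. Resource names, sizes, the storage class `fast`, and the snapshot class `fast-snapshots` are assumptions for illustration. Whether resizing, snapshotting, and cloning actually work depends on the installed CSI driver, and snapshots additionally require the snapshot CRDs and controller to be present in the cluster.

```python
import copy
import json


def pvc(name, size, storage_class_name, data_source=None):
    """Build a PersistentVolumeClaim, optionally pre-populated from a source."""
    spec = {
        "storageClassName": storage_class_name,
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": size}},
    }
    if data_source is not None:
        spec["dataSource"] = data_source
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


# Creation: a new claim triggers provisioning through the CSI driver.
original = pvc("db-data", "10Gi", "fast")

# Resizing: the same claim with a larger requested size.
resized = copy.deepcopy(original)
resized["spec"]["resources"]["requests"]["storage"] = "20Gi"

# Snapshotting: VolumeSnapshot is a custom resource provided by a CRD.
snapshot = {
    "apiVersion": "snapshot.storage.k8s.io/v1",
    "kind": "VolumeSnapshot",
    "metadata": {"name": "db-data-snap"},
    "spec": {
        "volumeSnapshotClassName": "fast-snapshots",  # hypothetical class
        "source": {"persistentVolumeClaimName": "db-data"},
    },
}

# Restoring: a new claim that uses the snapshot as its data source.
restored = pvc(
    "db-data-restored", "10Gi", "fast",
    data_source={
        "apiGroup": "snapshot.storage.k8s.io",
        "kind": "VolumeSnapshot",
        "name": "db-data-snap",
    },
)

# Cloning: a new claim that uses an existing claim as its data source.
clone = pvc(
    "db-data-clone", "10Gi", "fast",
    data_source={"kind": "PersistentVolumeClaim", "name": "db-data"},
)

if __name__ == "__main__":
    for manifest in (original, resized, snapshot, restored, clone):
        print(json.dumps(manifest, indent=2))
```

Deletion needs no manifest of its own: removing the claim releases the volume, and the storage class’s reclaim policy decides whether the underlying volume is deleted or retained.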

Editor’s note: We also have a deep dive into how the Kubernetes Container Storage Interface works.

Kubernetes and Stateful Workloads

For many people, containerized workloads should be fully stateless; in the past, this was the most commonly repeated mantra. With the rise of orchestration platforms such as Kubernetes, it became more typical to deploy stateful workloads as well, often because of the simplified deployment. Orchestrators offer features like automatic elasticity, restarting containers after crashes, automatic migration of containers for rolling upgrades, and many more typical operational procedures. Having them built into an orchestration platform takes a lot of the burden off operators, hence people started to deploy more and more databases.

Databases aren’t the only stateful workloads, though. Other applications and services may also need to store some kind of state, sometimes as a local cache using ephemeral storage, and sometimes in a more persistent fashion, as databases do.
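In Kubernetes, stateful workloads like these are commonly deployed as a StatefulSet, where each replica gets its own persistent volume through a claim template. The sketch below builds such a manifest in Python. The workload name, image, mount path, size, and storage class are placeholders; the naming pattern for the generated claims (`<template>-<statefulset>-<ordinal>`) is standard Kubernetes behavior.

```python
import json


def stateful_set(name, image, replicas, size, storage_class_name):
    """Build a StatefulSet whose replicas each get their own volume."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,  # hypothetical image name
                            "volumeMounts": [
                                {"name": "data", "mountPath": "/var/lib/data"}
                            ],
                        }
                    ]
                },
            },
            # One PersistentVolumeClaim is created from this template per replica.
            "volumeClaimTemplates": [
                {
                    "metadata": {"name": "data"},
                    "spec": {
                        "accessModes": ["ReadWriteOnce"],
                        "storageClassName": storage_class_name,
                        "resources": {"requests": {"storage": size}},
                    },
                }
            ],
        },
    }


def expected_claim_names(manifest):
    """List the claim names Kubernetes derives for each replica."""
    sts_name = manifest["metadata"]["name"]
    replicas = manifest["spec"]["replicas"]
    return [
        f"{template['metadata']['name']}-{sts_name}-{ordinal}"
        for template in manifest["spec"]["volumeClaimTemplates"]
        for ordinal in range(replicas)
    ]


db = stateful_set("db", "example/db:1.0", 3, "50Gi", "fast")

if __name__ == "__main__":
    print(json.dumps(db, indent=2))
    print(expected_claim_names(db))
```

Because each replica keeps its own claim, a restarted or rescheduled replica is reattached to the same volume, which is what makes running databases on an orchestrator practical.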

Benjamin Wootton (then working for Contino, now at Ensemble) wrote a great blog post about the difference between stateless and stateful containers and why the latter is needed. You should read it, but only after this one.

Your Kubernetes Storage with Simplyblock

The container storage interface in Kubernetes serves as the bridge between Kubernetes and external storage systems. It provides a standardized and modular approach to provisioning and managing container-attached storage resources.

By decoupling storage functionality from the Kubernetes core, Kubernetes CSI promotes interoperability, flexibility, and extensibility. This enables organizations to seamlessly leverage storage solutions for Kubernetes, tailoring the storage to the needs of each container individually.

With the evolving ecosystem and Kubernetes workloads shifting towards databases and other IO-intensive or low-latency applications, storage becomes increasingly important. Simplyblock is your distributed, disaggregated, high-performance, predictable, low-latency, and resilient storage solution. Simplyblock is tightly integrated with Kubernetes through the CSI driver and available as a StorageClass. It enables storage virtualization with overcommitment, thin provisioning, NVMe over TCP access, copy-on-write snapshots, and many more features.

If you want to learn more about simplyblock, read “Why simplyblock?” If you want to get started, we believe in simple pricing.

Questions and Answers

Kubernetes manages storage by combining the CSI (Container Storage Interface) with container-attached storage (CAS) solutions. CSI provides a standard API for interacting with storage backends, while CAS enables running the storage layer directly inside the cluster—offering scalability and flexibility for cloud-native workloads.

Container-attached storage refers to storage solutions that run as containers within Kubernetes itself. CAS systems manage data locally or across clusters and often use CSI to expose volumes to workloads, enabling cloud-native storage provisioning with reduced external dependencies.

Simplyblock provides a CSI-compatible, high-performance block storage solution based on NVMe over TCP. Unlike traditional CAS solutions, it supports true scale-out, encryption, and snapshotting while maintaining performance across hybrid and multi-cloud environments.

Yes. With CSI integration, CAS solutions can support stateful Kubernetes workloads like databases, AI pipelines, and analytics systems, offering persistent, resilient, and scalable storage tailored to container-native environments.

CSI provides a vendor-agnostic interface for managing volumes, which simplifies storage operations in Kubernetes. It decouples storage lifecycle from the core platform and enables seamless integration with modern, high-speed storage backends like simplyblock.