Bringing AI Applications from Prototype to Production: The Last Mile

Sep 22nd, 2025 | 8 min read

I recently wrote a LinkedIn post about bridging the gap between MVP and production for AI applications, which eventually inspired this article. In the post, I said: “The gap between idea → MVP and MVP → production-grade is still huge. If we can generate full apps with AI in minutes… shouldn’t we also be able to bridge that last mile?” The reactions confirmed a simple truth.

AI has made it incredibly easy to build prototypes, but moving those prototypes into reliable production remains a massive challenge for enterprises. The excitement of an AI-powered demo often fades when the realities of scale, compliance, and operational complexity come into play.

The New Reality: Prototypes in Minutes

Modern AI tools like bolt.new or Builder.io have changed how quickly teams can move from an idea to a working MVP. With just a few prompts, some auto-generated code, and rapid iterations, developers can spin up an application, a website, or an internal tool in hours.

For product teams, this speed is transformative. They can test more ideas, validate assumptions early, and show tangible progress to stakeholders without waiting months for engineering resources. Prototypes that once required weeks of planning and building can now be assembled in a single sprint, often with minimal developer involvement.

This acceleration is reshaping expectations. If AI can deliver prototypes so quickly, business leaders naturally expect the same speed when it comes to deploying production systems. And yet, this is exactly where momentum stalls. The gap between prototyping and production highlights the need for scalable, ready-to-use solutions, from infrastructure to analytics.

The Wall Between MVP and Production

Turning an MVP into a production-grade system is where complexity returns. The same AI-generated app that looked polished in a demo now needs to withstand the scale and risk of a live environment.

One major challenge is the data. Prototypes often run on small synthetic datasets or simplified samples. Production environments involve live data at scale, full of irregularities, edge cases, and sensitive information. AI models trained on simplified datasets often fail when exposed to this real-world complexity.

Engineering complexity adds another layer of friction. Prototypes lean heavily on scaffolding, shortcuts, or no-code tools. But production requires resilient infrastructure: automated testing, version control, monitoring, high availability, compliance checks, and secure integrations with existing systems. Suddenly, the architecture needs to change. Teams must replace parts of the stack, rewire pipelines, and add processes that slow things down.

Finally, there is organizational risk. A buggy AI model or a broken database migration in production can mean more than an outage. It can result in data leaks, regulatory violations, or broken customer trust. Enterprises deliberately slow down the deployment process because the cost of mistakes is simply too high.

The result is a widening gap. AI allows teams to generate prototypes in minutes, but enterprises still take months to harden these prototypes into production-ready systems.

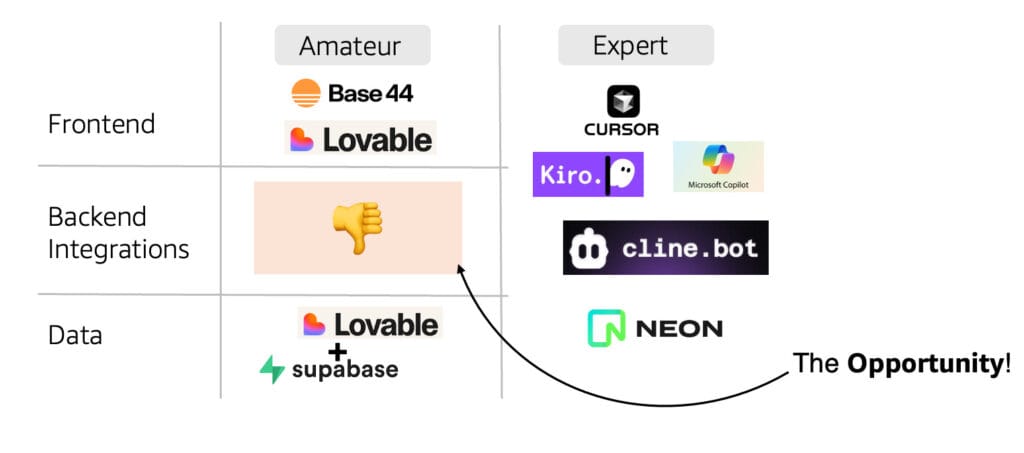

The Hidden Backend Problem

Ewa Treitz recently described this issue from another angle: AI-generated backends often become a black box. Engineers may be able to debug them, but for business users, they are inaccessible. Non-technical teams cannot iterate or improve something they cannot see or understand. As a result, the backend becomes a bottleneck.

This highlights a deeper opportunity: automating backend work for business users. If business leaders and product managers had access to safe, automated ways to clone, test, and scale their systems, they could keep projects moving forward instead of waiting on engineering cycles. Backend automation creates a shared foundation where both technical and non-technical stakeholders can collaborate without risking production.

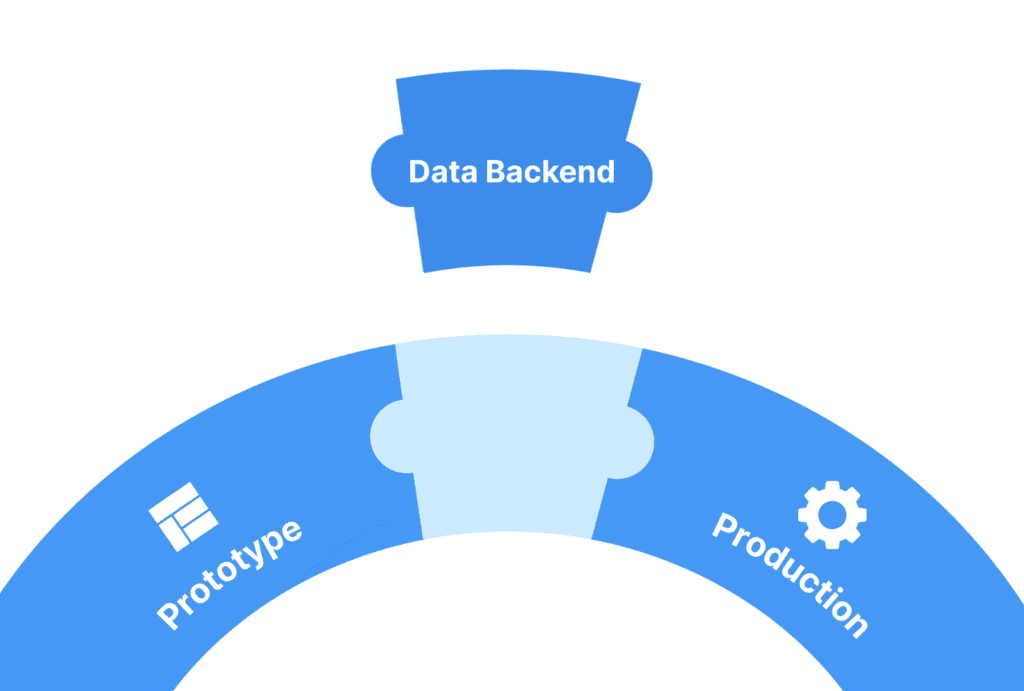

This is the exact gap we are addressing with Vela, which offers a unified data backend for all business and AI applications. AI has made the front-end and application layer dramatically faster, but without a reliable backend layer, the last mile will always remain blocked. What enterprises need is not just a better database but a business backend: an automated foundation that connects AI-generated applications with enterprise-grade production systems.

An AI business backend does more than store data. It provides instant cloning and branching so teams can test against production-like environments without delay. It integrates governance, RBAC, and compliance so business users can work safely within guardrails instead of waiting for IT. Such a backend decouples compute from storage so costs stay under control, even when workloads scale. And it makes complex workflows, from migrations to rollbacks to anonymization, available as self-service operations instead of long engineering projects.
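Vela's internals aren't described here, but a common technique behind "instant" clones and branches is copy-on-write: a new branch shares its parent's data and stores only the rows it changes. The toy Python sketch below illustrates that idea with an in-memory key-to-row mapping. The `Branch` class and its methods are hypothetical and are not Vela's API or any real database's API.

```python
from __future__ import annotations

from typing import Dict, Optional

Row = Dict[str, str]


class Branch:
    """Toy copy-on-write branch over a key -> row mapping (hypothetical, not a real API)."""

    def __init__(self, parent: Optional[Branch] = None, base: Optional[Dict[str, Row]] = None):
        self.parent = parent
        # The root branch holds the base data; child branches start with an empty overlay.
        # A value of None in the overlay is a tombstone marking a key deleted on this branch.
        self.overlay: Dict[str, Optional[Row]] = dict(base or {})

    def branch(self) -> Branch:
        # Constant-time "clone": nothing is copied, the child just points at its parent.
        return Branch(parent=self)

    def get(self, key: str) -> Optional[Row]:
        # Walk up the chain of parents until some branch has an entry for this key.
        node: Optional[Branch] = self
        while node is not None:
            if key in node.overlay:
                return node.overlay[key]
            node = node.parent
        return None

    def put(self, key: str, row: Row) -> None:
        # Writes land only in this branch's overlay; ancestors are never modified.
        self.overlay[key] = row

    def delete(self, key: str) -> None:
        # Record a tombstone instead of touching the parent's data.
        self.overlay[key] = None


prod = Branch(base={"u1": {"plan": "pro"}, "u2": {"plan": "free"}})
test = prod.branch()  # instant branch for testing
test.put("u1", {"plan": "enterprise"})
test.delete("u2")

print(prod.get("u1"), prod.get("u2"))  # production data is unchanged
print(test.get("u1"), test.get("u2"))  # the branch sees only its own changes
```

Production-grade systems typically apply the same idea to storage pages or blocks rather than individual rows, which is what lets a clone of a large database appear almost immediately. This toy version only shows the lookup-and-overlay logic.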

Why the “AI Gap” Matters

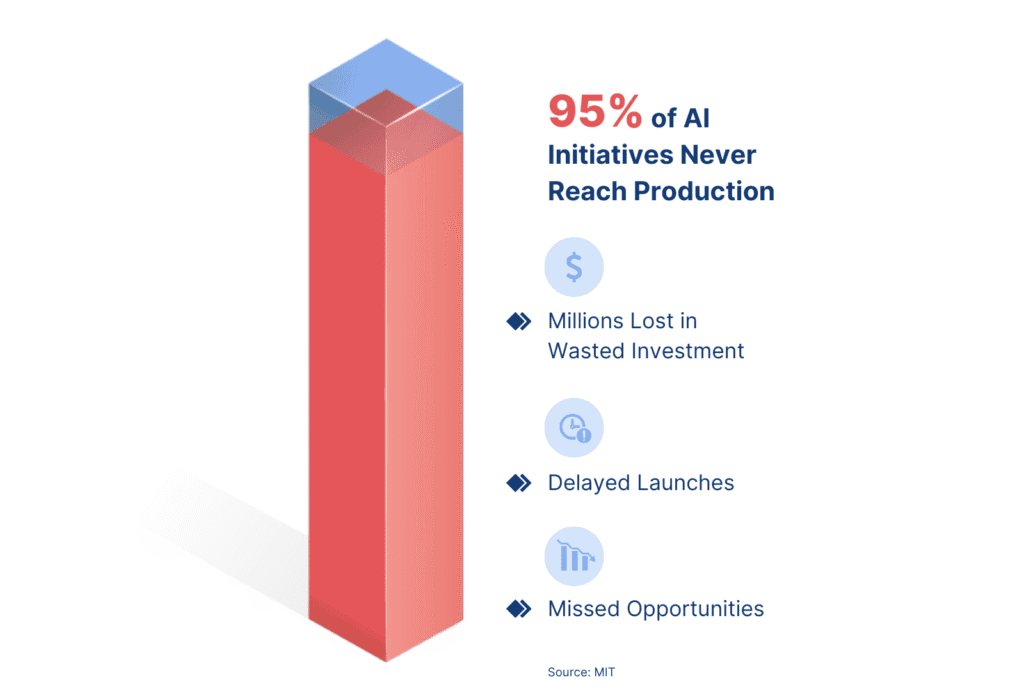

The gap between prototype and production for AI applications carries real business consequences. Projects that lose momentum in this stage often fail to deliver any impact at all. MIT has estimated that more than 95% of AI initiatives never make it into production. For enterprises, that translates into millions of dollars lost in wasted investment, delayed launches, and missed market opportunities.

The cost is not only financial. Innovation cycles slow down, and engineering teams get trapped in endless rework. By the time a project is ready to launch, the market may have shifted, and the opportunity may be gone. The inability to bridge the last mile creates frustration for developers and skepticism from business leaders, weakening trust in AI-driven initiatives.

The GenAI Divide: Why the Gap Persists

MIT’s State of AI in Business 2025 report calls this problem the GenAI Divide. The survey responses highlight the same underlying frustration in different words: AI feels brittle, manual, and disconnected from enterprise workflows. Users say it “doesn’t learn from our feedback,” “requires too much manual context,” “can’t be customized,” and “breaks in edge cases.” On the surface, these look like model flaws, but they are really symptoms of a deeper issue: AI tools are being run outside of enterprise data environments.

Without access to live, production-like data, AI cannot accumulate knowledge across sessions, adapt to company-specific workflows, or handle messy real-world edge cases. Instead, every interaction feels like starting from scratch, forcing users to re-supply context that already exists in their systems. What looks like a failure of the model is actually a failure of the environment it runs in. This helps explain why over 95% of generative AI pilots never make it into production.

Bridging the divide requires enterprise-ready data infrastructure — systems that make production-like datasets safely accessible for testing and iteration. Only then can AI move from brittle prototypes to durable, evolving tools that truly integrate into core workflows.

What Enterprises Need to Close the “AI Gap”

Enterprises cannot afford to let promising AI applications die before reaching production. Closing the gap requires new ways of managing data, testing, and deployment.

The first requirement is production-like testing from the very beginning. QA and staging environments should be instant, accurate reflections of production, not outdated snapshots or stripped-down mock databases. When environments stay in sync, teams can detect problems early instead of discovering them after release.
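One lightweight way to notice when a staging environment has drifted from production is to compare schema snapshots. The sketch below assumes each schema has already been exported as a mapping of table name to column types (for example, by querying `information_schema.columns`); the `diff_schemas` function and the sample tables are hypothetical.

```python
from typing import Dict, List

Schema = Dict[str, Dict[str, str]]  # table name -> {column name: column type}


def diff_schemas(prod: Schema, staging: Schema) -> List[str]:
    """Return human-readable differences between a production and a staging schema."""
    issues: List[str] = []
    for table in sorted(prod.keys() | staging.keys()):
        if table not in staging:
            issues.append(f"missing table in staging: {table}")
            continue
        if table not in prod:
            issues.append(f"extra table in staging: {table}")
            continue
        prod_cols, stage_cols = prod[table], staging[table]
        for col in sorted(prod_cols.keys() | stage_cols.keys()):
            if col not in stage_cols:
                issues.append(f"{table}.{col}: missing in staging")
            elif col not in prod_cols:
                issues.append(f"{table}.{col}: extra in staging")
            elif prod_cols[col] != stage_cols[col]:
                issues.append(
                    f"{table}.{col}: type {stage_cols[col]} in staging, "
                    f"{prod_cols[col]} in production"
                )
    return issues


prod = {"users": {"id": "bigint", "email": "text", "created_at": "timestamptz"}}
staging = {"users": {"id": "integer", "email": "text"}, "tmp_import": {"id": "integer"}}

for issue in diff_schemas(prod, staging):
    print(issue)
```

Running a check like this in CI before each release is one way to catch an outdated staging snapshot before it hides a production-only failure.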

The second requirement is safe access to real data. Developers and data scientists need to test against realistic data volumes and structures, but enterprises must protect sensitive information. This calls for instant cloning of production databases with built-in anonymization, compliance controls, and Row-Level Security (RLS), which restricts the rows each user or role is allowed to see. Teams can then work with data that behaves like production without compromising data privacy and security.
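A minimal sketch of the anonymization step, assuming rows arrive as Python dicts and the set of sensitive columns is known in advance. It uses keyed hashing (HMAC-SHA256) so the same input always maps to the same pseudonym, which keeps joins and uniqueness checks working in the cloned environment while hiding the original values. The column names, `ANON_KEY`, and the helper functions are illustrative assumptions, not part of any specific product. Note that pseudonymized data can still count as personal data under regulations such as GDPR.

```python
import hashlib
import hmac
from typing import Dict, Iterable, Iterator

# Hypothetical secret; in a real setup this would be loaded from a secrets manager.
ANON_KEY = b"replace-with-a-secret-key"

# Columns assumed to contain sensitive data (illustrative).
SENSITIVE_COLUMNS = {"email", "full_name", "phone"}


def pseudonymize(value: str, key: bytes = ANON_KEY) -> str:
    """Map a value to a stable token: same input and same key give the same output."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"anon_{digest[:16]}"


def anonymize_rows(rows: Iterable[Dict[str, object]]) -> Iterator[Dict[str, object]]:
    """Yield copies of rows with non-null sensitive columns replaced by pseudonyms."""
    for row in rows:
        yield {
            col: pseudonymize(str(val)) if col in SENSITIVE_COLUMNS and val is not None else val
            for col, val in row.items()
        }


production_rows = [
    {"id": 1, "email": "jane@example.com", "plan": "pro"},
    {"id": 2, "email": "jane@example.com", "plan": "free"},
    {"id": 3, "email": None, "plan": "free"},
]

for row in anonymize_rows(production_rows):
    print(row)
```

Because the mapping is deterministic, both rows for the same email receive the same token, so queries that group or join on that column behave as they would in production. The source rows are left untouched; only the yielded copies are altered.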

The third requirement is developer-friendly infrastructure. Engineers should not waste time waiting days for staging refreshes or fighting for access to a shared test database. They need self-service environments that can be provisioned instantly and scaled independently. That freedom accelerates development and reduces bottlenecks across teams.

Finally, platform engineering must be seen as a business enabler, not just an internal IT function. Companies that invest in giving their platform teams the right tools move faster, deploy more safely, and spend less. Those that stick to manual processes and fragile staging setups continue to lose time and money.

The Bigger Picture: AI Demands Faster Execution

AI is changing the speed of innovation. The ability to generate applications in minutes has shifted the bottleneck away from building and toward operationalizing. The real competitive advantage no longer lies in who can build the fastest prototype. It lies in who can bring those prototypes into production safely, consistently, and at scale.

Enterprises that master this transition will capture the value of AI before their competitors. They will iterate faster, launch more confidently, and avoid the expensive mistakes that come with outdated infrastructure. Those that fail to adapt will continue to see their AI projects stall in the gap between MVP and production.

Closing Thoughts

AI has solved the slow prototyping issue. We will never go back to spending hours trying to specify our idea for an application. Instead, we will build an MVP with a few prompts. The challenge now is bridging the last mile. Enterprises need to invest in smarter infrastructure that enables production-like testing, safe data access, and developer-friendly workflows.

If we can build entire apps with AI in minutes, we should also be able to take them into production without months of rework and risk. The companies that solve this problem will not only deploy AI faster but will also unlock its full business potential.