Faster Feedback for Database Changes – How Real Database Branching Changes the Game

Aug 28th, 2025 | 10 min read

TL;DR: A database change management process is a great way to keep track of database schema changes and apply them automatically. It doesn’t eliminate the human factor, though. When writing code or maintaining a codebase, we still need to ensure that schema and semantic changes are applied consistently, and that we understand the semantics well enough to develop for all edge cases. Production-grade database branches are the logical extension of existing database change management.

I love to develop software. I worked as a software engineer for many years in various industries, and much of my work was related to databases. Either I had to talk to databases directly (with or without ORMs) or I was using databases indirectly through some set of microservices, built by other teams or even other companies.

Either way, every so often (more often than I want to admit), something still failed in production. Sometimes, the data didn’t look like what I was told. Sometimes, the database team applied schema changes that nobody knew about. Most often, that meant making columns nullable, seemingly just because they could (I bet there were reasons, but that’s how it felt at the time).

Many such issues were eventually “solved” using database change management tools such as Liquibase or Flyway. However, they won’t solve issues where data uses semantics to convey meaning. Data can still look unexpected. That is true, even if all our beloved unit tests are green. And it’s true, even if our staging environments claim that tests are successful (depending on how you build your staging).

What is Database Change Management?

Let’s have a quick refresher on what database change management is.

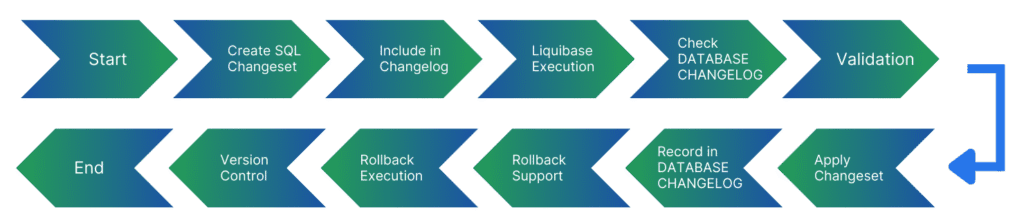

Database change management is all about bringing order to how database schemas and data evolve over time. Instead of developers firing off ad-hoc ALTER TABLE or INSERT statements in production, changes are tracked, versioned, and deployed in a controlled way. It doesn’t just sound like source control for your database changes; that’s how it typically works. It is usually implemented via a Git repository, with changes being versioned and often replayable both forward and backward.

If a change needs to be made to a database, you create a new .sql file, name it according to the date-time (ISO 8601 for the win) or with an incremental number, add the changes, and commit it to the repository.

At deployment time, the changes are applied to the database in their natural order. After each run, the executing tool records the last applied change set in the database, so it knows where in the migration chain to resume on the next run.

This ensures that all required database changes for a specific version are applied after the run, no matter the initial starting version. At its core, the process enforces idempotent, repeatable, and reversible database operations. It also makes dependencies explicit: you know which migrations must run first, which ones can run in parallel, and what impact they’ll have on performance, a critical factor in any migration from Amazon RDS to PostgreSQL.
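The core loop of such a tool can be sketched in a few lines. This is a minimal, in-memory illustration, not how Liquibase or Flyway are implemented: `createFakeDb`, `migrate`, and the migration names are hypothetical, and a real tool would run SQL files and keep its bookkeeping in a table inside the target database.

```javascript
// In-memory stand-in for a database (hypothetical, for illustration only).
const createFakeDb = () => ({ applied: [], lastApplied: null });

// Applies every migration newer than the last recorded one, in name order.
// ISO 8601 timestamps and zero-padded numbers sort correctly as plain strings.
const migrate = (db, migrations) => {
  const pending = migrations
    .slice()
    .sort((a, b) => (a.name < b.name ? -1 : a.name > b.name ? 1 : 0))
    .filter((m) => db.lastApplied === null || m.name > db.lastApplied);

  for (const migration of pending) {
    migration.up(db); // run the change itself
    db.lastApplied = migration.name; // remember where to resume next time
  }
  return pending.map((m) => m.name);
};

// Deliberately listed out of order: the runner sorts by name.
const migrations = [
  {
    name: "2025-08-02T10-00_make_password_nullable.sql",
    up: (db) => db.applied.push("make password_hash nullable"),
  },
  {
    name: "2025-08-01T09-00_create_users.sql",
    up: (db) => db.applied.push("create users table"),
  },
];

const db = createFakeDb();
const firstRun = migrate(db, migrations); // applies both, oldest first
const secondRun = migrate(db, migrations); // applies nothing, already up to date
```

Because the runner only looks at the last recorded name, a database that already has the first migration picks up just the second one, which is the "no matter the initial starting version" property described above.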

The promise: with database change management, teams can move fast, keep databases stable, and avoid the infamous “it worked locally but blew up in production” problem.

What Database Change Management is Not

Here’s the thing: database change management is not your silver bullet. As engineers, we love to think of everything new as exactly that, our new, shiny silver bullet. It’s rarely true.

Btw, speaking of silver bullets: a good friend of mine, Hadi Hariri, gave an excellent presentation a few years back about the “Silver Bullet Syndrome.” I highly recommend watching it.

Tools like Liquibase take an enormous burden off our shoulders. They handle schema changes and required data migrations, and they are an integral part of the testing and deployment processes. However, they can’t prevent human errors, such as making wrong assumptions about semantics baked into the model.

Likewise, encoding business rules in a schema is commonplace. These rules need to be validated to ensure data quality. Changes to the semantics or misjudging how enums, foreign keys, or indexing strategies evolve can create long-term headaches. And business rules change all the time. At least, that is my experience.

Why Database Change Management Still Hurts

Here’s the reality: most of us develop against databases that are a shadow of production. Maybe it’s a small local PostgreSQL instance seeded with a few hundred rows of synthetic test data. Maybe it’s a shared staging environment that hasn’t been refreshed in three months because no one wants to deal with the dump/restore cycle. Either way, you’re testing on something that doesn’t represent the real world.

Wrong Data Assumptions

Let’s have a quick look at two examples. First, we want to read a user from the database to check the password on login. However, just recently, OAuth2 authentication was added. In the process, the password_hash column was made nullable.

    const checkPassword = async (username, password) => {
      const user = await db.queryOne(
        "SELECT id, username, password_hash FROM users WHERE username=$1",
        username
      );
      if (!user) return false;
      const passwordHash = hashPassword(password).toLowerCase();
      // Ugly hack to fix some casing issue in the database
      const dbPasswordHash = user.password_hash.toLowerCase();
      return dbPasswordHash === passwordHash;
    };

You see the ugly hack? Raise your hand if you’ve never seen a comment like this. Now raise your hand if you created a comment like it. Right?! Whoever had the hand down on both questions should “throw the first stone.”

Anyway, the code looks ok, but in production it fails now.

    TypeError: Cannot read properties of null (reading 'toLowerCase')

It’s a quick fix, but it’s embarrassing nonetheless. The problem is how we test. The unit tests are still green because they weren’t updated. And there is a good chance that the integration tests weren’t updated either. The reason is simple: they belong to the implementation. If I don’t change that, why would I change the tests?
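One possible shape of that quick fix is sketched below. It assumes that an OAuth2-only account stores NULL in password_hash and should never pass a password login. `matchesStoredHash` is a hypothetical helper, `hashPassword` is a deliberately insecure stand-in for the real hashing function, and `db` is passed in as a parameter here so the query can be faked in tests.

```javascript
// Insecure placeholder for the real hashing function (illustration only).
const hashPassword = (password) => `hash:${password}`;

// Pure comparison step, separated from the query so the NULL case
// can be covered by a unit test without a database.
const matchesStoredHash = (user, password) => {
  if (!user) return false;
  // OAuth2-only accounts have no local password: password_hash is NULL.
  if (user.password_hash == null) return false;
  const passwordHash = hashPassword(password).toLowerCase();
  return user.password_hash.toLowerCase() === passwordHash;
};

const checkPassword = async (db, username, password) => {
  const user = await db.queryOne(
    "SELECT id, username, password_hash FROM users WHERE username=$1",
    username
  );
  return matchesStoredHash(user, password);
};

// The edge case that production data would have surfaced:
const oauthOnlyUser = { id: 7, username: "jane", password_hash: null };
const oauthLogin = matchesStoredHash(oauthOnlyUser, "secret"); // false, no TypeError
```

Splitting the comparison out of the query is a design choice, not a requirement. It simply makes it cheap to pin the newly discovered edge case down in a unit test.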

Wrong Semantics

Another great example is the inherent meaning in data. Imagine a company that has only sold products in the USA for the past couple of years since its inception. Naturally, your database is built according to this environment.

Now, suddenly, management decides to jump into new markets. A great way to grow the business. Everyone is on board. Only a few small changes are required, and… we’re ready to go. But are we?

    // stmt is an existing java.sql.Statement
    ResultSet rs = stmt.executeQuery(
        "SELECT phone_number FROM customers"
    );
    while (rs.next()) {
        String phone = rs.getString("phone_number");
        // Accepts only the US style NNN-NNN-NNNN, without a country code
        if (!phone.matches("\\d{3}-\\d{3}-\\d{4}")) {
            throw new IllegalArgumentException(
                "Invalid phone format: " + phone
            );
        }
    }

If you’re in the United States, you’ve seen this: the typical way to write a US phone number. Nothing’s wrong with it, except that it has no country code (which you need for international customers) and that the number format doesn’t apply to most other countries in the world.

A few years ago, I tried to register an account with a company and exactly that issue kept me away even though Germany was a totally valid residence country to select. Eventually, I used our company number in Palo Alto to sign up. Not exactly how it should be.
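A more forgiving check could normalize numbers to the international E.164 shape instead of hard-coding one national format. The sketch below is in JavaScript rather than Java and makes several assumptions: `normalizePhone` is a hypothetical helper, the set of stripped separators is arbitrary, and the regular expression only checks the shape of a number, not whether it actually exists.

```javascript
// E.164 shape: a leading "+", then at most 15 digits, the first one not 0.
const E164 = /^\+[1-9]\d{1,14}$/;

// Strips common visual separators, then checks the E.164 shape.
// Returns the normalized number, or null instead of throwing, so a caller
// can collect all offending rows rather than stopping at the first one.
const normalizePhone = (raw) => {
  if (raw == null) return null;
  const compact = raw.replace(/[\s().-]/g, "");
  return E164.test(compact) ? compact : null;
};

const usNumber = normalizePhone("+1 (650) 555-0100"); // "+16505550100"
const germanNumber = normalizePhone("+49 30 1234567"); // "+49301234567"
const legacyNumber = normalizePhone("650-555-0100"); // null: no country code
```

Legacy US rows without a country code come back as null here. Whether to reject them or to prefix them with +1 in a data migration is a business decision the code cannot make on its own.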

Faster Feedback, Fewer Bugs

As developers, we often sit in a vacuum. The business side frequently hands us requirements that aren’t technically thought through, because that’s not their job. And no matter how hard we try to anticipate issues, we miss edge cases that will happen in production, or that have already happened without us knowing.

What we really need is a database that encapsulates real-world-like data. Running our code against a production-grade dataset will quickly uncover issues and edge cases we hadn’t thought about.

Don’t get me wrong, unit tests are super important. What I’m saying is, such a database can help us reveal edge cases we want to integrate into our unit tests. It also helps to ensure that the semantics are all covered, and we don’t apply the “happy world testing” principle, meaning, testing only the good code paths.

As developers, feedback speed is everything. If I can quickly get a database with data that represents the real world, I can provide better code, faster. I don’t have to imagine potential edge cases. I can quickly discover them by running my code against the data.
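In practice, "running my code against the data" can be as simple as scanning rows and collecting every assumption that doesn’t hold. The following is a minimal sketch: `findViolations`, the two checks, and the sample rows are hypothetical, and on a real branch the rows would come from a query instead of an array literal.

```javascript
// Runs each named check against every row and collects the failures
// instead of throwing on the first one.
const findViolations = (rows, checks) => {
  const violations = [];
  for (const row of rows) {
    for (const [name, isValid] of Object.entries(checks)) {
      let ok;
      try {
        ok = isValid(row);
      } catch (err) {
        ok = false; // a crash on real data is itself a finding
      }
      if (!ok) violations.push({ id: row.id, check: name });
    }
  }
  return violations;
};

// Assumptions the application code silently relies on.
const checks = {
  hasPasswordHash: (row) => row.password_hash !== null,
  usPhoneFormat: (row) => /^\d{3}-\d{3}-\d{4}$/.test(row.phone_number),
};

// Stand-in for rows read from a production-like clone.
const rows = [
  { id: 1, password_hash: "ab12", phone_number: "650-555-0100" },
  { id: 2, password_hash: null, phone_number: "650-555-0101" },
  { id: 3, password_hash: "cd34", phone_number: "+49 30 1234567" },
];

const report = findViolations(rows, checks);
// report lists row 2 (hasPasswordHash) and row 3 (usPhoneFormat)
```

Each entry in the report is a candidate for a new unit test, which is how real data feeds back into the test suite.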

How Vela Pulls It Off

The trick to getting production-grade data for testing is instant database cloning (also known as database branching). From a golden production copy (which can already be anonymized), Vela clones off new databases with a complete set of data in seconds.

We’re not dumping data, not restoring snapshots, not waiting hours for a dataset to load. With Vela’s storage engine, clones are effectively created at a block level using copy-on-write. You can branch a multi-terabyte production database in seconds and start testing immediately.

The magic here isn’t a clever script. Vela is built upon our NVMe/TCP software-defined storage engine, built for performant snapshotting and cloning of existing data volumes.
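To see why a copy-on-write clone doesn’t depend on the amount of data, consider the toy model below. It is only an illustration of the general technique, not Vela’s storage engine: the `Volume` class is hypothetical, and real systems track blocks on disk with far more sophisticated metadata than an array.

```javascript
// Toy model: a volume is a list of references to immutable blocks.
class Volume {
  constructor(blocks) {
    this.blocks = blocks;
  }

  // Cloning copies only the list of references, never the block contents,
  // so the cost depends on metadata size rather than on data size.
  clone() {
    return new Volume(this.blocks.slice());
  }

  read(index) {
    return this.blocks[index].data;
  }

  // Copy-on-write: a write allocates a new block for this volume only.
  // Every other volume keeps pointing at the old, untouched block.
  write(index, data) {
    this.blocks[index] = Object.freeze({ data });
  }
}

const production = new Volume([
  Object.freeze({ data: "users page 1" }),
  Object.freeze({ data: "users page 2" }),
]);

const branch = production.clone();
branch.write(1, "users page 2 (migrated)");
```

After the write, the branch sees its own version of the second block while production still reads the original, and the untouched first block is stored only once.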

During the initial cloning process from production to our golden clone, we can anonymize data using mapping rules to encode and preserve semantic meaning. Seconds later, you can create a full-blown database clone and run your tests or try to reproduce bugs.
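What such a mapping rule might look like is sketched below. The names `pseudonym`, `rules`, and `anonymizeRow` are hypothetical and do not describe Vela’s actual rule format. The assumption is that values are replaced deterministically with a keyed hash, while NULLs and the email domain are kept because they carry meaning the tests depend on.

```javascript
const { createHmac } = require("node:crypto");

// Keyed hash: the same input always maps to the same pseudonym, so joins
// and uniqueness constraints still hold after anonymization.
const pseudonym = (value, secret) =>
  createHmac("sha256", secret).update(value).digest("hex").slice(0, 12);

// One rule per column that needs rewriting (hypothetical rule set).
const rules = {
  // Keep the domain: which mail provider is used can matter for tests.
  email: (value, secret) => {
    const at = value.lastIndexOf("@");
    return `${pseudonym(value.slice(0, at), secret)}@${value.slice(at + 1)}`;
  },
  full_name: (value, secret) => `user-${pseudonym(value, secret)}`,
};

const anonymizeRow = (row, columnRules, secret) => {
  const out = { ...row };
  for (const [column, rule] of Object.entries(columnRules)) {
    // Preserve NULLs: nullability is exactly the kind of edge case
    // the test data should keep.
    if (out[column] != null) out[column] = rule(out[column], secret);
  }
  return out;
};

const original = { id: 1, email: "jane@example.com", full_name: null };
const anonymized = anonymizeRow(original, rules, "demo-secret");
```

The secret would have to be kept out of the test environments. Otherwise, the pseudonyms for guessable values could be recomputed.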

Why This Matters for Developers and QA

As developers, we’ve gotten used to the idea that code can be branched, tested, and discarded. We rely on that safety net to try new ideas and ship faster. Databases have been the stubborn outlier, but that doesn’t have to be the case anymore.

With real database cloning, we can stop guessing. We can stop writing code against toy datasets. We can stop being surprised when a QA cycle passes, but code still fails in production. Instead, you build, test, and iterate with confidence that your changes have been validated against the same complexity your users generate every day.

For QA engineers, the difference is night and day. No more “works on staging” bugs. No more delayed refreshes. Just quick, realistic test environments that mirror production, without the security risks. This is critical when planning fast backups and disaster recovery operations.

Database Cloning: The Perfect Extension of Database Change Management

Database change management has come a long way with tools like Liquibase or Flyway. They already save the many hours otherwise spent trying to recreate a production-like database schema. However, the feedback loop with real-world-like data has always been the limiting factor. Without access to production-grade test data, we ship code and migrations with blind spots.

Vela fixes that by making database branching as easy and natural as code branching. Instant clones, anonymized production data, disposable environments. We’ve got you covered. It’s the missing piece for faster iterations, fewer bugs, and less 3 a.m. rollback drama.

As a developer, I can’t stress enough how freeing it feels to treat the database like code. Want to try a risky migration? Branch it. Want to reproduce a gnarly bug? Branch it. Want to run integration tests on every pull request? Branch it.

Real database branching doesn’t just speed up feedback. It changes the way we build and ship software.