InfiniBand

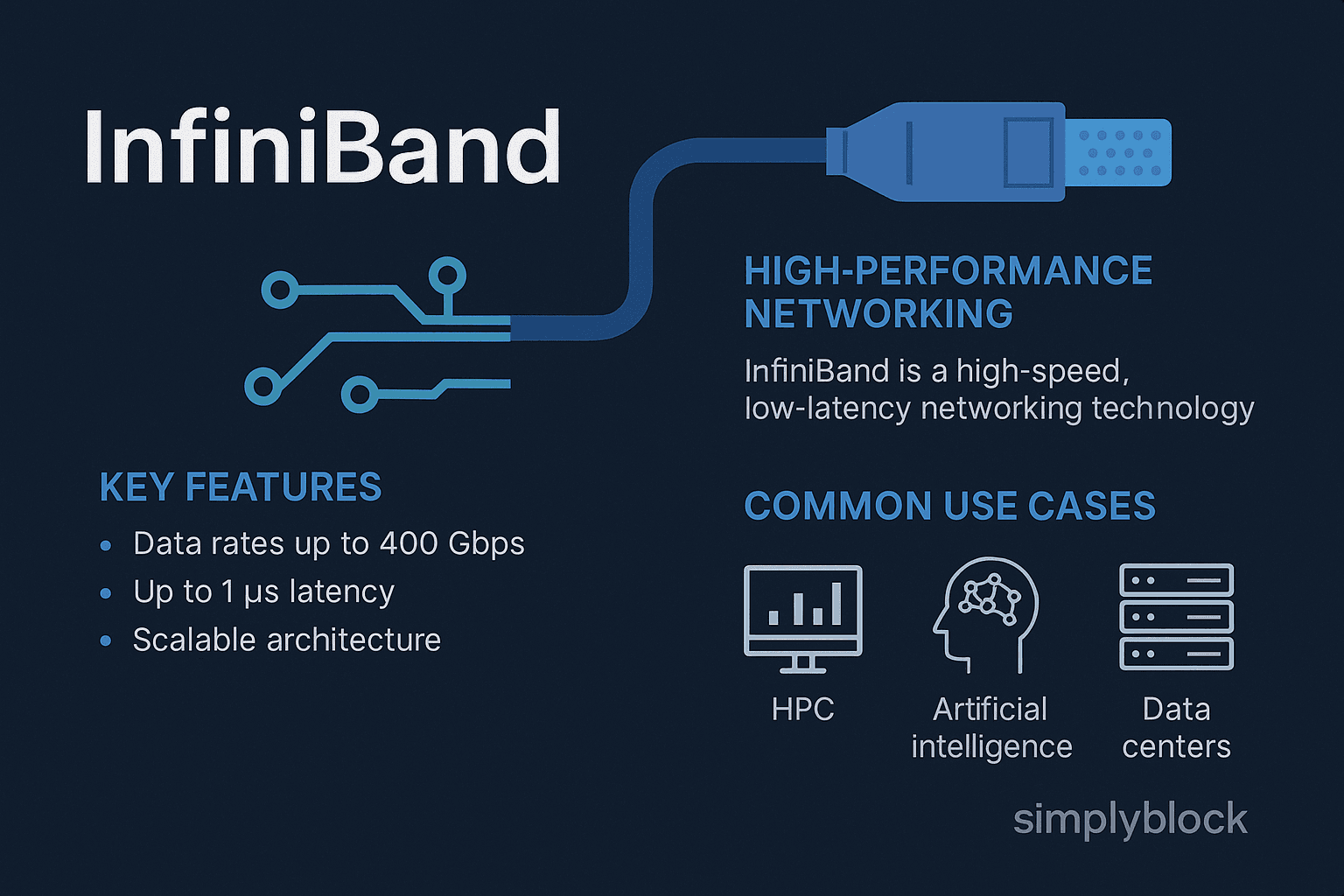

InfiniBand is a high-performance, low-latency networking architecture used primarily in high-performance computing (HPC), AI/ML clusters, and low-latency storage fabrics. Compared with general-purpose Ethernet, it is designed for very high throughput and minimal latency: current generations run at 100 Gbps (EDR), 200 Gbps (HDR), and 400 Gbps (NDR) per port, with sub-microsecond latency.

It supports Remote Direct Memory Access (RDMA), which lets the network adapter read and write application memory on a remote node directly, bypassing the operating system kernel and the remote CPU on the data path. This design drastically reduces overhead, making InfiniBand well suited to distributed file systems and to NVMe over Fabrics (NVMe-oF) with the RDMA transport.

How InfiniBand Works

InfiniBand is built on a switched fabric architecture rather than a shared bus: point-to-point serial links connect endpoints through switches. Key components and layers include:

- Host Channel Adapters (HCAs) for endpoint communication

- Switches to forward data across the fabric

- RDMA support for direct memory access between nodes

- Transport modes: Reliable connection (RC), unreliable datagram (UD), and more
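The transport modes differ mainly in which operations they permit. The sketch below encodes the commonly documented capability matrix for Reliable Connection, Unreliable Connection (UC, not named above), and Unreliable Datagram. The class and function names are hypothetical and exist only for illustration; they are not part of any verbs library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransportService:
    name: str
    reliable: bool         # delivery is acknowledged and retried by the adapter
    connected: bool        # a queue pair is bound to exactly one remote queue pair
    operations: frozenset  # operation types the service allows


# Illustrative capability matrix for three common transport services.
SERVICES = {
    "RC": TransportService("Reliable Connection", True, True,
                           frozenset({"send", "rdma_write", "rdma_read", "atomic"})),
    "UC": TransportService("Unreliable Connection", False, True,
                           frozenset({"send", "rdma_write"})),
    "UD": TransportService("Unreliable Datagram", False, False,
                           frozenset({"send"})),
}


def services_supporting(operation: str) -> list[str]:
    """Return the short names of the services that allow the given operation."""
    return sorted(key for key, svc in SERVICES.items() if operation in svc.operations)


print(services_supporting("rdma_read"))
print(services_supporting("send"))
```

In this model only RC allows RDMA reads and atomics, which is one reason storage transports such as NVMe-oF over RDMA typically rely on reliable connections.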

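The RDMA mechanism enables zero-copy networking: the adapter moves data directly between application buffers on each node, without intermediate kernel copies and without the CPU handling the payload, which reduces context switches and latency.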
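The difference between copying a buffer and sharing it can be shown without any RDMA hardware. The following is only an analogy in plain Python, not InfiniBand code: `bytes(...)` duplicates the data, while `memoryview` exposes the same memory without a copy, much as an RDMA adapter works on the application's registered buffer rather than on a kernel-side duplicate.

```python
# Analogy only: a buffer that is copied versus a buffer that is shared by reference.
payload = bytearray(b"block-0001")

copied = bytes(payload)        # a second, independent buffer (one extra copy)
shared = memoryview(payload)   # a view onto the same memory (no copy)

# The "application" updates its buffer in place (same length, so no resize).
payload[0:5] = b"BLOCK"

print(bytes(shared))   # the view reflects the update
print(copied)          # the copy still holds the old contents
```

Every copy that is avoided saves memory bandwidth and CPU cycles per transferred byte, which is where the latency and efficiency gains of zero-copy transports come from.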

InfiniBand supports communication between compute nodes, storage systems, and GPUs, making it foundational in large-scale AI and simulation clusters.

Benefits of InfiniBand in Storage and Compute

InfiniBand delivers key performance and scalability advantages:

- Sub-microsecond latency: Crucial for time-sensitive workloads like high-frequency trading (HFT), simulations, and AI inference

- High bandwidth: 200 Gbps with the HDR generation and 400 Gbps with NDR

- RDMA acceleration: Enables ultra-efficient NVMe-oF deployments

- CPU offloading: Reduces compute overhead by bypassing the OS kernel

- Scalable fabrics: Handles large node counts with minimal congestion

In storage, InfiniBand is used for NVMe-oF (RDMA) deployments, which deliver lower latency than NVMe/TCP at the cost of higher complexity and specialized hardware.
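To put the bandwidth figures in perspective, the short calculation below estimates the ideal time to move one terabyte at each link rate. It is a back-of-the-envelope sketch that assumes full line rate and ignores encoding and protocol overhead; the function name and the choice of a decimal terabyte are illustrative assumptions.

```python
def transfer_seconds(size_bytes: float, link_gbps: float) -> float:
    """Ideal time to move size_bytes over a link of link_gbps (no overhead)."""
    bits = size_bytes * 8
    return bits / (link_gbps * 1e9)


ONE_TB = 1e12  # decimal terabyte, chosen for illustration

for generation, gbps in [("EDR", 100), ("HDR", 200), ("NDR", 400)]:
    seconds = transfer_seconds(ONE_TB, gbps)
    print(f"{generation} ({gbps} Gbps): {seconds:.0f} s per TB")
```

Under these assumptions the transfer takes about 80, 40, and 20 seconds respectively; real-world throughput is lower once protocol overhead and storage limits are included.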

Use Cases for InfiniBand

InfiniBand is adopted in use cases where latency, throughput, and CPU efficiency are mission-critical:

- HPC clusters for scientific modeling, genomics, or fluid dynamics

- AI/ML training farms with GPU-to-GPU interconnect

- Distributed storage fabrics using NVMe-oF with RDMA

- High-frequency trading (HFT) systems

- Parallel databases with high write throughput and synchronous replication

- Supercomputing infrastructures at national labs and research institutes

InfiniBand vs Ethernet vs Fibre Channel

The table below compares the three fabrics on speed, latency, RDMA support, cost, and Kubernetes integration.

| Feature | InfiniBand | Ethernet (RoCE / TCP) | Fibre Channel |
|---|---|---|---|
| Max Speed | 400 Gbps (NDR) | 100–400 Gbps | 32–128 Gbps |
| Latency | <1 µs | 10–100 µs | 1–5 µs |
| RDMA Support | Native | RoCEv2 / iWARP | No native RDMA |
| CPU Offload | Yes | Partial (RoCE) | No |
| Protocol Flexibility | Low (IB-specific) | High (IP-based) | Moderate (FCP only) |
| Cost & Complexity | High | Moderate | High |
| Kubernetes Integration | Indirect / advanced users | Native with CSI + TCP/RoCE | Limited |
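Fabric latency matters most when few I/Os are in flight. The sketch below estimates IOPS at queue depth 1, where each request must wait for the device plus the fabric before the next one starts. The 80 µs device latency is a hypothetical value, and the fabric latencies are illustrative points picked from the ranges in the table, not measurements.

```python
def qd1_iops(device_latency_us: float, fabric_latency_us: float) -> float:
    """IOPS with one outstanding I/O: each request waits for device plus fabric."""
    total_us = device_latency_us + fabric_latency_us
    return 1_000_000 / total_us


DEVICE_US = 80.0  # hypothetical NVMe read latency, not a measured value

# Illustrative fabric latencies taken from the ranges in the comparison table.
for fabric, added_us in [("InfiniBand", 1.0), ("Fibre Channel", 5.0), ("Ethernet/TCP", 50.0)]:
    print(f"{fabric:14s} {qd1_iops(DEVICE_US, added_us):8.0f} IOPS at queue depth 1")
```

With these assumed numbers, a single outstanding request achieves roughly 12,300 IOPS over InfiniBand versus about 7,700 over a 50 µs TCP path. At higher queue depths the gap narrows, because requests overlap and throughput depends less on per-request latency.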

InfiniBand and Simplyblock

Simplyblock is designed around NVMe over TCP (NVMe/TCP), which runs on standard Ethernet and is easier to deploy in Kubernetes and cloud-native environments. However, for edge cases that require maximum throughput and tight latency control, InfiniBand may be used at the backend layer or in specialized HPC zones.

Key highlights in simplyblock’s fabric include:

- Sub-millisecond latency over standard Ethernet

- RDMA-like throughput via zero-copy techniques in NVMe/TCP

- Compatibility with Kubernetes CSI for stateful volumes

- Resilience via erasure coding and multi-zone replication

This allows simplyblock to offer near-RDMA performance without requiring InfiniBand hardware, improving adoption in hybrid and edge scenarios.

Related Technologies

Teams often review these glossary pages alongside InfiniBand when they design low-latency fabrics for RDMA traffic, storage transport, and infrastructure offload in clustered environments.

- RoCEv2

- NVMe/RDMA

- NVMe-oF Target on DPU

- In-Network Computing

Questions and Answers

InfiniBand is a high-speed, low-latency networking technology widely used in HPC, AI/ML, and data-intensive workloads. It delivers high bandwidth and supports remote direct memory access (RDMA), making it well suited to clustered databases, distributed storage, and scientific computing.

InfiniBand offers lower latency and higher throughput than standard Ethernet, but it requires specialized hardware. NVMe over TCP provides similar scalability and performance benefits on standard Ethernet, making it more accessible and Kubernetes-friendly.

InfiniBand is used in specialized Kubernetes setups, especially in HPC or AI clusters. However, it lacks native support in most CSI drivers. For broader compatibility and dynamic provisioning, Kubernetes-native NVMe storage is a more operationally flexible alternative.

InfiniBand isolates traffic between tenants through fabric partitioning (partition keys), but it relies on the storage or application layer for encryption at rest. For end-to-end data security in multi-tenant setups, encryption should be enforced at the volume level.

InfiniBand provides excellent performance but comes with higher costs, a specialized and largely single-vendor hardware ecosystem, and limited support in cloud-native stacks. By contrast, software-defined storage using NVMe/TCP runs on standard Ethernet and integrates easily with containerized environments.