TiDB

Terms related to simplyblock

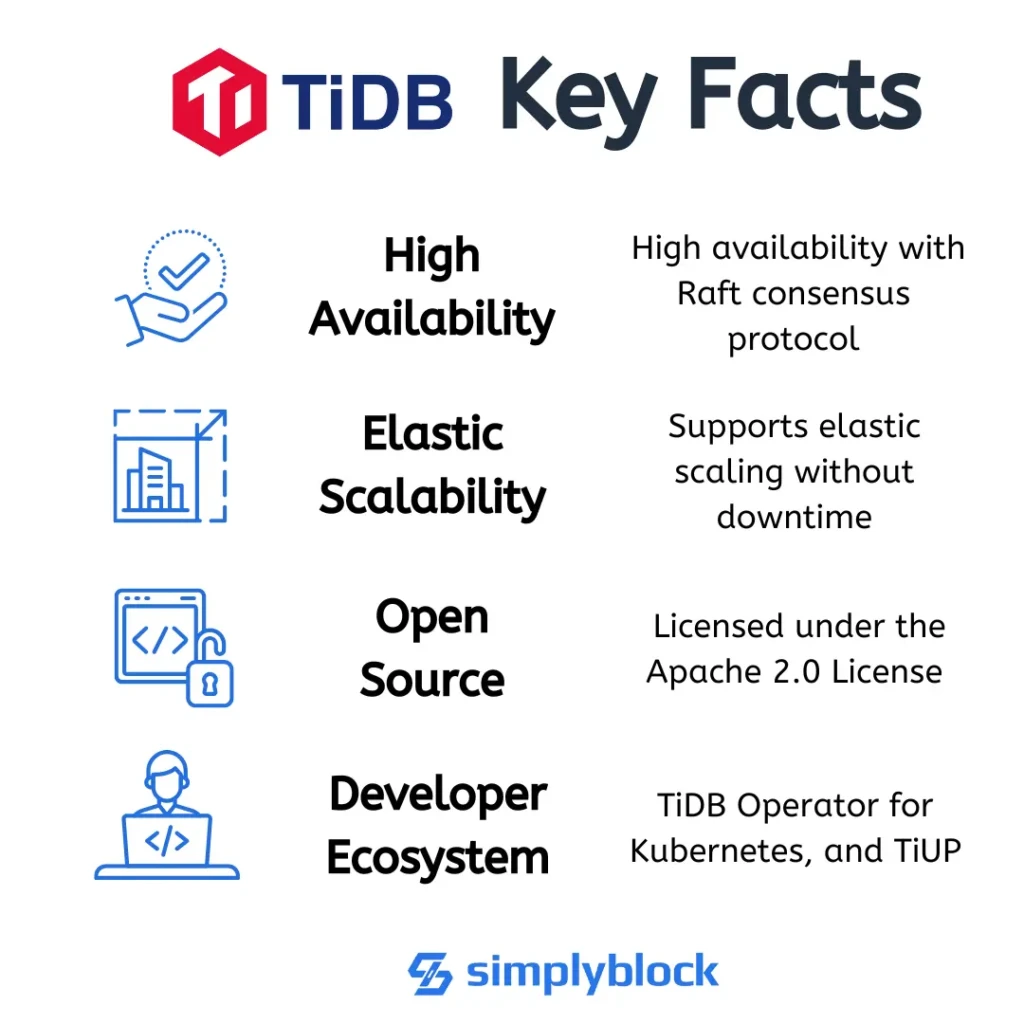

TiDB is an open-source, distributed SQL database developed by PingCAP. It combines the benefits of traditional RDBMSs with horizontal scalability and cloud-native resilience. Compatible with the MySQL protocol and ecosystem, TiDB is built for both OLTP and OLAP workloads, making it suitable for hybrid transactional and analytical processing (HTAP). It supports strong consistency, elastic scaling, and automatic failover, including in multi-region deployments.

How TiDB Works

TiDB uses a layered architecture consisting of:

- TiDB Server – Stateless SQL layer that processes MySQL-compatible queries

- TiKV – Distributed transactional key-value storage engine

- PD (Placement Driver) – Centralized metadata and scheduling manager

- TiFlash – Columnar analytical engine for HTAP workloads
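To make the split between the stateless SQL layer and TiKV more concrete, the sketch below shows, in simplified form, how one row and one unique-index entry become key-value pairs. It follows the key layout described in TiDB's documentation (`t{TableID}_r{RowID}` for row data, `t{TableID}_i{IndexID}{IndexValue}` for index entries) but uses readable strings instead of TiDB's actual binary, memcomparable encoding. The function names are hypothetical.

```python
# Simplified illustration of how TiDB maps SQL rows onto TiKV's ordered key space.
# Real TiDB encodes IDs and values as memcomparable bytes; strings are used here
# only to keep the layout readable.


def row_key(table_id: int, row_id: int) -> str:
    """Key holding a row's column data: t{table_id}_r{row_id}."""
    return f"t{table_id}_r{row_id}"


def index_key(table_id: int, index_id: int, value: str) -> str:
    """Key for a unique index entry: t{table_id}_i{index_id}{value}."""
    return f"t{table_id}_i{index_id}{value}"


def encode_row(table_id: int, row_id: int, columns: dict, unique_index: tuple) -> dict:
    """Return the KV pairs the SQL layer would write for one INSERT (sketch)."""
    index_id, column = unique_index
    return {
        # Row data lives under the row key.
        row_key(table_id, row_id): columns,
        # The unique index maps the indexed value back to the row ID.
        index_key(table_id, index_id, str(columns[column])): row_id,
    }


kv = encode_row(10, 1, {"id": 1, "email": "a@example.com"}, (1, "email"))
for key in sorted(kv):
    print(key, "->", kv[key])
```

Because every key of a table shares the `t{TableID}` prefix, a table's rows and index entries occupy contiguous ranges of TiKV's sorted key space, which is what lets TiKV split and distribute data by key range.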

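TiKV replicates data across multiple nodes using the Raft consensus protocol, and TiFlash keeps columnar replicas that receive the same data as non-voting Raft learners. Together with TiKV's distributed transaction model (based on Google's Percolator), this design provides ACID transactions, real-time replication, and fault tolerance across distributed infrastructure.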
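Raft commits a write once a majority of voting replicas have persisted it, so the replica count determines how many node failures a Raft group can survive. The arithmetic below is generic Raft math, not TiDB code; the three-replica case corresponds to PD's default `max-replicas` of 3. TiFlash learners do not vote and are not counted. The helper names are illustrative.

```python
def quorum_size(voters: int) -> int:
    """Smallest majority of a Raft group with `voters` voting replicas."""
    if voters < 1:
        raise ValueError("a Raft group needs at least one voter")
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can fail while a majority is still available."""
    return voters - quorum_size(voters)


for n in (1, 3, 4, 5):
    print(f"{n} replicas: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Note that four replicas tolerate no more failures than three, which is why odd replica counts are the usual choice.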

TiDB vs Traditional SQL Databases

TiDB provides horizontal scalability and native high availability without application-level sharding or external clustering tools. Here’s how it compares:

| Feature | TiDB | Traditional RDBMS (e.g., MySQL, PostgreSQL) |
|---|---|---|
| Scalability | Horizontal (add nodes) | Vertical (scale up a single server) |
| Availability | Built-in Raft replication with automatic failover | Replication and failover set up separately |
| OLTP + OLAP support | Native HTAP via TiFlash | Typically OLTP only |
| Cloud readiness | Kubernetes (TiDB Operator) and hybrid-cloud support | Mostly monolithic |
| Storage engine | TiKV (row data) + TiFlash (columnar) | InnoDB (MySQL), heap tables (PostgreSQL) |

Because TiDB decouples compute (TiDB servers) from storage (TiKV and TiFlash), each tier can be scaled independently, which gives cloud-native workloads dynamic elasticity.

TiDB and NVMe-Optimized Storage

TiDB’s performance hinges on its storage layer, particularly TiKV and TiFlash. Both engines are I/O-intensive and benefit significantly from high-throughput, low-latency block storage. Deploying TiDB on NVMe over TCP storage from simplyblock™ improves:

- Write throughput for TiKV’s Raft log and RocksDB write path

- Scan performance for TiFlash columnar reads

- Speed of snapshot and backup operations during scaling

- Raft replication latency across zones, since each commit waits for a majority of replicas to persist the log entry
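The effect of disk latency on Raft commits can be sketched with a simplified model: the leader persists an entry while replicating it, each follower adds one network round trip plus its own fsync, and the entry commits when the quorum-th fastest replica has it on disk. This ignores batching, pipelining, and apply time, and the microsecond figures are illustrative assumptions, not measurements.

```python
from __future__ import annotations


def commit_latency_us(leader_fsync_us: int, followers: list[tuple[int, int]]) -> int:
    """Time until a majority of voters (leader included) have persisted an entry.

    Simplified model: the leader fsyncs locally while it replicates; each
    follower contributes (network round trip + its own fsync).
    """
    finish_times = [leader_fsync_us] + [rtt + fsync for rtt, fsync in followers]
    quorum = len(finish_times) // 2 + 1
    # The entry commits once the quorum-th fastest replica has it on disk.
    return sorted(finish_times)[quorum - 1]


# Illustrative numbers: 500 us cross-zone round trip, fast vs. slow fsync.
fast = commit_latency_us(100, [(500, 100), (500, 100)])
slow = commit_latency_us(2000, [(500, 2000), (500, 2000)])
print(fast, slow)  # 600 2500
```

In this model one slow follower is masked by the majority, but slow disks on most replicas add their fsync time directly to every commit.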

Simplyblock’s software-defined storage (SDS) enables storage elasticity and efficient resource utilization across nodes, which helps maintain performance under load and during failover.

Running TiDB in Kubernetes

TiDB supports native Kubernetes deployment via TiDB Operator, simplifying scaling, upgrades, and resource isolation. However, persistent volumes for TiKV and TiFlash demand consistent IOPS, low latency, and multi-zone replication.
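As a minimal sketch of what such a deployment looks like, the snippet below builds a `TidbCluster` custom resource for TiDB Operator as a Python dict and prints it as JSON, which `kubectl apply -f -` accepts. The field layout follows TiDB Operator's published examples; the StorageClass name `simplyblock-nvme`, the version string, and the sizes are assumptions to replace with your own values, and field support should be checked against the operator version you run.

```python
import json

# Hypothetical StorageClass for NVMe-backed volumes; use the name your cluster defines.
STORAGE_CLASS = "simplyblock-nvme"


def tidb_cluster(name: str, version: str, tikv_replicas: int = 3) -> dict:
    """Build a minimal TidbCluster resource for TiDB Operator (sketch)."""
    return {
        "apiVersion": "pingcap.com/v1alpha1",
        "kind": "TidbCluster",
        "metadata": {"name": name},
        "spec": {
            "version": version,
            "pvReclaimPolicy": "Retain",  # keep volumes if the cluster is deleted
            "pd": {
                "baseImage": "pingcap/pd",
                "replicas": 3,
                "storageClassName": STORAGE_CLASS,
                "requests": {"storage": "10Gi"},
            },
            "tikv": {
                "baseImage": "pingcap/tikv",
                "replicas": tikv_replicas,
                "storageClassName": STORAGE_CLASS,
                "requests": {"storage": "100Gi"},
            },
            "tidb": {  # stateless SQL layer: no persistent volume needed
                "baseImage": "pingcap/tidb",
                "replicas": 2,
                "service": {"type": "ClusterIP"},
            },
            "tiflash": {
                "baseImage": "pingcap/tiflash",
                "replicas": 1,
                "storageClaims": [
                    {
                        "resources": {"requests": {"storage": "100Gi"}},
                        "storageClassName": STORAGE_CLASS,
                    }
                ],
            },
        },
    }


if __name__ == "__main__":
    # Usage: python tidb_cluster.py | kubectl apply -n tidb -f -
    print(json.dumps(tidb_cluster("basic", "v7.5.0"), indent=2))
```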

With simplyblock’s Kubernetes-native CSI driver, TiDB gains:

- Dynamic provisioning of high-performance NVMe volumes

- Instant snapshotting for backups or testing environments

- Multi-zone support for fault tolerance

- Encryption-at-rest and secure volume isolation
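The dynamic provisioning and snapshot features listed above map onto standard Kubernetes objects: a `StorageClass`, a `VolumeSnapshot`, and a PVC restored from that snapshot via `dataSource`. The sketch below builds them as dicts. The provisioner name `csi.simplyblock.io`, the VolumeSnapshotClass `simplyblock-snapshots`, and the TiKV PVC name `tikv-basic-tikv-0` are hypothetical placeholders, not confirmed simplyblock or TiDB Operator values. A snapshot of one TiKV volume covers only that store; cluster-wide consistent backups still need TiDB's own backup tooling (BR).

```python
import json

PROVISIONER = "csi.simplyblock.io"        # hypothetical CSI driver name
SNAPSHOT_CLASS = "simplyblock-snapshots"  # hypothetical VolumeSnapshotClass


def storage_class(name: str) -> dict:
    """StorageClass for dynamically provisioned volumes."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        "reclaimPolicy": "Retain",
        "allowVolumeExpansion": True,
        # Bind only after the pod is scheduled, so the volume lands in the pod's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def volume_snapshot(name: str, pvc: str) -> dict:
    """CSI snapshot of an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": SNAPSHOT_CLASS,
            "source": {"persistentVolumeClaimName": pvc},
        },
    }


def pvc_from_snapshot(name: str, snapshot: str, sc: str, size: str) -> dict:
    """New PVC pre-populated from a snapshot, e.g. for a test environment."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": sc,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "dataSource": {
                "apiGroup": "snapshot.storage.k8s.io",
                "kind": "VolumeSnapshot",
                "name": snapshot,
            },
        },
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            storage_class("simplyblock-nvme"),
            volume_snapshot("tikv-0-snap", "tikv-basic-tikv-0"),
            pvc_from_snapshot("tikv-0-test", "tikv-0-snap", "simplyblock-nvme", "100Gi"),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

In practice the restore PVC would be created only after the snapshot reports `status.readyToUse: true`.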

This helps keep database performance consistent across cloud, hybrid, and edge environments.

TiDB Storage Requirements

Key storage characteristics for a high-performing TiDB cluster include:

- Low latency writes: Required by TiKV’s LSM-tree and Raft logs

- High throughput: For multi-tenant or HTAP use cases

- Snapshot support: For backups and scale-out scenarios

- Availability zone awareness: To place replicas in separate zones so that losing one zone does not break Raft quorum

- Elastic growth: To support tenant scaling or workload spikes
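The zone-awareness requirement can be checked mechanically: a placement is safe against a single zone outage if, for every zone, the replicas outside it still form a majority. In TiDB, PD spreads replicas this way when location labels (e.g. `zone`) are configured; the function below is only an illustrative check, and its name and zone labels are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def survives_zone_loss(replica_zones: list[str]) -> bool:
    """True if losing any single zone still leaves a Raft majority of voters."""
    quorum = len(replica_zones) // 2 + 1
    replicas_per_zone = Counter(replica_zones)
    # For each zone, count the replicas that remain if that zone goes down.
    return all(len(replica_zones) - lost >= quorum for lost in replicas_per_zone.values())


print(survives_zone_loss(["az-a", "az-b", "az-c"]))                  # True
print(survives_zone_loss(["az-a", "az-a", "az-b"]))                  # False
print(survives_zone_loss(["az-a", "az-a", "az-b", "az-b", "az-c"]))  # True
```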

Simplyblock’s erasure coding provides durable, cost-efficient data protection, which is essential for stateful workloads like TiDB.
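The cost advantage of erasure coding comes from simple arithmetic: a k+m scheme stores k data chunks plus m parity chunks and survives the loss of any m chunks, so its raw-capacity overhead is (k+m)/k, versus n for n full copies. The 4+2 scheme below is a hypothetical example, not a documented simplyblock default.

```python
from fractions import Fraction


def ec_raw_capacity(usable_tb: int, data_chunks: int, parity_chunks: int) -> Fraction:
    """Raw capacity needed to store `usable_tb` with k+m erasure coding."""
    return usable_tb * Fraction(data_chunks + parity_chunks, data_chunks)


def replicated_raw_capacity(usable_tb: int, copies: int) -> int:
    """Raw capacity needed to store `usable_tb` as full copies."""
    return usable_tb * copies


# Hypothetical 4+2 scheme (tolerates 2 lost chunks) vs. 3 full copies (tolerates 2 lost copies).
print(ec_raw_capacity(10, 4, 2))       # 15
print(replicated_raw_capacity(10, 3))  # 30
```

Storage-level protection sits beneath TiKV, which still keeps its own Raft replicas, so the two overheads multiply: three TiKV replicas on 4+2 erasure-coded volumes need 3 × 1.5 = 4.5 times the logical data size in raw capacity.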

Common Use Cases for TiDB

TiDB suits high-concurrency workloads that require MySQL compatibility together with distributed scale. Key use cases include:

- E-commerce platforms: Real-time inventory, payment processing

- Financial services: Transactional systems with real-time reporting

- Gaming: Leaderboards, user stats, and in-game economies

- SaaS platforms: Multi-tenant apps with elastic demand

- IoT telemetry: Write-heavy ingestion with real-time analytics

Hybrid-cloud deployments using TiDB can benefit from simplyblock’s consistent volume performance across regions.

Simplyblock™ Enhancements for TiDB

Simplyblock’s SDS platform delivers multiple benefits for TiDB deployments:

- NVMe-over-TCP support for scalable IOPS

- Snapshot and clone features to accelerate CI/CD and DR

- Thin provisioning and tiering for efficient cost control

- QoS controls for multi-tenant environments

- Full Kubernetes storage lifecycle management
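Per-tenant QoS limits are commonly enforced with a token bucket: each I/O spends a token, tokens refill at the configured rate, and the bucket size caps bursts. The class below is a generic illustration of that technique, not simplyblock's implementation; its name and parameters are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Generic token-bucket limiter, e.g. for capping one tenant's IOPS (illustration only)."""

    def __init__(self, rate_per_s: float, burst: float):
        self.rate = rate_per_s  # sustained operations per second
        self.capacity = burst   # maximum burst size
        self.tokens = burst     # start with a full bucket
        self.last = 0.0         # timestamp of the previous call, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Admit an I/O at time `now` if enough tokens are available."""
        # Refill for the elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate_per_s=10, burst=2)
print([bucket.allow(0.0) for _ in range(3)])  # [True, True, False]
print(bucket.allow(0.5))                      # True (bucket refilled)
```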

Together, these enable resilient, scalable, and cost-effective TiDB clusters.

Questions and Answers

TiDB is an open-source, distributed SQL database that blends the best of MySQL compatibility with horizontal scalability. It’s ideal for hybrid transactional and analytical processing (HTAP), making it a strong choice for real-time analytics and large-scale OLTP systems.

Yes, TiDB has first-class Kubernetes support via the TiDB Operator. For consistent performance, it should be paired with NVMe-backed Kubernetes storage to handle high IOPS, rapid failover, and persistent volume demands at scale.

TiDB relies on fast storage for TiKV (its distributed key-value layer). NVMe over TCP offers the high throughput and low latency needed to support TiDB’s transactional consistency and real-time analytical workloads efficiently.

Yes, TiDB supports encryption at rest, including envelope encryption with external key management systems. For added security and regulatory compliance, use storage-level encryption at rest with per-volume key isolation.

TiDB is designed for horizontal scalability and can handle terabytes to petabytes of data without manual sharding. When combined with software-defined storage, TiDB can deliver consistent performance in multi-tenant and hybrid cloud setups.