Snowflake

Terms related to simplyblock

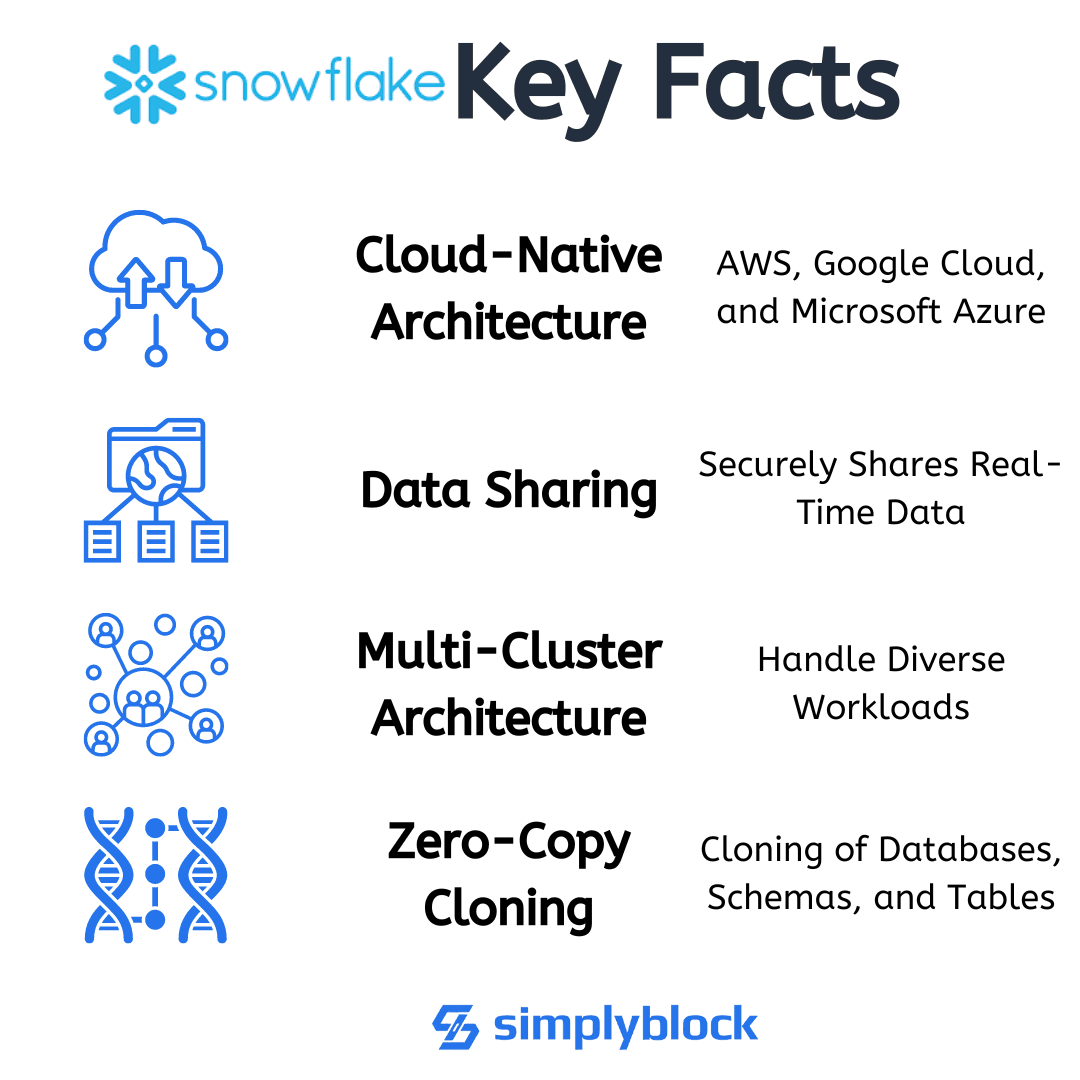

Snowflake is a cloud-native data platform designed for scalable analytics, data warehousing, and data sharing. Unlike traditional databases, Snowflake separates storage and compute, enabling independent scaling of each layer. Built on a multi-cluster shared data architecture, Snowflake supports structured and semi-structured data, including JSON, Avro, and Parquet. It’s offered as a fully managed SaaS solution across major clouds like AWS, Azure, and Google Cloud.

How Snowflake Works

Snowflake operates on a decoupled architecture consisting of three layers:

- Database Storage – Handles compressed, columnar storage of all structured and semi-structured data.

- Compute Layer – Virtual warehouses execute queries and load operations in parallel.

- Cloud Services – Coordinates infrastructure, metadata management, query parsing, authentication, and security.

By separating compute and storage, Snowflake allows workloads to scale elastically. Each virtual warehouse can be sized and paused independently, optimizing cost and performance for different users and applications.
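
To make the cost effect of pausing concrete, here is a rough credit estimate in Python. It assumes Snowflake's published model for standard warehouses: compute is billed per second of warehouse uptime, with a 60-second minimum each time a warehouse starts or resumes, and the credit rate doubles with each size step from X-Small (1 credit/hour). These rates are assumptions to verify against current pricing documentation. The function names are hypothetical helpers, not a Snowflake API.

```python
"""Rough credit estimate for a Snowflake virtual warehouse (illustrative sketch)."""
from typing import Iterable

# Assumed credits per hour for standard warehouses; each size step doubles the
# rate. Verify against Snowflake's current pricing docs before relying on it.
CREDITS_PER_HOUR = {"XSMALL": 1, "SMALL": 2, "MEDIUM": 4, "LARGE": 8, "XLARGE": 16}

# Assumed billing rule: per second, with a 60-second minimum each time the
# warehouse starts or resumes.
MIN_BILLED_SECONDS = 60


def credits_for_run(size: str, seconds: float) -> float:
    """Credits for one continuous run, from start/resume until suspend."""
    billed_seconds = max(seconds, MIN_BILLED_SECONDS)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600


def credits_for_runs(size: str, runs: Iterable[float]) -> float:
    """Total credits for several runs; suspended periods are not billed for compute."""
    return sum(credits_for_run(size, seconds) for seconds in runs)


# Three 10-minute bursts on a MEDIUM warehouse, suspended in between,
# versus keeping the same warehouse running for an 8-hour day.
bursty = credits_for_runs("MEDIUM", [600, 600, 600])
always_on = credits_for_run("MEDIUM", 8 * 3600)
print(f"bursty: {bursty:.2f} credits, always on: {always_on:.2f} credits")
# bursty: 2.00 credits, always on: 32.00 credits
```

In Snowflake itself, this pause behavior is configured with the `AUTO_SUSPEND` and `AUTO_RESUME` warehouse parameters rather than managed by hand.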

Snowflake vs Traditional Data Warehouses

Snowflake’s cloud-native model removes most infrastructure management and manual tuning. Here’s how it compares:

| Feature | Snowflake | Traditional Data Warehouse (e.g., Teradata, Oracle Exadata) |
|---|---|---|
| Deployment | Fully managed SaaS | On-premises or hybrid |
| Storage and Compute | Decoupled | Tightly integrated |
| Scalability | Automatic, per-workload scaling | Manual provisioning |
| Data Types | Structured + Semi-structured | Mostly structured |
| Maintenance | No management overhead | Requires DBA involvement |

Snowflake offers near-instant elasticity, concurrent workloads, and cross-cloud availability, which benefits dynamic analytics use cases.

Snowflake Storage Characteristics

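While Snowflake abstracts infrastructure from the user, it still relies on underlying cloud storage: table data persists in cloud object storage, and running virtual warehouses cache recently read data on local disk. Key storage traits include:

- Columnar compression: Enables faster analytics and lower storage cost

- Automatic micro-partitioning: Organizes table data into micro-partitions and records per-column metadata (such as minimum and maximum values) so queries can skip partitions that cannot match

- Immutable micro-partitions: Support data consistency and features such as Time Travel for querying historical data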

- Cloud object stores: Typically AWS S3, Azure Blob, or Google Cloud Storage
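
The pruning idea behind micro-partition metadata can be illustrated with a toy model: each partition stores the minimum and maximum of a column once, at write time, and a range query reads only partitions whose range can overlap the predicate. This is a simplified sketch in Python; `Partition`, `build_partition`, `prune`, and `scan` are hypothetical names, and Snowflake's real storage format is internal and not exposed this way.

```python
"""Toy model of metadata-based partition pruning (illustrative sketch)."""
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class Partition:
    """A hypothetical immutable partition: column values plus min/max metadata."""
    name: str
    values: Tuple[int, ...]
    min_value: int
    max_value: int


def build_partition(name: str, values: Sequence[int]) -> Partition:
    # Metadata is computed once, when the (non-empty) partition is written,
    # because the partition never changes afterwards.
    return Partition(name, tuple(values), min(values), max(values))


def prune(partitions: List[Partition], low: int, high: int) -> List[Partition]:
    """Keep only partitions whose [min, max] range overlaps [low, high]."""
    return [p for p in partitions if p.max_value >= low and p.min_value <= high]


def scan(partitions: List[Partition], low: int, high: int) -> Tuple[List[int], List[str]]:
    """Filter rows for low <= value <= high, reading only surviving partitions."""
    survivors = prune(partitions, low, high)
    rows = [v for p in survivors for v in p.values if low <= v <= high]
    return rows, [p.name for p in survivors]


parts = [
    build_partition("p1", [3, 7, 1, 9]),
    build_partition("p2", [15, 12, 18]),
    build_partition("p3", [25, 21, 29]),
]
rows, read = scan(parts, 10, 19)
print(rows, read)  # [15, 12, 18] ['p2']
```

Only `p2` is read for the predicate `10 <= value <= 19`; the other two partitions are skipped using metadata alone. Pruning works best when data that is queried together is physically clustered, which is why ingestion order and clustering matter.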

Even so, organizations that integrate Snowflake with high-speed ingestion systems or downstream transactional stores can benefit from NVMe-optimized staging layers around it.

Using Snowflake with NVMe and Simplyblock™

Although Snowflake runs on managed cloud storage, many enterprises operate real-time ingestion, caching, and preprocessing layers before data reaches Snowflake. These layers run on infrastructure the organization manages, so they can benefit from low-latency NVMe over TCP storage.

For example:

- Log analytics pipelines: Use Kubernetes and NVMe-backed caches to preprocess event data before loading it into Snowflake

- ETL staging layers: Use high-throughput software-defined storage (SDS) to buffer large-scale ingestion

- Operational dashboards: Query operational stores on fast storage for real-time views, and use Snowflake for historical joins
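
A staging layer of this kind often just batches and normalizes events into compressed files on fast local storage before a bulk load. The Python sketch below writes gzipped newline-delimited JSON files, `batch_size` events per file. It assumes `staging_dir` sits on an NVMe-backed volume (for example a Kubernetes persistent volume); the function name, file naming scheme, and the lowercase-keys preprocessing step are hypothetical.

```python
"""Minimal staging writer: batch events into gzipped NDJSON files (sketch)."""
import gzip
import json
import os
from typing import Iterable, List


def stage_events(events: Iterable[dict], staging_dir: str, batch_size: int = 1000) -> List[str]:
    """Write events as gzipped newline-delimited JSON and return the file paths.

    `staging_dir` is assumed to live on a fast local volume.
    """
    os.makedirs(staging_dir, exist_ok=True)
    paths: List[str] = []
    batch: List[dict] = []

    def flush() -> None:
        # One compressed file per batch; names are sequential and zero-padded.
        path = os.path.join(staging_dir, f"events_{len(paths):05d}.json.gz")
        with gzip.open(path, "wt", encoding="utf-8") as fh:
            for event in batch:
                fh.write(json.dumps(event) + "\n")
        paths.append(path)
        batch.clear()

    for event in events:
        # Hypothetical preprocessing: lowercase field names, drop untyped events.
        normalized = {key.lower(): value for key, value in event.items()}
        if "type" not in normalized:
            continue
        batch.append(normalized)
        if len(batch) >= batch_size:
            flush()
    if batch:
        flush()
    return paths
```

Once staged, such files would typically be uploaded to a Snowflake stage with the `PUT` command and loaded with `COPY INTO` using a JSON file format; the stage and table names depend on your account setup.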

Simplyblock provides high-speed, erasure-coded, distributed NVMe storage ideal for these auxiliary analytics components.
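
The benefit of erasure coding is easiest to see as capacity arithmetic. With a k+m code (k data chunks, m parity chunks, assuming an MDS code such as Reed-Solomon and chunks placed on separate nodes), any m chunks can be lost, and raw capacity is (k+m)/k times the usable capacity. The 4+2 layout and 100 TB figure below are hypothetical; this page does not state which schemes simplyblock uses.

```python
"""Raw capacity needed for N-way replication vs. a k+m erasure code (sketch)."""


def replication_raw_tb(usable_tb: float, copies: int = 3) -> float:
    """Raw capacity for `copies`-way replication; survives copies - 1 failures."""
    return usable_tb * copies


def erasure_coded_raw_tb(usable_tb: float, data_chunks: int, parity_chunks: int) -> float:
    """Raw capacity for a k+m MDS code; survives loss of any m chunks."""
    return usable_tb * (data_chunks + parity_chunks) / data_chunks


usable = 100.0  # TB of data to protect (hypothetical figure)
print(replication_raw_tb(usable))          # 300.0, tolerates 2 failures
print(erasure_coded_raw_tb(usable, 4, 2))  # 150.0, tolerates 2 failures
```

Both layouts survive two failures, but 4+2 needs half the raw capacity. The trade-off is extra work: parity must be computed on writes, and rebuilding a lost chunk requires reading from several other nodes.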

Snowflake in Hybrid Data Architectures

While Snowflake itself is cloud-native, many organizations operate hybrid analytics environments. Integrating Snowflake with on-premises or edge infrastructure requires synchronization tools, replication, or ETL staging systems, which benefit from:

- Kubernetes-native persistent volumes

- Low-latency block storage for data caching

- Snapshotting and cloning for parallel pipelines

- Durable, high-availability storage for data sync retries
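
Snapshots and clones let several pipeline runs start from the same data without copying it. The Python toy model below shows the principle: a snapshot freezes the current layer of block writes, and each clone writes into its own new layer while reading unchanged blocks from the shared frozen layers. This is a layered (redirect-on-write) variant rather than literal copy-before-overwrite; both share unchanged blocks. The class names and tiny block size are hypothetical and do not describe simplyblock's on-disk format.

```python
"""Toy snapshot/clone model for a block volume (illustrative sketch)."""
from typing import Dict, Optional

ZERO_BLOCK = b"\x00" * 4  # hypothetical 4-byte blocks to keep the example small


class Layer:
    """Blocks written while this layer was active, plus a link to older data."""

    def __init__(self, parent: Optional["Layer"] = None) -> None:
        self.parent = parent
        self.blocks: Dict[int, bytes] = {}

    def lookup(self, index: int) -> bytes:
        layer: Optional[Layer] = self
        while layer is not None:
            if index in layer.blocks:
                return layer.blocks[index]
            layer = layer.parent
        return ZERO_BLOCK  # blocks never written read as zeros


class Snapshot:
    """Read-only view of a frozen chain of layers."""

    def __init__(self, layer: Layer) -> None:
        self._layer = layer

    def read(self, index: int) -> bytes:
        return self._layer.lookup(index)

    def clone(self) -> "Volume":
        # A clone gets its own writable layer on top of the shared frozen ones.
        return Volume(base=self._layer)


class Volume:
    def __init__(self, base: Optional[Layer] = None) -> None:
        self._top = Layer(parent=base)

    def read(self, index: int) -> bytes:
        return self._top.lookup(index)

    def write(self, index: int, data: bytes) -> None:
        self._top.blocks[index] = data

    def snapshot(self) -> Snapshot:
        # Freeze the current layer; later writes go into a fresh layer.
        frozen = self._top
        self._top = Layer(parent=frozen)
        return Snapshot(frozen)
```

For example, after `snap = vol.snapshot()`, two clones from `snap.clone()` can run different transformation jobs in parallel, and neither sees the other's writes or any later writes to `vol`.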

Using simplyblock, enterprises can build hybrid data lakes and ingestion pipelines that avoid storage bottlenecks in their Snowflake integrations.

Common Use Cases of Snowflake

Snowflake supports a wide variety of data-driven use cases, including:

- Enterprise data warehousing

- Real-time and batch analytics

- Data lake querying

- Business intelligence dashboards

- Secure data sharing across departments or partners

- Machine learning model feature generation

Its flexible compute scaling and ability to query semi-structured data make it suitable for modern analytics stacks alongside open-source tools, cloud-native applications, and Kubernetes deployments.

Simplyblock™ Features Supporting Snowflake Workloads

The infrastructure surrounding a Snowflake-centric architecture benefits from:

- NVMe-over-TCP staging volumes for ETL acceleration

- Copy-on-write snapshots for safe, parallel processing

- Multi-tenant QoS and volume isolation for dev/test environments

- Erasure coding to cut the storage overhead of 3x replication for transient workloads

- Kubernetes support to manage ingestion and transformation containers
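
Per-tenant QoS is commonly enforced with a token bucket: each volume or tenant earns tokens at a fixed rate up to a burst cap, and each I/O spends one. The Python sketch below shows that generic technique; it is not simplyblock's QoS implementation, and the tenant names and limits are hypothetical.

```python
"""Token-bucket IOPS limiter, a common technique for per-volume QoS (sketch)."""
import time
from typing import Callable


class TokenBucket:
    """Allow on average `rate` operations per second, with bursts up to `burst`."""

    def __init__(self, rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self._clock = clock
        self._tokens = burst  # start full so an idle tenant can burst immediately
        self._last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, capped at the burst size.
        self._tokens = min(self.burst, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= cost:
            self._tokens -= cost
            return True
        return False  # caller should queue or delay this I/O


# Hypothetical limits: dev/test volumes are capped so they cannot starve
# production ingestion volumes that share the same storage cluster.
limits = {
    "prod-ingest": TokenBucket(rate=50_000, burst=10_000),
    "dev-test": TokenBucket(rate=5_000, burst=1_000),
}
```

Injecting the clock keeps the limiter deterministic in tests; production code would use the default monotonic clock.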

Visit our performance report to evaluate latency improvements for ingestion-heavy stacks.

Questions and Answers

What is Snowflake?

Snowflake is a cloud-native data platform designed for scalable analytics, with separate compute and storage layers. It supports semi-structured data, automatic scaling, and concurrency handling, making it well suited to BI, ELT pipelines, and multi-team analytics workloads.

Can Snowflake be self-hosted or deployed on Kubernetes?

No, Snowflake is a fully managed SaaS platform and is not available for self-hosting or Kubernetes deployment. For teams needing more control or private infrastructure, software-defined storage combined with open-source data platforms is a flexible alternative.

Where does Snowflake store its data?

Snowflake stores data in cloud object storage (e.g., AWS S3, Azure Blob Storage, Google Cloud Storage). For similar analytics use cases on self-managed platforms, NVMe over TCP block storage can offer lower latency than object storage, which can speed up both queries and data loads.

Does Snowflake encrypt data?

Yes, Snowflake uses always-on encryption at rest and in transit by default. For comparable on-prem or hybrid architectures, ensure your storage stack supports encryption at rest to meet compliance requirements like GDPR, HIPAA, and SOC 2.

Does Snowflake scale elastically?

Yes, Snowflake scales elastically by provisioning virtual warehouses independently. For similar scalability in private or multi-cloud environments, pair distributed query engines with high-performance NVMe storage to support parallel processing at scale.