PCI Express

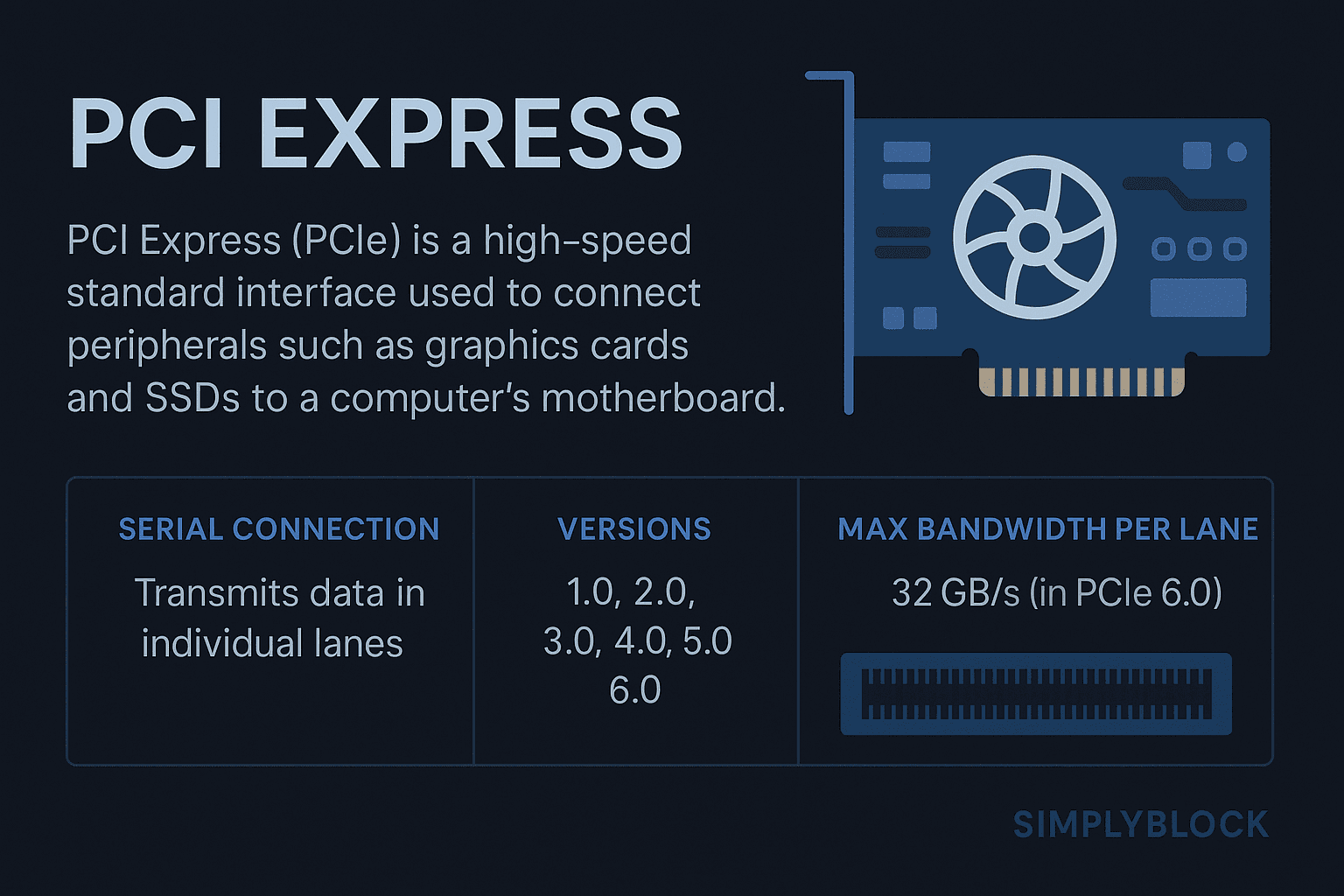

PCI Express (PCIe) is a high-speed serial computer expansion bus standard designed to replace older standards such as PCI, PCI-X, and AGP. It serves as the primary interface for connecting high-performance components—like graphics cards, network interface cards (NICs), storage controllers, and SSDs—to a computer’s motherboard.

PCIe delivers scalable bandwidth through a lane-based architecture, where each “lane” consists of two differential signal pairs: one for transmitting and one for receiving. Links are point-to-point and full-duplex, so each device has dedicated bandwidth in both directions instead of sharing a parallel bus, which enables much higher transfer rates than previous bus architectures.

In enterprise infrastructure, PCIe is critical for low-latency, high-throughput communication between CPUs and fast storage technologies, especially NVMe SSDs.

How PCIe Works

PCI Express uses a layered protocol stack with three main layers:

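- Transaction Layer: Builds and parses Transaction Layer Packets (TLPs) for reads, writes, and completions, and manages flow control.

- Data Link Layer: Ensures reliable transmission by adding a sequence number and a link CRC to each packet and retransmitting packets that fail the check.

- Physical Layer: Encodes and serializes the data (8b/10b in Gen1 and Gen2, 128b/130b in Gen3 through Gen5) and transmits the bits across the lanes.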
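The layering above can be illustrated with a small, heavily simplified sketch. The names `TLP`, `data_link_wrap`, `data_link_check`, and `stripe_across_lanes` are hypothetical, the 12-byte header is a placeholder, and `zlib.crc32` only stands in for the link CRC (it is not the algorithm the specification defines). The point is the nesting of responsibilities, not wire-accurate framing.

```python
import zlib
from dataclasses import dataclass
from typing import List


@dataclass
class TLP:
    """Simplified transaction-layer packet: a header plus optional payload."""
    header: bytes
    payload: bytes = b""

    def to_bytes(self) -> bytes:
        return self.header + self.payload


def data_link_wrap(tlp: TLP, seq: int) -> bytes:
    """Data link layer: prefix a 12-bit sequence number, append a 32-bit checksum."""
    seq_field = (seq & 0x0FFF).to_bytes(2, "big")
    body = seq_field + tlp.to_bytes()
    checksum = zlib.crc32(body).to_bytes(4, "big")  # stand-in for the real link CRC
    return body + checksum


def data_link_check(frame: bytes) -> bool:
    """Receiver side: recompute the checksum; a mismatch would trigger a retransmit."""
    body, checksum = frame[:-4], frame[-4:]
    return zlib.crc32(body).to_bytes(4, "big") == checksum


def stripe_across_lanes(frame: bytes, lanes: int) -> List[bytes]:
    """Physical layer (simplified): distribute bytes round-robin across the lanes."""
    return [frame[i::lanes] for i in range(lanes)]


if __name__ == "__main__":
    tlp = TLP(header=b"\x00" * 12, payload=b"data")   # placeholder header bytes
    frame = data_link_wrap(tlp, seq=5)
    print("frame ok:", data_link_check(frame))
    for lane, chunk in enumerate(stripe_across_lanes(frame, lanes=4)):
        print(f"lane {lane}: {chunk.hex()}")
```

Real links add encoding, scrambling, and framing tokens at the physical layer, all of which this sketch leaves out.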

PCIe slots are available in configurations like x1, x4, x8, and x16, referring to the number of lanes. More lanes mean higher bandwidth. Approximate usable bandwidth per direction:

- PCIe Gen3: ~1 GB/s per lane (x4 = 4 GB/s)

- PCIe Gen4: ~2 GB/s per lane (x4 = 8 GB/s)

- PCIe Gen5: ~4 GB/s per lane (x4 = 16 GB/s)

- PCIe Gen6 (specification finalized in 2022): ~8 GB/s per lane (x4 = 32 GB/s)
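The rounded per-lane figures above follow from each generation's signaling rate and line encoding. The sketch below reproduces the arithmetic for Gen1 through Gen5; the function names are illustrative, and Gen6 is left out because its PAM4 signaling and FLIT-based encoding do not reduce to a single encoding ratio. The results are raw link rates per direction, before packet and protocol overhead.

```python
# Per-lane signaling rate (GT/s) and line-encoding efficiency per generation.
# Gen1 and Gen2 use 8b/10b encoding; Gen3 through Gen5 use 128b/130b.
GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def lane_bandwidth_gb_s(gen: int) -> float:
    """Raw bandwidth of one lane in one direction, in GB/s."""
    rate_gt_s, efficiency = GENERATIONS[gen]
    return rate_gt_s * efficiency / 8  # 8 bits per byte


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Raw bandwidth of a whole link (for example x4) in one direction."""
    return lane_bandwidth_gb_s(gen) * lanes


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"Gen{gen}: {lane_bandwidth_gb_s(gen):.3f} GB/s per lane, "
              f"x4 = {link_bandwidth_gb_s(gen, 4):.2f} GB/s")
```

A Gen3 lane therefore carries slightly less than 1 GB/s, which is why the list uses approximate values.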

PCIe generations are backward and forward compatible: a device and a slot of different generations generally work together, with the link negotiating the highest speed and width that both ends support.
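On Linux, the negotiated and maximum link parameters of a PCIe device are exposed as sysfs attributes (`current_link_speed`, `max_link_speed`, `current_link_width`, `max_link_width`), which makes a link running below its capability easy to spot. The following is a minimal sketch under that assumption; `link_status` is a hypothetical helper name, and devices without these attributes, or reporting an unknown speed, are skipped.

```python
from pathlib import Path
from typing import Optional

ATTRS = ("current_link_speed", "max_link_speed",
         "current_link_width", "max_link_width")


def _read_attr(dev_dir: Path, name: str) -> Optional[str]:
    try:
        return (dev_dir / name).read_text().strip()
    except OSError:
        return None


def link_status(dev_dir: Path) -> Optional[dict]:
    """Return current vs. maximum link parameters, or None if unavailable."""
    raw = {name: _read_attr(dev_dir, name) for name in ATTRS}
    if any(value is None for value in raw.values()):
        return None
    try:
        # Speed looks like "8.0 GT/s PCIe"; take the leading number.
        cur_speed = float(raw["current_link_speed"].split()[0])
        max_speed = float(raw["max_link_speed"].split()[0])
        cur_width = int(raw["current_link_width"])
        max_width = int(raw["max_link_width"])
    except (ValueError, IndexError):
        return None  # e.g. the kernel reported "Unknown"
    return {
        "current_speed_gt_s": cur_speed,
        "max_speed_gt_s": max_speed,
        "current_width": cur_width,
        "max_width": max_width,
        "downgraded": cur_speed < max_speed or cur_width < max_width,
    }


if __name__ == "__main__":
    root = Path("/sys/bus/pci/devices")
    if root.is_dir():
        for dev in sorted(root.iterdir()):
            status = link_status(dev)
            if status and status["downgraded"]:
                print(dev.name, status)
```

A Gen4 SSD in a Gen3 slot would show up here with a current speed of 8 GT/s against a maximum of 16 GT/s.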

Benefits of PCIe

PCIe offers several benefits that make it indispensable for both client and enterprise systems:

- High Throughput: Supports data-intensive applications like AI/ML, video rendering, and high-speed storage.

- Low Latency: Enables real-time responsiveness in mission-critical systems.

- Scalability: Multiple lanes and generations allow for customizable performance based on hardware requirements.

- Power Efficiency: PCIe 4.0 and newer versions are optimized for performance-per-watt.

- Direct CPU Access: Devices connected via PCIe can communicate with the CPU and memory with minimal overhead.

- Multipurpose Interface: Supports a wide range of peripherals, including NVMe SSDs, GPUs, FPGAs, and DPUs.

In a software-defined storage context, PCIe is the foundation for NVMe architecture, which leverages direct PCIe connectivity to eliminate bottlenecks seen in traditional SATA or SAS-based systems.

PCIe vs Other Interfaces

Here is a comparison of PCIe with common alternatives in storage and I/O interfaces:

| Interface | Max Bandwidth | Latency | Typical Use Case |
|---|---|---|---|
| PCIe Gen4 (x4) | ~8 GB/s | ~1–10 µs | NVMe SSDs, GPUs, DPUs |
| SATA III | ~600 MB/s | ~100–500 µs | HDDs, budget SSDs |
| SAS-3 (12 Gbps) | ~1,200 MB/s | ~50–150 µs | Enterprise HDDs/SSDs |
| USB 3.2 Gen2 | ~1.2 GB/s | ~50–300 µs | External drives, peripherals |
| Ethernet (10GbE) | ~1.25 GB/s | ~100 µs–1 ms | Network-attached storage, clusters |
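To put the bandwidth column in perspective, the sketch below estimates the best-case time to move a dataset over each interface. It uses the peak figures from the table as given, with decimal units (1 GB = 1,000 MB); `transfer_seconds` is an illustrative helper, and real transfers are slower because of protocol overhead and device limits.

```python
# Peak bandwidth in MB/s, taken from the comparison table.
PEAK_MB_PER_S = {
    "PCIe Gen4 x4": 8000,
    "SATA III": 600,
    "SAS-3 (12 Gbps)": 1200,
    "USB 3.2 Gen2": 1200,
    "Ethernet (10GbE)": 1250,
}


def transfer_seconds(size_gb: float, interface: str) -> float:
    """Best-case time to move size_gb gigabytes at the interface's peak rate."""
    return size_gb * 1000 / PEAK_MB_PER_S[interface]


if __name__ == "__main__":
    for name in PEAK_MB_PER_S:
        print(f"{name:<18} {transfer_seconds(100, name):7.1f} s for 100 GB")
```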

Use Cases for PCIe

PCIe is central to modern infrastructure, powering:

- NVMe SSD Storage: PCIe is the transport that NVMe runs over, providing ultra-low latency and high IOPS.

- High-Performance Computing (HPC): GPUs, FPGAs, and other accelerators interface via PCIe.

- SmartNICs and DPUs: Used in cloud networking for offloading infrastructure tasks.

- Database Acceleration: NVMe-over-PCIe drives reduce transaction time in PostgreSQL, Oracle, and NoSQL workloads.

- Software-Defined Storage (SDS): PCIe-based NVMe pools form the performance tier in platforms like simplyblock.

PCIe in Simplyblock™

At the core of simplyblock’s high-performance storage platform is a PCIe-enabled NVMe architecture. Unlike traditional storage relying on SAS or SATA, simplyblock aggregates PCIe-attached NVMe devices into a unified, software-defined storage fabric.

This enables:

- Sub-millisecond Latency: Ideal for Kubernetes persistent volumes, AI/ML workloads, and transactional databases.

- Dynamic Scalability: NVMe devices can be scaled horizontally, all managed via a centralized SDS control plane.

- Hybrid Deployments: PCIe-based storage can coexist with SATA for tiered storage, all abstracted through advanced erasure coding.

- Cloud and Edge Readiness: PCIe-based NVMe nodes can be deployed in hybrid, multi-cloud, and air-gapped edge environments with full workload portability.

Related Technologies

Teams often review these glossary pages alongside PCI Express (PCIe) when they plan host-to-device topology and offload paths that affect storage and networking latency.

- PCIe-Based DPU

- Intel E2200 IPU

- vSwitch / OVS Offload on DPU

- Storage Virtualization on DPU

Questions and Answers

PCI Express (PCIe) is a high-speed interface standard used to connect components like GPUs, SSDs, and network cards directly to the CPU. It’s essential for modern NVMe storage, offering ultra-low latency and massive bandwidth for data-intensive applications.

PCIe delivers significantly higher throughput and lower latency than both SATA and SAS. Combined with NVMe over TCP, PCIe-attached drives can also be accessed over standard Ethernet networks without specialized hardware.

PCIe-attached NVMe drives can back Kubernetes-native storage via CSI drivers. This provides high-speed persistent volumes for stateful workloads like databases, AI/ML pipelines, and real-time analytics.

PCIe itself doesn’t handle encryption, but it supports hardware like self-encrypting NVMe drives. For secure storage in production, encryption at rest should be applied at the volume or platform level to protect data.

PCIe is used in high-performance environments like SSD arrays, GPU-accelerated workloads, and software-defined storage platforms. It’s critical in systems that demand low latency and high IOPS, such as cloud-native databases and real-time analytics.