Compression in Block Storage

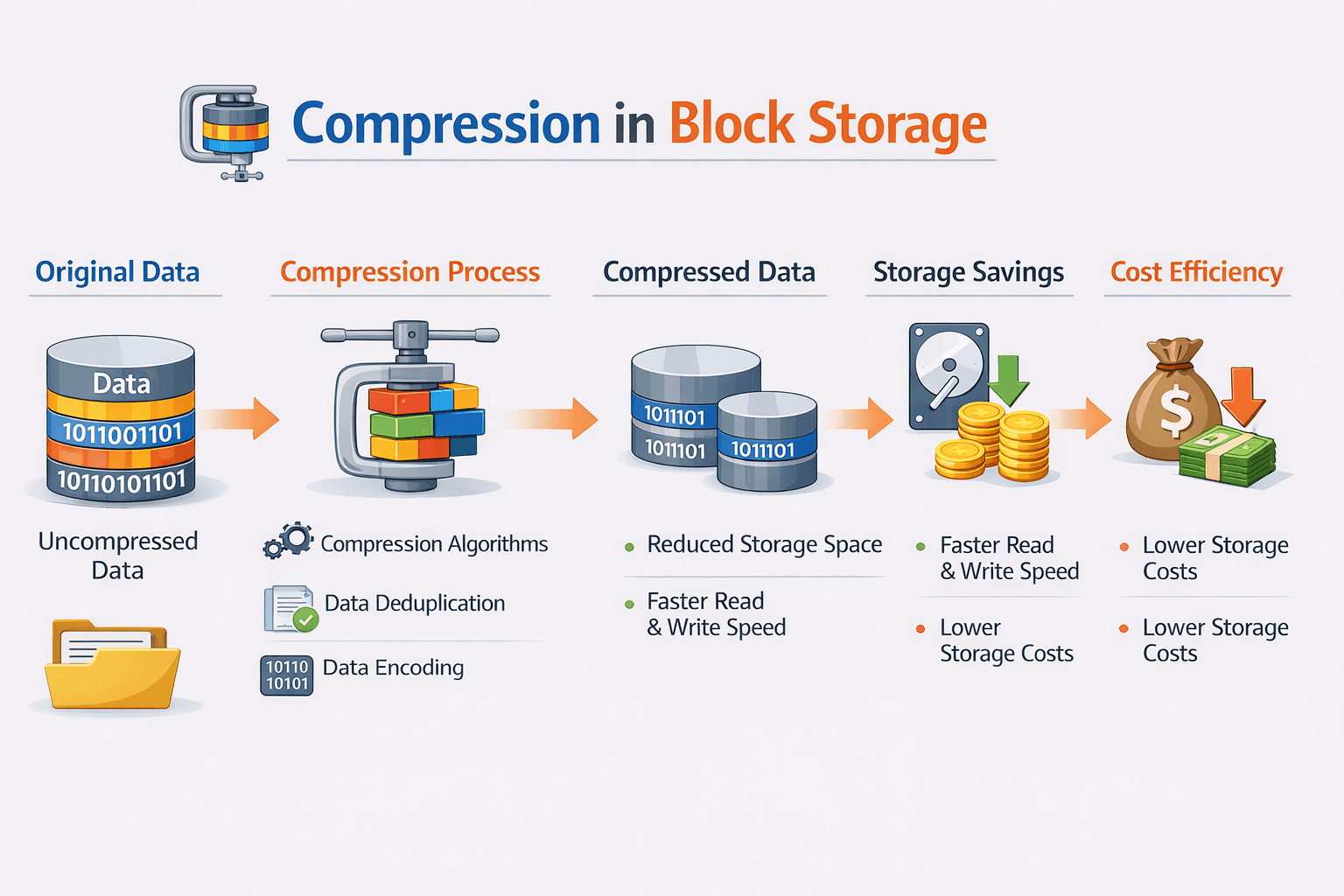

Compression in Block Storage reduces the physical bytes written to storage by encoding data in a smaller form before the platform places it on media. Your applications still read and write the same logical blocks, but the storage layer stores fewer bytes and often moves less data across the network.
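
The sketch below makes the logical-versus-physical distinction concrete. It uses Python's standard zlib module as a stand-in codec. The 4 KiB block size and the sample data are assumptions for illustration. Real platforms choose their own codecs and on-media layouts.

```python
import os
import zlib

BLOCK_SIZE = 4096  # assumed logical block size (4 KiB)


def physical_size(block: bytes, level: int = 1) -> int:
    """Bytes the block would occupy on media after zlib compression."""
    return len(zlib.compress(block, level))


# Same logical size, very different physical footprints.
log_line = b'{"level":"info","msg":"request served","status":200}\n'
text_block = (log_line * (BLOCK_SIZE // len(log_line) + 1))[:BLOCK_SIZE]
random_block = os.urandom(BLOCK_SIZE)  # stand-in for encrypted data

for name, block in (("text", text_block), ("random", random_block)):
    phys = physical_size(block)
    print(f"{name:>6}: logical={len(block)} B physical={phys} B ratio={len(block) / phys:.2f}")
```

In both cases the application reads and writes 4096 bytes, and only the physical footprint differs. The random block actually grows slightly because of zlib's framing overhead.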

Compression shifts the cost curve and the performance curve at the same time. You can shrink capacity and replication traffic, but you must manage CPU, memory bandwidth, and queue depth to keep latency steady. That trade-off matters most in Software-defined Block Storage because the software data path already handles placement, resiliency, and QoS.

Most teams evaluate compression with three questions. How much space will we save for this dataset? What happens to p95 and p99 latency under load? Which node pays the CPU bill: the storage node, the compute node, or an offload device?

Optimizing Compression in Block Storage with Practical Platform Controls

Inline compression delivers immediate data reduction, so finance teams see savings without waiting for a rewrite job. Strong implementations also skip low-yield blocks instead of compressing everything. That behavior protects CPU cycles and lowers the risk of tail latency spikes.
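
Here is a minimal sketch of inline compression that skips low-yield blocks. It assumes zlib as the codec and a hypothetical 12.5% minimum-savings threshold. The prefix-sample pre-check is one common way to avoid a full compression pass on data that will not shrink. Because a prefix can misrepresent the rest of the block, the sketch re-checks the full result before keeping it.

```python
import os
import zlib
from dataclasses import dataclass

MIN_SAVINGS = 0.125   # hypothetical: keep the compressed form only if it saves >= 12.5%
SAMPLE_BYTES = 512    # hypothetical size of the cheap pre-check sample


@dataclass
class StoredBlock:
    compressed: bool
    payload: bytes


def worth_it(original_len: int, compressed_len: int) -> bool:
    return compressed_len <= original_len * (1 - MIN_SAVINGS)


def write_block(block: bytes, level: int = 1) -> StoredBlock:
    """Inline compression that skips low-yield blocks."""
    sample = block[:SAMPLE_BYTES]
    # Cheap pre-check on a prefix sample: if it does not shrink, skip the full pass.
    if not worth_it(len(sample), len(zlib.compress(sample, level))):
        return StoredBlock(compressed=False, payload=block)
    candidate = zlib.compress(block, level)
    # Final check on the whole block, since the sample can be unrepresentative.
    if worth_it(len(block), len(candidate)):
        return StoredBlock(compressed=True, payload=candidate)
    return StoredBlock(compressed=False, payload=block)


def read_block(stored: StoredBlock) -> bytes:
    """Return the original logical block; raw blocks skip decompression entirely."""
    return zlib.decompress(stored.payload) if stored.compressed else stored.payload


if __name__ == "__main__":
    log_block = (b"GET /api/v1/items 200\n" * 200)[:4096]
    encrypted_like = os.urandom(4096)  # stand-in for encrypted or already-compressed data
    for name, blk in (("log", log_block), ("encrypted-like", encrypted_like)):
        s = write_block(blk)
        assert read_block(s) == blk
        print(name, "compressed" if s.compressed else "stored raw", len(s.payload))
```

Blocks stored raw also skip decompression on every later read, which helps read latency as well as write-side CPU.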

Policy-based control reduces drift across clusters. A storage class in Kubernetes Storage can map to a compression level, a minimum target ratio, and a QoS profile, so platform owners can enforce standards without asking each app team to tune codecs.
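
The sketch below expresses that policy mapping as a platform-owned lookup table. The StorageClass names, codec names, levels, ratios, and IOPS ceilings are all hypothetical. They do not reflect simplyblock's actual StorageClass parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CompressionPolicy:
    codec: str          # illustrative codec name; "none" disables compression
    level: int          # codec-specific effort level
    min_ratio: float    # target ratio; volumes below it fall back to storing raw
    max_iops: int       # QoS ceiling applied to every volume in the class


# Hypothetical StorageClass names and values, owned by the platform team.
POLICIES: dict[str, CompressionPolicy] = {
    "latency-first": CompressionPolicy("none", 0, 1.0, 200_000),
    "general":       CompressionPolicy("lz4", 1, 1.2, 50_000),
    "capacity":      CompressionPolicy("zstd", 9, 1.5, 10_000),
}
DEFAULT_CLASS = "general"


def policy_for(storage_class: str) -> CompressionPolicy:
    """Resolve a volume's policy from its StorageClass name; unknown names get the default."""
    return POLICIES.get(storage_class, POLICIES[DEFAULT_CLASS])
```

App teams only pick a class name on their PersistentVolumeClaim. The table remains the single source of truth, so every cluster that ships the same table enforces the same behavior.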

For reference architectures that prioritize efficiency, many teams pair compression with zero-copy I/O and user-space data paths. SPDK-style design reduces CPU overhead for I/O handling, which helps offset the extra cycles compression needs.

Compression in Block Storage in Kubernetes Storage

Kubernetes Storage adds noisy neighbors, bursty jobs, and frequent volume lifecycle events. Compression works best when teams treat it as a platform capability rather than an app feature. That approach keeps behavior consistent across namespaces and prevents “one team per codec” overhead.

When you run stateful workloads on persistent volumes, align compression with what the workload actually writes. Log streams, JSON, and text-heavy telemetry often compress well. Already-compressed media and encrypted blobs often do not. The platform should detect that difference quickly and stop wasting cycles.
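
One cheap way to tell these data types apart is an order-0 Shannon entropy estimate over the byte histogram. Encrypted and already-compressed data sits close to 8 bits per byte, while logs and JSON sit well below that. This is a sketch only. The 7.5 bits-per-byte cutoff is an assumed value, and the estimate misses long-range repetition that an LZ-style codec could still exploit.

```python
import math
import os
from collections import Counter


def bits_per_byte(data: bytes) -> float:
    """Order-0 Shannon entropy of the byte histogram: 0.0 (constant) to 8.0 (uniform)."""
    if not data:
        return 0.0
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())


def looks_compressible(data: bytes, threshold: float = 7.5) -> bool:
    """Heuristic: data near 8 bits/byte (encrypted, already compressed) rarely shrinks."""
    return bits_per_byte(data) < threshold


if __name__ == "__main__":
    telemetry = b'{"user":"a","event":"login"}\n' * 150
    print(f"telemetry: {bits_per_byte(telemetry):.2f} bits/byte")
    print(f"random:    {bits_per_byte(os.urandom(4096)):.2f} bits/byte")
```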

Operationally, focus on predictability. Define targets for p95 and p99 latency, then validate them during scale events like node drains and rebuilds. Link compression to QoS so one tenant’s batch compaction job does not destabilize another tenant’s transaction tier.
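
Here is a minimal sketch of that SLO check. It uses the nearest-rank percentile method on latency samples collected during a window such as a node drain or rebuild. The targets you pass in are your own; nothing here is a platform default.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile of latency samples, with p in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_slo(samples_ms: list[float], p95_target_ms: float,
              p99_target_ms: float) -> tuple[bool, float, float]:
    """Return (pass, p95, p99) for one measurement window."""
    p95 = percentile(samples_ms, 95)
    p99 = percentile(samples_ms, 99)
    return (p95 <= p95_target_ms and p99 <= p99_target_ms), p95, p99
```

Run the same check on a steady-state window and on a drain or rebuild window. A pass in the first window and a failure in the second points at headroom, not at the codec itself.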

Compression in Block Storage and NVMe/TCP

NVMe/TCP moves block I/O over standard Ethernet and scales across racks with familiar tooling. Compression can cut east-west traffic during replication, rebuilds, remote reads, and cross-zone copy. Less traffic helps you protect headroom on high-speed links, especially when multiple tenants push peak throughput at the same time.
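
The traffic effect comes down to back-of-the-envelope arithmetic. This sketch assumes blocks are compressed once and shipped to replicas in compressed form; if a platform decompresses before replicating, the savings on the wire disappear. The function names are illustrative.

```python
def replication_wire_bytes(logical_bytes: float, remote_copies: int, ratio: float) -> float:
    """Bytes sent to remote replicas, assuming blocks travel in compressed form."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return logical_bytes * remote_copies / ratio


def transfer_seconds(logical_bytes: float, ratio: float, link_gbit_per_s: float) -> float:
    """Time to move data over one link, assuming the link (not the CPU) is the bottleneck."""
    return logical_bytes * 8 / ratio / (link_gbit_per_s * 1e9)


GiB = 1024 ** 3
print(replication_wire_bytes(10 * GiB, 2, 1.0) / GiB)  # uncompressed
print(replication_wire_bytes(10 * GiB, 2, 2.0) / GiB)  # 2:1 ratio
```

With 2 remote copies, 10 GiB of logical writes produce 20 GiB of replication traffic uncompressed and 10 GiB at a 2:1 ratio. The halved transfer time holds only while the link, not the CPU, is the bottleneck.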

CPU scheduling becomes the main risk factor. NVMe/TCP already consumes cycles for packet handling and queue processing, and compression adds more work on top. Efficient user-space I/O and a tight copy-avoidance strategy matter here. If the platform copies buffers repeatedly, compression amplifies the problem.
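
The copy-avoidance point shows up even in Python. Slicing a bytes object allocates and copies each block. Slicing a memoryview references the original buffer instead, and zlib.compress accepts either through the buffer protocol. This sketch only illustrates the pattern; a native user-space data path applies the same idea at a much lower level.

```python
import zlib

BLOCK = 4096  # assumed block size


def compress_with_copies(buf: bytes, level: int = 1) -> list[bytes]:
    # buf[i:i + BLOCK] creates a new bytes object, copying 4 KiB per block.
    return [zlib.compress(buf[i:i + BLOCK], level) for i in range(0, len(buf), BLOCK)]


def compress_zero_copy(buf: bytes, level: int = 1) -> list[bytes]:
    # memoryview slices point into the original buffer; no per-block copy is made
    # before zlib reads the data through the buffer protocol.
    view = memoryview(buf)
    return [zlib.compress(view[i:i + BLOCK], level) for i in range(0, len(buf), BLOCK)]
```

Both functions produce identical compressed output. The only difference is whether an intermediate copy of each block exists before the codec runs.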

Measuring and Benchmarking Compression in Block Storage Performance

Benchmarking needs two views of throughput: the logical throughput your application sees and the physical throughput that reaches the backend. That split shows whether compression lets you push more logical data per second while moving fewer bytes on the wire.

Use repeatable workload patterns and vary data types. Test at 4K random reads and writes, but also run 64K and 256K sequential jobs that match analytics, backups, and log pipelines. Track IOPS, bandwidth, p95 latency, p99 latency, CPU utilization, and the compression ratio per volume.
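
A small harness along these lines reports both views for each block size. It assumes zlib as the codec and uses a hypothetical mixed dataset. It measures codec cost and compression ratio in-process only. For end-to-end IOPS and p95/p99 latency against real volumes, you would still run a block-level load generator such as fio.

```python
import os
import time
import zlib


def bench(data: bytes, block_size: int, level: int = 1) -> dict:
    """Compress `data` in block_size chunks and report logical vs physical volume and rate."""
    view = memoryview(data)
    physical = 0
    start = time.perf_counter()
    for off in range(0, len(data), block_size):
        physical += len(zlib.compress(view[off:off + block_size], level))
    elapsed = max(time.perf_counter() - start, 1e-9)  # guard against a zero timer delta
    return {
        "block_size": block_size,
        "logical_bytes": len(data),
        "physical_bytes": physical,
        "ratio": len(data) / physical,
        "logical_MBps": len(data) / elapsed / 1e6,   # logical bytes processed per second
        "physical_MBps": physical / elapsed / 1e6,   # physical bytes produced per second
    }


if __name__ == "__main__":
    # Hypothetical mixed dataset: 4 MiB of log-like text plus 4 MiB of random bytes.
    half = 4 * 1024 * 1024
    line = b"ts=1700000000 level=info path=/api/orders status=200 dur_ms=3\n"
    dataset = (line * (half // len(line) + 1))[:half] + os.urandom(half)
    for bs in (4 * 1024, 64 * 1024, 256 * 1024):
        r = bench(dataset, bs)
        print(f"{bs // 1024:>4} KiB blocks: ratio={r['ratio']:.2f} "
              f"logical={r['logical_MBps']:.0f} MB/s physical={r['physical_MBps']:.0f} MB/s")
```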

When you share results with executives, translate them into unit economics. Show usable terabytes per raw terabyte and IOPS per core for each storage tier. Those two metrics make the business case clear without turning the review into a codec debate.
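
Both unit-economics metrics reduce to simple arithmetic. This sketch assumes protection overhead is expressed as raw bytes per protected byte: 3.0 for 3-way replication, 1.5 for 4+2 erasure coding. It also ignores metadata and free-space reserves.

```python
def usable_tb_per_raw_tb(compression_ratio: float, protection_overhead: float) -> float:
    """Logical TB stored per raw TB of media.

    compression_ratio:   logical bytes / physical bytes (1.0 = no compression).
    protection_overhead: raw bytes per protected byte, e.g. 3.0 for 3-way
                         replication or 1.5 for 4+2 erasure coding.
    """
    return compression_ratio / protection_overhead


def iops_per_core(iops: float, cores: float) -> float:
    """Delivered IOPS divided by the CPU cores the storage tier consumes."""
    return iops / cores


# Illustrative inputs only, not measurements.
print(f"{usable_tb_per_raw_tb(1.0, 3.0):.2f} usable TB/raw TB without compression")
print(f"{usable_tb_per_raw_tb(2.0, 3.0):.2f} usable TB/raw TB at 2:1")
```

On 3-way replicated storage, a 2:1 ratio roughly doubles usable capacity per raw terabyte, from about 0.33 to about 0.67. Measure IOPS per core with compression on and off to show what that capacity costs in CPU.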

Approaches for Improving Compression in Block Storage Performance

Most teams improve results by tightening controls, not by chasing exotic settings. These practices usually move the needle while keeping operations simple:

- Choose a codec and level per service tier, then standardize it across clusters.

- Enable adaptive logic that skips blocks that do not compress well.

- Match compression chunk size to real write patterns to avoid fragmentation in hot regions.

- Set QoS limits so batch tenants cannot steal CPU from latency-sensitive services.

- Benchmark with realistic block sizes and queue depths instead of one synthetic profile.
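
The QoS practice in the list above is often implemented with a per-tenant token bucket. Capping a batch tenant's I/O rate stops it from monopolizing the CPU that compression shares with other tenants. This is a generic sketch with hypothetical rate and burst values. The injectable clock exists only to make it testable.

```python
import time
from typing import Callable


class TokenBucket:
    """Per-tenant I/O limiter: each request spends tokens that refill at `rate` per second."""

    def __init__(self, rate: float, burst: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate          # sustained tokens (e.g. IOPS) per second
        self.capacity = burst     # maximum tokens that can accumulate
        self.tokens = burst
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # over budget: the caller should queue or delay this I/O


# Hypothetical limits: the batch tenant gets far less headroom than the transaction tier.
batch_tenant = TokenBucket(rate=2_000, burst=200)
oltp_tenant = TokenBucket(rate=50_000, burst=5_000)
```

You can also charge `cost` per KiB instead of per request. Large sequential writes, which trigger more compression work, then pay proportionally.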

Compression Strategy Comparison Matrix

Use this table to compare common compression placements and their impact on cost, CPU, and predictability across Kubernetes Storage and NVMe/TCP deployments.

| Approach | Where it runs | Typical upside | Typical downside | Best fit |
|---|---|---|---|---|
| Inline compression at the storage layer | Storage data path | Central policy control and immediate savings | Can raise tail latency if CPU runs hot | Multi-tenant Software-defined Block Storage |
| Host-level compression (filesystem/LVM) | Compute nodes | Simple per-host enablement | Shifts CPU cost to app nodes and drifts across fleets | Small clusters, single-tenant stacks |
| App-level compression | Inside the app | Workload-aware choices | Adds app complexity and inconsistent ops | A few owned services with strong SRE support |
| No compression | N/A | Lowest CPU overhead | Higher capacity and replication costs | Latency-first tiers with ample capacity |

Predictable Compression Economics with Simplyblock™

Simplyblock™ focuses on predictable performance while reducing storage overhead, which suits compression-heavy environments that require stable p99 latency. Simplyblock uses a high-performance, user-space architecture aligned with SPDK concepts, including efficient buffer handling and low-overhead I/O paths, so that compression does not become an uncontrolled CPU tax.

In Kubernetes Storage, simplyblock supports hyper-converged and disaggregated layouts, so you can place compression work where your cluster has headroom. That flexibility also helps NVMe/TCP deployments because you can separate compute saturation from storage saturation while still serving fast block I/O.

If you want stable outcomes across tenants, pair compression policy with multi-tenancy controls and QoS so each workload class stays inside its intended performance envelope.

Where Compression in Block Storage Is Heading Next

Platform teams want compression that tunes itself based on real metrics, not guesswork. Expect broader use of per-volume adaptivity that reacts to compressibility changes and backs off automatically when CPU pressure rises.
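
Per-volume adaptivity can be modeled as a simple controller with hysteresis. The level list, CPU thresholds, and minimum ratio in this sketch are hypothetical. `observed_ratio` is assumed to come from sampled trial compression, so it stays meaningful even while the volume is storing data raw.

```python
from dataclasses import dataclass

# Hypothetical effort ladder: 0 stores raw, higher values spend more CPU per byte.
LEVELS = [0, 1, 3, 6]


@dataclass
class VolumeState:
    level_idx: int = 1  # start at a light level


def next_level(state: VolumeState, observed_ratio: float, cpu_util: float,
               cpu_high: float = 0.85, cpu_low: float = 0.60,
               min_ratio: float = 1.1) -> int:
    """Choose the next compression level for one volume from recent metrics.

    observed_ratio: logical/physical ratio from sampled trial compression.
    cpu_util:       recent CPU utilization of the node doing the work (0.0 to 1.0).
    """
    if cpu_util >= cpu_high or observed_ratio < min_ratio:
        # Back off one step: CPU is hot, or the data is not paying for the cycles.
        state.level_idx = max(state.level_idx - 1, 0)
    elif cpu_util <= cpu_low:
        # Headroom and compressible data: try one step stronger.
        state.level_idx = min(state.level_idx + 1, len(LEVELS) - 1)
    # Between cpu_low and cpu_high, hold steady; the gap is the hysteresis band
    # that keeps the level from flapping on every sample.
    return LEVELS[state.level_idx]
```

The controller moves only one step per evaluation. A single noisy CPU sample therefore cannot swing a volume from raw to the heaviest level.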

Offload will matter more as well. SmartNICs, DPUs, and IPUs can take on parts of the data path, which can reduce host CPU load during heavy NVMe/TCP traffic. As more enterprises standardize on Kubernetes Storage for stateful services, compression will increasingly ship as a default platform policy instead of a manual tuning exercise.

Related Terms

Teams often review these glossary pages alongside Compression in Block Storage when they set capacity-efficiency targets and latency SLOs for Kubernetes Storage and Software-defined Block Storage, especially on NVMe/TCP backends.

Zero-Copy I/O

SmartNIC

Storage High Availability

Asynchronous Storage Replication

Questions and Answers

Compression reduces the physical space required to store data by encoding it more compactly at the block level. This improves storage utilization, cuts the bytes written to media and moved over the network, and lowers costs, especially in cloud environments where capacity directly drives pricing.

Yes, but selectively. Compression suits workloads with redundant or text-heavy data. For I/O-intensive databases, however, it must run with minimal overhead so that compression on writes and decompression on reads do not add latency or create CPU bottlenecks.

Block storage compression happens at the volume level and is transparent to the application, while file storage compression occurs within the file system itself. For Kubernetes block volumes, block-level compression ensures broader compatibility and infrastructure-wide efficiency.

If implemented inefficiently, compression can add CPU overhead and latency to reads and writes. Modern storage platforms limit that cost with fast codecs, hardware acceleration, and adaptive logic that skips incompressible blocks, balancing savings against workload requirements.

Simplyblock integrates compression into its logical volume architecture, allowing users to gain space efficiency without manual configuration. It works seamlessly with snapshots, replication, and NVMe over TCP (NVMe/TCP) to maintain speed and data durability.