CSI Controller Plugin


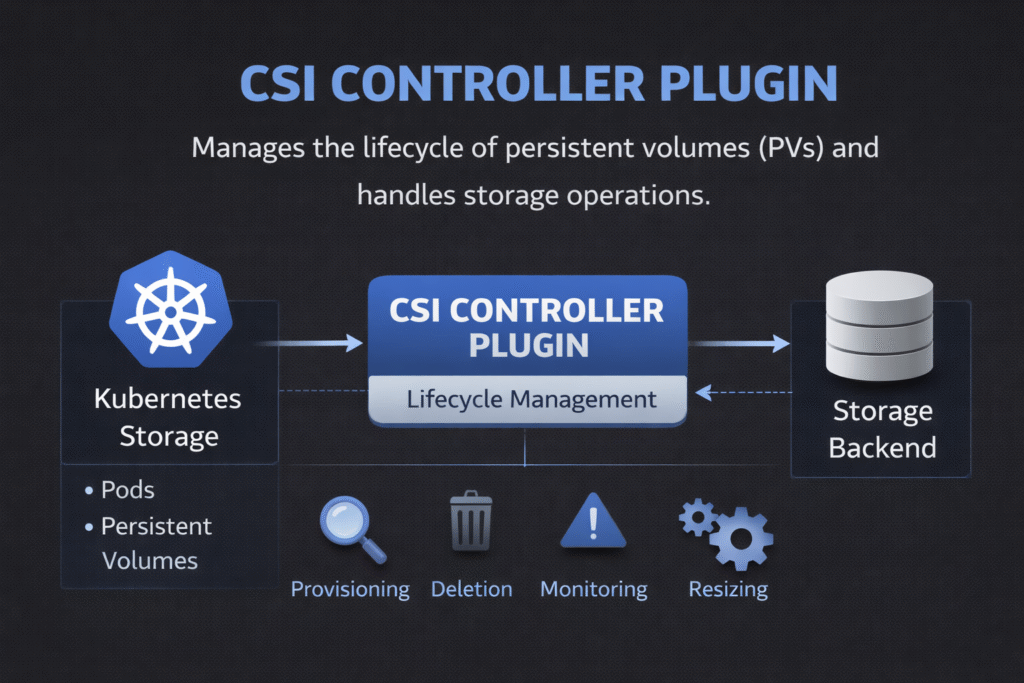

A CSI Controller Plugin typically runs in the cluster as a Deployment (sometimes a StatefulSet) and handles cluster-wide volume actions. It creates and deletes volumes, manages attach and detach flows (when the driver supports them), and coordinates lifecycle tasks through the CSI API. The Node Plugin handles node-local work like mount, unmount, and device setup, so the controller stays focused on decisions that apply across the cluster.

This split matters for execs and operators because it defines where risk concentrates. When the CSI Controller Plugin slows down or fails, new PersistentVolumeClaims can stall, scale-outs can lag, and recovery workflows can queue behind retries. In Kubernetes Storage, the controller often becomes the “pace car” for stateful automation, even when the backend storage runs fast.

A well-designed controller also supports Software-defined Block Storage goals. It turns storage intent into repeatable automation and reduces ticket-driven operations that slow delivery.

CSI Controller Plugin Responsibilities and Boundaries

The CSI Controller Plugin owns actions that need a global view of the cluster. It typically provisions new volumes, removes old ones, and brokers control-plane calls for publish and unpublish workflows. It also interacts with CSI sidecars, such as external-provisioner, external-attacher, external-resizer, and snapshot controllers, depending on what features you enable.

The controller does not mount filesystems, and it does not manage per-node device mapping. Those jobs sit with the Node Plugin and kubelet. This boundary helps you isolate failures. A node-local mount issue impacts one workload, while a controller issue can block many workloads at once.
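The boundary can be made concrete by mapping CSI RPC names to the plugin that serves them. The RPC names below come from the CSI specification. The "caller" column reflects common Kubernetes deployments and is an assumption: `ControllerPublishVolume` only runs when the driver advertises publish/unpublish support, and `NodeStageVolume` only when it advertises stage/unstage support. The `owner` and `blast_radius` helpers are hypothetical.

```python
# Which CSI service handles each RPC, and which component usually calls it.
# Caller names reflect typical Kubernetes setups; treat them as assumptions.
CONTROLLER_RPCS = {
    "CreateVolume": "external-provisioner",
    "DeleteVolume": "external-provisioner",
    "ControllerPublishVolume": "external-attacher",
    "ControllerUnpublishVolume": "external-attacher",
    "ControllerExpandVolume": "external-resizer",
    "CreateSnapshot": "external-snapshotter",
    "DeleteSnapshot": "external-snapshotter",
}

NODE_RPCS = {
    "NodeStageVolume": "kubelet",
    "NodeUnstageVolume": "kubelet",
    "NodePublishVolume": "kubelet",
    "NodeUnpublishVolume": "kubelet",
    "NodeExpandVolume": "kubelet",
}


def owner(rpc: str) -> tuple:
    """Return (plugin, caller) for a CSI RPC name."""
    if rpc in CONTROLLER_RPCS:
        return ("controller", CONTROLLER_RPCS[rpc])
    if rpc in NODE_RPCS:
        return ("node", NODE_RPCS[rpc])
    raise KeyError(f"not a controller or node RPC: {rpc}")


def blast_radius(rpc: str) -> str:
    """Controller failures affect many workloads; node failures affect one node."""
    plugin, _ = owner(rpc)
    return "cluster-wide" if plugin == "controller" else "single node"
```

For example, `blast_radius("ControllerPublishVolume")` returns `"cluster-wide"`, while `blast_radius("NodeStageVolume")` returns `"single node"`, which mirrors the failure isolation described above.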

Teams should treat the controller as a production service with SLOs. Define acceptable time-to-provision, attach latency, and retry behavior. Align those goals with the business impact of delayed deploys and slower failover.
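One way to express these SLOs is as "a fraction of operations must finish within a latency target". The sketch below assumes you already collect per-operation durations in seconds; the targets and objectives in `SLOS` are hypothetical placeholders, not recommended values.

```python
def slo_compliance(durations_s, target_s):
    """Fraction of operations that finished within target_s seconds."""
    if not durations_s:
        raise ValueError("no samples")
    within = sum(1 for d in durations_s if d <= target_s)
    return within / len(durations_s)


def check_slos(observed, slos):
    """Return the names of SLOs whose compliance falls below the objective."""
    failing = []
    for name, (target_s, objective) in slos.items():
        if slo_compliance(observed[name], target_s) < objective:
            failing.append(name)
    return sorted(failing)


# Hypothetical objectives: (latency target in seconds, required fraction).
SLOS = {
    "time_to_provision": (30.0, 0.95),
    "attach_latency": (15.0, 0.99),
}
```

With 19 of 20 provisions under 30 s, `time_to_provision` meets its 95% objective; with 98 of 100 attaches under 15 s, `attach_latency` misses its 99% objective and is reported.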


CSI Controller Plugin Fit in Kubernetes Storage Operations

Kubernetes Storage relies on declarative intent. A developer submits a claim, Kubernetes selects a StorageClass, and the platform provisions storage through CSI. The CSI Controller Plugin sits at the center of that automation loop, so it directly affects “time to ready” for stateful Pods.
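The claim-to-StorageClass-to-CSI flow can be sketched as a small resolution step. The sketch models two real Kubernetes rules: an omitted `storageClassName` falls back to the class annotated with `storageclass.kubernetes.io/is-default-class: "true"`, and an empty string opts out of dynamic provisioning. The dataclasses, the `pvc-<claim>` naming scheme, and the driver name `csi.example.com` are hypothetical, and the request dict only approximates the shape of a CSI `CreateVolume` call.

```python
from dataclasses import dataclass, field
from typing import Optional

DEFAULT_CLASS_ANNOTATION = "storageclass.kubernetes.io/is-default-class"


@dataclass
class StorageClass:
    name: str
    provisioner: str
    parameters: dict = field(default_factory=dict)
    annotations: dict = field(default_factory=dict)


@dataclass
class Claim:
    name: str
    request_bytes: int
    storage_class_name: Optional[str] = None  # None means the field was omitted


def resolve_class(claim, classes):
    """Pick the StorageClass a claim uses, or None for static binding only."""
    if claim.storage_class_name == "":
        return None  # empty string disables dynamic provisioning
    if claim.storage_class_name is None:
        # Simplification: take the first default; clusters should have one.
        defaults = [c for c in classes.values()
                    if c.annotations.get(DEFAULT_CLASS_ANNOTATION) == "true"]
        return defaults[0] if defaults else None
    return classes.get(claim.storage_class_name)


def build_create_volume(claim, classes, driver_name):
    """Return a CreateVolume-style request, or None if this driver skips it."""
    sc = resolve_class(claim, classes)
    if sc is None or sc.provisioner != driver_name:
        return None
    return {
        "name": f"pvc-{claim.name}",  # hypothetical naming scheme
        "capacity_range": {"required_bytes": claim.request_bytes},
        "parameters": dict(sc.parameters),
    }
```

Each step in this chain adds latency to "time to ready", so a slow or misconfigured controller shows up as claims that stay Pending even when the backend is healthy.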

Topology features raise the stakes. In multi-zone clusters, the controller must honor placement hints and coordinate with scheduling rules, or it can create volumes in the wrong failure domain. That mistake shows up as cross-zone traffic, higher latency, and slower recovery during reschedules.
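CSI carries placement hints as `requisite` and `preferred` topology lists, and the spec asks the driver to try preferred segments in order while staying inside the requisite set. The sketch below follows that rule for a single zone key. In Kubernetes these lists are typically derived from the StorageClass `allowedTopologies` and, with `WaitForFirstConsumer` binding, from the node the scheduler picked. `backend_zones` (where the backend has capacity) and `pick_zone` are hypothetical.

```python
ZONE_KEY = "topology.kubernetes.io/zone"


def pick_zone(requisite, preferred, backend_zones):
    """Choose a zone that honors CSI-style requisite/preferred topology.

    requisite and preferred are lists of segment dicts, e.g. {ZONE_KEY: "zone-a"}.
    backend_zones is a hypothetical set of zones with available capacity.
    """
    if not requisite and not preferred:
        # No placement hints at all: any zone with capacity works.
        if backend_zones:
            return sorted(backend_zones)[0]
        raise RuntimeError("backend has no zones with capacity")
    allowed = {seg[ZONE_KEY] for seg in requisite if ZONE_KEY in seg}
    # Try preferred segments first, in order, within the requisite set.
    for seg in preferred:
        zone = seg.get(ZONE_KEY)
        if zone in backend_zones and (not allowed or zone in allowed):
            return zone
    # Fall back to any requisite zone the backend can serve.
    for seg in requisite:
        zone = seg.get(ZONE_KEY)
        if zone in backend_zones:
            return zone
    raise RuntimeError("no zone satisfies the topology requirement")
```

Failing loudly, rather than silently placing the volume elsewhere, avoids the cross-zone traffic and slow reschedules described above.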

Multi-tenancy adds another layer. The controller can become a shared choke point if noisy namespaces generate large waves of claims, resizes, and snapshot requests. Strong designs pair rate controls, backoff rules, and clear quotas with QoS policies in the storage layer.
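Rate controls and backoff rules can be sketched as a per-namespace token bucket plus exponential backoff with full jitter. This is a generic illustration, not how any particular sidecar or simplyblock implements throttling; the class names, rates, and the 300 s cap are hypothetical. Time is passed in explicitly so the behavior is easy to test; a real loop would use `time.monotonic()`.

```python
import random


class TokenBucket:
    """Allow `rate` requests per second with bursts up to `burst`."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = 0.0

    def allow(self, now):
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class NamespaceLimiter:
    """One bucket per namespace, so a noisy tenant cannot drain the others."""

    def __init__(self, rate, burst):
        self.rate, self.burst = rate, burst
        self.buckets = {}

    def allow(self, namespace, now):
        bucket = self.buckets.setdefault(namespace, TokenBucket(self.rate, self.burst))
        return bucket.allow(now)


def backoff_delay(attempt, base=1.0, cap=300.0, rng=random.random):
    """Full-jitter backoff: random delay in [0, min(cap, base * 2**attempt))."""
    return rng() * min(cap, base * (2 ** attempt))
```

Per-namespace buckets contain a claim storm from one team, and jittered backoff keeps retries from many failed requests from landing on the controller at the same moment.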

CSI Controller Plugin Behavior on NVMe/TCP Backends

NVMe/TCP delivers fast block I/O over standard Ethernet, but the control plane still shapes user experience during day-2 events. Provisioning, attach orchestration, and recovery sequences all depend on the controller’s health and throughput.

CPU efficiency matters here. NVMe/TCP environments often run high-IOPS workloads, which leads teams to scale clusters aggressively. That growth increases control-plane churn: more claims, more reschedules, more snapshot actions, and more resizes. If the controller burns cycles on retries and slow API calls, it can lag behind demand and build a backlog.
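The backlog effect is simple arithmetic: when requests arrive faster than the controller completes them, the queue grows linearly, and retries eat into completion capacity. The fluid model below ignores burstiness and is only a back-of-the-envelope sketch; all numbers are hypothetical.

```python
def effective_throughput(ops_per_min, avg_retries_per_op):
    """Completed operations per minute when each op needs extra retry attempts."""
    return ops_per_min / (1 + avg_retries_per_op)


def backlog_after(minutes, arrivals_per_min, served_per_min, start_backlog=0):
    """Queue depth after `minutes` of steady load (simple fluid model)."""
    growth = arrivals_per_min - served_per_min
    return max(0, start_backlog + growth * minutes)


def drain_minutes(backlog, arrivals_per_min, served_per_min):
    """Minutes to clear a backlog once load drops; inf if it never drains."""
    spare = served_per_min - arrivals_per_min
    return float("inf") if spare <= 0 else backlog / spare
```

For example, a controller that could complete 100 operations per minute but averages 0.25 retries per operation delivers only 80. A 10-minute deploy wave of 120 claims per minute then leaves a backlog of 400, and if arrivals fall to 20 per minute afterwards, that backlog takes about 6.7 minutes to drain.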

Software-defined Block Storage platforms that use efficient user-space I/O paths can reduce CPU pressure on storage nodes. That efficiency does not remove controller work, but it helps keep the overall system stable when the cluster runs hot.

Operational Signals and Failure Modes to Watch

Operators keep controller behavior predictable by watching a small set of signals and acting early:

- Track provisioning time from claim creation to bound volume, and alert on drift.

- Monitor controller CPU, memory, and restart counts, and correlate spikes with deploy waves.

- Watch sidecar logs for repeated retries, permission errors, and API throttling events.

- Measure attach and detach duration during drains, then compare results across node pools.

- Confirm leader election health when you run multiple replicas for high availability.

These signals help teams catch issues before they turn into stuck claims, slow rollouts, or delayed recovery.
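The first and fourth signals can be checked with a few lines of code. The sketch assumes you already export claim-to-bound durations and attach durations (for example from events or sidecar metrics); the 1.5x ratio, the minimum sample count, and the function names are hypothetical.

```python
from statistics import median


def provisioning_drift(baseline_s, recent_s, ratio_threshold=1.5, min_samples=5):
    """Flag drift when the recent median claim-to-bound time exceeds the
    baseline median by ratio_threshold. Thresholds here are hypothetical."""
    if len(baseline_s) < min_samples or len(recent_s) < min_samples:
        return False  # not enough data to judge
    return median(recent_s) > ratio_threshold * median(baseline_s)


def slowest_pool(attach_by_pool):
    """Return the node pool with the highest median attach time."""
    return max(attach_by_pool, key=lambda pool: median(attach_by_pool[pool]))
```

Comparing medians rather than single samples keeps one slow claim from paging anyone, while a sustained shift across a deploy wave still trips the alert.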

Benchmarking Controller-Side Latency and Throughput

Benchmarking the CSI Controller Plugin means measuring time-based outcomes, not just IOPS. Start with the claim-to-ready time under light load, then repeat under stress. Add tests that match your reality: burst provisioning during deploys, rolling node drains, and snapshot-heavy backup windows.

Capture p95 and p99 for provisioning time, attach time, and resize time. Include Kubernetes API rate limits in your review because throttling can mimic storage slowdown. Compare results across StorageClasses because the controller often applies different logic per class.
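A benchmark summary grouped by StorageClass and operation can use the nearest-rank percentile definition. The input format, `(storage_class, operation, seconds)` tuples, is a hypothetical choice; adapt it to whatever your test harness records.

```python
import math
from collections import defaultdict


def nearest_rank(samples, pct):
    """Nearest-rank percentile for pct in (0, 100] over a non-empty list."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(results):
    """results: iterable of (storage_class, operation, seconds) tuples.
    Returns {(storage_class, operation): {"p95": ..., "p99": ...}}."""
    grouped = defaultdict(list)
    for storage_class, operation, seconds in results:
        grouped[(storage_class, operation)].append(seconds)
    return {
        key: {"p95": nearest_rank(vals, 95), "p99": nearest_rank(vals, 99)}
        for key, vals in grouped.items()
    }
```

Keeping StorageClass in the grouping key makes per-class differences visible instead of averaging them away; tail percentiles from small sample sets are noisy, so run enough iterations per class before comparing.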

Controller Plugin Deployment Comparison Matrix

Use this table to compare common controller deployment patterns and their trade-offs in Kubernetes Storage environments.

| Pattern | What teams gain | What teams risk | Best fit |
|---|---|---|---|
| Single-replica controller | Simple ops and easier debugging | One pod can block provisioning during failure | Small clusters, dev/test |
| Multi-replica with leader election | Better availability and faster recovery | Only the leader serves requests, and a crashed leader's lease must expire before a standby takes over | Production clusters |
| Split controllers by workload tier | Isolation for critical namespaces | More moving parts and policy drift | Large multi-tenant platforms |
| Controller plus full sidecar stack | Feature coverage for attach, resize, and snapshots | More upgrades and version pinning | Enterprises using the full CSI feature set |

Predictable Kubernetes Storage Control with Simplyblock™

Simplyblock™ supports Kubernetes Storage through CSI and focuses on predictable operations under load. The platform targets NVMe/TCP deployments and emphasizes CPU-efficient data-path design, which helps storage nodes keep headroom for background work during spikes. That headroom supports stable day-2 behavior when the cluster triggers reschedules, rebuilds, snapshot tasks, and provisioning bursts.

Simplyblock also supports multi-tenancy and QoS controls in the storage layer. Those controls help platform teams protect p99 latency for critical services while other teams run noisy workflows. In Software-defined Block Storage environments, this combination reduces operational friction and improves service consistency across clusters.

Where CSI Controller Plugins Are Headed Next

Teams want fewer manual guardrails and more automated safety. Expect stronger default rate limits, better backoff behavior, and more direct signals that tie claim delays to root causes. Controller architectures will also keep improving around scale-out, especially for clusters that run heavy stateful services.

As NVMe/TCP grows in production, platform teams will push for tighter control over lifecycle bursts. They will also demand clearer separation between critical and non-critical workflows, so backup storms do not slow customer-facing deploys.

Related Terms

Teams often review these glossary pages alongside the CSI Controller Plugin when they tune provisioning speed, attach flows, and reliability for Kubernetes Storage.

CSI Driver vs Sidecar

CSI Topology Awareness

Block Storage CSI

QoS Policy in CSI

Questions and Answers

The CSI Controller Plugin is a key component of the CSI architecture responsible for handling non-node-specific volume operations like provisioning, attaching, and snapshotting. It typically runs as a Deployment alongside CSI sidecars such as external-provisioner, rather than on every node, and enables advanced features in platforms like Simplyblock’s block storage.

While the Controller Plugin handles global operations like volume creation and deletion, the Node Plugin performs node-level tasks such as mounting or unmounting. This separation of concerns lets cluster-wide work scale independently of per-node work and supports moving a volume between nodes, since detach from the old node and attach to the new one are coordinated centrally.

Dynamic provisioning depends on the Controller Plugin, which creates volumes in response to PersistentVolumeClaim (PVC) requests. Without it, only statically provisioned (pre-created) PersistentVolumes can be used. Simplyblock’s architecture supports dynamic provisioning via a Kubernetes-native CSI stack.

Most advanced CSI drivers implement snapshot and clone functionality through the Controller Plugin: snapshots through the CreateSnapshot call, and clones through CreateVolume with an existing volume as the content source. These capabilities are essential for database protection, CI/CD workflows, and rapid test environment creation.

Key metrics include provisioning latency, attach/detach errors, and the latency and error rate of calls to the Kubernetes API server. Logs from the Controller Plugin and its sidecars often reveal issues with volume provisioning or access control. For resilient setups, Simplyblock provides observability integrations for CSI components.