Kubernetes Topology Constraints


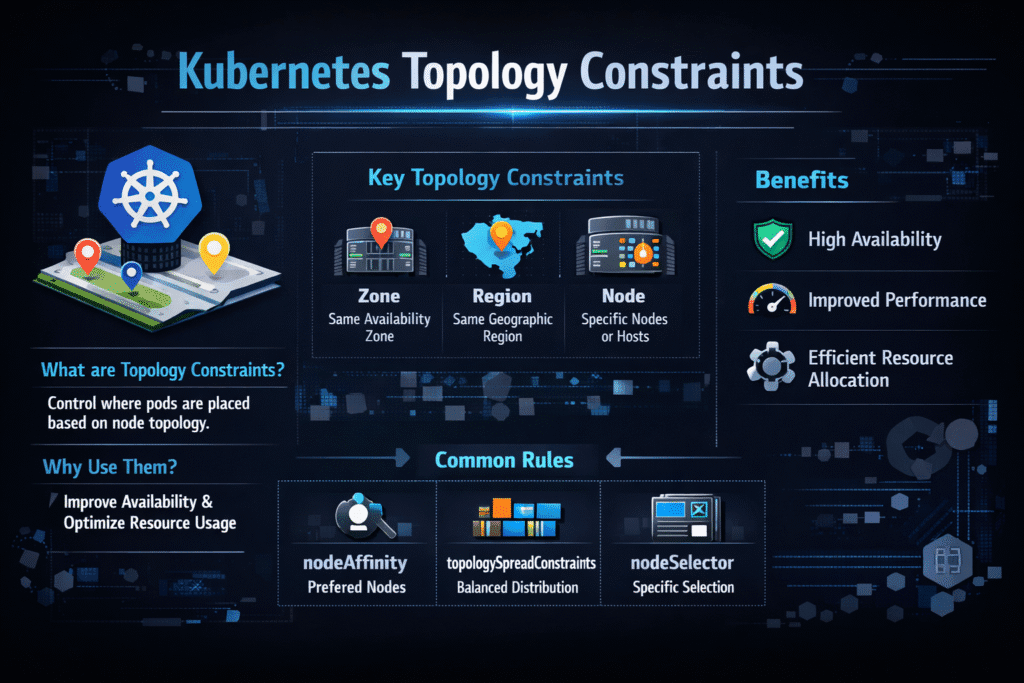

Kubernetes Topology Constraints tell the scheduler how to place Pods across failure domains such as zones, nodes, and racks. Teams use them to keep replicas apart, reduce the blast radius of a failure, and avoid uneven placement that can turn a small outage into a platform incident.

Topology controls sit next to other scheduling levers like node selectors, affinity and anti-affinity, taints, and tolerations. The difference is intent: topology constraints focus on distribution across domains, not just “run here” or “do not run there.” For stateful services, topology also shapes data risk, because volume location and attach rules can limit where a Pod can run.

Kubernetes Topology Constraints – What the Scheduler Actually Enforces

Topology spread constraints work through labels and skew. You define a topology key (for example, the zone label topology.kubernetes.io/zone), a maximum skew (maxSkew), and a label selector that matches the Pods you want to balance. The scheduler then tries to keep the gap between the Pod count in any one domain and the count in the least-populated domain at or below that maximum.
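The skew check can be sketched in a few lines of Python. This is a simplified model of the rule, not the scheduler's implementation: it ignores minDomains, node filtering, and taints, and the zone names and function names are made up for illustration.

```python
from collections import Counter


def skew_if_placed(pod_zones, eligible_zones, candidate):
    """Skew that would result from adding one matching Pod to `candidate`.

    Simplified model: (Pods in the candidate zone after placement)
    minus (the current minimum Pod count across all eligible zones).
    """
    counts = Counter({zone: 0 for zone in eligible_zones})
    counts.update(pod_zones)  # count existing matching Pods per zone
    return counts[candidate] + 1 - min(counts.values())


def allowed_zones(pod_zones, eligible_zones, max_skew):
    """Zones where one more Pod keeps the skew at or below max_skew."""
    return [
        zone
        for zone in eligible_zones
        if skew_if_placed(pod_zones, eligible_zones, zone) <= max_skew
    ]


# Hypothetical cluster: two Pods in zone-a, one in zone-b, none in zone-c.
existing = ["zone-a", "zone-a", "zone-b"]
zones = ["zone-a", "zone-b", "zone-c"]
print(allowed_zones(existing, zones, max_skew=1))  # ['zone-c']
```

With a maximum skew of 1, only the empty zone qualifies, which is why a strict rule depends on every zone having free capacity.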

Two details drive most outcomes in production.

First, your labels must stay clean. If teams add custom topology keys and forget to apply them everywhere, the scheduler cannot spread evenly, and Pods may pile up in a single domain.
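A label audit is easy to automate. The sketch below assumes you have already loaded node labels into a dictionary (for example, from the output of kubectl get nodes -o json); the node names and the helper name are hypothetical.

```python
def nodes_missing_label(nodes, topology_key):
    """Return the names of nodes that lack a non-empty value for topology_key.

    `nodes` maps node name -> dict of labels.
    """
    return sorted(
        name for name, labels in nodes.items() if not labels.get(topology_key)
    )


# Hypothetical inventory: worker-3 was added without a zone label.
inventory = {
    "worker-1": {"topology.kubernetes.io/zone": "zone-a"},
    "worker-2": {"topology.kubernetes.io/zone": "zone-b"},
    "worker-3": {"kubernetes.io/hostname": "worker-3"},
}
print(nodes_missing_label(inventory, "topology.kubernetes.io/zone"))  # ['worker-3']
```

Running a check like this on a schedule, or in the pipeline that registers new nodes, catches gaps before they distort placement.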

Second, constraints can block scheduling. A strict rule can keep a rollout from finishing when one zone runs low on capacity. That is not a bug. The rule does its job, and your platform needs a plan for that trade-off.
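One way to manage that trade-off is to make the strict rule explicit and pair it with a softer one. The sketch below builds the topologySpreadConstraints fragment of a Pod spec as plain Python data and prints it as JSON. The field names are the standard Kubernetes ones; the app label "checkout" and the helper name are placeholders.

```python
import json


def spread_constraint(app, topology_key, max_skew=1, hard=True):
    """Build one topologySpreadConstraints entry.

    hard=True  -> DoNotSchedule: the Pod stays Pending if the rule cannot be met.
    hard=False -> ScheduleAnyway: the scheduler prefers balance but still places the Pod.
    """
    return {
        "maxSkew": max_skew,
        "topologyKey": topology_key,
        "whenUnsatisfiable": "DoNotSchedule" if hard else "ScheduleAnyway",
        "labelSelector": {"matchLabels": {"app": app}},
    }


# Strict across zones, best-effort across individual nodes.
pod_spec_fragment = {
    "topologySpreadConstraints": [
        spread_constraint("checkout", "topology.kubernetes.io/zone", hard=True),
        spread_constraint("checkout", "kubernetes.io/hostname", hard=False),
    ]
}
print(json.dumps(pod_spec_fragment, indent=2))
```

Keeping the hard rule at the zone level and the soft rule at the node level is one common pattern; whether it fits depends on how much spare capacity each zone holds.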

🚀 Make Kubernetes Topology Constraints Work with Stateful Storage

Use simplyblock guidance on CSI Topology Awareness to keep Kubernetes Storage schedulable over NVMe/TCP with Software-defined Block Storage.


Kubernetes Topology Constraints in Kubernetes Storage Decisions

Kubernetes Storage adds a hard reality: a Pod must run where it can mount its volume. That creates a two-sided placement problem. The scheduler picks a node, and the storage layer must provide a compatible volume in the same failure domain, or the Pod can sit pending.

Topology-aware provisioning helps reduce that mismatch. With delayed volume binding (the StorageClass setting volumeBindingMode: WaitForFirstConsumer), Kubernetes waits until the scheduler has picked a node for the Pod, then provisions the volume in a matching domain. This keeps stateful rollouts smoother in multi-zone clusters, and it cuts “wrong zone” volume mistakes.
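As a rough illustration, the following Python sketch assembles a StorageClass manifest with delayed binding and an optional zone restriction. The StorageClass fields are standard Kubernetes API fields, but the class name, the provisioner string csi.example.com, and the zone names are placeholders, not the values of any particular CSI driver.

```python
import json


def storage_class(name, provisioner, zones=None):
    """Build a StorageClass manifest that delays binding until a Pod is scheduled."""
    manifest = {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,  # placeholder; use your CSI driver's name
        "volumeBindingMode": "WaitForFirstConsumer",
    }
    if zones:
        # Optionally restrict provisioning to the listed zones.
        manifest["allowedTopologies"] = [
            {
                "matchLabelExpressions": [
                    {"key": "topology.kubernetes.io/zone", "values": list(zones)}
                ]
            }
        ]
    return manifest


sc = storage_class("fast-zonal", "csi.example.com", zones=["zone-a", "zone-b"])
print(json.dumps(sc, indent=2))
```

The JSON output can be saved to a file and applied with kubectl apply -f, since kubectl accepts JSON as well as YAML manifests.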

Software-defined Block Storage can also reduce tight pins. When the storage layer supports flexible placement policies and strong isolation, platform teams can meet availability goals without forcing every application team to manage complex node rules.

Kubernetes Topology Constraints with NVMe/TCP Storage Networks

Transport choice affects how painful “not local” becomes. NVMe/TCP runs over standard Ethernet, so teams can scale storage networks without specialized fabrics. That matters when topology constraints force a reschedule and the storage path changes.

Efficient I/O paths also help during disruption. If a drain or rollout shifts replicas across domains, the platform benefits from lower CPU overhead in the data path. SPDK-based user-space designs can reduce jitter and keep tail latency steadier while the scheduler makes placement choices under pressure.

This is where Kubernetes Storage, NVMe/TCP, and topology intent meet: the scheduler places Pods, the storage layer attaches volumes, and the network path must stay predictable.

Signals That Show Whether Topology Rules Help or Hurt

Look past “Pod scheduled” events and measure user impact.

Track application p95 and p99 latency, cross-zone traffic, and retry rates during normal load. Add rollout metrics such as time-to-ready, reschedule counts, and attach or mount time for stateful Pods. When topology rules work, latency stays stable, and rollouts finish without long pending windows.

Run a controlled test before you tighten constraints. Keep a steady I/O profile, apply a new spread rule, and watch for longer scheduling time or tail-latency jumps. If performance moves, you likely introduced remote I/O, contention, or both.
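A before-and-after comparison can be as simple as the sketch below. It assumes you have exported request latencies in milliseconds from your own monitoring for a baseline run and a run with the new spread rule; the 10% tolerance and the function names are arbitrary choices for illustration.

```python
import statistics


def tail_latency(samples_ms):
    """Return p95 and p99 of a list of latency samples (milliseconds)."""
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p95": cuts[94], "p99": cuts[98]}


def regressed(baseline_ms, candidate_ms, tolerance=0.10):
    """Flag each percentile that grew by more than `tolerance` over the baseline."""
    base = tail_latency(baseline_ms)
    cand = tail_latency(candidate_ms)
    return {key: cand[key] > base[key] * (1 + tolerance) for key in base}


# Synthetic data: the candidate run is uniformly twice as slow as the baseline.
baseline = [float(ms) for ms in range(1, 101)]
candidate = [ms * 2 for ms in baseline]
print(regressed(baseline, candidate))
```

A flagged percentile is a prompt to check for remote I/O or contention, not proof of either; run the test more than once before drawing conclusions.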

Moves That Improve Topology Constraint Results

- Start with zone spreading for replica sets and stateful sets, then tighten rules only for tier-1 services.

- Keep topology labels consistent, audited, and owned by the platform team.

- Use node affinity for true hardware needs, and use spread constraints for availability goals, so you avoid over-pinning by default.

- Align storage provisioning with scheduling intent, so volumes land where Pods can run.

- Add multi-tenant QoS in the storage layer, so one workload cannot erase the value of careful placement.

Comparing Topology Tools for Availability and Data Locality

The table below compares common ways teams enforce topology intent, including how each option behaves for stateful workloads.

| Mechanism | Primary goal | What it controls | Common downside | Best fit |
|---|---|---|---|---|
| Topology spread constraints | Even distribution across domains | Replica placement | Can block scheduling when capacity skews | High availability across zones and nodes |
| Pod anti-affinity | Prevent co-location | “Do not share” rules | Rules become too strict over time | Critical replicas that must stay apart |
| Node affinity | Target specific nodes | Hardware or pool selection | Sticky placement complicates drains | GPUs, local NVMe, special NICs |
| Topology-aware volume provisioning | Match the volume domain to the Pod domain | Volume placement | Wrong settings cause pending Pods | Multi-zone Kubernetes Storage |
| Software-defined Block Storage policies | Placement, isolation, and QoS | Data and tenancy controls | Bad defaults can hide contention | Shared clusters with mixed workloads |

Topology-Aware Kubernetes Storage with Simplyblock™

Simplyblock™ supports Kubernetes Storage with Software-defined Block Storage that fits both hyper-converged and disaggregated designs. That flexibility helps platform teams apply topology intent without building a separate storage island per workload class.

For performance-sensitive services, simplyblock supports NVMe/TCP and an SPDK-based data path to reduce overhead and keep latency steadier during rollouts, drains, and reschedules. Multi-tenancy and QoS controls help preserve intent when several teams share the same cluster.

Next Steps for Kubernetes Topology Constraints in Large Clusters

Teams want tighter alignment between scheduling intent and real cluster state. Expect more automated correction when scaling changes domain balance, better visibility into “why this node,” and stronger ecosystem patterns for topology-aware storage workflows.

As stateful workloads expand in Kubernetes, topology rules will matter more, not less. The winners will treat topology as a platform contract, and they will pair it with storage that can keep up under change.

Related Terms

Teams review these pages with Kubernetes Topology Constraints to keep scheduling and storage aligned.

- What is Kubernetes Node Affinity

- What is Local Node Affinity

- What is CSI Driver

- What is Dynamic Provisioning in Kubernetes

Questions and Answers

Topology constraints define rules for scheduling Pods and provisioning volumes based on node, zone, or region. These constraints improve data locality and help avoid cross-zone latency, which matters for applications that require fast, predictable access to persistent storage.

CSI drivers use topology keys to provision volumes in specific zones or nodes based on pod placement. This ensures storage is co-located with compute, enabling high performance and resilience. Simplyblock supports topology-aware CSI provisioning out of the box.

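Hard constraints (like requiredDuringSchedulingIgnoredDuringExecution for affinity, or whenUnsatisfiable: DoNotSchedule for spread constraints) enforce strict placement rules, while soft ones (preferredDuringScheduling... for affinity, or whenUnsatisfiable: ScheduleAnyway for spread constraints) express a best-effort preference. Using a mix enables a balance between performance and availability, especially in multi-zone deployments.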

Yes. StorageClasses can include allowedTopologies to restrict the zones where volumes can be provisioned. Combined with volumeBindingMode: WaitForFirstConsumer, this keeps persistent volumes in the same zone as the Pods that use them, which reduces cross-zone egress costs and latency.

Simplyblock’s CSI integration honors Kubernetes topology keys for both provisioning and attachment. This allows high-performance block volumes to be scheduled close to workloads, improving throughput and availability across zones or failure domains.