Kubernetes CSI Inline Volumes

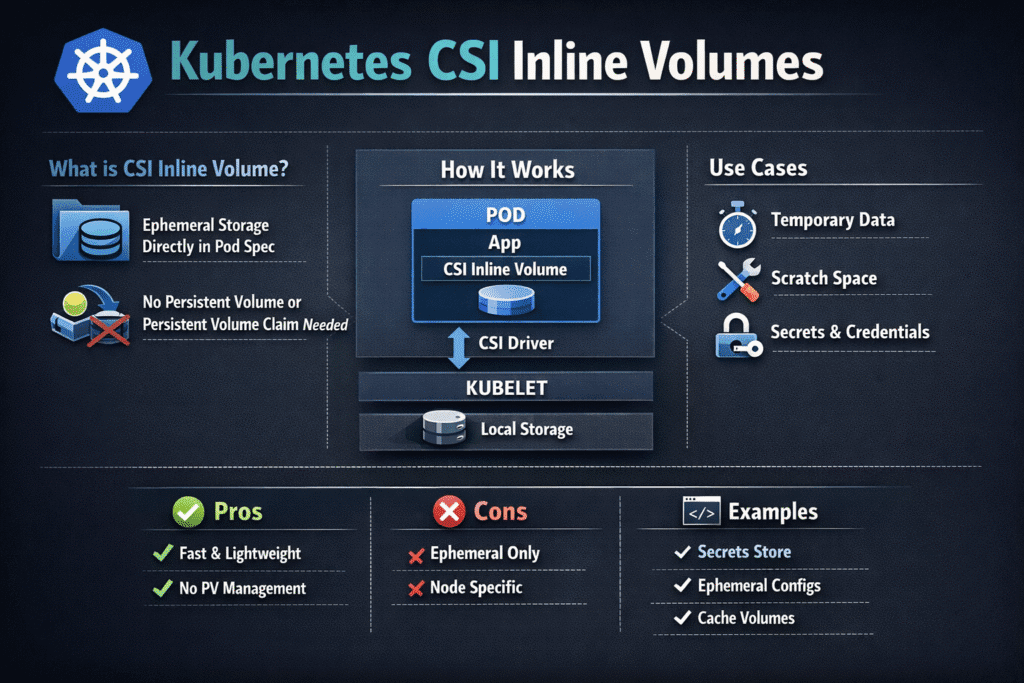

Kubernetes CSI Inline Volumes let a Pod request CSI-backed storage directly in the Pod spec, and Kubernetes ties that volume’s lifecycle to the Pod. Teams use this pattern when they need fast, short-lived storage for caches, scratch space, staging, or pipeline spillover, and they do not want a full PersistentVolumeClaim workflow.
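
As a minimal sketch of what "directly in the Pod spec" means, the Python below builds a Pod manifest whose volume uses the `csi` volume source instead of a PersistentVolumeClaim reference. The driver name `scratch.csi.example.com` and the `size` attribute are hypothetical placeholders; real attribute keys depend on the driver you install.

```python
import json

def inline_scratch_pod(name, image, driver, attributes):
    """Build a Pod manifest that declares a CSI ephemeral inline volume."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                "volumeMounts": [{"name": "scratch", "mountPath": "/scratch"}],
            }],
            "volumes": [{
                "name": "scratch",
                # The `csi` source takes the place of a persistentVolumeClaim reference.
                "csi": {
                    "driver": driver,
                    # Attribute values must be strings; the keys are driver-specific.
                    "volumeAttributes": attributes,
                },
            }],
        },
    }

pod = inline_scratch_pod(
    name="build-job",
    image="busybox:1.36",
    driver="scratch.csi.example.com",  # hypothetical driver name
    attributes={"size": "10Gi"},       # hypothetical, driver-specific key
)
print(json.dumps(pod, indent=2))
```

Kubernetes accepts JSON manifests, so the printed output could be piped to `kubectl apply -f -` once the placeholders are replaced with values your driver documents.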

Inline volumes work best when the data has a tight lifecycle and a simple recovery story. If a workload can rebuild the data, inline volumes can reduce overhead. If the workload needs durable state, PVCs still fit better.

From a platform view, inline volumes change who “owns” storage choices. App teams can encode driver parameters in Pod templates. Platform teams usually respond with guardrails, validated templates, and policy checks to keep Kubernetes Storage consistent.
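
One way to picture such a policy check is a small function that scans a Pod manifest for inline CSI volumes and reports anything outside an allowlist. This is a sketch, not an admission controller: the allowlisted driver name and attribute keys are hypothetical, and a real deployment would more likely enforce the same rule through an admission policy.

```python
ALLOWED_DRIVERS = {"scratch.csi.example.com"}  # hypothetical allowlist
ALLOWED_ATTRIBUTE_KEYS = {"size", "tier"}      # hypothetical attribute keys

def inline_volume_violations(pod):
    """Return readable policy violations for inline CSI volumes in a Pod manifest."""
    violations = []
    for volume in pod.get("spec", {}).get("volumes", []):
        csi = volume.get("csi")
        if csi is None:
            continue  # not an inline CSI volume, nothing to check here
        name = volume.get("name", "<unnamed>")
        driver = csi.get("driver")
        if driver not in ALLOWED_DRIVERS:
            violations.append(f"{name}: driver {driver!r} is not approved")
        extra_keys = set(csi.get("volumeAttributes") or {}) - ALLOWED_ATTRIBUTE_KEYS
        for key in sorted(extra_keys):
            violations.append(f"{name}: attribute {key!r} is not approved")
    return violations

pod = {"spec": {"volumes": [
    {"name": "scratch", "csi": {"driver": "other.csi.example.com",
                                "volumeAttributes": {"iops": "50000"}}},
    {"name": "config", "configMap": {"name": "app-config"}},
]}}
for problem in inline_volume_violations(pod):
    print(problem)
```

Running the same function in CI against rendered manifests gives app teams feedback before a cluster-side policy rejects the Pod.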

Standardizing Inline Volume Requests Without Creating YAML Sprawl

Inline volumes can spread storage settings across many manifests, so the key optimization goal is repeatability. Put the “how” into a small set of approved templates, then keep the “what” inside application deployment logic.

In practice, most teams win by limiting the number of allowed inline configurations. They define a small menu of scratch tiers, such as “default scratch” and “high-IO scratch.” They also align each tier to a known storage pool, network path, and QoS policy on the backend.
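
The "small menu" idea can be sketched as a lookup table that maps a tier name to a fixed volume definition, so application code only chooses a tier and never writes driver parameters. The tier names follow the examples above; the driver name and attribute keys are hypothetical.

```python
import copy

# Hypothetical menu: each tier pins the driver and its parameters in one place.
SCRATCH_TIERS = {
    "default-scratch": {
        "driver": "scratch.csi.example.com",
        "volumeAttributes": {"tier": "default", "size": "10Gi"},
    },
    "high-io-scratch": {
        "driver": "scratch.csi.example.com",
        "volumeAttributes": {"tier": "high-io", "size": "50Gi"},
    },
}

def scratch_volume(name, tier):
    """Return a Pod `volumes` entry for an approved scratch tier."""
    if tier not in SCRATCH_TIERS:
        raise ValueError(
            f"unknown scratch tier {tier!r}; choose one of {sorted(SCRATCH_TIERS)}"
        )
    # Deep-copy so callers cannot mutate the shared template by accident.
    return {"name": name, "csi": copy.deepcopy(SCRATCH_TIERS[tier])}

print(scratch_volume("scratch", "high-io-scratch"))
```

Because unknown tiers raise an error instead of falling back to a default, a typo in a deployment script fails early rather than silently landing on the wrong storage pool.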

This approach supports executive goals as well. It lowers operational risk, and it makes spending and performance easier to predict across clusters, environments, and teams.

Where Inline CSI Volumes Fit Inside Kubernetes Storage Choices

Inline volumes sit next to PVC-backed volumes, host-local paths, and ephemeral volume types. They do not replace PVCs. Instead, they fill the gap for Pod-scoped storage that should come and go with the workload.

If you run mixed workloads, you typically want one storage platform that supports both “scratch” and “state.” That is where the Kubernetes Storage strategy matters. When the same backend provides ephemeral and persistent paths, you reduce tool sprawl, and you keep one set of metrics, alerts, and runbooks.

For storage architects, the key is to align the data lifecycle with the storage lifecycle. Scratch data should not drive long-term capacity planning. Durable data should not depend on Pod lifetime.

Kubernetes CSI Inline Volumes and NVMe/TCP for Low-Latency Scratch I/O

Many inline volume use cases stress small-block random I/O and high concurrency. NVMe/TCP can support those workloads over standard Ethernet, which helps teams scale without specialized fabrics.

NVMe/TCP also fits well when you run Software-defined Block Storage across racks or zones. You keep a consistent network model, and you avoid protocol fragmentation. When you pair NVMe/TCP with a user-space dataplane like SPDK, you often improve CPU efficiency for the storage path, which matters in dense Kubernetes clusters.

This matters for cost. The CPU that handles storage overhead does not run your apps. A more efficient I/O stack can raise pod density and reduce the need for extra nodes.

Measuring and Benchmarking Kubernetes CSI Inline Volumes Under Real Pod Churn

Benchmarking inline volumes requires both storage metrics and orchestration metrics. Storage throughput and IOPS only tell part of the story. Mount and teardown timing can limit scale-out jobs, CI workloads, and batch pipelines.

Measure these signals:

- Latency distribution, including p50, p95, and p99, because tail latency drives queueing and backpressure.

- IOPS and throughput at realistic concurrency, because single-pod tests often mislead.

- Pod startup impact, because the kubelet must complete the CSI publish call for each inline volume before the Pod's containers can start.

- Failure behavior during drains and reschedules, because inline volumes depend on clean attach, mount, unmount, and cleanup.
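
For the latency signal, a minimal sketch of computing p50, p95, and p99 from raw per-I/O samples is shown below. It uses the nearest-rank definition of a percentile; benchmarking tools may interpolate instead, so small differences against their reports are expected.

```python
import math

def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("percentile() needs at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]

def latency_summary(samples_ms):
    """Summarize latency samples (milliseconds) at the three percentiles named above."""
    return {f"p{p}": percentile(samples_ms, p) for p in (50, 95, 99)}

# Synthetic example: 98 fast I/Os plus two slow outliers.
samples = [1.0] * 98 + [50.0, 200.0]
print(latency_summary(samples))
```

In this synthetic sample the median and p95 stay at the fast value while p99 lands on an outlier, which is the pattern that averages hide.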

Run tests that look like production. Use the same node pools, the same network policies, and the same noisy-neighbor conditions you expect at peak hours.
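
Pod startup impact can be approximated from fields Kubernetes already records. The sketch below takes a Pod object as returned by `kubectl get pod -o json` and measures the time from `metadata.creationTimestamp` to the `Ready` condition. This interval also includes scheduling and image pulls, so it is only meaningful when compared against a baseline Pod that is identical apart from the inline volume.

```python
from datetime import datetime, timezone

def _parse(timestamp):
    # Kubernetes serializes these timestamps as RFC 3339 in UTC, e.g. "2024-05-01T12:00:00Z".
    return datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)

def seconds_to_ready(pod):
    """Seconds from Pod creation until Ready became True, or None if the Pod is not Ready."""
    created = _parse(pod["metadata"]["creationTimestamp"])
    for condition in pod.get("status", {}).get("conditions", []):
        if condition.get("type") == "Ready" and condition.get("status") == "True":
            ready_at = _parse(condition["lastTransitionTime"])
            return (ready_at - created).total_seconds()
    return None

# Hand-written sample with only the fields the function reads.
sample = {
    "metadata": {"creationTimestamp": "2024-05-01T12:00:00Z"},
    "status": {"conditions": [
        {"type": "PodScheduled", "status": "True", "lastTransitionTime": "2024-05-01T12:00:01Z"},
        {"type": "Ready", "status": "True", "lastTransitionTime": "2024-05-01T12:00:09Z"},
    ]},
}
print(seconds_to_ready(sample))
```

Collecting this value for every Pod in a churn test gives an orchestration-side distribution to set beside the storage latency percentiles.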

Approaches That Improve Inline Volume Performance in Production

The best gains come from removing bottlenecks that sit outside the raw storage device. Apply these moves in a consistent order:

- Keep inline volumes for scratch data, and move durable state to PVCs with clear StorageClass policies.

- Place I/O-heavy Pods on node pools with predictable network paths to the storage layer, especially when you run NVMe/TCP.

- Cap bursty jobs with QoS so one build farm does not starve a customer-facing service.

- Track p99 latency and CSI operation time in the same dashboard, then tune based on both signals.

- Reuse validated templates so teams do not invent new parameters per service.
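
The node-placement step above can be expressed with a standard `nodeSelector`. The sketch below adds one to an existing Pod manifest; the label key `example.com/storage-pool` and the pool name are hypothetical and would need to match labels your platform team applies to nodes.

```python
import copy

def pin_to_node_pool(pod, pool, label_key="example.com/storage-pool"):
    """Return a copy of the Pod manifest that only schedules onto nodes labeled for `pool`."""
    pinned = copy.deepcopy(pod)  # leave the caller's manifest untouched
    selector = pinned.setdefault("spec", {}).setdefault("nodeSelector", {})
    selector[label_key] = pool
    return pinned

base = {"apiVersion": "v1", "kind": "Pod", "metadata": {"name": "etl"},
        "spec": {"containers": [{"name": "app", "image": "busybox:1.36"}]}}
pinned = pin_to_node_pool(base, "nvme-tcp-a")  # hypothetical pool name
print(pinned["spec"]["nodeSelector"])
```

A `nodeSelector` is the simplest option; node affinity rules offer softer preferences when strict pinning would leave Pods unschedulable.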

Side-by-Side Options for Inline and Persistent Volume Models

Most teams choose between inline volumes and PVCs based on lifecycle and governance. The table below frames the trade-offs in a way that maps to day-2 operations.

| Option | Lifecycle | Best fit | Governance model | Main drawback |
|---|---|---|---|---|
| CSI inline volume | Pod-scoped | Cache, scratch, staging | App templates plus policy guardrails | Parameter sprawl if teams freestyle |
| PVC with StorageClass | Independent of Pod | Databases, queues, durable state | Central policy with claims | More objects, but better control |
| Node-local path | Node-scoped | Tight node affinity, niche cases | Per-node rules | Low portability, higher risk |

Kubernetes CSI Inline Volumes with Simplyblock™ for Predictable Scratch Performance

Simplyblock™ targets predictable performance for Kubernetes Storage by delivering Software-defined Block Storage over NVMe/TCP, with a user-space dataplane based on SPDK principles. That combination matters for inline volumes because scratch workloads often burst and collide.

Simplyblock supports multi-tenancy and QoS, so platform teams can protect tier-1 services while still letting ephemeral jobs move fast. It also supports flexible deployment models, including hyper-converged, disaggregated, and mixed designs. That flexibility helps when different clusters demand different shapes, but leadership wants one operating model.

The practical outcome is simple: teams keep one storage platform, run both ephemeral and persistent paths, and enforce predictable behavior through policy.

What to Expect Next for Pod-Scoped CSI Storage

Inline volume adoption will keep rising in pipelines and batch-heavy platforms, especially where teams want fast scratch space without extra objects. At the same time, platform teams will push harder on policy-driven templates, safer defaults, and better observability for CSI operation timing.

Hardware trends also matter. DPUs and IPUs can offload parts of the storage and network path, which can reduce CPU pressure in large clusters. As these designs mature, Kubernetes Storage platforms that already optimize the I/O path will have an easier path to adopt offload options.

Related Terms

Teams often review these glossary pages alongside Kubernetes CSI Inline Volumes when they set standards for Kubernetes Storage, NVMe/TCP, and Software-defined Block Storage.

CSI (Container Storage Interface)

HostPath

Tail Latency

Zero Copy I/O

Questions and Answers

Kubernetes CSI Inline Volumes allow volumes to be defined directly within pod specifications instead of using PersistentVolumeClaims. These are ideal for short-lived, ephemeral workloads. However, they must be supported by the installed Container Storage Interface (CSI) driver.

Use CSI Inline Volumes for ephemeral use cases such as init containers, short-lived pods, or tightly scoped test environments. For production-grade stateful workloads, it’s better to use persistent storage for Kubernetes to ensure durability and recoverability.

Inline volumes do not offer the full PVC feature set. They typically don’t support expansion, snapshots, or reuse across pods. If you need those features, use block storage provisioned via dynamic PVCs with a CSI-compliant backend.

Not all CSI drivers support inline volumes. The driver’s CSIDriver object must list Ephemeral in its volumeLifecycleModes field. Simplyblock’s supported technologies focus on persistent, high-performance workloads, where PersistentVolumeClaims are generally preferred over inline volumes.
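
Driver support can be checked from the CSIDriver object itself, for example the JSON from `kubectl get csidriver <name> -o json`. The sketch below reads `spec.volumeLifecycleModes` and treats a missing field as `Persistent` only, which is the Kubernetes default. The driver names in the sample objects are hypothetical.

```python
def supports_inline_volumes(csi_driver):
    """True if the CSIDriver object advertises the Ephemeral lifecycle mode."""
    modes = csi_driver.get("spec", {}).get("volumeLifecycleModes") or ["Persistent"]
    return "Ephemeral" in modes

# Hand-written sample objects with hypothetical driver names.
both_modes = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "CSIDriver",
    "metadata": {"name": "scratch.csi.example.com"},
    "spec": {"volumeLifecycleModes": ["Persistent", "Ephemeral"]},
}
persistent_only = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "CSIDriver",
    "metadata": {"name": "block.csi.example.com"},
    "spec": {},
}
print(supports_inline_volumes(both_modes), supports_inline_volumes(persistent_only))
```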

Inline volumes lack the lifecycle separation that PVCs provide, making them harder to manage, secure, and audit. In multi-tenant Kubernetes environments, using PVCs with RBAC and storage classes is a safer and more scalable choice.