NetApp Trident

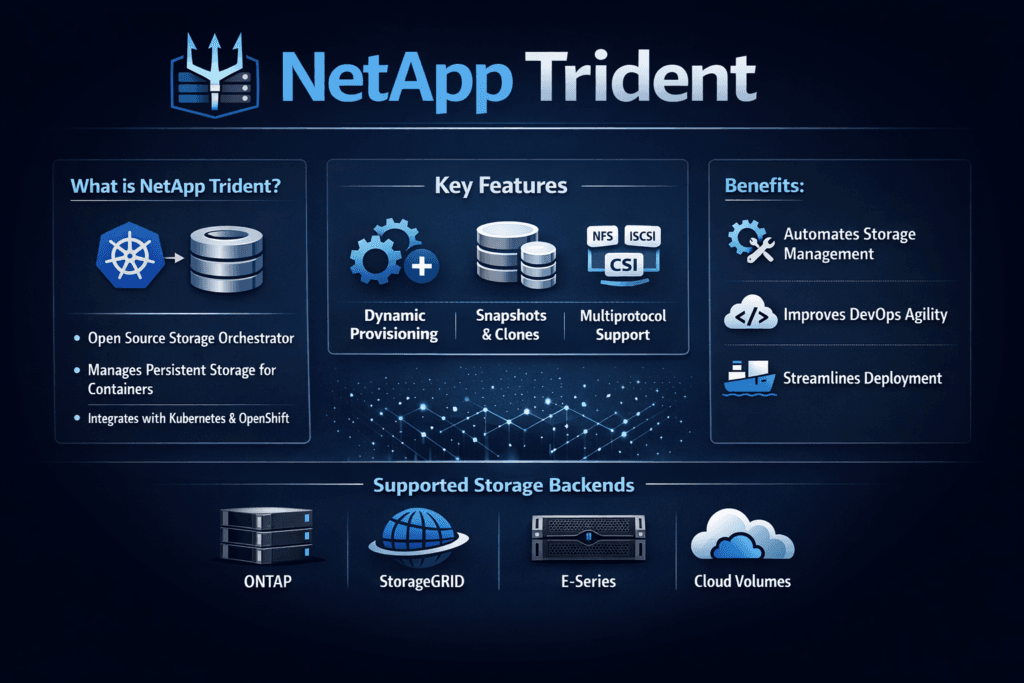

NetApp Trident is NetApp’s open-source storage orchestrator for containers. It connects Kubernetes and OpenShift to NetApp storage backends through the Container Storage Interface (CSI), so teams can provision persistent volumes with StorageClasses and PersistentVolumeClaims.

Trident handles the storage control plane tasks that platform teams care about: dynamic provisioning, resize workflows, and clean lifecycle behavior during node changes. Your storage system still delivers the data services, while Trident translates Kubernetes intent into backend actions.

This matters most for stateful apps. When storage provisioning stays consistent, teams ship faster, reduce ticket volume, and avoid “hand-built” volumes that break during upgrades. Kubernetes Storage also benefits from clear policy tiers, because developers stop guessing which storage option fits each workload.

How NetApp Trident Works with CSI and OpenShift

Trident plugs into the cluster as a CSI-based component and responds to volume requests that come from Kubernetes objects. A StorageClass expresses policy, and the PVC triggers Trident to create a matching volume on a supported backend.
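As a rough illustration of that flow, the sketch below builds a StorageClass and a matching PVC as Python dictionaries and prints them as a JSON list that `kubectl apply -f -` can read. The provisioner name `csi.trident.netapp.io` and the `backendType` parameter follow Trident's documented conventions. The class name `gold-nas`, the claim name `app-data`, and the size are hypothetical.

```python
import json


def storage_class(name: str, backend_type: str) -> dict:
    """Build a StorageClass that routes claims to the Trident CSI provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.trident.netapp.io",
        # backendType selects which kind of Trident backend may serve this class.
        "parameters": {"backendType": backend_type},
        "allowVolumeExpansion": True,
    }


def pvc(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PVC; creating it is what triggers dynamic provisioning."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


# Hypothetical names and size, for illustration only.
sc = storage_class("gold-nas", "ontap-nas")
claim = pvc("app-data", sc["metadata"]["name"], "10Gi")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, claim]}, indent=2))
```

The StorageClass carries the policy and the PVC only names the class, so developers never reference backend details directly.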

Operators typically pair Trident with clear platform rules. They define a small set of StorageClasses, map each class to a service tier, and standardize naming across clusters. This approach improves auditability and reduces drift.

Trident also introduces an extra layer to debug when latency spikes show up. Teams often inspect the pod, the node, the CSI logs, Trident settings, and the backend array before they find the real cause.

NetApp Trident vs Software-defined Block Storage on Bare Metal

Trident orchestrates external storage systems. Many enterprises like this model because it fits established storage teams and centralized operations. It also means performance depends on the backend system and the network path between compute and storage.

Software-defined Block Storage shifts the storage data plane onto commodity servers, often close to compute. It exposes volumes through CSI as well, but it scales out by adding nodes instead of scaling up a single array. This design can lower latency, increase throughput per rack, and reduce dependence on one platform.

For Kubernetes Storage at scale, the choice often comes down to operating model. If you want array-backed governance, Trident fits. If you want a SAN alternative with elastic scale on bare metal, software-defined designs often fit better.

NVMe/TCP in modern container storage designs

NVMe/TCP is the NVMe-oF transport that runs over standard Ethernet and TCP/IP. It lets teams start without specialized fabrics, while still shortening the storage data path compared with older network storage stacks.

Trident does not “enable” NVMe/TCP by itself. It provisions what the backend supports. If the backend exposes NFS or iSCSI, Trident follows that path. If you want NVMe/TCP end-to-end, you typically choose a backend platform that offers NVMe/TCP volumes through CSI.

How to test provisioning speed and I/O behavior

A clean test plan separates control-plane time from data-plane performance. First, measure time-to-volume for a new PVC, time-to-attach after a pod moves, and behavior during node drains. Next, measure average latency plus p95 and p99 latency under read-heavy, write-heavy, and mixed loads.
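For the data-plane half of that plan, a minimal sketch of the latency summary might look like the following. It uses the nearest-rank percentile definition, which is one of several common conventions, and the sample values are made up to show how two slow I/Os barely move the average but dominate p99.

```python
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    # Integer ceiling of pct% of the sample count, at least 1.
    rank = max(1, (len(ordered) * pct + 99) // 100)
    return ordered[rank - 1]


def summarize(latencies_ms):
    """Return the average plus p95 and p99 for a list of latencies in ms."""
    return {
        "avg": mean(latencies_ms),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }


# Hypothetical samples: 98 fast I/Os and 2 slow outliers.
samples = [1.0] * 98 + [20.0, 50.0]
print(summarize(samples))
```

Running the same summary separately for read-heavy, write-heavy, and mixed loads keeps the three profiles comparable.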

Collect node and network signals at the same time. CPU pressure, retransmits, and noisy neighbors often mimic “storage driver problems.” A joint view shortens the path to the real bottleneck.

Tuning moves that reduce storage tickets

Teams usually get the biggest wins by tightening policy and placement before they tweak backend details.

- Keep StorageClasses limited, and map each class to a clear tier, so teams stop guessing.

- Use topology-aware placement so pods and volumes stay aligned with zones or node pools when needed.

- Apply QoS limits to protect tail latency and stop one workload from dominating shared pools.

- Standardize on NVMe/TCP where you want scale on Ethernet, and reserve RDMA fabrics for the strictest tiers.
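The topology-aware placement item can be sketched with standard Kubernetes StorageClass fields. `volumeBindingMode: WaitForFirstConsumer` delays provisioning until a pod is scheduled, and `allowedTopologies` restricts where volumes may be created. The class name, the zone names, and the choice of provisioner below are assumptions for illustration.

```python
import json


def topology_aware_class(name, provisioner, zones):
    """Build a StorageClass that binds volumes only after pod scheduling."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Wait for a consuming pod so the volume lands where the pod runs.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowedTopologies": [
            {
                "matchLabelExpressions": [
                    {
                        "key": "topology.kubernetes.io/zone",
                        "values": list(zones),
                    }
                ]
            }
        ],
    }


# Hypothetical class and zone names.
sc = topology_aware_class("tier-1-zonal", "csi.trident.netapp.io", ["zone-a", "zone-b"])
print(json.dumps(sc, indent=2))
```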

Storage architecture comparison for platform teams

This table frames the decision in terms that map to cost, risk, and operational load.

| Approach | What you run in the cluster | What drives performance | Where ops work lands |
|---|---|---|---|
| Trident + NetApp backend | CSI orchestration plus external storage | Backend system limits and network path | Backend tuning, CSI logs, and lifecycle checks |
| Cloud block CSI driver | CSI driver and cloud volumes | Cloud tier, quota, and zone rules | Cloud constraints and multi-zone planning |
| Software-defined Block Storage | Distributed data plane plus CSI | NVMe/TCP data path and node resources | Capacity planning, policy, and QoS guardrails |

NetApp Trident Migration Paths with Simplyblock™

Many teams keep Trident where it fits their existing arrays, then add simplyblock for new Kubernetes Storage tiers that need elastic scale, higher throughput per node, or simpler operations on bare metal. Others migrate service by service by moving one StorageClass at a time, which keeps rollback simple.
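One way to plan a class-by-class migration is to group existing claims by StorageClass first. The sketch below assumes input shaped like the `items` array from `kubectl get pvc -A -o json`; the namespaces, claim names, and class names are hypothetical. A PVC's `storageClassName` cannot be changed in place, so each group still needs new claims and a data copy.

```python
from collections import defaultdict


def group_claims_by_class(pvc_items):
    """Map each StorageClass name to a sorted list of namespace/name claims."""
    groups = defaultdict(list)
    for item in pvc_items:
        # Claims without an explicit class rely on the cluster default.
        sc = item.get("spec", {}).get("storageClassName") or "<default>"
        meta = item["metadata"]
        groups[sc].append(f'{meta.get("namespace", "default")}/{meta["name"]}')
    return {sc: sorted(names) for sc, names in groups.items()}


# Hypothetical claims, shaped like kubectl's JSON output.
items = [
    {"metadata": {"namespace": "shop", "name": "orders-db"},
     "spec": {"storageClassName": "trident-gold"}},
    {"metadata": {"namespace": "shop", "name": "cart-cache"},
     "spec": {"storageClassName": "trident-silver"}},
    {"metadata": {"namespace": "ml", "name": "features"},
     "spec": {"storageClassName": "trident-gold"}},
]

for sc, claims in sorted(group_claims_by_class(items).items()):
    print(sc, claims)
```

Each group is a natural migration batch, and rolling back means pointing that one batch at its original class again.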

Simplyblock focuses on Software-defined Block Storage with NVMe/TCP support and an SPDK-based, user-space data path. Because the I/O path stays in user space and polls for completions instead of relying on interrupts, it spends less CPU per I/O and helps keep latency steady under mixed load.

What changes next in CSI-driven storage operations

Kubernetes platforms will keep pushing toward stricter policy and clearer day-2 automation. Teams will also compare NVMe/TCP designs more often as they modernize networks and chase stable p99 latency.

Vendors and platform teams will invest more in CSI health signals, so they can catch attach, mount, and resize issues before apps feel them. Expect more storage standards in cluster templates, too, because shared “golden” StorageClasses reduce drift across fleets.

Related Terms

Teams often review these glossary pages alongside NetApp Trident when they standardize Kubernetes Storage and Software-defined Block Storage.

CSI Driver vs Sidecar

CSIDriver Object

IO Path Optimization

NVMe-oF Discovery Controller

Questions and Answers

What is NetApp Trident?

NetApp Trident is a dynamic storage provisioner for Kubernetes, delivered as a CSI driver, that integrates NetApp storage systems. It automates persistent volume creation, but it depends on NetApp backends, which limits flexibility in multi-cloud or hybrid deployments that mix storage vendors.

How does NetApp Trident compare to simplyblock?

Trident provisions volumes only on NetApp backends. Simplyblock's CSI driver provisions Software-defined Block Storage over NVMe/TCP on commodity servers, which avoids dependence on a single array vendor. Provisioning speed and performance depend on the deployment, so measure both against your own workloads.

Does NetApp Trident support NVMe over TCP?

Only when the backend does. Recent Trident releases can provision NVMe/TCP volumes through the ONTAP SAN driver, but Trident does not add the protocol to a backend that exposes only NFS or iSCSI. Teams that want an NVMe/TCP-native data path regardless of array can evaluate simplyblock.

Is NetApp Trident suitable for AI pipelines and data-heavy workloads?

Trident can handle persistent volumes for these workloads, but it sits in the control plane, so latency depends on the backend protocol and the network path. When the backend serves NFS or iSCSI, AI pipelines and data-heavy tasks may see higher p99 latency than on NVMe-native designs.

What are the limitations of NetApp Trident?

NetApp Trident is tightly coupled with NetApp's storage platforms, which limits flexibility in open environments. Data services such as encryption and tenant isolation come from the backend rather than from Trident itself, and Trident does not provide a software-defined data plane of its own.