AI Storage Companies


AI Storage Companies build storage systems that keep AI pipelines moving without long stalls. They focus on fast reads, steady writes, and low p99 latency, because a single delay can idle costly GPUs. Training, feature engineering, and inference also stress storage in different ways, so the platform must handle mixed I/O without sharp drops.

Many teams first notice the issue as “slow training.” The root cause often sits in the storage path: small random reads for shards, large sequential pulls for checkpoints, and bursts of metadata work from many workers at once. The right design keeps bandwidth high, keeps latency steady, and keeps operations simple across clusters.

Software-defined Block Storage helps by turning raw media into a shared service with a clear policy. Kubernetes Storage adds another demand: it expects volumes to follow workloads during reschedules, upgrades, and node churn.

Choosing AI Storage Companies for GPU Data Pipelines

Comparing vendors gets easier when you separate marketing claims from observed platform behavior. Strong options hold stable performance during routine events, not only in a clean lab. Look for multi-tenant controls, clear QoS, and predictable rebuild behavior. Also, verify how the vendor handles data placement, network paths, and noisy neighbors when many jobs run at once.

Cost also matters, but cost follows architecture. A platform that protects p99 latency can reduce GPU idle time, which often saves more than any storage discount.
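A quick way to test the "GPU idle time outweighs storage discounts" claim for your own cluster is to price the idle time directly. The sketch below is plain arithmetic. The GPU count, hourly rate, hours, and idle fractions are all hypothetical placeholders, so substitute your own measurements.

```python
def gpu_idle_cost(num_gpus: int, hourly_rate: float, hours: float, idle_fraction: float) -> float:
    """Cost of GPU time spent waiting on storage, in the currency of hourly_rate."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return num_gpus * hourly_rate * hours * idle_fraction


# Hypothetical cluster: 64 GPUs at $2.50 per GPU-hour, 720 hours per month.
before = gpu_idle_cost(64, 2.50, 720, idle_fraction=0.20)  # storage stalls idle GPUs 20% of the time
after = gpu_idle_cost(64, 2.50, 720, idle_fraction=0.05)   # steadier p99 cuts idle time to 5%
savings = before - after
print(f"idle cost before: ${before:,.0f}, after: ${after:,.0f}, monthly savings: ${savings:,.0f}")
```

Compare the monthly savings figure against the storage discount you are being offered. If the discount is smaller, latency behavior is the better lever.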

🚀 Set QoS for AI Pipelines Across Kubernetes Storage

Use simplyblock to run Software-defined Block Storage with per-tenant limits, so GPU jobs avoid I/O spikes.

👉 Use simplyblock for Multi-Tenancy and QoS →

AI Storage Companies in Kubernetes Storage

Kubernetes Storage works best when storage behaves like an API-driven service. Pods move, nodes drain, and clusters roll through updates. Storage must keep up without forcing manual work.

AI stacks often mix workloads in one cluster. One team may run a vector service with tight latency targets while another team runs training jobs that push bandwidth hard. A Kubernetes-first design needs policies that isolate those workloads at the volume level. StorageClasses, quotas, and per-volume limits help keep service levels intact when demand spikes.

Software-defined Block Storage fits this model because it can apply performance and protection rules per workload, per namespace, and per tenant.
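Per-volume performance limits are commonly built on a token bucket. Each volume earns I/O "tokens" at a sustained rate and can spend a bounded burst, so one tenant's spike cannot drain another tenant's budget. The sketch below shows the general technique only. It is an assumption for illustration, not simplyblock's implementation, and the volume names and limits are hypothetical.

```python
class TokenBucket:
    """Token-bucket limiter for one volume: refills `rate` tokens/s, holds at most `burst`."""

    def __init__(self, rate: float, burst: float, now: float = 0.0) -> None:
        self.rate = rate        # sustained I/O operations per second
        self.burst = burst      # largest burst admitted at once
        self.tokens = burst     # start with a full bucket
        self.last = now         # time of the last refill, in seconds

    def try_acquire(self, now: float, n: float = 1.0) -> bool:
        """Admit n I/Os at time `now` if enough tokens remain; otherwise return False."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= n:
            self.tokens -= n
            return True
        return False  # the caller would queue or delay this I/O


# Hypothetical per-volume limits for two tenants sharing one storage pool.
limits = {
    "vector-svc": TokenBucket(rate=5_000, burst=500),
    "training": TokenBucket(rate=20_000, burst=2_000),
}

# A training burst of 3,000 I/Os at t=0 is capped at its own bucket size,
# while the vector service's budget stays untouched.
admitted = sum(limits["training"].try_acquire(now=0.0) for _ in range(3_000))
vector_ok = limits["vector-svc"].try_acquire(now=0.0)
```

In a real system, the caller passes a monotonic clock reading (for example `time.monotonic()`) as `now`. Separate buckets per volume are what keep the limits independent.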

AI Storage Companies and NVMe/TCP

NVMe/TCP extends NVMe semantics over standard Ethernet. That makes it practical for teams that want to scale without specialized fabrics, while still aiming for low overhead. NVMe/TCP also supports disaggregated designs, where compute scales on its own and storage scales as a shared pool.

For AI, NVMe/TCP often helps in two places. It can speed access to shared training data, and it can keep block latency steady for stateful services that sit next to the pipeline. Teams that move off legacy arrays also use NVMe/TCP as a SAN alternative when they want simpler day-2 operations.
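On a Linux host, attaching an NVMe/TCP volume is typically done with nvme-cli's `connect` subcommand: `-t tcp` selects the transport, `-a` the target address, `-s` the service port, and `-n` the subsystem NQN. Port 4420 is the IANA-registered NVMe-oF port. The sketch below only builds and validates the command. The address and NQN in the usage line are hypothetical, and running the command requires the `nvme-tcp` kernel module and root privileges.

```python
import ipaddress


def nvme_tcp_connect_cmd(traddr: str, nqn: str, port: int = 4420) -> list[str]:
    """Build an nvme-cli command that attaches an NVMe/TCP subsystem to this host."""
    ipaddress.ip_address(traddr)  # raises ValueError for a malformed IPv4/IPv6 address
    if not nqn.startswith("nqn."):
        raise ValueError("an NVMe Qualified Name starts with 'nqn.'")
    if not 0 < port < 65536:
        raise ValueError("port must be a valid TCP port")
    return ["nvme", "connect", "-t", "tcp", "-a", traddr, "-s", str(port), "-n", nqn]


# Hypothetical target address and subsystem NQN.
cmd = nvme_tcp_connect_cmd("10.0.0.5", "nqn.2023-01.io.example:training-data")
# subprocess.run(cmd, check=True)  # uncomment on a host with nvme-cli installed
```

Once connected, the namespace appears as a local block device (for example `/dev/nvme1n1`). That is what lets a CSI driver hand it to pods like any other disk.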

Measuring and Benchmarking Platform Fit

A useful benchmark matches real AI traffic. Pure sequential tests can hide the small I/O patterns that stall pipeline stages. Mixed profiles reveal more: random reads for shards, writes for logs and checkpoints, and bursts from many workers.
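One way to produce that mixed traffic is a single fio run with several concurrent jobs. On the fio command line, options before the first `--name` apply globally, and each `--name` starts a new job. Jobs run in parallel by default. The sketch below builds such a command. The job names, block sizes, queue depths, and worker counts are hypothetical stand-ins for shard reads, checkpoint writes, and log writes. `libaio` assumes Linux. Point it only at a scratch device, because the write jobs overwrite data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IoProfile:
    name: str
    rw: str        # fio access pattern, e.g. "randread", "write", "randwrite"
    bs: str        # block size, e.g. "4k" or "1m"
    iodepth: int   # outstanding I/Os per worker
    numjobs: int   # parallel workers, standing in for many pipeline workers


# Hypothetical mix approximating AI pipeline traffic.
AI_MIX = [
    IoProfile("shard-reads", "randread", "4k", 32, 8),
    IoProfile("checkpoint-writes", "write", "1m", 16, 2),
    IoProfile("log-writes", "randwrite", "16k", 8, 4),
]


def fio_mixed_cmd(profiles: list[IoProfile], target: str, runtime_s: int = 300) -> list[str]:
    """Build one fio invocation that runs all profiles concurrently against `target`."""
    cmd = [
        "fio",
        "--ioengine=libaio",
        "--direct=1",            # bypass the page cache so results reflect the storage path
        "--time_based",
        f"--runtime={runtime_s}",
        f"--filename={target}",
        "--output-format=json",  # per-job results, including latency percentiles
    ]
    for p in profiles:
        cmd += [f"--name={p.name}", f"--rw={p.rw}", f"--bs={p.bs}",
                f"--iodepth={p.iodepth}", f"--numjobs={p.numjobs}"]
    return cmd


cmd = fio_mixed_cmd(AI_MIX, "/dev/nvme1n1", runtime_s=60)
```

Running all jobs in one invocation matters here. Separate sequential runs would measure each pattern in isolation and hide the contention that stalls pipelines.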

Metrics should map to business outcomes. Track sustained bandwidth, p95 and p99 latency, and CPU cost per I/O on storage nodes. Then correlate those results with the GPU duty cycle during training. When latency stays flat under churn, training stays smooth.
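The sketch below illustrates both steps: computing a tail percentile from raw latency samples and correlating per-interval p99 latency with GPU utilization. It uses the nearest-rank percentile definition and a hand-written Pearson coefficient to stay dependency-free. The measurement series are hypothetical.

```python
import math
from statistics import fmean


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in 0-100) of a list of latency samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = fmean(xs), fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / (sx * sy)


# Hypothetical per-minute measurements from one training run.
p99_ms = [2.0, 2.1, 9.5, 2.2, 12.0, 2.0]          # storage p99 latency per interval
gpu_util = [0.95, 0.94, 0.61, 0.93, 0.52, 0.96]   # average GPU utilization per interval
r = pearson(p99_ms, gpu_util)  # strongly negative: latency spikes line up with idle GPUs
```

In practice, compute `percentile(window, 99)` for each interval's raw samples to build `p99_ms`. Then check whether the correlation with GPU utilization weakens after a storage change.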

Also, test behavior during change. Run the same profile while you trigger resync, rebuild, and snapshot operations, and while you simulate node loss. These events expose weak control planes and weak isolation.
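To make those change tests pass/fail rather than anecdotal, compare each event's p99 against a steady-state baseline from the same profile. In the sketch below, the 1.5x tolerance and all latency figures are hypothetical. Pick a threshold that matches your own service-level targets.

```python
def tail_regressions(baseline_p99_ms: float, event_p99_ms: dict[str, float],
                     max_ratio: float = 1.5) -> dict[str, float]:
    """Map each event whose p99 exceeds baseline * max_ratio to its p99/baseline ratio."""
    if baseline_p99_ms <= 0:
        raise ValueError("baseline p99 must be positive")
    return {
        event: p99 / baseline_p99_ms
        for event, p99 in event_p99_ms.items()
        if p99 > baseline_p99_ms * max_ratio
    }


# Hypothetical p99 results: same profile, steady state vs. during each event.
baseline = 2.0
during = {"resync": 2.4, "rebuild": 6.0, "snapshot": 2.1, "node-loss": 3.5}
flagged = tail_regressions(baseline, during)
```

Here `rebuild` and `node-loss` would be flagged, which points tuning effort at rebuild throttling and failover paths.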

Proven Levers to Improve Results

Teams can raise throughput and reduce stalls without a full rewrite. Start with the data path, then lock the gains in with policy.

- Use NVMe/TCP when you need shared access over Ethernet and predictable scale.

- Apply QoS limits per workload to reduce noisy-neighbor impact.

- Keep hot data close to compute in hyper-converged zones, and split storage out when you need independent scale.

- Test rebuild and resync under load, then tune for stable p99.

- Standardize on Software-defined Block Storage so teams share one policy model across clusters.

- Favor efficient user-space I/O paths where they fit, because CPU waste shows up as latency.
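CPU cost per I/O is straightforward to derive from two numbers you already collect: busy cores on the storage nodes (CPU-seconds consumed per second) and delivered IOPS. The sketch below shows the arithmetic. The two data paths and their core counts are hypothetical and do not describe any specific product.

```python
def cpu_us_per_io(busy_cores: float, iops: float) -> float:
    """Average CPU time per I/O in microseconds.

    busy_cores is CPU-seconds consumed per second, so dividing by IOPS
    gives CPU-seconds per I/O; multiply by 1e6 for microseconds.
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    return busy_cores * 1_000_000 / iops


# Hypothetical comparison of two data paths serving the same 1,000,000 IOPS.
path_a = cpu_us_per_io(busy_cores=8, iops=1_000_000)
path_b = cpu_us_per_io(busy_cores=2, iops=1_000_000)
```

A path that spends fewer CPU microseconds per I/O leaves more headroom before cores saturate, which is when queueing and tail latency tend to climb.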

What You Actually Compare

The table below groups AI-focused storage options by how teams deploy them, then maps each to common AI patterns in Kubernetes Storage and NVMe/TCP environments.

| Category | Best fit | Strength | Common trade-off | Notes for Kubernetes Storage |
|---|---|---|---|---|
| NVMe-oF SDS (block) | Stateful AI services, shared training data | Strong latency control, scale-out pools | Needs good network design | Often pairs well with CSI and per-volume QoS |
| Parallel file systems | Large shared datasets | High read bandwidth | More moving parts | Great for shared files, less ideal for small-block services |
| Managed cloud block | Fast start, managed ops | Simple provisioning | Costs and limits at scale | Watch caps, region spread, and egress |
| Object storage | Data lakes, artifacts | Low cost at scale | Not for tight latency | Use for staging and archive, not always for hot I/O |
| Legacy SAN arrays | Existing estates | Familiar tooling | Rigid scale, more fabric work | Often clashes with cloud-native workflows |

Predictable AI Data Paths with Simplyblock™

Simplyblock™ targets stable performance for AI pipelines by combining NVMe-first design with Software-defined Block Storage controls. Teams can use it to run Kubernetes Storage for mixed AI workloads with multi-tenancy and QoS, so one job cannot starve another.

NVMe/TCP support helps when you want shared pools over standard Ethernet without complex fabric changes. Simplyblock also uses an SPDK-based user-space data path, which can reduce CPU overhead in I/O-heavy setups and help keep tail latency steady when demand climbs.

Where the Market Is Going Next

AI infrastructure keeps pushing toward tighter latency targets, higher parallelism, and more automation. Kubernetes adoption continues to grow for end-to-end pipelines, so CSI behavior and day-2 operations matter more each quarter.

Hardware offload will also play a bigger role. DPUs, IPUs, and SmartNICs can move more work off host CPUs, which helps both throughput and consistency. AI Storage Companies that win long-term will prove stability during churn, not just speed in ideal tests.

Related Terms

Teams often review these glossary pages alongside AI Storage Companies when they set measurable targets for Kubernetes Storage and Software-defined Block Storage.

Storage Metrics in Kubernetes

QoS Policy in CSI

IO Path Optimization

Storage Composability

Questions and Answers

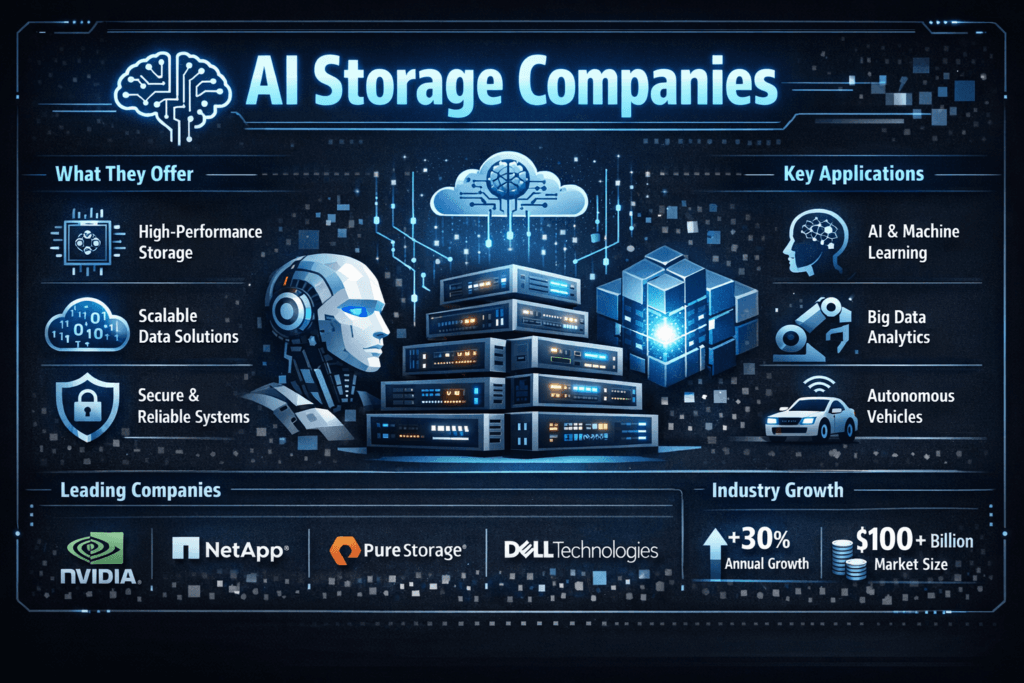

AI storage companies design infrastructure to handle massive data volumes with high IOPS and low latency. They optimize for GPU workloads, model training, and unstructured data, often using NVMe-based storage and distributed file systems for scalability.

Simplyblock offers Kubernetes-native NVMe storage with built-in snapshots, encryption, and NVMe over TCP support. Unlike traditional vendors, Simplyblock focuses on software-defined, cloud-native environments ideal for AI/ML pipelines and elastic infrastructure.

AI workloads require fast data access for training, inference, and feature engineering. Storage with low p99 latency and high throughput ensures GPU utilization stays optimal, reducing model training time and increasing pipeline efficiency.

AI storage should support parallel I/O, high throughput, and fast recovery. Features like incremental backup, NVMe acceleration, and dynamic scaling help meet performance needs across deep learning, analytics, and real-time inference workloads.

Many legacy vendors struggle to support container-native, distributed AI environments. Modern software-defined storage providers like Simplyblock offer faster provisioning, better scaling, and protocol support like NVMe/TCP designed for AI-scale performance.