Multi-Tenant Storage Architecture

Terms related to simplyblock

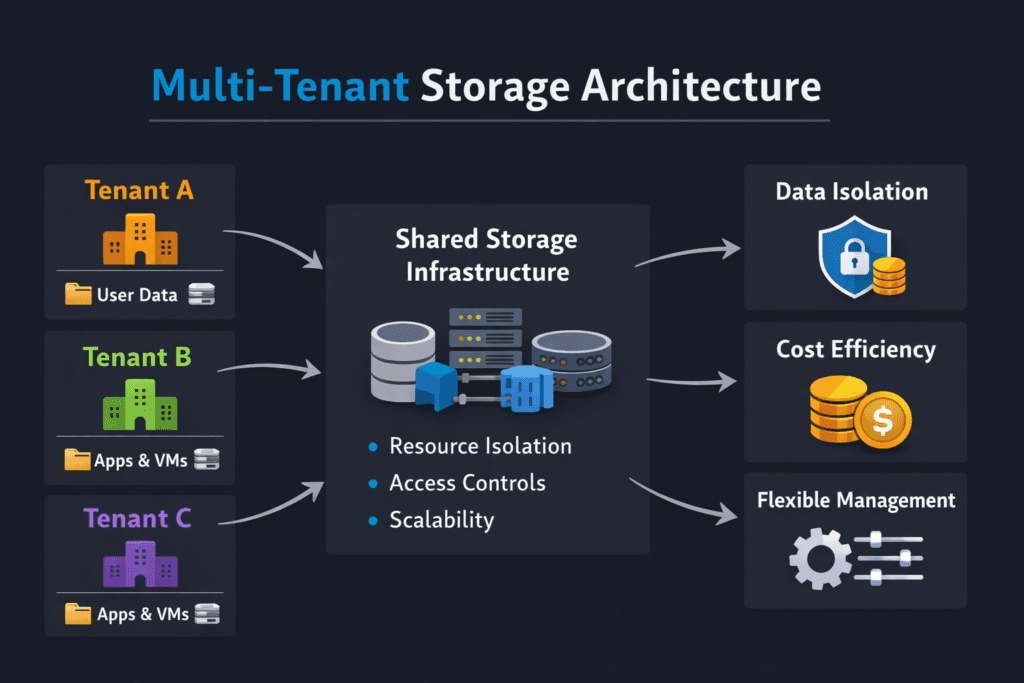

Multi-Tenant Storage Architecture describes how one storage platform serves many teams or customers while keeping data safe, performance steady, and operations manageable. It matters most in shared Kubernetes Storage environments, where one noisy workload can raise tail latency for other teams. A strong design focuses on isolation, predictable performance, clear failure domains, and fast self-service.

Multi-tenancy also changes how you think about risk. You no longer optimize a single workload in a single cluster. You optimize fairness, repeatability, and guardrails across many workloads that scale at different times.

Optimizing Multi-Tenant Storage Architecture with Policy-Driven Isolation

Multi-tenancy works best when policy drives behavior. Quotas, encryption boundaries, and QoS rules turn “shared” into “controlled.” Capacity limits stop runaway provisioning. Performance limits prevent one tenant from consuming queues and bandwidth that other tenants need for stable latency.
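The two limits described above can be sketched in a few lines of Python. This minimal sketch assumes a per-tenant capacity quota (checked at provisioning time) and a per-tenant IOPS cap enforced with a token bucket. The class names, fields, and the token-bucket choice are illustrative assumptions, not simplyblock's implementation.

```python
import time
from dataclasses import dataclass, field


@dataclass
class TenantPolicy:
    """Hypothetical per-tenant policy: a capacity quota and an IOPS cap."""
    capacity_quota_gib: int
    iops_limit: int


@dataclass
class TenantState:
    """Hypothetical runtime state used to enforce one tenant's policy."""
    policy: TenantPolicy
    provisioned_gib: int = 0
    tokens: float = field(init=False, default=0.0)
    last_refill: float = field(default_factory=time.monotonic)

    def __post_init__(self) -> None:
        # Start with a full bucket: one second of burst at the IOPS limit.
        self.tokens = float(self.policy.iops_limit)

    def try_provision(self, size_gib: int) -> bool:
        """Capacity limit: reject a volume that would exceed the quota."""
        if self.provisioned_gib + size_gib > self.policy.capacity_quota_gib:
            return False
        self.provisioned_gib += size_gib
        return True

    def try_io(self, now: float) -> bool:
        """Performance limit: token bucket refilled at iops_limit tokens/s."""
        elapsed = now - self.last_refill
        self.tokens = min(float(self.policy.iops_limit),
                          self.tokens + elapsed * self.policy.iops_limit)
        self.last_refill = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # the caller should queue or delay this I/O
```

In a real datapath you would pass `time.monotonic()` as `now`; taking it as a parameter keeps the sketch deterministic. A denied I/O is delayed rather than failed, so a bursty tenant slows itself down instead of filling queues that other tenants share.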

Isolation also needs observability. Platform teams should track per-tenant latency percentiles, IOPS, throughput, and error rates. Those signals help teams spot contention early and enforce SLO targets consistently.
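As a sketch of per-tenant observability, the helper below groups hypothetical `(tenant, latency_us, ok)` events, computes nearest-rank p50 and p99 latency and the error rate for each tenant, and flags tenants whose p99 exceeds an assumed SLO. The event format, function names, and SLO values are illustrative assumptions.

```python
import math
from collections import defaultdict


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in (0, 100]) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def tenant_report(events, p99_slo_us: dict[str, float]) -> dict[str, dict]:
    """Aggregate (tenant, latency_us, ok) events into per-tenant signals."""
    latencies = defaultdict(list)
    errors = defaultdict(int)
    for tenant, latency_us, ok in events:
        latencies[tenant].append(latency_us)
        if not ok:
            errors[tenant] += 1
    report = {}
    for tenant, samples in latencies.items():
        p99 = percentile(samples, 99)
        report[tenant] = {
            "ops": len(samples),
            "p50_us": percentile(samples, 50),
            "p99_us": p99,
            "error_rate": errors[tenant] / len(samples),
            # Tenants without an SLO entry are never flagged.
            "slo_breach": p99 > p99_slo_us.get(tenant, math.inf),
        }
    return report
```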

🚀 Enforce Multi-Tenant Storage Policies on NVMe/TCP, Natively in Kubernetes

Use simplyblock to apply quotas and QoS per tenant, and keep Kubernetes Storage predictable at scale.


Multi-Tenant Storage Architecture in Kubernetes Storage

Kubernetes increases pressure on shared storage because it makes provisioning fast and continuous. Namespaces, StorageClasses, and CSI workflows reduce friction, but they also widen the blast radius when guardrails are missing. A single namespace can exhaust capacity. A bursty tenant can create contention that shows up as p99 spikes for other teams.

A practical pattern combines three controls. Namespace-level quotas protect capacity. Per-volume QoS protects latency and throughput targets. Placement rules reduce hot-spotting by spreading heavy volumes across nodes and failure domains. When teams benchmark, they should test inside the cluster because cgroups, CPU limits, and scheduling shape what the application sees.
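The namespace-level quota can be expressed with a standard Kubernetes ResourceQuota, which supports `requests.storage`, `persistentvolumeclaims`, and per-StorageClass keys of the form `<class>.storageclass.storage.k8s.io/requests.storage`. The sketch below builds one as a Python dict and prints it as JSON, which `kubectl apply -f` also accepts. The namespace `team-a`, the StorageClass `fast-nvme`, and the limits are hypothetical placeholders; per-volume QoS parameters are vendor-specific and are not shown.

```python
import json


def storage_quota(namespace: str, storage_class: str,
                  total: str, per_class: str, max_pvcs: int) -> dict:
    """Build a Kubernetes ResourceQuota that caps storage for one namespace."""
    sc_prefix = f"{storage_class}.storageclass.storage.k8s.io"
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "storage-quota", "namespace": namespace},
        "spec": {
            "hard": {
                # Sum of storage requested by all PVCs in the namespace.
                "requests.storage": total,
                # Maximum number of PVCs the namespace may create.
                "persistentvolumeclaims": str(max_pvcs),
                # Cap on storage requested through one specific StorageClass.
                f"{sc_prefix}/requests.storage": per_class,
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical tenant namespace, StorageClass name, and limits.
    manifest = storage_quota("team-a", "fast-nvme", "2Ti", "1Ti", 50)
    print(json.dumps(manifest, indent=2))
```

With this quota in place, a PVC that would push the namespace past 2Ti of requested storage, or past 1Ti through `fast-nvme`, is rejected at admission time, which is exactly the "validate quotas" test described later.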

Multi-Tenant Storage Architecture and NVMe/TCP

NVMe/TCP fits multi-tenant designs because it scales on standard Ethernet and supports disaggregated storage without a specialized fabric. That helps platform teams grow capacity and performance by adding nodes while keeping the transport consistent across clusters.

The datapath still decides outcomes. CPU overhead per I/O can become the limiting factor long before NVMe media saturates. Efficient user-space I/O paths can reduce overhead and help keep tail latency steadier at higher concurrency, which matters when many tenants push IOPS at once.
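A back-of-envelope calculation shows why CPU cost per I/O matters: a host can complete at most cores × clock rate ÷ cycles per I/O operations per second, however fast the media is. The function below compares that ceiling with the media's IOPS. All numbers in the usage note are hypothetical and only illustrate the arithmetic.

```python
def effective_iops(cores: int, clock_hz: float, cycles_per_io: float,
                   media_iops: float) -> tuple[float, str]:
    """Return the achievable IOPS ceiling and which resource sets it."""
    # Upper bound on I/Os per second the CPUs can drive through the datapath.
    cpu_ceiling = cores * clock_hz / cycles_per_io
    if cpu_ceiling < media_iops:
        return cpu_ceiling, "cpu"
    return media_iops, "media"
```

With 4 cores at 3 GHz and media rated at 4,000,000 IOPS (assumed figures), a path costing 20,000 cycles per I/O tops out at 600,000 IOPS, and cutting that cost to 5,000 cycles raises the ceiling to 2,400,000 IOPS. Both cases are still CPU-bound, which is why per-I/O efficiency shapes multi-tenant headroom.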

Measuring Tenant Isolation and Noisy-Neighbor Risk

Benchmarking multi-tenancy means you test interference, not only peak performance. Start with one tenant and capture baseline latency percentiles. Add a second tenant that pushes random I/O and watch p99 drift. Add a third tenant that streams large reads and watch throughput stability. Repeat the tests during background work, such as rebuild or rebalance windows, since those conditions often expose real risk.

- Run a baseline test with one tenant, then add two noisy tenants with fixed limits, and measure p99 drift.

- Validate quotas by trying to exceed capacity from a single namespace.

- Trigger a controlled maintenance event, then measure rebuild impact on latency and throughput.

- Re-run the same suite after CSI, kernel, or topology changes, and compare deltas.
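The drift checks in the steps above reduce to comparing per-tenant p99 values between two runs: baseline vs. noisy tenants, or before vs. after a CSI, kernel, or topology change. This sketch assumes the p99 values, in microseconds, were already extracted from your benchmark tool's output; the tolerance is a hypothetical SLO budget.

```python
def p99_drift(baseline: dict[str, float], contended: dict[str, float],
              max_drift_pct: float) -> dict[str, tuple[float, bool]]:
    """Return (drift %, exceeds budget?) per tenant present in both runs."""
    result = {}
    for tenant, base_p99 in baseline.items():
        if tenant not in contended:
            continue  # tenant missing from the second run; nothing to compare
        drift_pct = (contended[tenant] - base_p99) * 100 / base_p99
        result[tenant] = (drift_pct, drift_pct > max_drift_pct)
    return result
```

For example, an OLTP tenant whose p99 moves from 200 µs to 260 µs shows 30% drift, which fails an assumed 20% budget, while a batch tenant moving from 1,000 µs to 1,050 µs (5%) passes.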

Approaches for Improving Isolation Without Over-Fragmenting Clusters

Teams often choose between “one shared pool” and “many dedicated pools.” Dedicated pools reduce contention, but they can waste capacity and slow provisioning. Shared pools improve utilization, but they demand stronger policy and clearer telemetry. Many platforms land on hybrid lanes: shared infrastructure with strict limits, plus reserved headroom for critical tenants.
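The hybrid-lane idea can be sketched as an admission check on a single shared pool: ordinary tenants draw only from the shared slice, while critical tenants may fall back to a reserved slice once the shared slice is full. The `Pool` class, IOPS-based accounting, and the fallback order are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Pool:
    """Hypothetical shared pool with IOPS headroom reserved for critical tenants."""
    total_iops: int
    reserved_iops: int  # only critical tenants may draw from this slice
    allocated_shared: int = 0
    allocated_reserved: int = 0

    def admit(self, requested_iops: int, critical: bool) -> bool:
        """Admit a volume's IOPS limit into the shared or reserved lane."""
        shared_capacity = self.total_iops - self.reserved_iops
        if self.allocated_shared + requested_iops <= shared_capacity:
            self.allocated_shared += requested_iops
            return True
        # Shared lane is full: only critical tenants may use reserved headroom.
        if critical and self.allocated_reserved + requested_iops <= self.reserved_iops:
            self.allocated_reserved += requested_iops
            return True
        return False
```

This keeps utilization high, since everyone shares one pool, while guaranteeing that critical tenants can still provision when batch tenants have consumed the shared lane.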

Hardware offload can help in dense clusters. DPUs/IPUs can reduce host CPU contention and improve fairness under load by moving parts of the storage datapath closer to the network edge.

Comparison of Multi-Tenant Storage Patterns

The table below compares common patterns teams use when they design multi-tenant storage for Kubernetes.

| Pattern | Isolation strength | Efficiency | Ops effort | Best fit |
|---|---|---|---|---|
| Shared pool + quotas + QoS | High (policy-based) | High | Medium | Multi-team Kubernetes platforms |
| Per-tenant storage pools | Very high | Medium | Medium | Regulated tenants, strict chargeback |
| Per-tenant clusters | Maximum | Low–Medium | High | Hard isolation, separate lifecycles |
| Hybrid lanes (shared + reserved) | High | High | Medium | Mixed OLTP and batch tenants |

Simplyblock™ for Multi-Tenant Storage That Stays Predictable

Simplyblock™ supports multi-tenant Software-defined Block Storage with per-tenant controls that help keep shared clusters stable. Teams can segment tenants, apply quotas and QoS, and reduce noisy-neighbor impact in Kubernetes Storage environments.

Simplyblock also supports NVMe/TCP for standard Ethernet deployments and uses SPDK-based, user-space design choices to reduce datapath overhead.

Where Multi-Tenant Storage Design Is Going Next

Teams increasingly standardize policy across clusters. They define quota and QoS policies once, then enforce them everywhere. They also invest in workload replay so they can test real tenant behavior during upgrades and topology changes, not only synthetic averages.

Expect more automation that links telemetry to guardrails. Better observability will help teams separate CPU-bound, network-bound, and media-bound cases quickly and apply the right policy response without trial and error.
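As a rough illustration of linking telemetry to a policy response, the heuristic below labels a contention case as CPU-bound, network-bound, or media-bound by picking the most saturated resource. The utilization inputs (fractions from 0 to 1) and the 0.85 threshold are assumptions; a production system would use richer signals such as queue depths and latency breakdowns.

```python
def classify_bottleneck(cpu_util: float, net_util: float, media_util: float,
                        threshold: float = 0.85) -> str:
    """Label the most saturated resource, or 'unconstrained' if none is hot.

    Utilizations are fractions in [0, 1]; the default threshold is an assumption.
    """
    signals = {
        "cpu-bound": cpu_util,
        "network-bound": net_util,
        "media-bound": media_util,
    }
    label, value = max(signals.items(), key=lambda kv: kv[1])
    return label if value >= threshold else "unconstrained"
```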

Related Terms

Teams review these alongside Multi-Tenant Storage Architecture to set measurable isolation and performance targets in Kubernetes Storage.

- Storage Quality of Service (QoS)

- Storage offload on DPUs

- MAUS Architecture

- Kubernetes capacity tracking for storage

Questions and Answers

Multi-tenant storage systems isolate workloads using per-tenant encryption, dedicated logical volumes, and namespace-based access controls. With platforms like simplyblock, each tenant can be assigned unique encryption keys and IOPS limits to prevent noisy-neighbor effects.

Challenges include maintaining security boundaries, performance isolation, and reliable access control. Proper implementation requires CSI support, per-volume encryption, and resource throttling. Simplyblock solves this by offering multi-tenant persistent volumes with integrated replication and encryption.

NVMe over TCP fits well in multi-tenant environments because it delivers fast access over standard Ethernet. Combined with tenant-aware volume provisioning, it can provide both high performance and secure data separation at scale.

Cloud-native databases, SaaS platforms, and internal platform teams benefit from shared yet isolated storage backends. In Kubernetes environments, multi-tenant storage lets many users and applications share one secure, scalable, high-performance storage layer.

Simplyblock enables tenant-aware storage by offering per-volume encryption, fine-grained access control, and seamless CSI integration. Each tenant gets isolated resources with the flexibility to scale, snapshot, or replicate volumes, which suits stateful Kubernetes workloads and DBaaS use cases.