SPDK vs Kernel Storage Stack

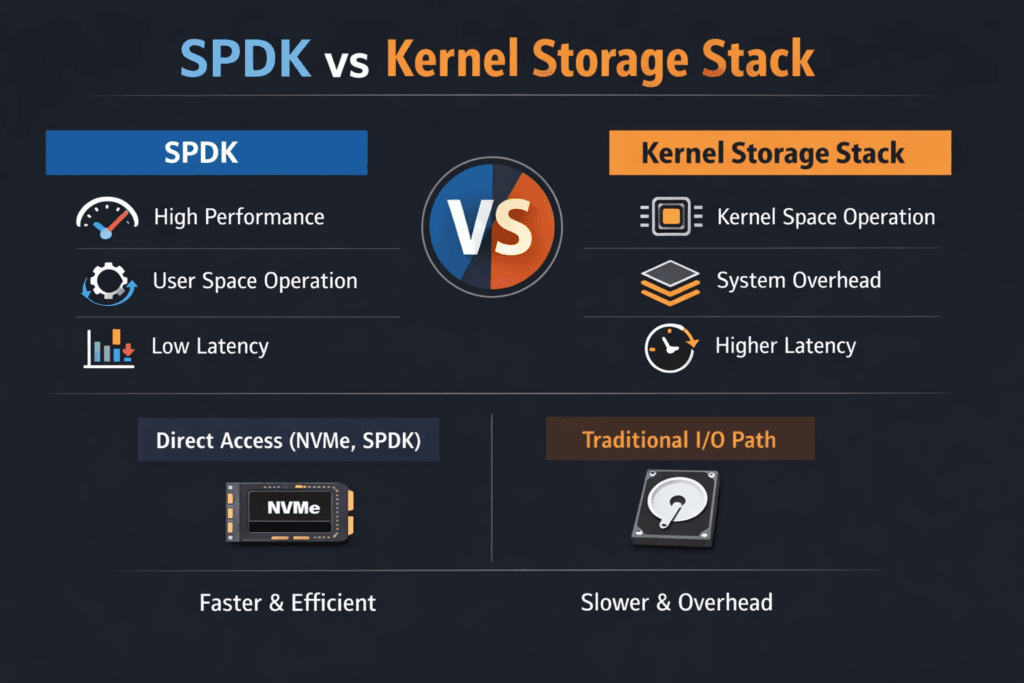

This comparison covers two ways to run the block I/O fast path from an application to local NVMe devices or to networked NVMe-oF targets. The Linux kernel stack routes I/O through the kernel’s block layer, schedulers, and drivers. SPDK (Storage Performance Development Kit) runs the data path in user space and often uses polling loops and pinned threads to reduce context switches and extra memory copies.

Teams usually pick between these paths based on two goals: operational simplicity and performance stability. The kernel stack often wins on familiarity and broad hardware support. SPDK often wins when CPU efficiency and tail latency drive the business impact, especially for high-IOPS services on Kubernetes Storage and scale-out storage tiers.

SPDK vs Kernel Storage Stack – what changes in the I/O path

The kernel stack provides a general framework that supports many devices and workloads. It also layers scheduling, accounting, and safety features that ops teams value. That flexibility can cost CPU at high concurrency, and it can add latency variance when the host runs mixed workloads.

SPDK takes a narrower approach. It keeps the hot path in user space, and it optimizes for predictable, high-rate I/O. Many SPDK deployments pair with NVMe-oF targets that serve remote namespaces over Ethernet or other fabrics. The result often looks like “more IOPS per core” and tighter p99 latency when the system runs under sustained load.
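To make the polling idea concrete, here is a minimal sketch in plain Python. It is a toy model, not SPDK code: `ToyCompletionQueue` and `run_poller` are hypothetical names, and the queue is filled by hand rather than by a device. It shows only the shape of the loop: the thread keeps asking "is anything done?" instead of sleeping until an interrupt wakes it.

```python
from collections import deque


class ToyCompletionQueue:
    """Hypothetical stand-in for a device completion queue (not an SPDK API)."""

    def __init__(self):
        self._entries = deque()

    def post(self, io_id):
        # A real device would write completions; this toy model does it by hand.
        self._entries.append(io_id)

    def poll(self, max_completions):
        # Non-blocking: return whatever is ready right now, up to a batch limit.
        done = []
        while self._entries and len(done) < max_completions:
            done.append(self._entries.popleft())
        return done


def run_poller(cq, expected, batch=32, max_empty_polls=1_000_000):
    """Busy-poll until `expected` completions arrive or the spin budget runs out."""
    completed = []
    empty_polls = 0
    while len(completed) < expected and empty_polls < max_empty_polls:
        ready = cq.poll(batch)
        if ready:
            completed.extend(ready)
        else:
            empty_polls += 1  # CPU time spent spinning instead of sleeping
    return completed, empty_polls


cq = ToyCompletionQueue()
for io_id in range(100):
    cq.post(io_id)
completed, empty_polls = run_poller(cq, expected=100)
print(len(completed), empty_polls)
```

The `empty_polls` counter hints at the trade-off: a polling thread never pays for a wake-up, but it burns a core even when no I/O is pending, which is why such designs usually dedicate and pin cores to the poller.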

SPDK vs Kernel Storage Stack in Kubernetes Storage

Kubernetes Storage adds churn by design. Pods restart. Nodes drain. Volumes attach, detach, and remount all day. A storage backend needs automation and guardrails, not only a fast benchmark chart.

Kernel-based paths fit well when teams want standard tools, wide driver coverage, and predictable debugging workflows. SPDK-based paths help when performance tiers need stable tail latency across many tenants and high queue depth. CSI integration matters in both cases because Kubernetes relies on CSI to provision, attach, expand, snapshot, and clone volumes.

When you run Software-defined Block Storage in Kubernetes, the control plane should set intent (tiers, replicas, QoS), and the data plane should deliver that intent under load. If those layers do not align, p99 latency becomes the first visible symptom.

SPDK vs Kernel Storage Stack with NVMe/TCP and NVMe-oF

NVMe/TCP runs on standard Ethernet, which makes it a common choice for cloud-native and bare-metal clusters. SPDK supports NVMe-oF TCP targets and can serve namespaces over TCP with a user-space fast path.

Kernel initiators and targets can also support NVMe/TCP in many environments, and they benefit from mature kernel networking behavior. The trade-off usually shows up in overhead and tuning. User-space paths can reduce extra copies and kernel transitions. Kernel paths can simplify day-2 ops for teams that standardize on kernel tooling.

Network design sets the ceiling for both options. Queue parallelism, NUMA placement, NIC queues, and congestion behavior decide whether NVMe/TCP stays steady when concurrency rises.

Production-grade benchmarks that do not lie

A good benchmark answers one question: can the stack hold the latency target while it sustains the required throughput on real hardware and real paths? Synthetic single-host runs often miss the pain points that show up in Kubernetes.

Model mixed reads and writes. Include small blocks. Track p50, p95, and p99 latency. Then repeat the test while you trigger recovery events, because rebuilding and rebalancing work can raise tail latency fast. In Kubernetes Storage, run tests through real PVCs and StorageClasses so the CSI and network path match production.
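As a rough illustration of the percentile tracking described above, the sketch below computes p50, p95, and p99 from a list of per-I/O latencies using the nearest-rank method. The function names and the sample values are assumptions made for the example. In practice the samples would come from your benchmark tool's latency log.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of latency samples (same unit in, same unit out)."""
    if not samples:
        raise ValueError("no latency samples")
    ordered = sorted(samples)
    # Nearest-rank: the smallest value with at least pct% of samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples):
    """Return the three percentiles the text recommends tracking."""
    return {f"p{p}": percentile(samples, p) for p in (50, 95, 99)}


# Hypothetical latencies in microseconds: mostly fast, with a slow tail.
latencies_us = [100] * 95 + [400] * 3 + [2500] * 2
print(summarize(latencies_us))
```

In this made-up sample the median stays low while p99 lands on the slow tail, which is the pattern a mean or p50 alone would hide. Running the same summary before and during a rebuild makes the effect of recovery work on tail latency directly comparable.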

Also track CPU per I/O. If a design needs a lot of CPU to hit throughput, it raises cost and reduces headroom during failures.
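One simple way to express CPU per I/O is CPU time consumed divided by I/Os completed over the same interval. The helpers below are a sketch under that assumption. The function names and the numbers in the usage lines are hypothetical, and how you collect CPU time (for example from process or cgroup accounting) depends on your environment.

```python
def cpu_us_per_io(cpu_seconds, io_count):
    """CPU microseconds spent per completed I/O over a measurement interval."""
    if io_count <= 0:
        raise ValueError("io_count must be positive")
    return cpu_seconds * 1_000_000 / io_count


def iops_per_core(iops, cores_busy):
    """Throughput normalized by the number of fully busy cores."""
    if cores_busy <= 0:
        raise ValueError("cores_busy must be positive")
    return iops / cores_busy


# Hypothetical run: 8 CPU-seconds consumed while completing 2,000,000 I/Os.
print(cpu_us_per_io(8.0, 2_000_000))
# Hypothetical run: 1,000,000 IOPS sustained with 4 cores fully busy.
print(iops_per_core(1_000_000, 4))
```

Comparing these two figures between stacks on the same hardware and workload gives a cost view that raw IOPS numbers do not.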

When the kernel stack still makes sense

The kernel stack often fits best when you value broad coverage, simpler troubleshooting, and common security controls. It can also win for moderate I/O rates where overhead does not dominate. Teams that rely on standard tooling and want fewer moving parts often stick with the kernel path and still meet their SLOs.

Kernel innovation keeps moving, too. io_uring expands async I/O options inside the kernel ecosystem, which can help some workloads reduce overhead without switching stacks.

Tuning moves that reduce tail latency

- Pin hot threads and align them with NUMA locality and NIC queues to reduce jitter.

- Keep MTU consistent and avoid heavy oversubscription on storage traffic.

- Separate workload tiers and enforce QoS so noisy neighbors do not crush p99 latency.

- Validate p99 under mixed read/write load, not only pure reads.

- Re-test during failure, rebuild, and rebalance to confirm stability.
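The QoS item in the list above is often implemented with some form of rate limiting. The sketch below shows a token bucket that caps IOPS per tenant. It is a generic illustration, not a description of how simplyblock or SPDK enforce QoS. The `TokenBucket` class, its parameters, and the caller-supplied clock are all assumptions made for the example.

```python
class TokenBucket:
    """Toy per-tenant IOPS limiter: `rate_iops` sustained, `burst` I/Os of headroom."""

    def __init__(self, rate_iops, burst, now=0.0):
        self.rate = float(rate_iops)
        self.capacity = float(burst)
        self.tokens = float(burst)  # start full so a short burst passes at once
        self.last = now

    def allow(self, now, cost=1.0):
        """Return True if an I/O may be submitted at time `now` (seconds)."""
        # Refill in proportion to elapsed time, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# A noisy tenant submits 15 I/Os at t=0 against a burst allowance of 10.
bucket = TokenBucket(rate_iops=10, burst=10)
admitted = sum(bucket.allow(now=0.0) for _ in range(15))
print(admitted)
```

Passing the clock in as an argument keeps the sketch deterministic and easy to test. A real limiter would read a monotonic clock and would usually queue or delay rejected I/O rather than drop it.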

Side-by-side trade-offs for user-space vs kernel I/O

The table below summarizes typical outcomes teams see in production when they compare the two approaches.

| Area | Kernel storage stack | SPDK user-space stack |
|---|---|---|
| CPU efficiency | Good, varies by path | Often higher at scale |
| Tail latency under burst | Can vary with scheduler noise | Often tighter with pinned polling loops |
| Ops familiarity | Very high | Medium, needs user-space tuning |
| Hardware coverage | Broad | More focused on NVMe-centric setups |
| Fit for Kubernetes Storage tiers | Strong baseline | Strong for high-IOPS, multi-tenant tiers |

Simplyblock™ approach for policy control plus a fast data path

Simplyblock™ combines a control plane for Software-defined Block Storage with an SPDK-based data plane aimed at CPU efficiency and predictable latency. It targets Kubernetes-first environments where automation, tenant isolation, and QoS matter as much as raw throughput.

Teams can use NVMe/TCP for broad deployment on Ethernet and keep consistent storage policies across clusters. This pairing helps platform teams standardize tiers while keeping performance behavior easier to predict under mixed workloads.

What comes next – DPUs, offload, and hybrid designs

More teams now offload parts of networking and storage work to DPUs and SmartNICs. That shift can free host CPU for apps and smooth out jitter under load.

Hybrid designs also grow in popularity, where teams keep kernel tooling for general tiers and use user-space acceleration for strict latency tiers.

Questions and Answers

SPDK runs its data path in user space with polling I/O and CPU pinning, while the Linux kernel stack typically uses interrupt-driven I/O with context switches. SPDK often delivers lower latency and higher IOPS, which makes it a good fit for NVMe over TCP in performance-critical environments.

SPDK typically outperforms the kernel stack in terms of latency, IOPS, and CPU efficiency, especially under high concurrency. Its zero-copy, lockless architecture is optimized for software-defined block storage platforms needing predictable, low-latency behavior.

SPDK is ideal for dedicated storage appliances, NVMe-oF targets, and hyperscale environments where maximum I/O performance and CPU efficiency are critical. For general-purpose workloads or shared nodes, the kernel stack may still offer easier management and flexibility.

SPDK can be integrated with Kubernetes via NVMe-oF targets that expose volumes to the cluster. When paired with a CSI-based solution like Simplyblock, it supports high-performance persistent storage for stateful workloads in containerized environments.

Simplyblock describes its data plane as SPDK-based. It applies SPDK principles such as polling I/O and pairs them with encryption and distributed NVMe over TCP delivery. These capabilities enable low-latency storage for Kubernetes and VMs without requiring specialized hardware.