CSI Architecture


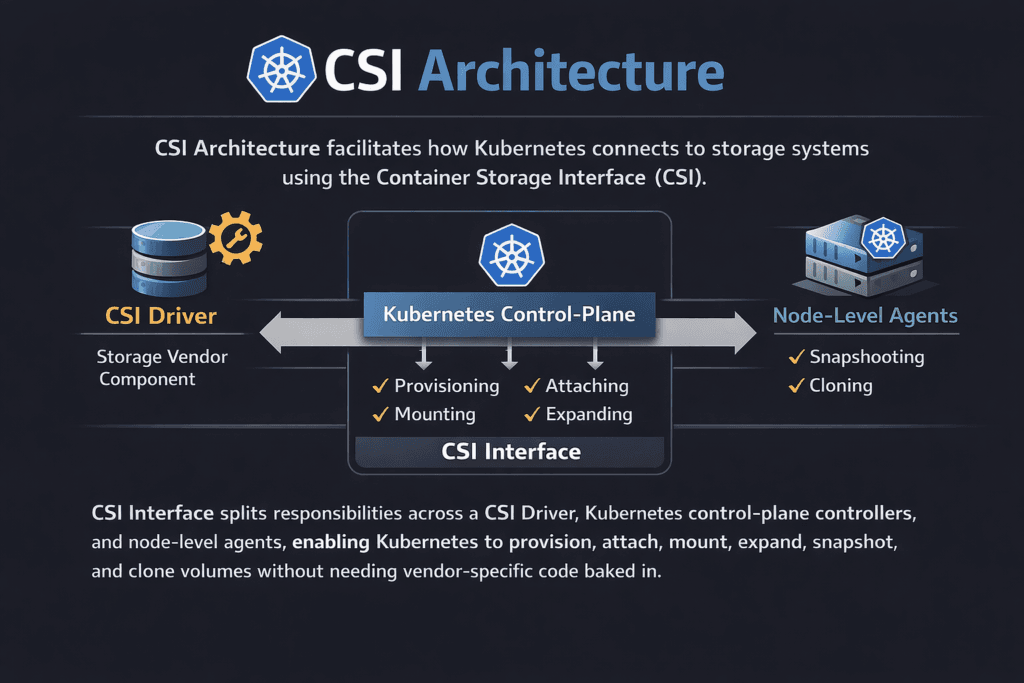

CSI Architecture describes how Kubernetes connects to storage systems through the Container Storage Interface (CSI). It splits responsibilities across a driver (the storage vendor component), Kubernetes control-plane controllers, and node-level agents. This separation lets Kubernetes provision, attach, mount, expand, snapshot, and clone volumes without baking vendor code into Kubernetes itself.

For executives, CSI matters because it reduces vendor lock-in and makes it faster to change storage backends. For operators, CSI matters because it turns storage tasks into repeatable API calls. That repeatability is the base layer for Kubernetes Storage at scale, especially when teams run stateful apps and multi-tenant clusters.

CSI Architecture components that operators touch daily

CSI uses a clear split between “controller” logic and “node” logic. The controller side handles tasks like volume create, delete, attach, detach, snapshot, and resize. The node side handles staging and publishing, which is where the driver formats the device if needed, mounts it, and exposes the path to pods.
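The split above can be sketched as a small lookup table. The RPC names come from the CSI specification, but the list is a partial selection for illustration, and the `service_for` helper is a hypothetical name rather than part of any driver.

```python
# Partial list of CSI RPCs, grouped by the service that exposes them.
CSI_SERVICES = {
    "controller": [
        "CreateVolume",
        "DeleteVolume",
        "ControllerPublishVolume",    # attach
        "ControllerUnpublishVolume",  # detach
        "CreateSnapshot",
        "ControllerExpandVolume",     # resize
    ],
    "node": [
        "NodeStageVolume",      # prepare the volume on a staging path
        "NodePublishVolume",    # expose the volume at the pod's target path
        "NodeUnpublishVolume",
        "NodeUnstageVolume",
    ],
}


def service_for(rpc: str) -> str:
    """Return which CSI service ("controller" or "node") lists the RPC."""
    for service, rpcs in CSI_SERVICES.items():
        if rpc in rpcs:
            return service
    raise KeyError(f"unknown or unlisted RPC: {rpc}")


print(service_for("CreateVolume"))
print(service_for("NodePublishVolume"))
```

A lookup like this can help when reading driver logs, since the RPC name tells you whether to inspect the controller pod or the node pod.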

Most real deployments also run CSI sidecars. Sidecars watch Kubernetes objects and call the CSI driver over gRPC when something changes. Common sidecars include external-provisioner, external-attacher, external-snapshotter, external-resizer, and node-driver-registrar.
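As a rough illustration of the watch-then-call pattern, the sketch below maps each sidecar to the object it reacts to and the CSI RPCs it typically issues. The `Sidecar` class and `sidecars_calling` helper are hypothetical names, and the mapping is simplified: real sidecars also make capability and health calls that are not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sidecar:
    watches: str      # Kubernetes object or mechanism the sidecar reacts to
    csi_calls: tuple  # main volume-lifecycle RPCs it sends to the driver


SIDECARS = {
    "external-provisioner": Sidecar(
        "PersistentVolumeClaim", ("CreateVolume", "DeleteVolume")),
    "external-attacher": Sidecar(
        "VolumeAttachment",
        ("ControllerPublishVolume", "ControllerUnpublishVolume")),
    "external-snapshotter": Sidecar(
        "VolumeSnapshotContent", ("CreateSnapshot", "DeleteSnapshot")),
    "external-resizer": Sidecar(
        "PersistentVolumeClaim", ("ControllerExpandVolume",)),
    # Registers the driver with kubelet; it does not drive volume lifecycle.
    "node-driver-registrar": Sidecar("kubelet plugin registration", ()),
}


def sidecars_calling(rpc: str) -> list:
    """Return the sidecars (sorted by name) that issue the given RPC."""
    return sorted(n for n, s in SIDECARS.items() if rpc in s.csi_calls)


print(sidecars_calling("CreateVolume"))
```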

This modular setup keeps upgrades safer. You can update a sidecar or a driver without rewriting Kubernetes.


CSI Architecture flows across provisioning, attaching, and mounting in Kubernetes Storage

In Kubernetes Storage, the flow starts when a team creates a PVC. The external-provisioner notices the claim and asks the CSI driver to create a volume. Next, Kubernetes schedules a pod. If the volume needs attachment, the external-attacher coordinates that step. Finally, kubelet triggers node calls that stage and publish the volume, which makes it usable inside the pod.
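The ordering described above can be written down as a short sequence. This is a simplified model, not driver code: `volume_bringup_steps` is a hypothetical helper, it assumes immediate volume binding (with delayed binding, provisioning follows scheduling), and it assumes the driver supports staging.

```python
def volume_bringup_steps(needs_attach: bool = True) -> list:
    """Return the ordered steps from PVC creation to a usable mount."""
    steps = [
        "PVC created",
        "external-provisioner -> CreateVolume",
        "scheduler places pod",
    ]
    if needs_attach:
        # Skipped for drivers that do not require a separate attach step.
        steps.append("external-attacher -> ControllerPublishVolume")
    steps += [
        "kubelet -> NodeStageVolume",
        "kubelet -> NodePublishVolume",
    ]
    return steps


for number, step in enumerate(volume_bringup_steps(), start=1):
    print(number, step)
```

Writing the sequence out makes it easier to see which component to inspect when a pod is stuck: anything before the scheduler step points at provisioning, anything after points at attach or node calls.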

Two practical details drive reliability:

- Topology rules decide where Kubernetes places the workload and its volume, which reduces cross-zone latency and avoids failure-domain mistakes.

- Lifecycle speed matters. Slow NodePublishVolume calls can turn a rolling upgrade into a long outage window for StatefulSets.
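One way to express a topology rule is a StorageClass that delays binding until the pod is scheduled and restricts volumes to named zones. The sketch below builds such a manifest as a Python dictionary and prints it as JSON. The class name, the provisioner `csi.example.com`, and the zone names are placeholders, and it assumes the driver reports the well-known `topology.kubernetes.io/zone` key; some drivers use their own topology keys.

```python
import json


def topology_aware_storage_class(name, provisioner, zones):
    """Build a StorageClass manifest that binds volumes after scheduling."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Wait for pod scheduling so the volume lands in the pod's zone.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowedTopologies": [
            {
                "matchLabelExpressions": [
                    {
                        "key": "topology.kubernetes.io/zone",
                        "values": list(zones),
                    }
                ]
            }
        ],
    }


# Placeholder values for illustration only.
manifest = topology_aware_storage_class(
    "fast-zonal", "csi.example.com", ["zone-a", "zone-b"])
print(json.dumps(manifest, indent=2))
```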

CSI Architecture with NVMe/TCP and Software-defined Block Storage

CSI does not limit what sits underneath it. Teams can run local SSDs, cloud volumes, SAN, or Software-defined Block Storage and still use the same Kubernetes objects. That is why CSI pairs well with modern storage planes that expose fast block access over fabrics.

When the data plane uses NVMe/TCP, cluster nodes reach remote NVMe devices over standard Ethernet. In practice, that can simplify rollout across racks and clusters, while keeping the storage interface consistent for apps.

A strong architecture still needs guardrails. Multi-tenancy and QoS keep one namespace from reshaping latency for the entire cluster, which helps protect p99 behavior for databases and queues.

Measuring CSI performance and reliability signals

CSI success shows up in day-2 metrics more than raw IOPS charts. Track how long the platform takes to complete common workflows: dynamic provisioning time, attach time, NodeStage/NodePublish time, resize completion time, and snapshot creation time.
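A minimal sketch of how such workflow timings might be summarized, assuming durations in seconds have already been collected per workflow. The sample numbers are invented for illustration, and `percentile` uses the nearest-rank method, which is one of several common definitions.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of samples; pct is in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(durations_by_workflow):
    """Return p50 and p99 for each workflow's list of durations."""
    return {
        name: {"p50": percentile(vals, 50), "p99": percentile(vals, 99)}
        for name, vals in durations_by_workflow.items()
    }


# Invented sample data, in seconds.
sample = {
    "provision": [1.2, 0.9, 1.1, 4.0, 1.0],
    "node_publish": [0.3, 0.4, 0.2, 0.3, 2.5],
}
for workflow, stats in summarize(sample).items():
    print(workflow, stats)
```

Tracking the p99 alongside the median matters here because a single slow mount is often what delays a rollout, even when the typical case looks healthy.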

Also measure failure behavior. Break a node, force a reschedule, and watch recovery time. If mounts stall, look at node plugin logs and sidecar logs first. If provisioning stalls, review external-provisioner events and driver responses.

Tuning actions that reduce mount delays and noisy-neighbor risk

- Set clear StorageClasses by tier, and map each tier to a distinct pool or policy set, so mixed workloads do not fight for the same backend limits.

- Use topology awareness for multi-zone clusters to keep volumes near workloads and reduce cross-zone traffic.

- Keep sidecar versions and driver versions aligned, and roll them with a controlled canary process.

- Watch NodePublishVolume latency during upgrades, and alert on spikes because they often predict app start delays.

- Apply QoS limits per tenant when the backend supports it, so batch scans do not drown log writes.
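The latency alert in the list above could be as simple as comparing a recent window against a baseline. The sketch below is one possible rule, not a recommended threshold: the function name, the factor of 3, and the minimum sample count are all assumptions to tune per environment.

```python
from statistics import median


def publish_latency_spike(baseline_s, recent_s, factor=3.0, min_samples=5):
    """Return True when the recent median exceeds factor x baseline median.

    Both arguments are lists of NodePublishVolume durations in seconds.
    """
    if len(baseline_s) < min_samples or len(recent_s) < min_samples:
        return False  # not enough data to judge either window
    return median(recent_s) > factor * median(baseline_s)


# Invented sample data, in seconds.
baseline = [0.3, 0.4, 0.3, 0.5, 0.4]
during_upgrade = [1.5, 2.0, 1.8, 0.4, 1.6]
print(publish_latency_spike(baseline, during_upgrade))
```

Using the median keeps one slow outlier from triggering the alert, at the cost of reacting more slowly to tail-only regressions.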

Storage integration comparison for CSI-based clusters

The table below compares common backend patterns teams use behind CSI when they build Kubernetes Storage for stateful workloads.

| Backend pattern | Strengths in Kubernetes | Common limits | Typical fit |
|---|---|---|---|
| Cloud block volumes | Fast start, managed durability | Cost and caps at scale | Small to mid clusters |
| Traditional SAN | Central control, mature tooling | Change control overhead | Legacy data centers |
| Local SSD + replication | Very low latency | Harder ops, less portable | Edge or single-rack tiers |
| Software-defined Block Storage | Policy control, scale-out growth | Needs tier planning | Platform teams at scale |

Predictable CSI operations with Simplyblock™ at scale

Simplyblock™ integrates with Kubernetes through its CSI driver so teams can automate provisioning and lifecycle tasks with standard objects. It targets high-performance stateful workloads and supports NVMe/TCP-based volumes for Ethernet deployments.

Because simplyblock runs as Software-defined Block Storage, platform teams can standardize tiers, apply QoS for tenant isolation, and choose hyper-converged, disaggregated, or hybrid layouts without changing how developers request storage.

Where CSI designs are heading next

CSI keeps moving toward richer automation. Snapshot and clone workflows continue to mature, and topology features keep improving for multi-zone reliability. Sidecar modularity also stays central, because it lets teams patch specific functions without touching the entire stack.

At the same time, data planes evolve. Faster transports and user-space storage paths improve CPU efficiency and latency stability, which raises the value of a clean CSI control surface that Kubernetes can drive.


Questions and Answers

The CSI architecture (Container Storage Interface) defines a standardized way for storage vendors to expose block and file storage to Kubernetes. It decouples storage provisioning from Kubernetes internals, enabling dynamic volume management via CSI drivers.

CSI architecture includes the external provisioner, node plugin, and controller plugin. These components handle volume creation, attachment, and mounting. Platforms like Simplyblock integrate via CSI to provide NVMe-over-TCP persistent volumes to Kubernetes clusters.

CSI allows Kubernetes to automatically create, mount, and manage volumes based on a user’s request. StorageClasses define the parameters, while the CSI driver handles provisioning. Simplyblock uses this model to offer multi-tenant encrypted volumes with replication and performance tuning.

CSI drivers can be designed to enforce per-tenant policies, isolate storage resources, and apply encryption at the volume level. Solutions like Simplyblock use CSI to support secure, multi-tenant Kubernetes storage with scalability and performance isolation.

Simplyblock uses CSI to deliver high-performance NVMe over TCP volumes to Kubernetes clusters. The CSI driver handles volume lifecycle, encryption, and replication, so stateful applications can run on persistent storage that is fast, secure, and cloud-native.