NVMe over TCP Cost Comparison


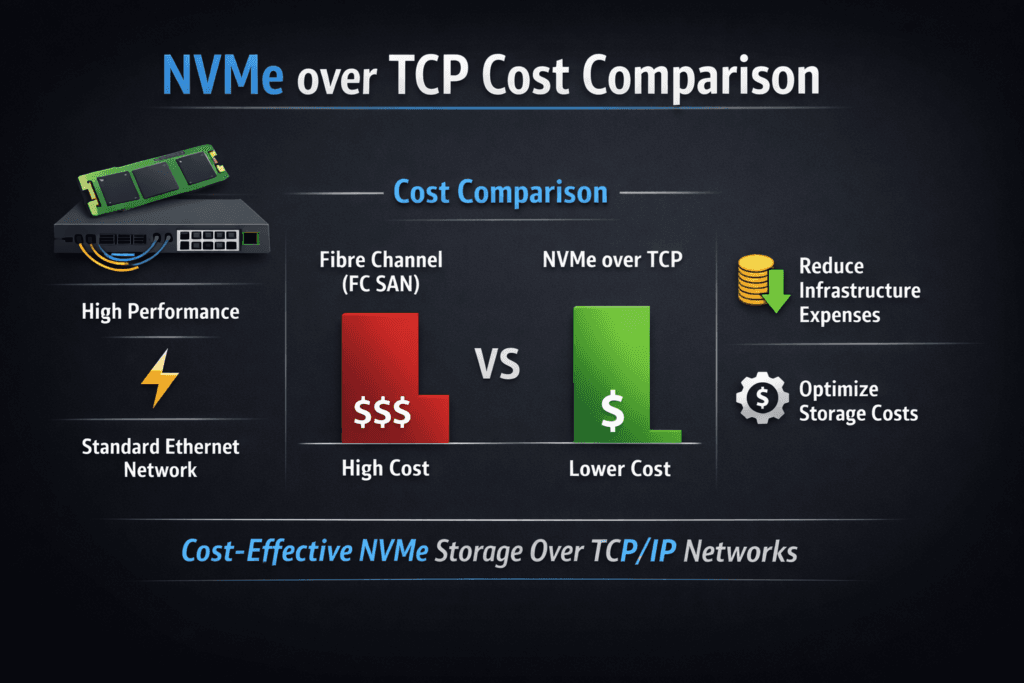

An NVMe over TCP cost comparison looks at the full cost of running NVMe-oF storage over standard Ethernet and TCP/IP versus options like Fibre Channel SAN, iSCSI, and NVMe/RDMA. “Cost” includes hardware, licenses, staff time, power, rack space, and the hidden cost of missed performance targets. Teams often overspend when they chase latency with extra nodes, premium cloud volumes, or multiple storage stacks.

NVMe/TCP often changes the math because it runs on common Ethernet gear, scales with Kubernetes Storage growth, and avoids the special fabric and tooling that Fibre Channel needs. When you pair NVMe/TCP with Software-defined Block Storage, you also gain policy controls, multi-tenancy, and QoS, which help keep spend tied to real service levels instead of constant tuning.

Cost levers that keep NVMe/TCP spend under control

A solid cost plan starts with the data path and the ops model. If a platform needs niche adapters, separate switch domains, and a dedicated admin team, costs rise fast. If it runs on standard servers and Ethernet, you can forecast spending with less risk.

Software-defined Block Storage shifts cost from appliances and locked stacks to a software layer that uses NVMe devices in common nodes. That shift also helps with buying and scaling. You can grow in smaller steps, reuse known server SKUs, and avoid forklift upgrades.

Cost control also depends on fairness. When the storage platform enforces QoS, teams stop paying for “buffer capacity” that exists only to survive noisy neighbors. That matters in Kubernetes Storage, where many apps share the same backend.


NVMe over TCP Cost Comparison in Kubernetes Storage

Kubernetes Storage adds two common cost drivers: platform sprawl and performance insurance. Teams buy extra nodes when storage latency swings under load. They also add new storage products when one backend cannot meet mixed workload needs.

NVMe/TCP fits Kubernetes growth patterns. It scales out with nodes, and it supports disaggregated or hyper-converged layouts, based on your tier design. That flexibility reduces the need for parallel stacks.

Daily operations also affect cost. Kubernetes teams rely on automation, clear policies, and fast change. A storage system that matches that workflow reduces admin time, upgrade risk, and incident load.

NVMe over TCP Cost Comparison and NVMe/TCP

When teams compare NVMe/TCP to Fibre Channel SAN, they often see savings in fabric complexity, special hardware, and change overhead. Fibre Channel can deliver strong performance, but it usually brings dedicated switches, HBAs, zoning, and separate processes.

When teams compare NVMe/TCP to iSCSI, they look at performance per dollar. NVMe/TCP uses NVMe semantics, which match SSD parallelism and deep queues better than older designs. Better efficiency can reduce the number of nodes needed to hit IOPS and latency targets.
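
The link between per-node efficiency and node count can be sketched as a simple sizing calculation. This is a minimal illustration, not a sizing tool: the function name `nodes_needed`, the headroom parameter, and all figures are hypothetical, and real per-node IOPS must come from your own benchmarks.

```python
import math


def nodes_needed(target_iops: float, usable_iops_per_node: float,
                 headroom: float = 0.25) -> int:
    """Smallest node count that meets target_iops plus a safety headroom.

    usable_iops_per_node is the IOPS one node sustains while staying inside
    the latency target (an assumption you must measure, not a spec-sheet peak).
    """
    if usable_iops_per_node <= 0:
        raise ValueError("usable_iops_per_node must be positive")
    required = target_iops * (1.0 + headroom)
    return math.ceil(required / usable_iops_per_node)


# Hypothetical per-node figures for two backends, for illustration only.
backend_a_nodes = nodes_needed(target_iops=1_000_000, usable_iops_per_node=150_000)
backend_b_nodes = nodes_needed(target_iops=1_000_000, usable_iops_per_node=300_000)
print(backend_a_nodes, backend_b_nodes)
```

Under these assumed inputs, doubling the usable IOPS per node roughly halves the node count, which is where the performance-per-dollar argument comes from.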

When teams compare NVMe/TCP to NVMe/RDMA, the trade-off shifts to “cost of performance.” RDMA can cut CPU overhead and latency, but it can raise network demands and increase ops effort. Many teams use NVMe/TCP for broad tiers, then reserve RDMA for workloads with strict latency budgets.

Measuring and Benchmarking Total Cost of Ownership

A cost comparison needs workload data. Start with what the business feels: p95 and p99 latency, database commit time, and pipeline throughput. Then connect those metrics to capacity used, nodes required, and staff time.
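
Tail-latency figures such as p95 and p99 can be derived from raw latency samples. The sketch below uses the nearest-rank method, which is one common definition among several; the function name `percentile` and the sample data are illustrative assumptions.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Illustrative latency samples in microseconds (not measured data).
latencies_us = list(range(1, 101))
print(percentile(latencies_us, 95), percentile(latencies_us, 99))
```

Benchmark tools may interpolate between samples instead, so compare percentiles only when they were computed the same way.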

Benchmarking should reflect real paths. Run tests through Kubernetes Storage using PVCs and CSI, not only on raw nodes. Measure CPU per I/O as well, because high CPU cost forces you to buy more compute just to keep storage responsive.
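
CPU per I/O can be expressed as core-microseconds consumed per operation. The following is a rough sketch under stated assumptions: `busy_cores` is the average number of CPU cores kept busy during the test (utilization multiplied by core count), and both function names and all numbers are hypothetical.

```python
def cpu_us_per_io(busy_cores: float, iops: float) -> float:
    """Core-microseconds of CPU time spent per I/O.

    One fully busy core supplies 1,000,000 core-microseconds per second.
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    return busy_cores * 1_000_000 / iops


def cores_for_target(target_iops: float, us_per_io: float) -> float:
    """CPU cores needed to sustain target_iops at a given per-I/O CPU cost."""
    return target_iops * us_per_io / 1_000_000


# Illustrative run: 4 cores busy while sustaining 400,000 IOPS.
cost = cpu_us_per_io(busy_cores=4, iops=400_000)
print(cost, cores_for_target(1_000_000, cost))
```

Comparing this figure across storage paths shows how much compute each one reserves just to move data, assuming CPU cost scales about linearly with I/O rate.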

Track incident cost, too. Count hours spent on tuning, upgrades, and “why is it slow today” work. A platform with stable tail latency and clear QoS policies reduces that cost even when hardware spend looks similar.
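
These inputs can be rolled into a simple multi-year total. The model below is a deliberately minimal sketch: the `StorageOption` fields, the `total_cost` function, and every figure are assumptions for illustration, and a real model would add items such as rack space, network gear, and depreciation.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    name: str
    hardware_cost: float        # one-time purchase cost
    annual_license_cost: float  # software and support per year
    annual_power_cost: float    # power and cooling per year
    annual_ops_hours: float     # tuning, upgrades, and incident work per year


def total_cost(option: StorageOption, years: int, hourly_rate: float) -> float:
    """One-time hardware cost plus recurring costs over the given period."""
    recurring = (option.annual_license_cost
                 + option.annual_power_cost
                 + option.annual_ops_hours * hourly_rate)
    return option.hardware_cost + years * recurring


# Hypothetical option with made-up figures.
example = StorageOption("example-tier", 100_000, 10_000, 5_000, 200)
print(total_cost(example, years=3, hourly_rate=100))
```

Keeping staff hours as an explicit input makes the incident and tuning cost visible next to the hardware line, even when two options have similar purchase prices.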

Approaches for Improving Cost Efficiency

Most savings come from removing waste and avoiding overbuild. These actions usually improve cost efficiency without giving up performance:

- Standardize on NVMe/TCP for broad tiers to keep network and host needs consistent

- Use Software-defined Block Storage to scale in smaller steps and reduce appliance lock-in

- Enforce QoS and multi-tenancy so one workload cannot force cluster-wide overprovisioning

- Pick a topology per tier, and avoid one-size-fits-all layouts across every workload

- Benchmark with production-like profiles so you size for real p99 behavior, not peak charts

Cost Drivers Compared Across Common Storage Options

The table below summarizes where costs tend to appear for each option and which levers help keep them under control.

| Option | Primary cost drivers | Typical hidden cost | Cost control levers |
|---|---|---|---|
| Fibre Channel SAN | HBAs, FC switches, zoning, vendor ecosystem | Slow changes, separate ops processes | Strict tiering, stable standards |
| iSCSI over Ethernet | More nodes to meet latency targets, tuning | CPU overhead at scale | Careful sizing, QoS |
| NVMe/TCP + Software-defined Block Storage | Ethernet capacity, NVMe nodes, software ops | Network design discipline | Scale-out pools, QoS, automation |
| NVMe/RDMA tiers | RDMA NICs, fabric tuning | Skills and ops overhead | Use only for strict latency tiers |

Lowering NVMe/TCP TCO with Simplyblock™

Simplyblock™ targets a lower total cost by improving performance efficiency and reducing operational drag. It uses an SPDK-based, user-space, zero-copy architecture, which helps cut CPU overhead in the hot path. That efficiency can reduce node counts for the same workload goal, especially for IOPS-heavy tiers.

Simplyblock supports NVMe/TCP and NVMe/RoCE, so teams can keep one platform while still offering distinct service tiers. Multi-tenancy and QoS controls help protect critical workloads and stop noisy-neighbor events that drive costly overprovisioning. In Kubernetes Storage, stable behavior often saves more money than chasing peak results.

Future Directions and Advancements in Cost-Aware NVMe Storage

DPUs and IPUs will shift parts of storage and networking work off host CPUs. That shift can improve performance per node and reduce the need for spare capacity. Ethernet fabrics will also improve congestion handling, which helps tail latency and lowers “insurance” spend.

Expect stronger policy-based automation in storage. Platforms will translate intent (latency targets, bandwidth caps, durability rules) into enforced behavior. That change reduces manual tuning and makes cost planning more accurate across clusters.

Related Terms

Use these pages to support NVMe/TCP cost planning across Kubernetes Storage and Software-defined Block Storage.

- SAN Replacement with NVMe over TCP

- NVMe over TCP vs NVMe over RDMA

- Storage Quality of Service (QoS)

- NVMe Multipathing

Questions and Answers

NVMe over TCP runs on standard Ethernet, so it does not need Fibre Channel switches or RDMA-capable NICs. This lowers CapEx significantly compared to legacy SANs. It’s ideal for modernizing storage stacks using distributed NVMe architectures without high hardware costs.

Yes. Fibre Channel requires expensive switching fabrics and HBAs, while NVMe/TCP uses commodity networks. A properly tuned scale-out storage architecture built on NVMe over TCP offers similar performance with far lower deployment and maintenance expenses.

Simplyblock’s platform is software-defined and hardware-agnostic, allowing users to deploy high-performance block storage on standard infrastructure. It provides SAN replacement capabilities without proprietary hardware or licensing overhead.

Operational costs are reduced by simplifying network design, avoiding vendor lock-in, and enabling easier scaling. NVMe/TCP integrates seamlessly with Kubernetes via CSI, as shown in Simplyblock’s Kubernetes storage platform, streamlining Day 2 operations.

Yes. Compared to Ceph’s complexity and performance overhead, NVMe/TCP platforms like Simplyblock offer better efficiency at scale. Simplyblock’s Ceph replacement architecture shows improved performance-per-dollar, especially for latency-sensitive workloads.