Performance Isolation in Multi-Tenant Storage


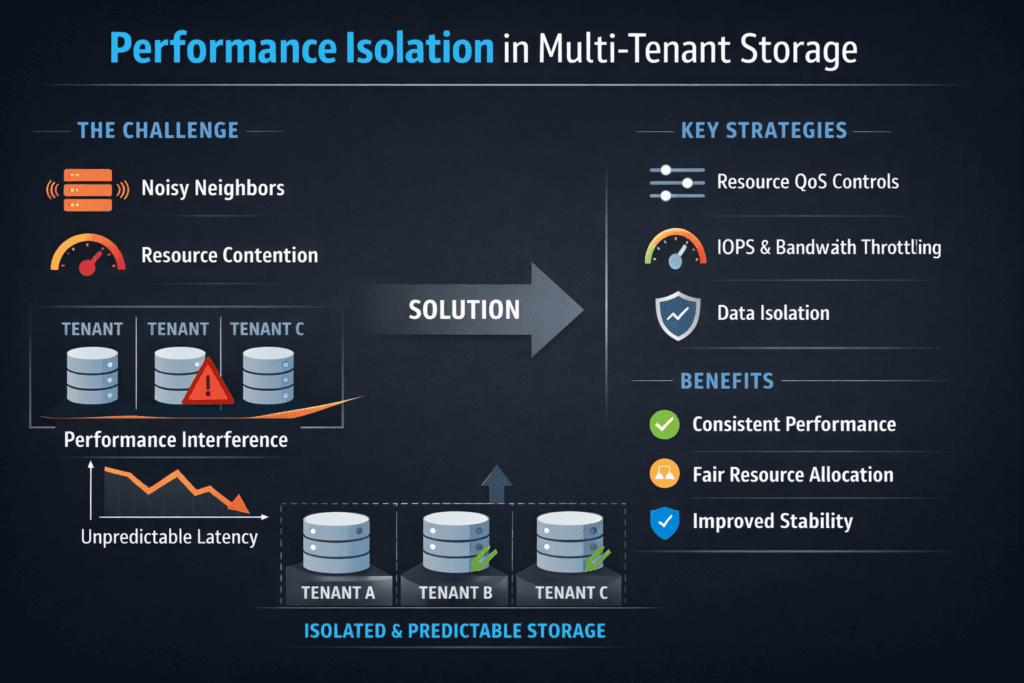

Performance isolation in multi-tenant storage means one tenant cannot steal IOPS, bandwidth, or latency budget from another tenant on shared hardware. Without isolation, a backup job or analytics burst can raise queue depth, push p99 latency up, and trigger timeouts in unrelated apps. This problem shows up fast in shared Kubernetes clusters and “platform as a service” setups because workload mix changes all day.

Executives care because isolation protects revenue-facing SLAs. Platform teams care because isolation reduces firefights and overprovisioning. In practice, the goal is simple: keep tail latency steady while the platform stays shared.

Why noisy neighbors happen and how to spot them early

Noisy neighbors usually come from shared queues and shared CPU. One tenant drives deep queues, and everyone else waits. The symptoms look like random latency spikes, uneven throughput across pods, and sudden drops in database TPS.
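The queueing effect can be illustrated with a toy model. The sketch below is an assumption-laden simplification, not a description of any real storage stack: every request takes one time unit, all requests arrive at once, and the tenant names `batch` and `oltp` are made up. It compares a single shared FIFO queue with per-tenant queues served round-robin.

```python
from collections import deque

def fifo_completion(arrivals):
    """Shared FIFO: requests are served strictly in arrival order, 1 time unit each.
    Returns the time at which each tenant's last request completes."""
    done = {}
    for clock, tenant in enumerate(arrivals, start=1):
        done[tenant] = clock
    return done

def round_robin_completion(arrivals):
    """Per-tenant queues: serve one request per tenant per round, 1 time unit each."""
    queues = {}
    for tenant in arrivals:
        queues.setdefault(tenant, deque()).append(tenant)
    done, clock = {}, 0
    while any(queues.values()):
        for tenant, queue in queues.items():
            if queue:
                queue.popleft()
                clock += 1
                done[tenant] = clock
    return done

# A batch tenant queues 100 requests just ahead of one OLTP request.
arrivals = ["batch"] * 100 + ["oltp"]
fifo = fifo_completion(arrivals)
fair = round_robin_completion(arrivals)
print(fifo["oltp"], fair["oltp"])  # 101 2
```

In the shared queue, the single OLTP request waits behind the entire batch backlog. With per-tenant queues, it completes in the first round while the batch tenant still finishes at the same time as before.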

Good signals include p95 and p99 latency, not averages. Watch CPU per I/O, too, because CPU pressure often creates the first bottleneck in software paths. Track these metrics during normal load and also during events like reschedules and rolling updates.
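A small sketch shows why averages hide the problem. The sample values are invented for illustration, and the nearest-rank percentile used here is one common definition among several; monitoring tools may interpolate differently.

```python
import statistics

def latency_summary(samples_ms):
    """Return mean, p95, and p99 for a list of latency samples in milliseconds."""
    ordered = sorted(samples_ms)

    def percentile(p):
        # Nearest-rank method with integer p: rank = ceil(p * n / 100), at least 1.
        rank = max(1, (p * len(ordered) + 99) // 100)
        return ordered[rank - 1]

    return {
        "mean": statistics.fmean(ordered),
        "p95": percentile(95),
        "p99": percentile(99),
    }

# 98 fast requests and 2 slow ones (illustrative numbers).
samples = [1.0] * 98 + [50.0] * 2
summary = latency_summary(samples)
print(summary)  # mean is about 1.98 ms, p95 is 1.0 ms, p99 is 50.0 ms
```

The mean barely moves and p95 still looks healthy, while p99 exposes the 50 ms outliers that would trigger timeouts.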


Performance Isolation in Multi-Tenant Storage for Kubernetes Storage

Kubernetes Storage raises the bar for isolation because the scheduler keeps moving workloads. A “quiet” tenant at noon can turn into a heavy tenant at 2 p.m. Storage must hold steady during that swing, or you will see jitter across the cluster.

Isolation also ties into how you offer storage tiers. If you sell “gold” and “silver” tiers, you need clear limits and clear guarantees for each. CSI-based provisioning makes it easy to express the policy, for example as storage class parameters, but the storage platform must still enforce those limits at runtime.
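One way to make tier guarantees concrete is to check them at provisioning time. The sketch below is hypothetical: the `Tier` fields, the IOPS numbers, and the `can_provision` helper are illustrative assumptions, not part of Kubernetes, CSI, or any vendor API. It refuses a new volume when the sum of guaranteed IOPS would exceed what the pool can deliver.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    guaranteed_iops: int  # floor the platform promises to the volume
    max_iops: int         # hard cap that must be enforced at runtime

# Illustrative numbers only.
TIERS = {
    "gold": Tier(guaranteed_iops=5000, max_iops=20000),
    "silver": Tier(guaranteed_iops=1000, max_iops=5000),
}

def can_provision(existing_tiers, new_tier, capacity_iops):
    """True if the guarantees of all volumes, including the new one, fit the pool."""
    committed = sum(TIERS[name].guaranteed_iops for name in existing_tiers)
    return committed + TIERS[new_tier].guaranteed_iops <= capacity_iops

volumes = ["gold", "gold", "silver"]  # 11,000 IOPS already guaranteed
silver_ok = can_provision(volumes, "silver", capacity_iops=12000)
gold_ok = can_provision(volumes, "gold", capacity_iops=12000)
print(silver_ok, gold_ok)  # True False
```

Caps may be oversubscribed because not every tenant peaks at once; guarantees may not, which is why the check sums the floors rather than the ceilings.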

Performance Isolation in Multi-Tenant Storage and NVMe/TCP

NVMe/TCP helps multi-tenant designs because it runs on standard Ethernet and scales across nodes. It also keeps a familiar NVMe command model, which can support high IOPS with the right implementation.

Even so, NVMe/TCP does not “solve” isolation on its own. Tenants still share target CPU, NIC queues, and storage queues. A strong design keeps the datapath lean and applies hard limits where contention happens. That’s where an NVMe-first approach and careful queue control matter, especially when tenant demand spikes.

Proving Performance Isolation in Multi-Tenant Storage with benchmarks

A fair test isolates one variable at a time. Start with one tenant, then add tenants in steps. Keep block size and read/write mix fixed, and measure how p99 latency shifts as you add load.

Run two benchmark modes. First, test a steady load to measure baseline drift. Next, add a “bully” tenant that ramps up quickly. If your isolation works, the bully tenant hits its limit, and the other tenants keep stable latency.
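The pass/fail decision for the bully test can be scripted. This is a minimal sketch that assumes you have already collected p99 latency per victim tenant for both phases; the tenant names, the sample values, and the 1.2x tolerance are assumptions you would replace with your own SLO.

```python
def isolation_report(baseline_p99_ms, bully_p99_ms, max_ratio=1.2):
    """Compare each victim tenant's p99 under bully load with its baseline p99."""
    report = {}
    for tenant, base in baseline_p99_ms.items():
        ratio = bully_p99_ms[tenant] / base
        report[tenant] = {
            "ratio": round(ratio, 2),
            "isolated": ratio <= max_ratio,
        }
    return report

# Illustrative measurements in milliseconds.
baseline = {"tenant-a": 1.0, "tenant-b": 2.0}
under_bully = {"tenant-a": 1.1, "tenant-b": 5.0}

report = isolation_report(baseline, under_bully)
for tenant, result in report.items():
    print(tenant, result)
```

Here `tenant-a` stays within tolerance, while the p99 for `tenant-b` grows 2.5x, which indicates the bully's limit is not protecting it.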

Controls that improve isolation without wasting hardware

Strong isolation blends policy and enforcement. Policy sets the target. Enforcement keeps it real under pressure. The best results usually come from platforms that support per-tenant limits plus per-volume controls, so you can match how teams actually consume storage.

Use this short checklist to improve isolation in a shared cluster:

- Set per-tenant IOPS and bandwidth caps that match your SLOs.

- Add per-volume QoS so one app cannot drown the tenant’s other apps.

- Separate latency tiers so batch jobs do not share the same pool as OLTP.

- Keep CPU headroom on storage nodes so limits work under stress.

- Validate limits during reschedules, failovers, and rebuild activity.
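The first two checklist items can be sketched as a two-level token bucket, a common rate-limiting technique. This is an illustrative model, not how any particular product implements QoS: the class, the `admit` helper, and all rates are assumptions, and time is passed in explicitly so the example is deterministic.

```python
class TokenBucket:
    """IOPS cap: refills `rate` tokens per second and holds at most `burst` tokens."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = 0.0

    def refill(self, now):
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now

def admit(now, tenant_bucket, volume_bucket):
    """Admit one I/O only if both the tenant and the volume have a token left."""
    tenant_bucket.refill(now)
    volume_bucket.refill(now)
    if tenant_bucket.tokens >= 1 and volume_bucket.tokens >= 1:
        tenant_bucket.tokens -= 1
        volume_bucket.tokens -= 1
        return True
    return False

tenant = TokenBucket(rate=1000, burst=10)       # per-tenant cap
noisy_volume = TokenBucket(rate=100, burst=5)   # per-volume caps
quiet_volume = TokenBucket(rate=100, burst=5)

# The noisy app submits 20 I/Os at t=0; the quiet app then does the same.
noisy_admitted = sum(admit(0.0, tenant, noisy_volume) for _ in range(20))
quiet_admitted = sum(admit(0.0, tenant, quiet_volume) for _ in range(20))
print(noisy_admitted, quiet_admitted)  # 5 5
```

The per-volume bucket stops the noisy app after five I/Os, so half of the tenant's budget is still available for the quiet app. Without the volume-level cap, the noisy app would have consumed all ten tenant tokens.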

Comparing isolation strategies in shared storage

The table below compares common ways teams try to stop noisy neighbors in shared environments.

| Approach | What it does well | Where it breaks down | Best fit |
|---|---|---|---|
| Per-volume QoS limits | Simple, direct control on a workload | Needs good defaults and monitoring | Mixed app fleets |
| Per-tenant quotas and caps | Protects teams and customers | Can hide app-level hotspots | Platform teams, SaaS |
| Pool-level tiering | Keeps OLTP away from batch | Adds planning overhead | Clear tiered services |
| “One tenant per cluster” | Strong isolation | High cost, slow scale | Regulated or premium tiers |

Keeping p99 Stable with Simplyblock™

Simplyblock™ targets multi-tenant Software-defined Block Storage where teams need steady p99 latency on shared infrastructure. It supports per-tenant controls and QoS, so one workload cannot flatten the rest of the cluster. It also supports NVMe/TCP and leans on SPDK-oriented design choices to keep datapath overhead low, which helps preserve CPU for real work.

What changes next for shared-storage isolation

More teams now treat p99 latency as a product metric. That shift pushes storage platforms to deliver hard limits, not “best effort.” Expect more use of offload options, clearer tiering models, and tighter CSI policy mapping so platform teams can offer storage as a service with fewer exceptions.


Questions and Answers

In shared environments, one tenant’s high I/O workload can degrade performance for others. Performance isolation ensures predictable latency and throughput per tenant, especially in multi-tenant Kubernetes storage architectures.

NVMe over TCP supports scalable, queue-based I/O paths that can be isolated per volume or tenant. This enables fine-grained performance control without complex network overlays or costly hardware segregation.

Common isolation mechanisms include IOPS and bandwidth throttling, dedicated I/O queues, and per-tenant volume provisioning. Simplyblock uses these techniques to provide secure, isolated block storage for container and VM workloads.

When properly designed with volume-level QoS and NVMe/TCP backing, multi-tenant storage can support databases, queues, and other low-latency applications. Simplyblock’s stateful workload support delivers both performance and isolation at scale.

Simplyblock assigns performance policies per volume, uses traffic shaping, and supports encryption per tenant. This ensures fairness while maintaining high performance in multi-tenant storage deployments.