Storage Scaling Without Downtime

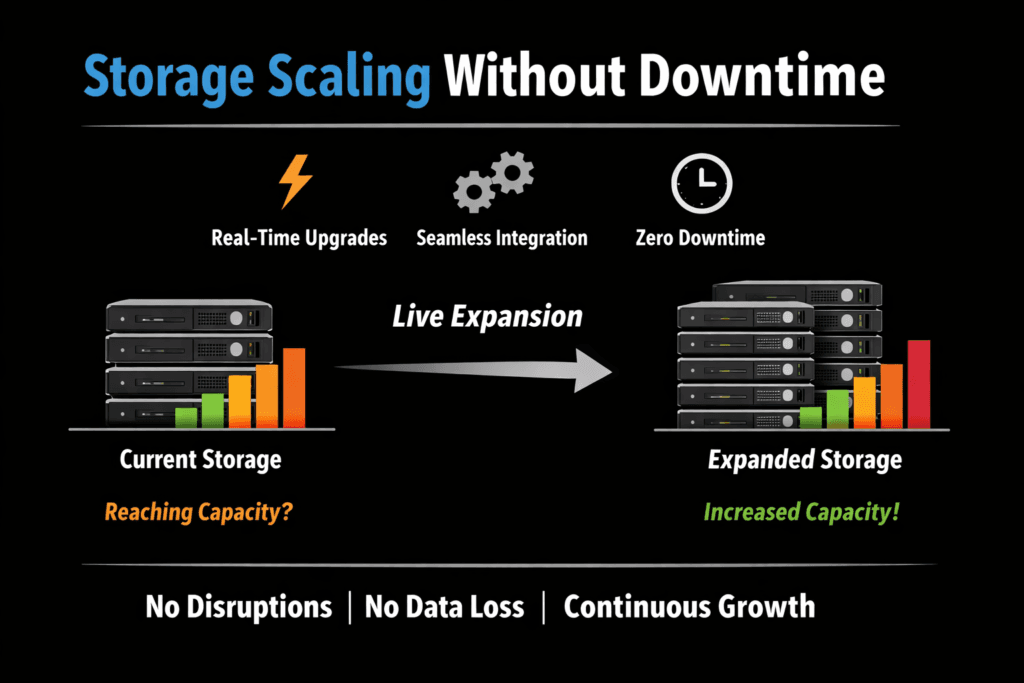

Storage Scaling Without Downtime means increasing capacity, throughput, or IOPS while applications continue to serve reads and writes. For executives, it reduces revenue risk during growth events and platform changes. For platform teams, it removes maintenance windows from day-to-day operations and keeps Kubernetes Storage stable as workloads expand.

Zero-downtime scaling depends on a few technical realities. The storage layer must accept new resources online, rebalance data without stalling foreground I/O, and maintain predictable tail latency while background work runs. A Software-defined Block Storage architecture helps because it scales on standard servers and avoids the “single controller” bottleneck that often forces disruptive upgrades.

Optimizing Growth While Applications Stay Online

Downtime usually shows up when scaling triggers a controller restart, a long resync, a metadata rebuild, or an overloaded migration. You can avoid those failure modes by using a scale-out design that adds nodes first, then rebalances gradually. That pattern also lets teams expand in smaller increments, which reduces blast radius and keeps operational risk low.
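
The "add nodes first, then rebalance gradually" pattern can be sketched as a small planner. This is a hypothetical model, not simplyblock's placement algorithm. It assumes every chunk has the same size and can live on any node, and it ignores the replica and failure-domain rules a real system must enforce. It computes which chunks to move so that every node ends near the average, then splits those moves into bounded batches so that only a few migrations run at once.

```python
from typing import Dict, List, Tuple

Move = Tuple[str, str]  # (source node, destination node); one chunk per move


def plan_rebalance(chunks_per_node: Dict[str, int], batch_size: int) -> List[List[Move]]:
    """Plan chunk moves that even out a cluster, split into small batches.

    Hypothetical model: equal-size chunks, no replica or failure-domain rules.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    if not chunks_per_node:
        return []
    nodes = sorted(chunks_per_node)
    total = sum(chunks_per_node.values())
    base, extra = divmod(total, len(nodes))

    # Give leftover chunks to the fullest nodes so fewer chunks have to move.
    order = sorted(nodes, key=lambda n: chunks_per_node[n], reverse=True)
    target = {n: base + (1 if i < extra else 0) for i, n in enumerate(order)}

    donors = [[n, chunks_per_node[n] - target[n]] for n in order if chunks_per_node[n] > target[n]]
    receivers = [[n, target[n] - chunks_per_node[n]] for n in order if chunks_per_node[n] < target[n]]

    # Pair donors with receivers one chunk at a time.
    moves: List[Move] = []
    d = r = 0
    while d < len(donors) and r < len(receivers):
        moves.append((donors[d][0], receivers[r][0]))
        donors[d][1] -= 1
        receivers[r][1] -= 1
        if donors[d][1] == 0:
            d += 1
        if receivers[r][1] == 0:
            r += 1

    # Batches bound how much data migrates at the same time.
    return [moves[i:i + batch_size] for i in range(0, len(moves), batch_size)]


if __name__ == "__main__":
    # A third node joins empty; two chunks move per batch.
    for batch in plan_rebalance({"node-a": 6, "node-b": 6, "node-c": 0}, batch_size=2):
        print(batch)
    # [('node-a', 'node-c'), ('node-a', 'node-c')]
    # [('node-b', 'node-c'), ('node-b', 'node-c')]
```

Smaller batches stretch the rebalance over more time but cap the amount of background I/O competing with applications at any moment.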

Successful scaling also requires control over background activity. Rebuild and rebalance traffic must not starve production I/O, and the system must apply scheduling and throttling policies that protect latency-sensitive volumes. If the storage platform cannot enforce that separation, scaling events may remain “online” in theory but still feel like downtime to users because response time becomes unacceptable.
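
One simple way to keep background work from starving production I/O is to split each scheduling interval's I/O budget explicitly. The sketch below is a hypothetical policy, not any specific product's scheduler. Foreground requests are served first, background rebuild/rebalance work receives a small guaranteed floor so it still makes progress, and background work never exceeds a hard cap. The budget unit (I/Os per interval) and the parameter names are illustrative assumptions.

```python
from typing import Tuple


def split_budget(budget: int, fg_demand: int, bg_demand: int,
                 bg_min: int, bg_max: int) -> Tuple[int, int]:
    """Split one interval's I/O budget between foreground and background work.

    budget:    I/Os the device can serve in this interval (hypothetical unit)
    fg_demand: I/Os requested by applications
    bg_demand: I/Os requested by rebuild / rebalance
    bg_min:    small floor so background work still makes progress
    bg_max:    hard cap on background work per interval
    Returns (foreground_grant, background_grant).
    """
    if not 0 <= bg_min <= bg_max <= budget:
        raise ValueError("require 0 <= bg_min <= bg_max <= budget")
    # Reserve only the floor for background work, and only if it has work queued.
    bg_floor = min(bg_min, bg_demand)
    # Foreground gets everything else it asks for.
    fg = min(fg_demand, budget - bg_floor)
    # Background uses leftover capacity, never above its cap.
    bg = min(bg_demand, bg_max, budget - fg)
    return fg, bg


if __name__ == "__main__":
    print(split_budget(1000, fg_demand=950, bg_demand=500, bg_min=50, bg_max=300))  # (950, 50)
    print(split_budget(1000, fg_demand=200, bg_demand=500, bg_min=50, bg_max=300))  # (200, 300)
```

Under heavy application load the rebuild slows to its floor; when applications go quiet it speeds up to its cap, which trades longer convergence for stable response times.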

Storage Scaling Without Downtime in Kubernetes Storage

In Kubernetes, scaling without downtime often starts with online PVC expansion and continues with backend pool expansion. Kubernetes can request more capacity, but the storage layer determines whether that growth stays smooth under load. A platform that integrates cleanly with CSI workflows can expand volumes while pods keep running, and it can also spread data placement changes across time so the cluster avoids a single painful rebalance surge.
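
Online PVC expansion is triggered by raising `spec.resources.requests.storage` on an existing claim. The helper below builds that patch body and rejects shrink requests, because Kubernetes does not allow a PVC to be made smaller. It is a minimal sketch: the quantity parser handles only a subset of Kubernetes suffixes, and the function names are hypothetical.

```python
import re

# Subset of Kubernetes quantity suffixes: binary (Ki..Ti) and decimal (k..T).
_MULTIPLIERS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "": 1,
}


def to_bytes(quantity: str) -> int:
    """Convert a whole-number quantity such as '100Gi' to bytes."""
    match = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(match.group(1)) * _MULTIPLIERS[match.group(2) or ""]


def expansion_patch(current: str, requested: str) -> dict:
    """Build a PVC patch body that requests a larger size.

    Kubernetes rejects attempts to shrink a PVC, so fail early here too.
    """
    if to_bytes(requested) <= to_bytes(current):
        raise ValueError(f"{requested} is not larger than {current}")
    return {"spec": {"resources": {"requests": {"storage": requested}}}}


if __name__ == "__main__":
    print(expansion_patch("100Gi", "200Gi"))
    # {'spec': {'resources': {'requests': {'storage': '200Gi'}}}}
```

With the official Kubernetes Python client, such a body can be passed to `CoreV1Api().patch_namespaced_persistent_volume_claim(name, namespace, body)`. The request only succeeds if the claim's StorageClass sets `allowVolumeExpansion: true`, and whether the volume and filesystem grow while the pod keeps running depends on the CSI driver supporting online expansion.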

Deployment topology affects results. Hyper-converged layouts scale by adding worker nodes that contribute storage, while disaggregated layouts scale by adding storage nodes that serve many workers. Hybrid models can mix both so teams can keep critical databases on isolated storage nodes while letting general workloads use capacity closer to compute. Each option can scale without downtime if the control plane handles membership changes safely and the data plane protects latency during background movement.

Storage Scaling Without Downtime and NVMe/TCP

NVMe/TCP enables scale-out performance over standard Ethernet with familiar operational tooling. It carries the NVMe command set over ordinary TCP/IP networks, so it needs no special NICs or switch configuration, which makes it practical for Kubernetes environments that prioritize repeatable automation. For many teams, NVMe/TCP becomes the default protocol for scaling storage performance because it avoids the specialized networking that RDMA often requires, such as RDMA-capable NICs and, for RoCE, lossless Ethernet configuration.

Protocol choice matters during growth events. When nodes join a cluster, the storage layer must maintain stable queueing behavior and predictable latency. NVMe/TCP can deliver strong performance when the system uses efficient I/O processing and avoids extra memory copies. That efficiency becomes more important as the cluster grows because CPU overhead can quietly turn into the limiting factor long before the network saturates.
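
A back-of-the-envelope calculation shows how CPU can bind before the network does. The model below is deliberately simplified: it ignores protocol headers, retransmits, and the CPU the applications themselves need. Every input (link speed, I/O size, cores reserved for storage, cycles spent per I/O) is an illustrative assumption, not a measured simplyblock figure.

```python
def iops_ceilings(link_gbps: float, io_size_bytes: int, cores: int,
                  core_hz: float, cycles_per_io: float) -> dict:
    """Estimate the IOPS each resource could sustain on its own.

    Simplified model: payload bytes only, no protocol overhead.
    """
    network_iops = link_gbps * 1e9 / (io_size_bytes * 8)
    cpu_iops = cores * core_hz / cycles_per_io
    bottleneck = "cpu" if cpu_iops < network_iops else "network"
    return {"network_iops": network_iops, "cpu_iops": cpu_iops, "bottleneck": bottleneck}


if __name__ == "__main__":
    # Hypothetical node: 100 Gbit/s NIC, 4 KiB I/Os, 4 cores at 3 GHz
    # reserved for storage, and 20,000 CPU cycles spent per I/O.
    print(iops_ceilings(100, 4096, 4, 3.0e9, 20_000))
    # {'network_iops': 3051757.8125, 'cpu_iops': 600000.0, 'bottleneck': 'cpu'}
```

Because `cpu_iops` scales inversely with `cycles_per_io`, halving the per-I/O cost (for example, by removing a memory copy) doubles the CPU ceiling without touching the network.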

Measuring and Benchmarking Scaling Performance

To validate Storage Scaling Without Downtime, teams must measure what users experience during a scaling event, not only peak numbers on an idle system. Average latency hides the “bad minutes” that cause real incidents. Tail latency, jitter, and throughput consistency during rebuild and rebalance matter more than a single top-line IOPS figure.

A practical benchmark design keeps the workload stable while the platform changes underneath it. Run a steady read/write profile, expand capacity or add a node, and then observe performance while the system rebalances. Record p95 and p99 latency, not just p50. Track how long the system takes to converge to steady state after the change. Repeat the test at different utilization levels because scaling behavior often degrades when pools run hot.
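
The analysis step of such a benchmark can be sketched in a few lines. Assume latency samples are grouped into fixed intervals (for example, 10 seconds each) starting at the scaling event. The code computes nearest-rank percentiles and reports when p99 settles back near the pre-change baseline. The 10% tolerance and the three-window stability rule are hypothetical choices made for illustration.

```python
import math
from typing import List, Optional, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile, with p in (0, 100]."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need samples and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def convergence_window(windows: List[List[float]], baseline_p99: float,
                       tolerance: float = 1.10, stable_windows: int = 3) -> Optional[int]:
    """Index of the first window after which p99 stays near the pre-change baseline.

    windows[i] holds latency samples from the i-th fixed interval after the
    scaling event. "Converged" means `stable_windows` consecutive windows with
    p99 <= baseline_p99 * tolerance. Returns None if that never happens.
    """
    run = 0
    for i, samples in enumerate(windows):
        if percentile(samples, 99) <= baseline_p99 * tolerance:
            run += 1
            if run == stable_windows:
                return i - stable_windows + 1
        else:
            run = 0  # a bad window resets the stability streak
    return None
```

Requiring several consecutive good windows avoids declaring convergence on a single lucky interval while rebalancing is still running.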

Approaches for Improving Scaling Performance

The most reliable way to improve non-disruptive scaling is to protect production I/O from background work while keeping metadata operations lightweight. Use policies that cap rebuild bandwidth, and apply QoS so noisy tenants do not crush latency for critical volumes. Keep failure-domain settings aligned with your SLA targets, and test “scale plus failure” scenarios because real life rarely schedules these events separately.
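
Both a rebuild bandwidth cap and a per-volume QoS limit can be expressed with a token bucket. The sketch below is a generic token-bucket limiter, not simplyblock's QoS implementation. The units belong to the caller (bytes for a bandwidth cap, I/Os for an IOPS cap), and the example rates are hypothetical.

```python
class TokenBucket:
    """Token-bucket limiter: sustained `rate` units per second, bursts up to `burst`.

    Units are up to the caller: I/Os for an IOPS cap, bytes for a bandwidth cap.
    Requests larger than `burst` are never admitted and should be split.
    """

    def __init__(self, rate: float, burst: float, now: float = 0.0) -> None:
        if rate <= 0 or burst <= 0:
            raise ValueError("rate and burst must be positive")
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so an idle volume can burst
        self.last = now

    def try_consume(self, cost: float, now: float) -> bool:
        """Admit a request costing `cost` units at time `now` (seconds) if tokens allow."""
        # Refill for the time elapsed since the last call, capped at the burst size.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller queues or delays the request


if __name__ == "__main__":
    # Hypothetical policy: rebuild traffic capped at 200 MB/s with 8 MB of burst.
    rebuild = TokenBucket(rate=200e6, burst=8e6)
    # Hypothetical tenant QoS: 5,000 IOPS with a burst of 500 I/Os.
    tenant = TokenBucket(rate=5_000, burst=500)
    print(rebuild.try_consume(4e6, now=0.0), tenant.try_consume(1, now=0.0))  # True True
```

Giving rebuild traffic and each tenant their own bucket keeps one noisy source from consuming headroom that latency-sensitive volumes need.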

A short checklist helps teams avoid common pitfalls:

- Set clear latency SLOs and enforce them with QoS during rebuild and rebalance.

- Expand early, and avoid running pools near full, because compaction and data placement changes have little free space to work with and become more disruptive.

- Keep NVMe/TCP networking consistent across nodes, and validate MTU, congestion behavior, and NIC offloads.

- Test online expansion in the same way it will happen in production, including filesystem resize and application behavior.
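
The "expand early" item can be turned into a simple runway check: estimate how many days remain until a pool crosses a utilization threshold, and compare that with the lead time needed to add capacity and finish rebalancing. The 80% threshold and the function names are hypothetical policy choices, not vendor recommendations.

```python
import math


def days_until_threshold(capacity: float, used: float, daily_growth: float,
                         threshold: float = 0.80) -> float:
    """Days until pool utilization reaches `threshold` at the current growth rate.

    Any consistent unit works (e.g. TiB and TiB/day). The 80% default is a
    hypothetical policy value.
    """
    limit = capacity * threshold
    if used >= limit:
        return 0.0
    if daily_growth <= 0:
        return math.inf  # no growth, so the threshold is never reached
    return (limit - used) / daily_growth


def should_expand_now(capacity: float, used: float, daily_growth: float,
                      lead_time_days: float, threshold: float = 0.80) -> bool:
    """True if the runway is no longer than the time needed to add capacity and rebalance."""
    return days_until_threshold(capacity, used, daily_growth, threshold) <= lead_time_days


if __name__ == "__main__":
    # Hypothetical pool: 100 TiB capacity, 60 TiB used, growing 0.5 TiB per day.
    print(days_until_threshold(100.0, 60.0, 0.5))        # 40.0
    print(should_expand_now(100.0, 60.0, 0.5, 45.0))     # True
```

Including rebalance time in `lead_time_days`, not just hardware delivery, reflects the point above: the expansion has to finish while the pool still has room to move data.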

Scaling Model Comparison for Always-On Environments

Before choosing an approach, it helps to compare how different storage models behave when capacity and performance must grow while the cluster stays live.

| Approach | Typical scaling trigger | Downtime risk | Performance during rebalance | Fit for Kubernetes Storage |
|---|---|---|---|---|
| Traditional SAN expansion | Controller upgrades, shelf additions, fabric changes | Medium–High | Often degrades during migrations | Automation gaps are common |
| Hyper-converged SDS | Add worker nodes, expand pools | Low | Good with QoS controls | Strong fit, shared resources |
| Disaggregated SDS | Add storage nodes, rebalance pools | Low | Good to excellent with isolation | Strong fit, clearer separation |
| Managed cloud block | API resize, add volumes | Low | Depends on provider throttles | Good via provider CSI drivers, within provider limits |

How Simplyblock™ Delivers Predictable Always-On Scaling

Simplyblock™ focuses on Software-defined Block Storage with Kubernetes-native workflows and NVMe-first data paths. It targets online growth by supporting scale-out expansion, controlled background movement, and tenant-aware performance controls. That combination matters because the hardest part of scaling without downtime is keeping latency stable while data placement changes in the background.

Its SPDK-based, user-space architecture reduces overhead and avoids extra copies in the I/O path, which helps preserve CPU efficiency at scale. That efficiency becomes a practical advantage as clusters grow, leaving more headroom for applications and reducing the risk that “background work” becomes the hidden bottleneck. With built-in multi-tenancy and QoS, teams can expand capacity and throughput while keeping predictable service for critical workloads, including databases and analytics pipelines running on Kubernetes.

What’s Next for Non-Disruptive Storage Scaling

More platforms are moving toward policy-driven scaling, in which the system treats latency targets as hard constraints and automatically adjusts background activity. More deployments are also pushing storage functions to DPUs and IPUs to reduce host CPU overhead, especially for encryption, compression, and transport processing. At the same time, protocol stacks will keep standardizing around NVMe/TCP as the operational default for Ethernet-based NVMe-oF, with RDMA used selectively for the most latency-sensitive tiers.

Kubernetes will continue to pull storage deeper into cluster operations. Teams will increasingly expect expansion, placement optimization, and performance isolation to behave like native controllers rather than separate storage workflows. The storage platforms that win in this environment will scale linearly, protect tail latency during change, and keep operational steps predictable under automation.

Related Terms

Teams often use these related terms when planning how to scale storage capacity and performance without downtime.

NVMe over RoCE

StorPool

Storage Pool

Persistent Storage

Questions and Answers

Storage can scale without downtime by using distributed architectures that support online volume expansion and dynamic provisioning. Simplyblock enables seamless scaling through its Kubernetes-native storage platform, allowing volumes to grow while workloads remain online.

A scale-out architecture allows new nodes to be added without interrupting existing workloads. Simplyblock’s scale-out storage design distributes data across nodes, enabling capacity and performance expansion without service disruption.

Yes. NVMe over TCP provides the transport, while the distributed block storage system built on it resizes volumes and rebalances data across nodes dynamically. Together, they let performance and capacity grow without downtime.

StatefulSets rely on PersistentVolumeClaims, which can be resized if supported by the storage backend. Simplyblock provides stateful workload support with online expansion and replication, maintaining availability during scaling events.

Simplyblock uses distributed NVMe-backed storage with replication and dynamic provisioning. Its software-defined storage architecture allows nodes and volumes to scale independently, ensuring uninterrupted performance for production workloads.