Kubernetes Storage for MongoDB

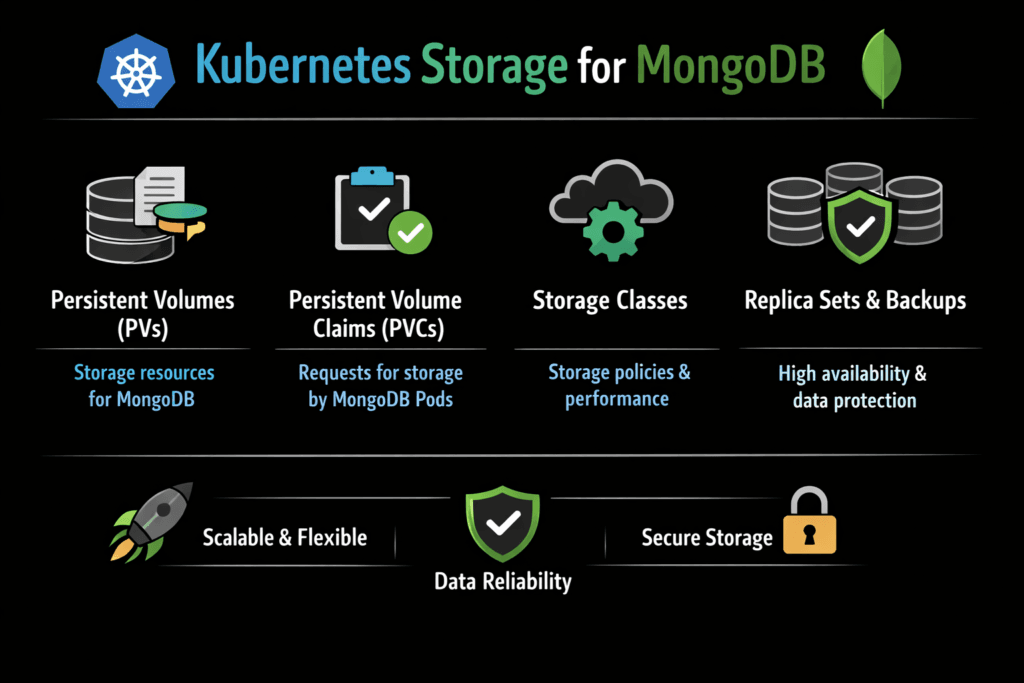

Kubernetes Storage for MongoDB refers to the storage architecture, policies, and runtime behavior that keep MongoDB durable and fast while pods move, nodes drain, and clusters scale. MongoDB’s I/O pattern mixes small random reads, steady journal writes, and periodic checkpoint bursts. That mix makes tail latency the deciding factor, not peak throughput. When storage jitter rises, MongoDB often shows slower queries, longer replica catch-up, delayed elections, and uneven performance across members, even if CPU and memory look fine.
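
One way to see why tail latency dominates is a small back-of-the-envelope model. The sketch below assumes each storage read is independently "slow" (beyond p99) with a fixed probability; real workloads are correlated, so treat the numbers as illustrative only. The function name `prob_any_slow` is made up for this example.

```python
def prob_any_slow(reads_per_query: int, slow_fraction: float = 0.01) -> float:
    """Probability that at least one read in a query lands in the slow tail.

    Assumption: every read is slow independently with probability
    `slow_fraction` (0.01 corresponds to "slower than p99").
    """
    if reads_per_query < 0:
        raise ValueError("reads_per_query must be non-negative")
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be between 0 and 1")
    # Complement of "every read was fast".
    return 1.0 - (1.0 - slow_fraction) ** reads_per_query


if __name__ == "__main__":
    for n in (1, 10, 50, 100):
        share = prob_any_slow(n)
        print(f"{n:>3} reads per query: {share:.1%} of queries touch the p99 tail")
```

Under this simplified model, a query that issues 100 random reads hits the p99 tail roughly 63% of the time, which is why a storage layer with good averages but a long tail can still produce slow queries.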

On Kubernetes, the storage layer also has to handle attach and mount workflows, volume expansion, snapshot operations, and failure recovery, all while maintaining consistent service levels. Executives usually see this as risk and predictability: stable database SLOs, fewer incidents during upgrades, and growth without disruptive migrations. Platform teams see it as repeatability: a StorageClass that behaves the same way across clusters, with clear performance guardrails.

Proven ways to remove database I/O bottlenecks

Teams get better results when they treat storage as a platform service rather than a per-team workaround. That usually means using Software-defined Block Storage with policy-driven provisioning, isolation, and consistent behavior during node failures and rolling updates. When the storage layer exposes clear controls for replication or erasure coding, QoS, snapshots, and expansion, MongoDB operators can standardize runbooks and reduce one-off tuning.

CPU efficiency matters as much as raw IOPS. Kernel-heavy paths and extra copies waste cycles that MongoDB could use for query work. Storage stacks built around user-space I/O and zero-copy design can reduce overhead and tighten p99 latency under load, especially in multi-tenant clusters.

Kubernetes-native persistence patterns for MongoDB

MongoDB typically runs best with stable identity and stable disks, so StatefulSets and PersistentVolumes matter. Replica set members should keep their volumes across restarts, and the scheduler should avoid frequent re-placement when possible. Storage choices should also account for fast rebuild and recovery operations, because resync time becomes a cost multiplier as data grows.
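
As a rough illustration of the StatefulSet-plus-PersistentVolume pattern, the sketch below builds a minimal manifest as a Python dictionary and prints it as JSON, which `kubectl` accepts. The StorageClass name `mongodb-nvme`, the replica set name `rs0`, the image tag, and the volume size are assumptions chosen for the example, not values from any particular platform. A matching headless Service is also required and is not shown.

```python
import json


def mongodb_statefulset(name="mongodb", replicas=3,
                        storage_class="mongodb-nvme", size="100Gi"):
    """Build a StatefulSet manifest with one PersistentVolumeClaim per member."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Name of the headless Service that gives each pod a stable DNS name.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "mongod",
                        "image": "mongo:7.0",  # assumed image tag
                        "args": ["--replSet", "rs0", "--bind_ip_all"],
                        "ports": [{"containerPort": 27017}],
                        "volumeMounts": [
                            {"name": "data", "mountPath": "/data/db"},
                        ],
                    }],
                },
            },
            # One claim per pod; it is kept when the pod restarts or is rescheduled.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "storageClassName": storage_class,  # hypothetical class name
                    "resources": {"requests": {"storage": size}},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(mongodb_statefulset(), indent=2))
```

With these defaults, Kubernetes names the claims `data-mongodb-0`, `data-mongodb-1`, and `data-mongodb-2`, so each replica set member reattaches to the same volume after a restart.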

Durability also depends on how the storage backend handles write ordering and latency. Journal writes need steady completion times, and checkpoint bursts should not starve foreground reads. For that reason, a storage class aimed at MongoDB benefits from explicit performance policy, controlled queue depth, and consistent replica placement across failure domains.

Kubernetes Storage for MongoDB under NVMe/TCP fabrics

NVMe/TCP carries NVMe commands over standard Ethernet, which makes it a practical fit for data centers that want NVMe-oF behavior without specialized RDMA networking. For MongoDB, the main value is consistent block access with high parallelism. Because it runs on existing switches and NICs, NVMe/TCP also simplifies operations in many environments while still delivering low latency and strong throughput for database workloads.

A Kubernetes platform can map MongoDB volumes onto NVMe/TCP-backed pools and use policy to separate hot and warm tiers. That approach supports hyper-converged layouts, where compute and storage share nodes, and disaggregated layouts, where storage nodes scale independently. Either way, the goal stays the same: steady latency for reads and journal writes, plus fast recovery during node events.

Benchmarking Kubernetes Storage for MongoDB with real workload signals

A useful benchmark mirrors the database’s behavior and captures latency distributions, not only averages. Block tests can validate IOPS and throughput, but MongoDB-specific testing should also measure end-to-end query latency, write concern timing, and replica catch-up under stress. Kubernetes adds another validation layer because rescheduling, node drains, and rolling upgrades can change I/O paths and contention patterns.

Strong benchmarks include steady-state load plus disruption tests. If performance drops sharply during a single node drain, the storage design is brittle, even if peak numbers look good in a quiet lab. Measure p50, p95, and p99, then repeat runs at different concurrency levels to reveal queueing effects.
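
The percentile comparison described above can be sketched in a few lines. This example uses the nearest-rank percentile definition and assumes latency samples have already been collected as plain numbers (for example, milliseconds per operation). The helper names `percentile`, `summarize`, and `drain_regression` are made up for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty sequence of latency samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    # Nearest-rank: smallest value with at least pct% of samples at or below it.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(samples):
    """Return the p50, p95, and p99 latencies for one benchmark run."""
    return {f"p{p}": percentile(samples, p) for p in (50, 95, 99)}


def drain_regression(steady, during_drain):
    """Ratio of p99 latency during a node drain to p99 in steady state."""
    return percentile(during_drain, 99) / percentile(steady, 99)


if __name__ == "__main__":
    steady = [1.0 + 0.01 * i for i in range(1000)]    # synthetic samples, ms
    drained = [2.0 + 0.05 * i for i in range(1000)]   # synthetic samples, ms
    print("steady :", summarize(steady))
    print("drained:", summarize(drained))
    print("p99 regression factor:", round(drain_regression(steady, drained), 2))
```

Running the same summary at several concurrency levels, and comparing the regression factor across storage configurations, gives a simple signal for how brittle a design is under disruption.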

Practical ways to improve MongoDB storage consistency in Kubernetes

Most performance gains come from reducing tail latency and preventing noisy-neighbor interference. The most reliable changes tend to be platform-level, not per-pod tweaks:

- Define a dedicated StorageClass for MongoDB with explicit performance policy and isolation.

- Use QoS controls to cap bursty tenants and protect database volumes from contention.

- Keep replica members on storage with consistent latency, and separate hot and warm pools when needed.

- Use snapshots and fast cloning to speed testing, rollback, and recovery workflows.

- Prefer architectures that reduce CPU overhead in the I/O path, especially at higher concurrency.

These steps make MongoDB behavior more repeatable across clusters and reduce the gap between staging and production.
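
A dedicated StorageClass along these lines might look like the sketch below, again built as a Python dictionary and printed as JSON for `kubectl`. The top-level fields are standard Kubernetes StorageClass fields. The provisioner name `csi.example.com` and every key under `parameters` are placeholders: each CSI driver defines its own parameter names, so check the driver's documentation for the real ones.

```python
import json


def mongodb_storage_class(name="mongodb-nvme",
                          provisioner="csi.example.com",
                          parameters=None):
    """Build a StorageClass manifest intended for MongoDB volumes."""
    # Hypothetical, driver-specific QoS and redundancy knobs (placeholders only).
    params = {
        "qosMaxIops": "20000",
        "qosMaxThroughputMiB": "500",
        "replicas": "2",
    }
    if parameters:
        # StorageClass parameters must be string-to-string.
        params.update({str(k): str(v) for k, v in parameters.items()})
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": params,
        "reclaimPolicy": "Retain",            # keep data if a claim is deleted
        "allowVolumeExpansion": True,         # permit online growth of volumes
        "volumeBindingMode": "WaitForFirstConsumer",
    }


if __name__ == "__main__":
    print(json.dumps(mongodb_storage_class(), indent=2))
```

Keeping this definition in version control and applying it unchanged to every cluster is one way to get the same storage behavior in staging and production.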

Choosing the right storage architecture for MongoDB workloads

The table below summarizes common Kubernetes storage approaches for MongoDB and how they typically behave when you factor in rescheduling, multi-tenancy, and tail latency.

| Storage approach | Best-fit scenario | Tail-latency stability | Operational complexity | Notes |
|---|---|---|---|---|
| Local NVMe with Local PV | Pinned workloads, strict node affinity | High until pods move | Medium | Fast, but mobility and failover require careful design |
| Traditional SAN over iSCSI/FC | Legacy environments | Medium | Medium | Shared arrays can introduce contention and noisy-neighbor effects |
| General-purpose SDS (shared block) | Mixed workloads | Medium–High | Medium–High | Results depend on policy, tuning, and tenant isolation |
| NVMe/TCP-backed Software-defined Block Storage | Performance-focused Kubernetes | High | High | Strong fit for stable p99 and scalable growth |

Running MongoDB on Simplyblock™ for consistent database I/O

Simplyblock targets steady database performance by combining NVMe/TCP connectivity, Kubernetes-first operations, and a scale-out design. An SPDK-based user-space I/O path can reduce CPU overhead and avoid kernel bottlenecks, which helps keep latency steadier under mixed read and write pressure. Multi-tenancy and QoS controls help protect MongoDB replicas from noisy neighbors, while flexible deployment models support hyper-converged, disaggregated, or mixed layouts in the same platform footprint.

This model also fits day-2 operations. Teams can standardize StorageClasses, keep behavior consistent during rolling upgrades, and scale capacity and performance without reworking the cluster for every growth step. As MongoDB data grows, repeatability matters more than chasing occasional peak benchmarks.

What’s next for MongoDB persistence on Kubernetes

Storage stacks are trending toward lower-overhead data paths, stronger isolation primitives, and more offload to DPUs and IPUs so the host CPU stays focused on database work.

Expect wider adoption of NVMe-oF transports in Ethernet-first environments, improved policy-based performance controls, and more automation around snapshots, clones, and rebuild workflows. For MongoDB-heavy clusters, the practical outcome is fewer latency spikes during routine operations, plus smoother scaling as tenant counts and dataset sizes rise.

Questions and Answers

MongoDB requires low-latency block storage with consistent IOPS for journaling and replication. Simplyblock’s Kubernetes storage platform delivers NVMe-backed persistent volumes designed for high-performance, stateful database workloads.

High storage latency increases write acknowledgment times and replication lag across MongoDB replica sets. Using NVMe over TCP helps maintain sub-millisecond latency, improving insert throughput and query responsiveness in production clusters.

MongoDB performs best on block storage due to predictable I/O patterns and reduced overhead. Simplyblock provides optimized block volumes for stateful Kubernetes workloads, ensuring stable performance under concurrent reads and writes.

High availability requires replicated volumes and fast failover to avoid data loss. Simplyblock’s scale-out storage architecture supports distributed replication, helping maintain resilience during node or pod failures.

Simplyblock combines NVMe performance with software-defined flexibility, offering encrypted, dynamically provisioned persistent volumes. Its distributed block storage architecture ensures consistent throughput and performance isolation for MongoDB replica sets in Kubernetes.