On-Prem vs Cloud Storage Performance

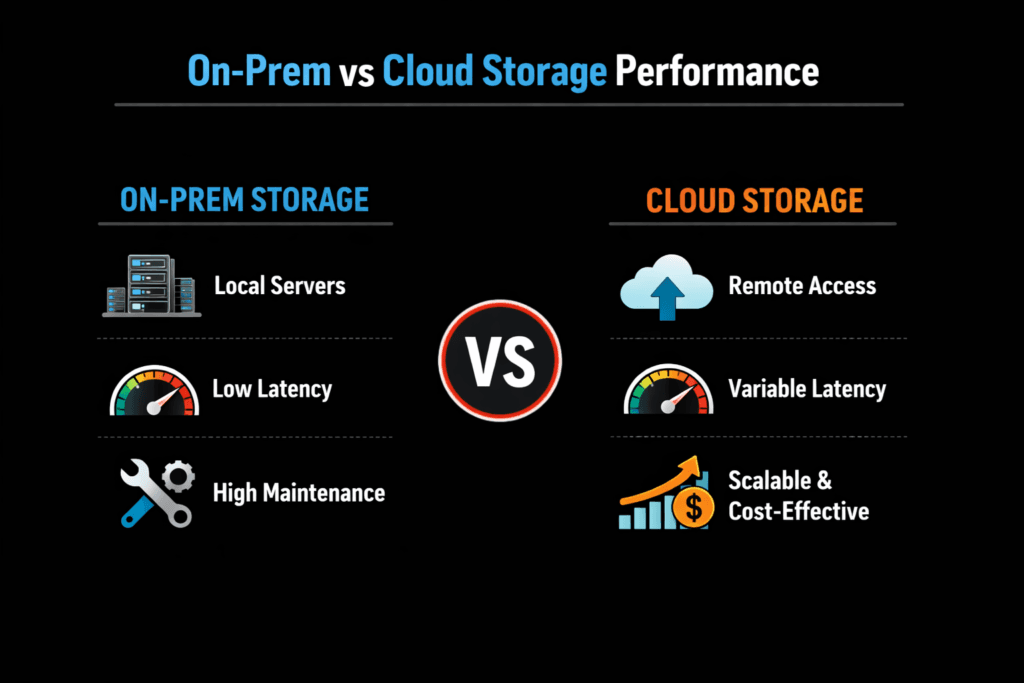

On-Prem vs Cloud Storage Performance compares how storage behaves when you run workloads on your own hardware versus a public cloud. Performance here means more than peak IOPS. It also includes p95 and p99 latency, write consistency, rebuild impact, and how often the system hits hidden limits.

On-prem environments often win on raw latency because NVMe sits close to the CPU, and the network path stays under your control. Cloud environments can still perform well, but you share underlying systems with other tenants, and you inherit service limits tied to instance type, disk type, and region. Those details matter most for databases, streaming systems, and search workloads that punish jitter.

For Kubernetes Storage leaders, the real question is simple: which model gives you repeatable outcomes for the same StatefulSet at a cost you can defend?

Optimizing On-Prem vs Cloud Storage Performance with Platform-Grade Storage

Teams get the best results when they optimize the full data path, not just the media. On-prem stacks often deliver strong latency until the kernel, interrupt load, or storage daemons eat CPU. Cloud stacks often deliver steady baseline performance until the workload hits a throughput cap, a burst bucket, or a noisy-neighbor event.

Software-defined Block Storage helps in both cases because it can enforce I/O policies, isolate tenants, and scale capacity without forcing a forklift upgrade. In Kubernetes Storage, that policy layer also reduces platform risk because you can treat storage as code through a StorageClass and a CSI driver, rather than as a one-off per cluster. The Container Storage Interface (CSI) specification has become the standard interface that lets Kubernetes integrate storage systems in a consistent way.
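To make "storage as code" concrete, here is a minimal sketch that builds a StorageClass manifest in Python and prints it as JSON. The top-level fields follow the Kubernetes `storage.k8s.io/v1` StorageClass schema. The provisioner name `csi.example.com` and the parameter keys `qosIopsLimit` and `replicas` are hypothetical placeholders, since each CSI driver defines its own parameters.

```python
import json


def storage_class(name, provisioner, parameters):
    """Build a Kubernetes StorageClass manifest as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,   # hypothetical CSI driver name
        "parameters": parameters,     # driver-specific; these keys are made up
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        # Delay volume binding until a pod is scheduled, so placement follows the pod.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


manifest = storage_class(
    name="fast-db",
    provisioner="csi.example.com",
    parameters={"qosIopsLimit": "20000", "replicas": "2"},
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, a generated file like this can sit in version control and be applied the same way in every cluster.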

On-Prem vs Cloud Storage Performance in Kubernetes Storage Deployments

On-Prem vs Cloud Storage Performance changes shape once you run the workload through Kubernetes Storage. Scheduling, node placement, and network topology can turn a fast device into a slow service.

On-prem Kubernetes clusters commonly run hyper-converged, where storage and compute share nodes, or disaggregated, where storage runs on dedicated nodes. Hyper-converged can cut network hops, but it also increases resource contention. Disaggregation reduces contention, but it makes the network part of the storage path.

In cloud Kubernetes, the “disk” often lives behind a managed block service, and the VM family, the disk class, and the region set hard limits. For example, providers document that disk performance depends on multiple factors, including disk type, size, and instance shape.
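One way to reason about these stacked limits is to treat the achievable rate as the tightest of the disk cap, the instance cap, and the throughput cap at a given block size. The sketch below assumes that simple model. The `Limit` type and every number in it are illustrative and do not describe any provider's real volume or instance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    iops: int               # maximum I/O operations per second
    throughput_mibps: int   # maximum throughput in MiB/s


def effective_limit(disk: Limit, instance: Limit, block_size_kib: int) -> dict:
    """Return the achievable IOPS and throughput under the tightest cap."""
    iops_cap = min(disk.iops, instance.iops)
    mibps_cap = min(disk.throughput_mibps, instance.throughput_mibps)
    # At a fixed block size, the throughput cap also bounds IOPS.
    iops_from_throughput = mibps_cap * 1024 // block_size_kib
    iops = min(iops_cap, iops_from_throughput)
    return {"iops": iops, "throughput_mibps": iops * block_size_kib / 1024}


disk = Limit(iops=16000, throughput_mibps=250)       # illustrative disk class
instance = Limit(iops=10000, throughput_mibps=500)   # illustrative VM shape

small_io = effective_limit(disk, instance, block_size_kib=4)
large_io = effective_limit(disk, instance, block_size_kib=64)
print(small_io)  # IOPS-bound by the instance cap
print(large_io)  # throughput-bound by the disk cap
```

With these made-up numbers, 4 KiB I/O stops at the instance's IOPS cap, while 64 KiB I/O stops at the disk's throughput cap well below either IOPS figure. That is why the same volume can look fast in one test and capped in another.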

On-Prem vs Cloud Storage Performance and NVMe/TCP Networking

On-Prem vs Cloud Storage Performance often comes down to whether the network and CPU can keep up with storage demand. NVMe/TCP matters because it brings NVMe-oF semantics over standard Ethernet, which fits most data centers and many private-cloud builds without an RDMA-only fabric.

With NVMe/TCP, CPU efficiency becomes a first-class performance lever. Simplyblock uses an SPDK-based, user-space, zero-copy datapath to reduce overhead in the hot path, helping keep CPU cost down and tail latency steadier as concurrency rises. This approach supports Kubernetes Storage patterns where you need fast volumes, plus isolation across teams and workloads.

Measuring and Benchmarking Storage Performance That Matters

Benchmarking should match the workload and capture percentiles. Average latency hides problems that break SLOs. Track p50, p95, and p99 latency, plus sustained throughput, and watch CPU usage on both the app nodes and the storage nodes.
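A short sketch shows why the average misleads. It uses the nearest-rank percentile method on a made-up sample in which 2 of 100 requests stall. The helper names `percentile` and `summarize` are illustrative and do not come from any benchmarking tool.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(latencies_ms):
    """Report the mean alongside the percentiles that SLOs usually track."""
    return {
        "mean": sum(latencies_ms) / len(latencies_ms),
        "p50": percentile(latencies_ms, 50),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }


# Made-up sample: 98 fast requests at 1 ms and 2 stalled requests at 50 ms.
latencies_ms = [1.0] * 98 + [50.0] * 2
summary = summarize(latencies_ms)
print(summary)
```

In this sample the mean stays under 2 ms and p95 still reads 1 ms, while p99 lands on the 50 ms stalls. A workload with a 10 ms SLO would pass on the first two numbers and fail on the third.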

Use two test layers. Synthetic tests show the raw block behavior and queue depth sensitivity. Workload tests show what users feel, including commit time, compaction stalls, and replica catch-up. Keep the Kubernetes manifests stable between runs so you do not “benchmark the scheduler.”

If you need cloud-specific baselines, start with provider documentation for block volume types and performance limits, then confirm with your own tests.

Approaches for Improving On-Prem vs Cloud Storage Performance

Use a small set of changes, measure each one, and keep the rollout controlled.

- Set clear p99 latency targets per workload class, and enforce them with QoS and rate limits.

- Reduce CPU overhead in the I/O path by preferring efficient user-space stacks where they fit, and by leaving headroom on busy nodes.

- In the cloud, pick disk classes that match your write pattern, and validate that the instance type can deliver the disk’s advertised limits.

- On-prem, avoid “hot” storage nodes by spreading tenants and replicas across failure domains, and by monitoring rebuild impact.

- Standardize Kubernetes Storage through CSI-backed StorageClasses so teams stop tuning storage in ad hoc ways.
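The rate-limit idea in the first item can be sketched with a token bucket, a common way to cap a tenant's I/O rate while still allowing short bursts. This is a generic illustration under that assumption, not a description of how simplyblock or any cloud provider implements QoS. Time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Admit I/O at a sustained rate, with a bounded burst allowance."""

    def __init__(self, rate_iops, burst):
        self.rate = rate_iops        # tokens added per second
        self.capacity = burst        # maximum tokens the bucket can hold
        self.tokens = float(burst)   # start with a full bucket
        self.last = 0.0              # timestamp of the previous call, in seconds

    def allow(self, now, cost=1):
        """Return True if an I/O of the given cost may proceed at time `now`."""
        elapsed = now - self.last
        self.last = now
        # Refill for the elapsed time, never beyond the bucket capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate_iops=100, burst=2)
decisions = [bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.5)]
print(decisions)
```

The first two requests use the burst allowance, the third is rejected because the bucket is empty, and the request half a second later succeeds after the refill. A real limiter would queue or delay rejected I/O instead of dropping it.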

Side-by-Side Performance Factors

The table below summarizes the most common performance tradeoffs teams see when they compare on-prem and cloud for block storage in Kubernetes.

| Dimension | On-Prem storage | Cloud block storage |
|---|---|---|
| p99 latency | Often lower when the local NVMe is close to the compute | Can be consistent, but jitter appears at caps or contention |
| Throughput scaling | You scale by adding nodes and network capacity | You scale by picking bigger disks and instances, within limits |
| CPU overhead | You control the full stack, so tuning matters | Provider handles much of the stack, but you still pay CPU on clients |
| Cost shape | CapEx plus ops, strong unit cost at scale | OpEx, easy start, cost can rise with provisioned performance |
| Control and compliance | Full control over placement and retention | Strong managed features, but less control over placement details |

Simplyblock™ for Consistent Results Across On-Prem and Cloud

Simplyblock™ targets consistent storage behavior for Kubernetes Storage by combining Software-defined Block Storage with NVMe/TCP support and an SPDK-based data path. That combination helps teams reduce CPU overhead, keep latency steadier under concurrency, and apply multi-tenant QoS so one workload does not drain I/O from another.

This matters in both models. On-prem, you can push closer to the hardware limits of NVMe while keeping the platform manageable. In cloud-adjacent or private-cloud builds, NVMe/TCP lets you run a familiar Ethernet fabric and still deliver high-performance block volumes to StatefulSets.

Future Directions in Storage Performance Comparison

Teams will keep pushing toward fewer moving parts in the hot path and tighter control of tail latency. Expect more disaggregated designs, more policy-driven Kubernetes Storage, and more use of DPUs and IPUs for offload in high-throughput environments. SPDK-style user-space paths will also keep gaining ground where CPU efficiency and predictable p99 matter.

Questions and Answers

On-prem storage typically delivers lower latency and higher IOPS because it runs on dedicated hardware with direct network access. Cloud storage can introduce variability due to multi-tenancy and shared infrastructure. Solutions based on distributed block storage architecture can bring cloud-like flexibility to on-prem environments.

On-prem NVMe storage often achieves microsecond-scale latency and consistent throughput. Platforms that use NVMe over TCP let enterprises match or exceed cloud performance while retaining infrastructure control.

Cloud storage performance can fluctuate due to noisy neighbors, shared bandwidth, and tier-based throttling. Enterprises seeking predictable results often deploy scale-out storage architectures on-prem or in hybrid environments to maintain consistent I/O performance.

High-end cloud tiers can approach on-prem performance, but at a higher cost. For latency-sensitive workloads like databases, using Kubernetes-native storage solutions with NVMe backends can provide predictable, high-speed performance across environments.

Simplyblock provides software-defined storage that runs on commodity hardware and supports Kubernetes and VM workloads. The platform lets enterprises get close to local NVMe performance on-prem while keeping cloud-native scalability and flexibility.