SAN vs NVMe over Fabrics

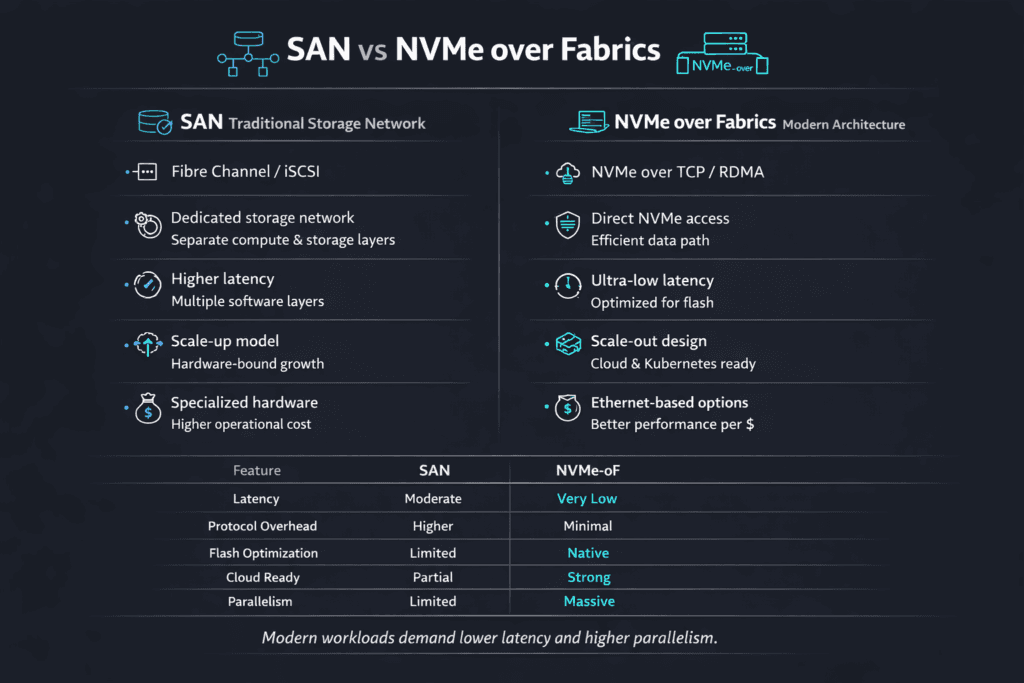

SAN vs NVMe over Fabrics compares two ways to deliver shared block storage to many servers. A traditional SAN usually uses Fibre Channel or iSCSI and a storage array with controllers, cache, and RAID. NVMe over Fabrics (NVMe-oF) extends the NVMe command set across a network, so hosts can reach remote NVMe media with less protocol overhead.

The real gap shows up in tail latency, CPU cost, and scaling behavior. SAN designs can deliver strong uptime and familiar ops, but controller bottlenecks and protocol layers often add jitter under load. NVMe-oF keeps the NVMe queue model across the fabric, which helps when you push high concurrency and many volumes.

Executives tend to ask one question: which option holds p99 latency steady while the business grows? Platform teams ask a second question: which option fits Kubernetes without adding another operations island?

Modern Shared-Storage Choices for Performance-Critical Workloads

A SAN can still make sense when you want mature workflows, strict change control, and known failure modes. Many teams already know how to run zoning, multipath, snapshots, and array upgrades. That maturity often comes with higher cost, more vendor lock-in, and less flexibility when app teams ask for rapid change.

NVMe-oF often wins when you need low latency and scale-out growth. It also fits disaggregated designs, where compute and storage scale on separate nodes. The key is the data path. A lean path keeps latency tight. A heavy path burns CPU and adds jitter, even with fast drives.

Software-defined Block Storage can help in both models because it turns performance and protection into policy. It also fits Kubernetes Storage well, since teams can express intent in a StorageClass and let the storage layer enforce it.
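As a rough illustration of expressing intent in a StorageClass, the sketch below builds a manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The top-level fields are standard StorageClass fields. The provisioner name `csi.example.com` and the two `parameters` keys are hypothetical placeholders, since parameter names are driver-specific and are not given in this article.

```python
import json


def storage_class(name, provisioner, iops_limit, throughput_mb_s):
    """Build a Kubernetes StorageClass manifest as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,  # hypothetical CSI driver name
        # Parameter keys are defined by each CSI driver. These two are
        # made up for illustration; values must be strings.
        "parameters": {
            "qosIopsLimit": str(iops_limit),
            "qosThroughputMBps": str(throughput_mb_s),
        },
        "reclaimPolicy": "Delete",
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


# One class per workload tier keeps tuning out of individual app manifests.
gold = storage_class("db-gold", "csi.example.com", 50_000, 800)
print(json.dumps(gold, indent=2))
```

App teams would then reference `db-gold` in a PersistentVolumeClaim and leave the limits to the storage layer.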

SAN vs NVMe over Fabrics in Kubernetes Storage

The SAN vs NVMe over Fabrics choice becomes more visible once you run stateful apps through Kubernetes Storage. Kubernetes changes volume lifecycle, node placement, and failure handling. It also increases churn because reschedules, scale events, and rolling updates happen often.

SAN-backed CSI drivers can work, but many teams hit friction when they scale clusters or isolate tenants. Shared controller pools can turn into shared pain when multiple teams compete for IOPS. NVMe-oF aligns well with Kubernetes patterns when the storage layer scales out and enforces QoS per volume or tenant.

Kubernetes Storage also makes latency variance expensive. One noisy neighbor can slow many StatefulSets if the platform lacks hard limits. Strong isolation matters as much as raw media speed.

SAN vs NVMe over Fabrics and NVMe/TCP

NVMe-oF supports several transports, and NVMe/TCP often becomes the default choice on standard Ethernet. NVMe/TCP avoids the need for an RDMA-capable fabric while still keeping NVMe semantics over the network. That makes rollout easier across many racks and many clusters.

NVMe/TCP puts pressure on CPU efficiency because, without offload, the host CPU handles TCP processing on top of the storage work. That is where SPDK-style user-space I/O helps. Simplyblock uses an SPDK-based, zero-copy approach to cut context switches and reduce overhead in the hot path. Lower overhead typically means steadier p99 latency at high queue depth, and it also leaves more CPU for apps and control-plane work.

Benchmarking Storage – Latency, IOPS, and CPU Cost

Benchmarks should reflect real workload shapes and measure percentiles. Average latency hides the spikes that break SLOs. Track p50, p95, and p99 for reads and writes, then track CPU on both app nodes and storage nodes.
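A minimal sketch of why averages mislead, using made-up latency samples and a nearest-rank percentile. The sample values and the `latency_summary` helper are illustrative assumptions, not output from any real benchmark.

```python
import statistics


def latency_summary(samples_us):
    """Return mean and p50/p95/p99 for latency samples in microseconds."""
    ordered = sorted(samples_us)

    def pct(p):
        # Nearest-rank percentile: the smallest sample with at least
        # p percent of all samples at or below it.
        rank = max(1, -((-len(ordered) * p) // 100))  # ceiling division
        return ordered[rank - 1]

    return {
        "mean": statistics.fmean(ordered),
        "p50": pct(50),
        "p95": pct(95),
        "p99": pct(99),
    }


# Made-up run: 97 fast I/Os at 200 us plus 3 stalls at 20 ms.
samples = [200] * 97 + [20_000] * 3
summary = latency_summary(samples)
print(summary)
```

Here the mean stays under one millisecond (794 µs) and p50 and p95 both read 200 µs, while p99 lands on the 20 ms stalls. Only the tail percentile exposes the spikes.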

Use two test layers. A synthetic tool shows queue depth behavior and saturation points. A workload test shows what users feel, such as database commit time, log flush stalls, or index read latency. Keep your Kubernetes manifests stable across runs, or you will end up measuring scheduling changes instead of storage changes.
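When planning the synthetic layer, Little's Law gives a quick sanity check on queue depth: in steady state, throughput equals outstanding I/Os divided by mean latency. The sketch below applies it with example numbers that are assumptions chosen for illustration only.

```python
def expected_iops(outstanding_ios, mean_latency_us):
    """Little's Law: steady-state throughput = concurrency / mean latency."""
    return outstanding_ios * 1_000_000 / mean_latency_us


def required_outstanding(target_iops, mean_latency_us):
    """Concurrency needed to sustain target_iops at a given mean latency."""
    return target_iops * mean_latency_us / 1_000_000


# Example: 4 jobs at queue depth 32 with 500 us mean latency.
iops = expected_iops(4 * 32, 500)
# Example: what concurrency sustains 100k IOPS at 200 us?
depth = required_outstanding(100_000, 200)
print(iops, depth)
```

If a measured result falls far below this estimate, the test is probably limited by something other than queue depth, such as CPU, network, or a controller.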

When you compare SAN and NVMe-oF, look for the “knee.” Many SAN stacks hold steady until the controllers saturate. Many NVMe-oF stacks hold steady until CPU or network paths saturate. Those limits drive real cost and sizing.
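One simple way to locate the knee in a queue-depth sweep is to find the last step before throughput stops growing meaningfully. The heuristic, the 10% threshold, and the sweep data below are illustrative assumptions, not a standard method or measured results.

```python
def find_knee(points, min_gain=0.10):
    """Return the last point before per-step throughput gain drops below min_gain.

    points: list of (queue_depth, iops, p99_us), ordered by queue depth.
    """
    for prev, cur in zip(points, points[1:]):
        gain = (cur[1] - prev[1]) / prev[1]
        if gain < min_gain:
            return prev
    # Throughput never flattened: the sweep did not reach saturation.
    return points[-1]


# Made-up sweep: IOPS flatten after queue depth 16 while p99 keeps climbing.
sweep = [
    (1, 20_000, 180),
    (4, 75_000, 210),
    (16, 240_000, 260),
    (32, 250_000, 520),
    (64, 252_000, 1_050),
]
knee = find_knee(sweep)
print(knee)
```

In this example the knee sits at queue depth 16. Past that point, extra concurrency buys almost no throughput and roughly doubles p99 at each step, so sizing should target load at or below the knee.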

Practical Ways to Improve Fabric-Based Block Storage

Apply a small set of actions, and measure each change. That keeps the comparison honest and easy to repeat.

- Set clear p99 targets per workload class, and enforce them with QoS and rate limits.

- Validate multipath, queue depth, and timeout settings so retries do not amplify load.

- Reserve CPU headroom on storage nodes and app nodes, especially with NVMe/TCP.

- Separate noisy tenants with hard limits, not only best-effort pools.

- Standardize Kubernetes Storage via StorageClasses, so teams stop tuning by hand.
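To make "hard limits" concrete, here is a minimal token-bucket sketch, one common rate-limiting technique. It is not a description of how simplyblock or any SAN array implements QoS; the class, its parameters, and the numbers are assumptions for illustration.

```python
class TokenBucket:
    """Admit requests at up to `rate` per second, with bursts up to `burst`."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)  # start with a full bucket
        self.last = now

    def allow(self, now, cost=1):
        # Refill in proportion to elapsed time, capped at the burst size.
        elapsed = now - self.last
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# A tenant limited to 2000 IOPS with a 1000-I/O burst allowance.
bucket = TokenBucket(rate=2000, burst=1000)
# 5000 requests arrive at once: only the burst allowance is admitted.
admitted_burst = sum(bucket.allow(now=0.0) for _ in range(5000))
# 250 ms later another 5000 arrive: only the refilled tokens are admitted.
admitted_later = sum(bucket.allow(now=0.25) for _ in range(5000))
print(admitted_burst, admitted_later)
```

The noisy tenant gets 1000 I/Os immediately and 500 more after a quarter second, no matter how many it submits, which leaves the remaining capacity for other tenants.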

Side-by-Side Performance Factors

The table below highlights the tradeoffs teams usually see when they compare a traditional SAN to NVMe over Fabrics for shared block storage in Kubernetes.

| Dimension | Traditional SAN (FC/iSCSI) | NVMe over Fabrics (NVMe-oF) |
|---|---|---|
| Tail latency (p99) | Can rise under controller pressure | Often tighter when tuned and isolated |
| Parallel I/O | May bottleneck at controllers | NVMe queue model scales with concurrency |
| CPU efficiency | Often hides cost in array controllers | Shifts focus to host and target CPU paths |
| Network approach | Dedicated FC or IP storage network | Ethernet with NVMe/TCP, plus RDMA or NVMe/FC options |
| Kubernetes fit | Works, but can feel rigid at scale | Often matches CSI lifecycles and fast provisioning |

Consistent Database QoS with Simplyblock™ Software-defined Block Storage

Simplyblock™ provides Software-defined Block Storage for Kubernetes Storage with NVMe/TCP support, multi-tenancy, and QoS controls. That mix helps teams replace “best effort” shared storage with policy-backed behavior that holds up under concurrency.

The SPDK-based data path reduces overhead in the hot path. That matters when you run many volumes, many tenants, and many threads. With NVMe/TCP, simplyblock can deliver SAN-like shared storage on Ethernet while keeping operations aligned with Kubernetes workflows.

What Comes Next for SAN vs NVMe over Fabrics

SAN vs NVMe over Fabrics will stay a real choice for years. Many enterprises will keep SAN for stable legacy estates. Many platforms will use NVMe-oF for new stateful workloads that demand low latency and scale-out growth.

Expect broader use of NVMe/TCP in Ethernet-first data centers, more NVMe/FC where Fibre Channel already exists, and more offload to DPUs and IPUs for data-path work. The winning designs will keep p99 tight, enforce tenant fairness, and scale without forklift upgrades.

Related Terms

Short companion terms teams review during SAN vs NVMe over Fabrics evaluations:

- Storage Area Network (SAN)

- NVMe-oF (NVMe over Fabrics)

- NVMe over TCP (NVMe/TCP)

- SPDK (Storage Performance Development Kit)

Questions and Answers

A traditional SAN relies on centralized storage arrays using Fibre Channel or iSCSI, while NVMe over Fabrics (NVMe-oF) extends the NVMe protocol across network transports such as TCP, RDMA, or Fibre Channel. Architectures built on distributed block storage remove the single-controller bottlenecks common in legacy SAN systems.

NVMe-oF is generally faster than a traditional SAN. It reduces protocol overhead and supports parallel I/O queues, which typically yields lower latency and higher IOPS than most SAN deployments. Implementations using NVMe over TCP can approach local NVMe performance over standard Ethernet networks.

NVMe over Fabrics does not necessarily need dedicated hardware. While a SAN often requires dedicated Fibre Channel switches and HBAs, NVMe over Fabrics, especially in TCP-based deployments, runs on commodity Ethernet. This makes it more flexible and scalable within a scale-out storage architecture.

NVMe over Fabrics is often better suited for Kubernetes because scale-out NVMe-oF storage pairs well with CSI-driven provisioning and distributed scaling. Simplyblock’s Kubernetes-native storage platform enables high-performance persistent volumes without SAN complexity.

Simplyblock replaces traditional SAN arrays with software-defined NVMe over Fabrics storage. Its SAN replacement architecture provides enterprise features like replication and encryption while delivering higher performance and simpler operations.