Hyperconverged vs Disaggregated Storage

Terms related to simplyblock

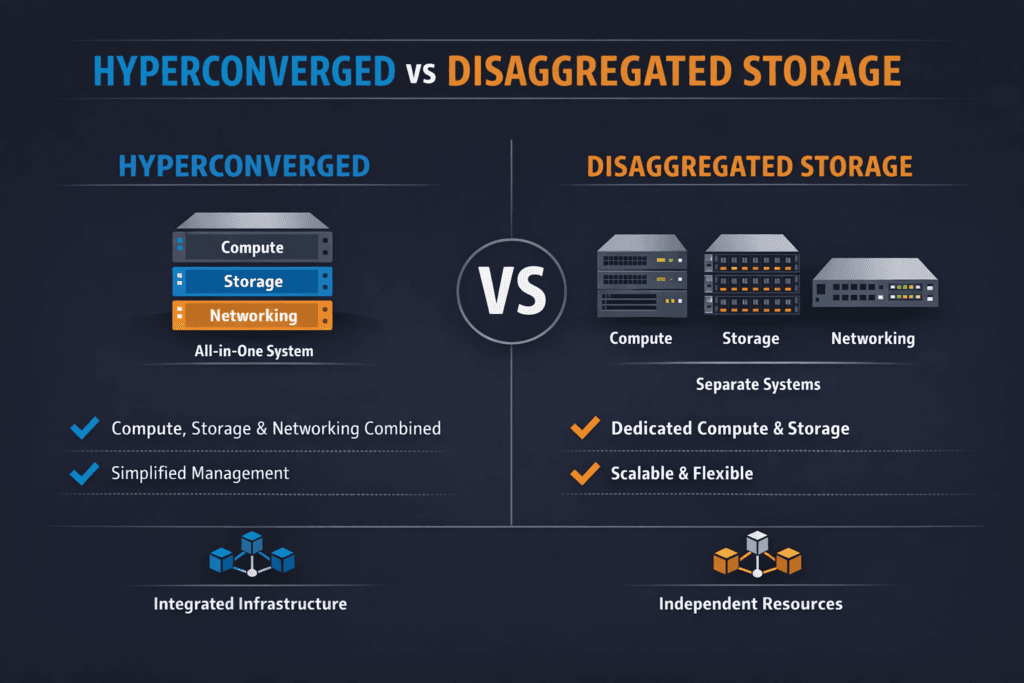

Hyperconverged vs Disaggregated Storage compares two ways to place compute and storage in a platform. Hyperconverged storage runs storage services on the same nodes that run applications, which keeps I/O close and can cut network hops. Disaggregated storage separates storage nodes from compute nodes, which lets each layer scale on its own and improves capacity pooling.

In Kubernetes Storage, this choice shapes day-two work as much as it shapes performance. A cluster that runs many stateful workloads needs fast provisioning, clean failure behavior, and stable tail latency under mixed load. Software-defined Block Storage helps when it applies the same policy, QoS, and automation across both topologies.

When Locality Wins and When Pooling Wins

Teams pick hyperconverged designs when they want tight locality, simpler, small-footprint installs, and clear ownership at the node level. The model fits edge clusters, smaller data centers, and platforms where each node group maps to a specific service. It also reduces surprise network costs because most reads and writes stay inside the node.

Teams pick disaggregated designs when they want higher fleet use, easier growth, and stronger separation between app compute and storage duties. This model supports large shared clusters where storage grows faster than compute, or where several teams share one storage pool. It also lines up with a SAN alternative strategy because the storage layer becomes a service, not a per-node feature.

Both models can work. The best choice depends on whether your main limit is node-local CPU and NVMe slots, or pooled capacity and independent scaling.

🚀 Run Hyperconverged or Disaggregated Kubernetes Storage on NVMe/TCP

Use Simplyblock to switch topologies without reworking your app stack, and keep tail latency in check.

👉 Use Simplyblock for Kubernetes Storage →

Hyperconverged vs Disaggregated Storage for Kubernetes Storage

Kubernetes Storage makes the trade-off more concrete because pods move, nodes drain, and PVCs attach and detach all day. Hyperconverged layouts often deliver low latency for hot workloads because the data path stays short. They can also suffer when storage services compete with apps for CPU, cache, and PCIe lanes on the same host.

Disaggregated layouts make scheduling easier because compute nodes focus on apps, and storage nodes focus on I/O. They also raise the importance of the network path because every read and write crosses the fabric. If the network jitters, p99 latency jitters, too. That is why topology rules, tenant isolation, and QoS matter more in disaggregated Kubernetes Storage.

Software-defined Block Storage can support either model, plus mixed layouts where hot tiers run hyperconverged and shared capacity runs disaggregated.

Hyperconverged vs Disaggregated Storage with NVMe/TCP Fabrics

NVMe/TCP brings NVMe-oF semantics over standard Ethernet, so teams can run a high-performance block fabric without forcing a specialized network everywhere. In disaggregated designs, NVMe/TCP often becomes the main lever for keeping latency and throughput stable as the platform grows. In hyperconverged designs, NVMe/TCP can connect nodes into a shared pool while still allowing locality-first placement.

The storage data path matters as much as the transport. SPDK-style user-space I/O reduces overhead, cuts extra copies, and can improve IOPS per core. That CPU efficiency helps in hyperconverged clusters where apps and storage share hosts, and it helps in disaggregated clusters where many compute nodes generate parallel I/O streams.

If you want Kubernetes Storage that stays steady under churn, NVMe/TCP plus a lean data path usually beats heavier legacy stacks.

Hyperconverged vs Disaggregated Storage Performance Testing

A good benchmark plan shows where each topology breaks first. Average IOPS hides the problem in shared clusters, so measure percentiles, queue behavior, and CPU use.

Test the storage layer with mixed random read/write profiles and step up the queue depth until latency stops behaving. Record p50, p95, and p99 latency, and chart CPU use on both initiator and target nodes. Then repeat inside Kubernetes Storage against PVCs, and include reschedules, rolling updates, and rebuild work in the run.

Also, add a noisy-neighbor test. Run one workload that floods I/O while another workload tries to hold a latency SLO. That scenario exposes whether your Software-defined Block Storage layer can enforce fairness.

Operational levers that reduce tail latency

Use this short checklist to cut latency spikes in either topology:

- Set p95 and p99 targets per workload tier, and alert on drift, not just outages.

- Align pod placement with storage placement so the platform avoids extra hops by default.

- Enforce per-volume or per-tenant QoS so batch work cannot starve transactional services.

- Keep the I/O path lean, and track CPU-per-IOPS as a first-class metric.

- Validate failover and rebuild behavior under load, because recovery changes the latency curve.

Side-by-side comparison of Kubernetes-ready storage topologies

This table highlights the trade-offs that usually matter most for executives and platform owners.

| Dimension | Hyperconverged | Disaggregated |

|---|---|---|

| Latency profile | Often lower due to locality | Depends heavily on fabric stability |

| Scaling | Scale compute and storage together | Scale compute and storage independently |

| Resource contention | Shared CPU and PCIe with apps | Cleaner separation of duties |

| Failure blast radius | Node loss affects both layers | Storage node loss isolates from compute |

| Fleet utilization | Can strand capacity on nodes | Better pooling across clusters |

| Kubernetes Storage fit | Strong for hot tiers and smaller footprints | Strong for shared platforms and growth |

| NVMe/TCP impact | Enables pooling without abandoning locality | Core transport for predictable remote block |

Simplyblock™ for topology choice without re-platforming

Simplyblock™ supports Kubernetes Storage across hyperconverged, disaggregated, and mixed deployments, so teams can match topology to each workload tier. NVMe/TCP provides a consistent fabric for remote volumes, and simplyblock’s SPDK-based user-space data path reduces overhead for high-parallel I/O. That approach helps control tail latency while keeping CPU headroom for applications.

For shared clusters, simplyblock adds multi-tenancy and QoS controls so one namespace does not consume the entire I/O budget. For platform roadmaps, it also supports a SAN alternative strategy through Software-defined Block Storage that runs on standard hardware and scales by adding nodes.

Where platforms are heading next

Kubernetes Storage roadmaps keep pushing toward stronger topology awareness, stricter isolation, and faster recovery workflows. Mixed designs will become more common because teams want node-local performance for hot paths and pooled capacity for everything else.

DPUs and IPUs will also matter more as clusters grow, because CPU cost often becomes the limit before NVMe media does. NVMe/TCP should stay a common baseline since it fits standard Ethernet operations and supports scale-out growth.

Related Terms

These glossary pages support Kubernetes Storage, NVMe/TCP, and Software-defined Block Storage decisions.

- Pod Affinity and Storage

- Storage IO Path in Kubernetes

- Fio NVMe over TCP Benchmarking

- NVMe Multipathing

Questions and Answers

Hyperconverged infrastructure (HCI) tightly couples compute and storage within the same nodes, while disaggregated storage separates them into independent layers. Modern platforms based on distributed block storage architecture allow independent scaling of compute and storage resources.

Disaggregated storage scales more efficiently because compute and storage can grow independently. In contrast, hyperconverged systems require adding full nodes even if only storage is needed. Architectures built on scale-out storage design avoid this inefficiency.

Yes. Kubernetes benefits from flexible scaling and dynamic provisioning. Simplyblock’s Kubernetes-native storage platform supports disaggregated storage models that align with containerized, cloud-native architectures.

NVMe over TCP enables high-performance block storage over standard Ethernet, making it ideal for separating storage from compute without sacrificing latency or throughput.

Enterprises seek better cost efficiency, independent scaling, and improved performance isolation. Simplyblock’s software-defined storage platform delivers these benefits while maintaining enterprise features like replication and encryption.