Persistent Storage for Kubernetes Databases

Terms related to simplyblock

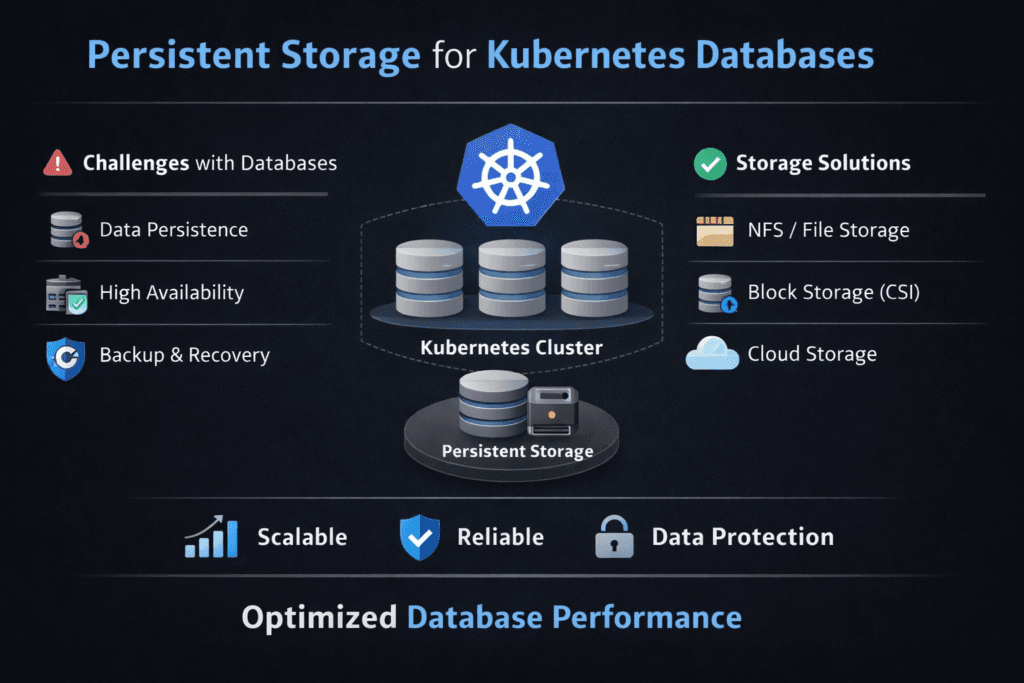

Persistent Storage for Kubernetes Databases means a database keeps its data and performance even when pods restart, nodes drain, or clusters scale. It covers durability, data placement, and the I/O path from the database process to the block device. Teams choose it because database nodes churn in Kubernetes, but data must not.

This topic sits at the center of any Kubernetes Storage strategy. Databases push small, random I/O, they write logs in tight loops, and they punish latency spikes. A strong Software-defined Block Storage layer gives you consistent behavior across clusters, bare-metal nodes, and cloud instances, without tying you to a single array.

Why Databases Break First When Storage Wobbles

Databases show storage flaws early. They write WAL or redo logs with strict ordering. They flush pages under pressure. They spike I/O during compaction, index builds, vacuum, or checkpoint.

A storage layer that looks fine for stateless services can fall apart under database patterns. You might see slow commits, rising replication lag, or sudden timeouts. These failures rarely come from "not enough IOPS" alone. They often come from tail latency, queue contention, or rebuild work stealing bandwidth at the wrong time.

Persistent Storage for Kubernetes Databases and PVC-to-Volume Mapping

Kubernetes turns database storage into policy. A StorageClass sets the rules. A PVC requests capacity and access mode. The CSI driver creates and attaches the volume to the node that runs the pod.
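To make the StorageClass-to-PVC relationship concrete, here is a minimal sketch that builds both objects as Python dicts and prints them as a Kubernetes `List` manifest. The field names (`volumeBindingMode`, `allowVolumeExpansion`, `reclaimPolicy`, `accessModes`, `storageClassName`) are standard Kubernetes API fields; the names `db-nvme`, `csi.example.com`, and `pg-data-0` are hypothetical placeholders, not the identifiers of any real driver.

```python
import json

# Hypothetical names for illustration: "db-nvme", "csi.example.com", and
# "pg-data-0" are placeholders, not the identifiers of any real CSI driver.

def storage_class(name: str, provisioner: str) -> dict:
    """StorageClass: the policy that every database volume inherits."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        # Delay provisioning until a pod is scheduled, so placement follows the pod's topology.
        "volumeBindingMode": "WaitForFirstConsumer",
        # Allow PVCs from this class to grow later.
        "allowVolumeExpansion": True,
        # Keep the backing volume if the PVC is deleted by mistake.
        "reclaimPolicy": "Retain",
    }

def pvc(name: str, storage_class_name: str, size: str) -> dict:
    """PersistentVolumeClaim: one database pod's request for capacity and access mode."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }

sc = storage_class("db-nvme", "csi.example.com")
claim = pvc("pg-data-0", sc["metadata"]["name"], "100Gi")
# A v1 List wraps both objects so one JSON document can be passed to kubectl apply -f.
manifest = {"apiVersion": "v1", "kind": "List", "items": [sc, claim]}
print(json.dumps(manifest, indent=2))
```

In a StatefulSet, the same PVC spec usually lives under `volumeClaimTemplates`, so each replica gets its own claim from the same class.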

Good mapping keeps the database predictable. It avoids surprise rebinds. It keeps topology rules clear. It makes expansion a routine task instead of a weekend event.
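Routine expansion usually means raising `spec.resources.requests.storage` on the PVC, which works when the StorageClass sets `allowVolumeExpansion: true` and the CSI driver supports resizing. Kubernetes does not support shrinking a bound PVC, so this sketch refuses that up front. It is an illustration only: the helper names are hypothetical, and the parser handles just the binary suffixes `Ki`, `Mi`, `Gi`, and `Ti`, not the full Kubernetes quantity grammar.

```python
import re

# Binary suffixes from Kubernetes quantities (subset; decimal suffixes are not handled).
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}

def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '100Gi' to bytes."""
    m = re.fullmatch(r"(\d+)(Ki|Mi|Gi|Ti)", quantity)
    if m is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    return int(m.group(1)) * _UNITS[m.group(2)]

def expansion_patch(current: str, requested: str) -> dict:
    """Build a merge patch that grows a PVC; reject anything that is not a strict increase."""
    if to_bytes(requested) <= to_bytes(current):
        raise ValueError(f"{requested} is not larger than {current}")
    return {"spec": {"resources": {"requests": {"storage": requested}}}}

print(expansion_patch("100Gi", "200Gi"))
```

The resulting dict, serialized as JSON, is the shape `kubectl patch pvc <name> --type merge -p '<json>'` expects.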

Mapping also carries business risk. If the mapping fails during an upgrade, the database stays down. If the mapping drifts, recovery takes longer. If the mapping lets noisy neighbors compete for the same I/O resources, the platform can cause outages without any node failing.

Persistent Storage for Kubernetes Databases and NVMe/TCP Data Paths

NVMe/TCP helps databases in Kubernetes because it delivers NVMe-class semantics over standard Ethernet. It also supports scale-out designs that keep storage pools shared while compute nodes scale independently. That matters when a database fleet grows faster than the cluster layout.

The data path still matters more than the protocol name. Databases benefit when the storage fast path stays lean, avoids extra copies, and uses CPU efficiently. If the path burns CPU per I/O, latency rises under load. If the path adds jitter, the commit time becomes unstable.
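Little's law makes the latency-versus-load point concrete: at a fixed number of outstanding I/Os, throughput equals queue depth divided by per-I/O latency. The numbers below are illustrative, not measurements of any system.

```python
def iops_at_fixed_concurrency(queue_depth: int, latency_ms: float) -> float:
    """Little's law: outstanding I/Os = IOPS x latency, so IOPS = queue depth / latency."""
    return queue_depth / (latency_ms / 1000.0)

# Illustrative numbers: same concurrency, two per-I/O latencies.
lean = iops_at_fixed_concurrency(queue_depth=8, latency_ms=0.1)
heavy = iops_at_fixed_concurrency(queue_depth=8, latency_ms=0.2)
print(f"lean path: {lean:,.0f} IOPS; heavier path: {heavy:,.0f} IOPS")
```

Doubling per-I/O latency from 0.1 ms to 0.2 ms halves throughput at the same concurrency (about 80,000 versus 40,000 IOPS). Put the other way, holding IOPS steady on a slower path requires deeper queues, which is where contention and jitter build up.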

Performance Signals That Matter for Stateful I/O

When you size storage for databases, average latency misleads. A database can look fine at p50 and still miss SLOs at p99. Focus on signals that track user impact.

Use a short set of checks and keep them consistent across test runs:

- p95 and p99 latency for reads and writes

- WAL or redo log write latency

- steady IOPS at fixed concurrency

- throughput during checkpoints or compaction

- latency change during rebuild or rebalance
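The gap between average and tail latency shows up quickly on synthetic data. This sketch uses the nearest-rank percentile definition; benchmark tools may use other definitions or interpolation, so compare numbers only within the same tool.

```python
import math
import statistics

def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

# Synthetic write latencies in ms: 97 fast writes and 3 slow ones.
writes = [0.2] * 97 + [8.0, 9.0, 12.0]
print(f"mean: {statistics.fmean(writes):.3f} ms")
for p in (50, 95, 99):
    print(f"p{p}: {percentile(writes, p)} ms")
```

Here the mean stays under 0.5 ms and p95 sits at 0.2 ms, while p99 reaches 9.0 ms: three slow writes out of a hundred are enough for commit-heavy workloads to miss an SLO that the average says they meet.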

Tuning Moves That Improve Database Stability

Start with the basics, then tune the platform with intent. Place hot volumes on the right media tier. Set QoS so background work cannot crush the write path. Keep rebuild limits strict during peak hours.
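One common way to keep background work from crushing the write path is a token bucket on rebuild or rebalance I/O. The sketch below is a generic illustration of that technique, not how any particular storage product implements QoS; the rate and burst values are hypothetical, and time is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass

@dataclass
class TokenBucket:
    """Caps background I/O (rebuild, rebalance) at `rate` ops/s with bursts up to `burst`."""
    rate: float         # tokens added per second
    burst: float        # bucket capacity
    tokens: float = 0.0
    last: float = 0.0   # time of the last refill, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller defers this background I/O

# Hypothetical limit: 1,000 rebuild ops/s with bursts of 100, starting full.
bucket = TokenBucket(rate=1000, burst=100, tokens=100)
first = sum(bucket.allow(0.0) for _ in range(200))   # burst drains at t = 0
later = sum(bucket.allow(0.05) for _ in range(200))  # 50 ms of refill
print(first, later)
```

A stricter limit during peak hours is just a lower `rate`; foreground database I/O bypasses the bucket entirely in this model.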

Also, align storage settings with database behavior. Some engines want fast fsync. Some want high write bandwidth. Some value read cache more than raw write IOPS. Match the storage profile to the engine, then validate it with real queries and realistic concurrency.
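A quick way to check whether a volume suits an engine that wants fast fsync is to time small append-plus-fsync cycles, a rough stand-in for WAL commits. This is a hedged sketch, not a replacement for a real benchmark: the 8 KiB block size is an assumption, and by default it writes to the system temp directory, so pass `directory` pointing at the mounted persistent volume when testing real storage.

```python
import os
import statistics
import tempfile
import time
from typing import Optional

def fsync_latencies_ms(n: int = 50, block: int = 8192,
                       directory: Optional[str] = None) -> list[float]:
    """Append `block` bytes and fsync `n` times; return each fsync's latency in ms."""
    payload = os.urandom(block)
    latencies = []
    with tempfile.NamedTemporaryFile(dir=directory) as f:
        for _ in range(n):
            f.write(payload)
            f.flush()  # move Python's buffer into the OS page cache
            start = time.perf_counter()
            os.fsync(f.fileno())  # ask the OS to push the data to stable storage
            latencies.append((time.perf_counter() - start) * 1000)
    return latencies

lat = fsync_latencies_ms()
print(f"median {statistics.median(lat):.3f} ms, max {max(lat):.3f} ms")
```

Run it with realistic concurrency alongside the database's own workload; an idle-volume result only sets a lower bound.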

Options Side-by-Side for Running Databases on Kubernetes

The table below compares common approaches for running database storage in Kubernetes. It focuses on operational risk, latency stability, and how teams scale.

| Approach | Latency stability | Day-2 operations | Failure recovery | Best fit |
|---|---|---|---|---|
| Local SSD on each node | Strong until a node fails | Hard during upgrades and reschedules | Depends on database-level replication; data on a lost node is gone | Single-node, edge, heavy caching |
| Cloud block volumes | Varies by tier and neighbor load | Easy to start, limits show later | Provider-driven recovery paths | Small to mid fleets |
| Traditional SAN | Often steady, depends on fabric | Slower change cycles | Strong, but tooling can be rigid | Central IT, stable environments |
| Software-defined block storage on NVMe nodes | Strong with QoS and clean I/O path | Fast automation and expansion | Fast rebuild with clear policies | Multi-tenant database platforms |

Persistent Storage for Kubernetes Databases with Simplyblock™

Simplyblock™ targets database-grade behavior by combining Software-defined Block Storage with Kubernetes-native operations. It supports NVMe/TCP, multi-tenancy, and QoS so you can protect the database write path while the platform handles routine work like scaling and recovery.

Its SPDK-based, user-space fast path helps reduce overhead in the I/O stack. That CPU efficiency matters for database fleets because every extra copy and context switch shows up as tail latency. With the right policies, teams can scale capacity, keep commit time stable, and avoid rebuild storms that look like outages.
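The CPU-efficiency argument can be checked with back-of-envelope arithmetic: one core has 1,000,000 µs per second, so the CPU time each I/O consumes sets a ceiling on IOPS per core. The per-I/O costs below are illustrative assumptions, not measured figures for any product.

```python
import math

def cores_for_iops(target_iops: float, cpu_us_per_io: float) -> int:
    """Cores needed to drive `target_iops` when each I/O costs `cpu_us_per_io` µs of CPU."""
    ios_per_core = 1_000_000 / cpu_us_per_io  # one core offers 1,000,000 µs per second
    return math.ceil(target_iops / ios_per_core)

# Illustrative per-I/O CPU costs only.
for cost_us in (5, 20):
    print(f"{cost_us} µs/IO -> {cores_for_iops(500_000, cost_us)} cores for 500k IOPS")
```

At 5 µs per I/O, 500,000 IOPS needs 3 cores; at 20 µs it needs 10. Cores spent on the I/O stack are cores the database cannot use, and a busier CPU also queues I/O longer, which feeds tail latency.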

Where Database Storage on Kubernetes Goes Next

Database platforms will push harder on guardrails. Teams will codify storage SLOs, enforce per-volume QoS, and treat p99 as a first-class KPI. More stacks will offload parts of the data path to DPUs or IPUs to reduce jitter.

At the same time, more organizations will standardize the protocol layer. NVMe/TCP fits that trend because it works on common Ethernet and scales across disaggregated designs. The winners will pair that transport with a fast data path, strict isolation, and simple day-2 operations.

Related Terms

Teams reference these related terms when building durable, low-latency database storage in Kubernetes.

Kubernetes Storage for PostgreSQL

Kubernetes StatefulSet

Storage Quality of Service (QoS)

Write-Ahead Log (WAL)

Questions and Answers

Kubernetes databases require low-latency, high-IOPS block storage with replication and durability. Simplyblock provides Kubernetes-native storage backed by NVMe over TCP, ensuring consistent performance and high availability for stateful database workloads.

Persistent storage directly impacts transaction latency, commit speed, and replication consistency. Slow volumes can bottleneck even optimized databases. Architectures built on distributed block storage maintain predictable I/O performance across nodes.

Block storage is recommended for databases because it offers lower overhead and predictable I/O behavior. Simplyblock supports optimized volumes for stateful Kubernetes workloads that demand high throughput and minimal latency.

High availability requires synchronous replication, automatic failover, and resilient volume management. A scale-out storage architecture ensures persistent volumes remain accessible even during node or hardware failures.

Simplyblock delivers NVMe-backed, software-defined storage with encryption, replication, and CSI integration. Its NVMe over TCP platform enables sub-millisecond latency and scalable performance for production database clusters.