Ceph vs NVMe over TCP

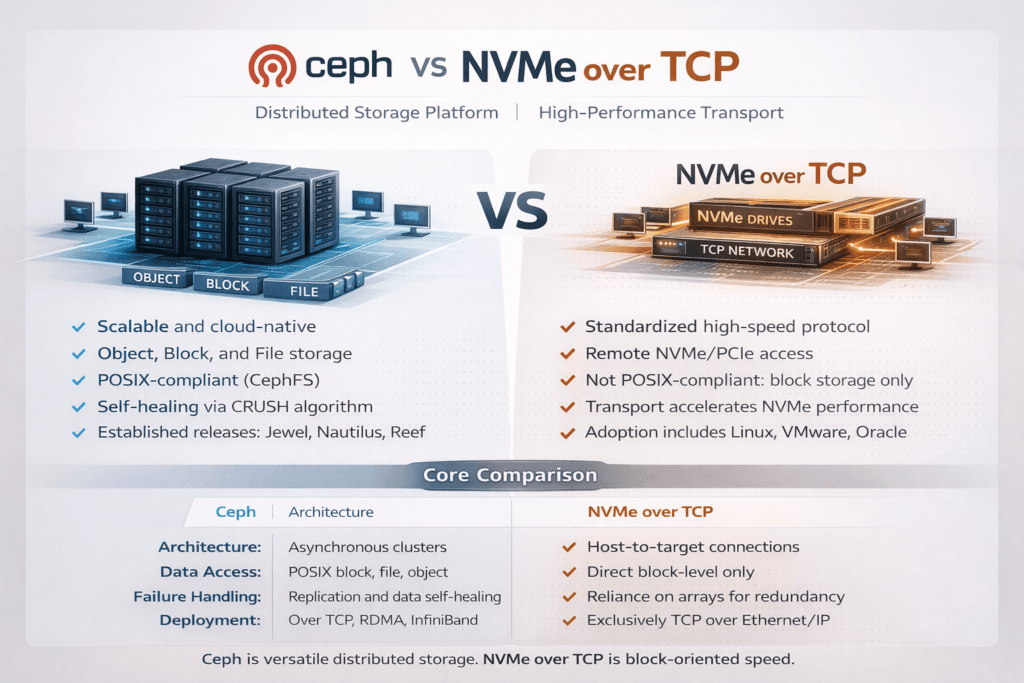

Ceph vs NVMe over TCP usually comes down to architectural intent. Ceph is a distributed storage system that presents block storage through RADOS Block Device (RBD) and balances data with CRUSH placement, background scrubbing, and recovery workflows. NVMe over TCP extends NVMe block semantics across standard Ethernet using TCP/IP, which can reduce protocol overhead compared to SCSI-based stacks such as iSCSI and can simplify shared block access for latency-sensitive workloads.

For CIOs and CTOs, the decision tends to hinge on predictable latency under rebuild and node failure, Day-2 operational load, and CPU efficiency. For platform and SRE teams, it comes down to how often storage behavior changes during recovery, how much tuning is required, and how cleanly the data path aligns with Kubernetes scheduling and multi-tenant isolation.

Decision Drivers for Ceph vs NVMe over TCP

Ceph can perform well, but it often requires careful tuning to keep tail latency in check when the cluster runs background tasks such as backfill, recovery, or scrubbing. Its strength shows up when teams want a broad, general-purpose distributed system with mature ecosystem support.

NVMe over TCP tends to shine when the priority is shared block storage with tighter latency characteristics on standard Ethernet. It aligns with disaggregated storage designs and SAN-alternative initiatives because it keeps the block protocol modern and avoids SCSI-era overhead. When paired with Software-defined Block Storage built around NVMe semantics, NVMe/TCP can deliver more IOPS per CPU core and reduce jitter during burst traffic.

Kubernetes-Centric Operational Model

Kubernetes Storage adds its own constraints because pods move, nodes drain, and noisy neighbors appear. Ceph-backed volumes, often delivered via CSI and an operator pattern, can work well, but behavior may shift when placement groups become degraded and the system prioritizes recovery work. That variability matters for databases and message queues, which surface p99 latency spikes immediately.

An NVMe/TCP-backed Kubernetes Storage model can behave closer to direct-attached flash from the application viewpoint while keeping shared volumes. It also supports clean separation between compute and storage nodes in disaggregated layouts, plus mixed deployments where some nodes stay hyper-converged for cost or locality reasons.

Protocol and Data-Path Mechanics

NVMe/TCP uses TCP as the transport, which fits existing routed networks and common operational tooling. The result is usually easier adoption than RDMA-first fabrics, especially in environments where standardization, troubleshooting, and change control matter as much as peak microbenchmarks.
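As a rough illustration of how little is needed on the host side, the sketch below assembles an `nvme connect` command line for nvme-cli. The target address and NQN are hypothetical placeholders, and 4420 is assumed as the service port because it is the conventional NVMe over Fabrics port. The helper only builds the argument list; it does not run anything.

```python
def nvme_tcp_connect_argv(traddr, nqn, trsvcid=4420):
    """Build an nvme-cli argument list that attaches a remote subsystem over TCP.

    traddr and nqn are supplied by the caller; the values used in the example
    below are hypothetical and must be replaced with a real target.
    """
    return [
        "nvme", "connect",
        "--transport=tcp",          # use the TCP transport rather than RDMA or FC
        f"--traddr={traddr}",       # IP address of the storage target
        f"--trsvcid={trsvcid}",     # TCP port the target listens on
        f"--nqn={nqn}",             # NVMe Qualified Name of the subsystem
    ]


if __name__ == "__main__":
    # Hypothetical address and NQN, for illustration only.
    argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2024-01.example:subsystem1")
    print(" ".join(argv))
```

Running the printed command requires root privileges and a reachable target; after a successful connect, the remote namespace typically appears as a local `/dev/nvmeXnY` block device.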

Ceph relies on a more complex distributed pipeline. Client I/O interacts with the cluster map and data distribution logic, then the system handles replication or erasure coding while it maintains consistency. That design provides resilience, but the control plane and background maintenance can influence foreground latency.

Validating Performance and SLO Fit

Benchmarking should match production behavior and include failure-mode tests. Use a consistent methodology across both options and capture throughput, IOPS, p50 latency, p95 latency, p99 latency, and CPU per IOPS. Run tests from inside containers to reflect the real storage path in Kubernetes, and repeat them under load from multiple namespaces to simulate multi-tenant contention.
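A minimal sketch of how those metrics could be derived from raw per-I/O completion latencies is shown below. It assumes you already have latency samples in microseconds and a measured amount of busy CPU time for the run; the function names and the nearest-rank percentile method are illustrative choices, not part of any benchmark tool.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    all samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_us, busy_cpu_seconds, duration_s):
    """Summarize one benchmark run.

    latencies_us: one completion latency per I/O, in microseconds.
    busy_cpu_seconds: CPU time consumed by the storage path during the run.
    duration_s: wall-clock length of the run in seconds.
    """
    count = len(latencies_us)
    return {
        "iops": count / duration_s,
        "p50_us": percentile(latencies_us, 50),
        "p95_us": percentile(latencies_us, 95),
        "p99_us": percentile(latencies_us, 99),
        # CPU cost expressed as microseconds of CPU time per completed I/O.
        "cpu_us_per_io": busy_cpu_seconds * 1_000_000 / count,
    }
```

Computing the same summary for both storage options, and again during a failure test, keeps the comparison on identical terms.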

A meaningful test plan includes steady-state read-heavy and write-heavy profiles, mixed ratios, and realistic block sizes. Add a scenario where a node fails or a disk drops, because rebuild behavior often decides whether an architecture can meet database SLOs. Track network retransmits, CPU saturation, and queue depth behavior, because each can inflate tail latency without obvious alarms.
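One way to keep such a plan consistent across both options is to generate the fio job matrix from code. The sketch below is an assumption-laden example: the profile names, read percentages, block sizes, runtime, queue depth, and job count are placeholders to adapt, and the "degraded" phase only labels a second pass that you run while a node or disk is failed by other means.

```python
from itertools import product

# Hypothetical profile names mapped to the percentage of reads in a random mix.
PROFILES = {"read_heavy": 90, "mixed": 70, "write_heavy": 10}
BLOCK_SIZES = ["4k", "16k", "128k"]
# "degraded" repeats the same jobs while a failure is in effect; the failure
# itself is injected outside this script.
PHASES = ["steady", "degraded"]


def fio_commands(target, runtime_s=300, iodepth=16, numjobs=4):
    """Return one fio argument list per (phase, profile, block size)."""
    commands = []
    for phase, (profile, read_pct), bs in product(
        PHASES, PROFILES.items(), BLOCK_SIZES
    ):
        commands.append([
            "fio",
            f"--name={phase}-{profile}-{bs}",
            f"--filename={target}",   # WARNING: write jobs overwrite this target
            "--ioengine=libaio",
            "--direct=1",             # bypass the page cache
            "--rw=randrw",
            f"--rwmixread={read_pct}",
            f"--bs={bs}",
            f"--iodepth={iodepth}",
            f"--numjobs={numjobs}",
            f"--runtime={runtime_s}",
            "--time_based",
            "--group_reporting",
            "--output-format=json",
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical device path; point this at a scratch volume only.
    for argv in fio_commands("/dev/nvme1n1"):
        print(" ".join(argv))
```

The JSON output from each job can then be collected alongside network retransmit counters and CPU utilization for the same time window.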

Tactics That Increase Consistency

The most reliable gains usually come from reducing contention and tightening the I/O path. Apply these changes in small steps and keep each step measurable.

- Pin critical storage networking work to dedicated CPU cores, and keep application cores separate.

- Isolate storage traffic on the network to reduce congestion and retransmissions during burst periods.

- Set queue depths based on observed concurrency instead of maximizing depth by default.

- Control rebuild and recovery rates so background work cannot starve foreground I/O.

- Enforce multi-tenant QoS so one workload cannot dominate shared volumes.
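For the queue-depth item, Little's Law offers a simple starting point: the average number of I/Os in flight equals throughput multiplied by mean latency. The sketch below applies that relationship; the function name, the 25% headroom factor, and the example numbers are assumptions for illustration, not recommended settings.

```python
import math


def suggested_queue_depth(iops, mean_latency_us, queues=1, headroom=1.25):
    """Estimate a per-queue depth from observed load using Little's Law.

    in-flight I/Os = IOPS x mean latency (in seconds)
    The result is spread across `queues` and padded by `headroom` so short
    bursts do not immediately stall on a full queue.
    """
    if iops <= 0 or mean_latency_us <= 0 or queues < 1:
        raise ValueError("iops and latency must be positive, queues >= 1")
    in_flight = iops * mean_latency_us / 1_000_000
    return max(1, math.ceil(in_flight * headroom / queues))


if __name__ == "__main__":
    # Example: 200,000 IOPS at 200 microseconds mean latency keeps about
    # 40 I/Os in flight; with 25% headroom across 4 queues that is 13 each.
    print(suggested_queue_depth(200_000, 200, queues=4))
```

Treat the result as a first setting to measure against, then adjust in small steps while watching p99 latency.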

Ceph vs NVMe over TCP Tradeoffs in Production

This table summarizes common differences teams see when they compare a Ceph RBD stack with an NVMe/TCP-centered approach for shared block storage.

| Dimension | Ceph (RBD) | NVMe/TCP-based Software-defined Block Storage |
|---|---|---|
| Primary value | General-purpose distributed storage | High-performance shared block volumes over Ethernet |
| Operational tuning | Often required for stable tail latency | More network-and-CPU focused, fewer cluster knobs |
| Tail latency under rebuild | Can vary during backfill and recovery | Easier to keep stable with QoS and tight data path |
| Kubernetes fit | Mature operator patterns, broader ecosystem | Strong for disaggregated and performance-first layouts |
| Hardware and network | Commodity hardware, higher CPU overhead | Commodity Ethernet, no RDMA requirement |
| Typical best use | Mixed workloads, broad storage services | Databases, analytics, and SAN-alternative block storage |

Predictable Outcomes with Simplyblock™

Simplyblock™ targets Kubernetes Storage use cases that need consistent behavior under load while keeping operations lean. It provides Software-defined Block Storage designed around NVMe semantics, and it supports NVMe/TCP as a first-class transport. Its SPDK-based, user-space, zero-copy architecture is built to reduce context switches and improve CPU efficiency, which helps when clusters scale and when multiple tenants share the same storage pool.

For teams weighing Ceph against NVMe/TCP, simplyblock focuses on the areas that typically cause SLO misses: tail latency, resource contention, and performance isolation. Multi-tenancy and QoS allow platform teams to define guardrails per workload tier, and flexible deployment options support hyper-converged, disaggregated, and mixed designs, including bare-metal footprints.

Future Directions for Ceph vs NVMe over TCP

Ceph development continues to focus on better performance consistency, improved manageability, and stronger integration patterns for cloud-native environments. Many organizations will keep using Ceph where feature breadth and ecosystem maturity matter most.

NVMe/TCP momentum will likely keep growing in environments that prioritize predictable latency on standard Ethernet. More deployments will adopt disaggregated storage, tighter QoS policies, and CPU offload patterns using DPUs or IPUs. As that happens, the difference between “fast in a lab” and “stable in production” will matter more, and architectures that reduce data-path overhead and enforce isolation will gain more mindshare.

Related Terms

Teams often cross-reference these terms for Ceph vs NVMe over TCP performance planning.

- SPDK (Storage Performance Development Kit)

- NVMe over TCP (NVMe/TCP)

- NVMe over RoCE (RDMA)

- Kubernetes Storage (Disaggregated vs Hyper-converged)

Questions and Answers

If your workload is dominated by small random I/O, NVMe/TCP often delivers tighter p95/p99 latency because the host talks directly to remote NVMe namespaces over Ethernet, reducing protocol overhead compared to a heavier Ceph data path. The gap is most visible when Ceph background work (recovery, backfill) competes with client I/O. Validate with fio benchmarks over NVMe/TCP and compare the results against Ceph behavior under the same load.

Ceph couples durability with cluster rebalancing and recovery workflows that can reshuffle data and impact performance during node loss or expansion. NVMe/TCP is a transport, so recovery depends on the storage system behind the target, but the data path stays simple and predictable. If you expand capacity frequently, model the operational blast radius by measuring the impact of storage rebalancing and reviewing the target’s NVMe over TCP architecture.

Ceph clients can spend more CPU on networking, checksums, and cluster interactions as concurrency rises, especially in mixed read/write profiles. NVMe/TCP initiators still use TCP/IP, but the command set is optimized for flash and parallel queues, often improving IOPS per core on modern hosts. The deciding factor is whether you need Ceph’s unified system or a thinner block path over NVMe/TCP on standard Ethernet.

Ceph (via RBD) hides placement behind the cluster, while NVMe/TCP deployments often expose more explicit locality and pathing choices. In multi-AZ clusters, the biggest risk is cross-zone latency or misplacement that inflates p99. Align Pod placement with storage topology using topology-aware storage scheduling, and check how your transport and backend map storage fault domains to availability zones.

The common pitfall is focusing on transport speed and ignoring day-2 ops: data movement, failure domains, and cutover mechanics. A safer approach defines the target data plane, control plane, and rollback plan before moving volumes, then tests degraded-mode latency and rebuild limits. Use a structured Ceph replacement architecture and validate the new path with NVMe/TCP performance checks under peak load.