Qdrant

Terms related to simplyblock

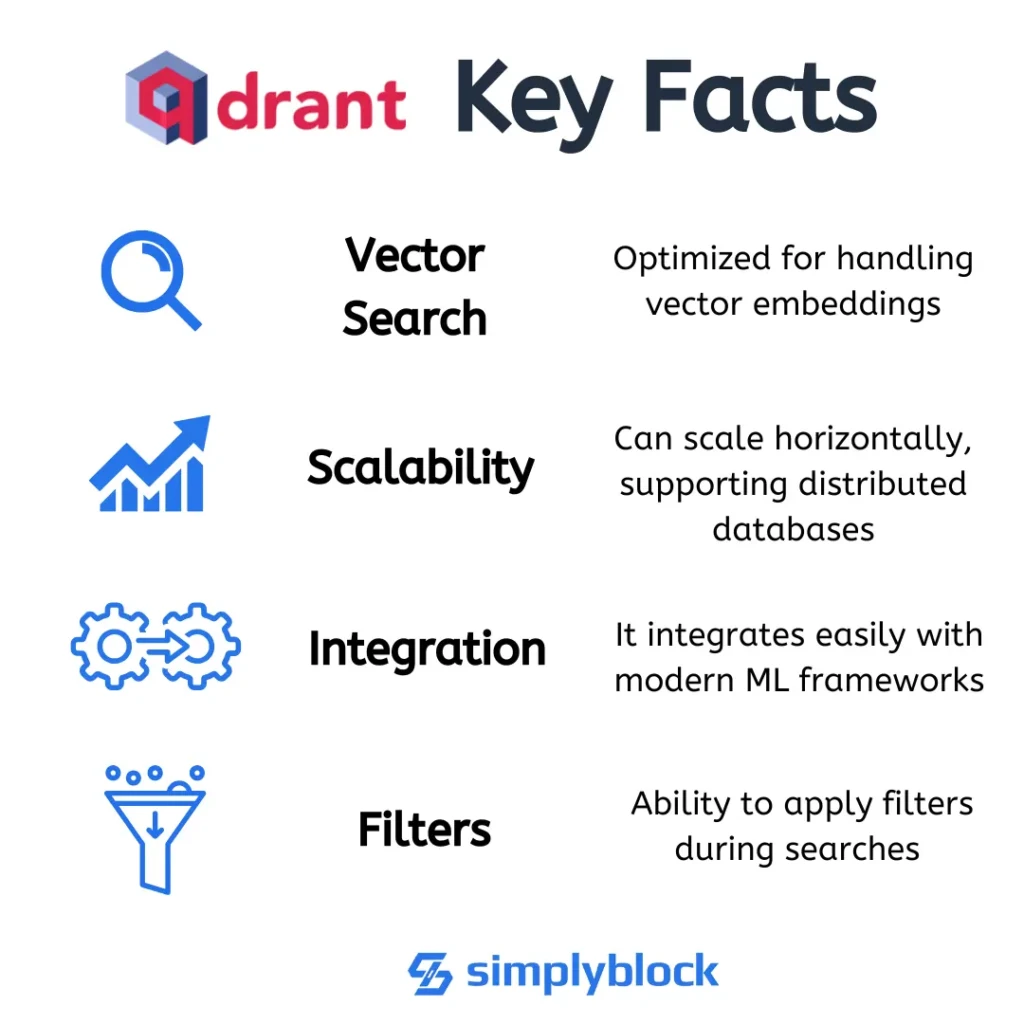

Qdrant is an open-source vector search engine designed for storing and querying high-dimensional embeddings. It powers similarity search, enabling machine learning applications like semantic search, recommendation systems, and AI-driven retrieval. Built in Rust for performance and safety, Qdrant supports approximate nearest neighbor (ANN) indexing, high-throughput ingestion, and real-time querying with strong filtering capabilities.

Unlike traditional relational databases, Qdrant is optimized for vector representations of unstructured data, such as sentence embeddings, image features, and audio vectors, as used in deep learning and NLP pipelines.

How Qdrant Works

Qdrant organizes and indexes embeddings (vectors) using HNSW (Hierarchical Navigable Small World) graphs, an efficient algorithm for fast, approximate nearest neighbor searches in high-dimensional spaces.
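To make the graph search concrete, here is a minimal sketch, assuming plain Python lists as vectors and Euclidean distance. It builds a naive k-nearest-neighbour graph (a stand-in for HNSW's incremental, pruned construction) and runs the best-first beam search that HNSW performs within a single layer. The upper layers, which HNSW uses to find a good entry point, are omitted. The function names are hypothetical; `ef` mirrors the beam-width parameter of the same name in the HNSW paper, not a Qdrant API call.

```python
import heapq
import math
import random

# Illustrative sketch, not Qdrant's implementation: one graph layer only,
# hypothetical helper names, Euclidean distance.


def build_knn_graph(points, m):
    """Naive O(n^2) build: link every point to its m nearest neighbours.

    Links are stored in both directions so the graph stays navigable.
    Real HNSW inserts points incrementally and prunes links heuristically.
    """
    graph = {i: set() for i in range(len(points))}
    for i, p in enumerate(points):
        nearest = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )[:m]
        for _, j in nearest:
            graph[i].add(j)
            graph[j].add(i)
    return graph


def greedy_search(points, graph, query, entry, ef, k):
    """Best-first beam search over one proximity-graph layer.

    `ef` caps how many results are kept: a larger ef visits more nodes,
    raising recall at the cost of more distance computations.
    Returns up to k (distance, index) pairs, closest first.
    """
    d0 = math.dist(points[entry], query)
    visited = {entry}
    candidates = [(d0, entry)]   # min-heap: nearest unexpanded node on top
    best = [(-d0, entry)]        # max-heap via negation: worst kept result on top
    while candidates:
        d, node = heapq.heappop(candidates)
        if d > -best[0][0]:
            break                # nearest candidate is worse than every kept result
        for nb in graph[node]:
            if nb in visited:
                continue
            visited.add(nb)
            dn = math.dist(points[nb], query)
            if len(best) < ef or dn < -best[0][0]:
                heapq.heappush(candidates, (dn, nb))
                heapq.heappush(best, (-dn, nb))
                if len(best) > ef:
                    heapq.heappop(best)   # drop the current worst result
    return sorted((-nd, i) for nd, i in best)[:k]


if __name__ == "__main__":
    random.seed(0)
    pts = [[random.random() for _ in range(8)] for _ in range(300)]
    graph = build_knn_graph(pts, m=8)
    q = [0.5] * 8
    approx = greedy_search(pts, graph, q, entry=0, ef=32, k=5)
    exact = sorted((math.dist(p, q), i) for i, p in enumerate(pts))[:5]
    print("approx:", [i for _, i in approx])
    print("exact: ", [i for _, i in exact])
```

Comparing `approx` with the brute-force `exact` list shows the trade-off: the graph search computes distances for only part of the dataset and usually, but not always, returns the same neighbours.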

Each vector is stored along with associated payloads, or metadata, which can be filtered during search operations. This makes Qdrant highly effective for hybrid queries—combining semantic similarity with structured filters like tags, categories, or timestamps.
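The semantics of a payload-filtered query can be sketched in a few lines. This minimal example assumes brute-force cosine scoring over an in-memory list and a Python callable as the filter; the sample points and the `hybrid_search` helper are hypothetical. Qdrant itself avoids scoring every point by combining filters with its HNSW index, so this shows what a hybrid query returns, not how Qdrant executes it.

```python
import math


def cosine(a, b):
    """Cosine similarity: 1.0 for identical directions, 0.0 for orthogonal ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def hybrid_search(points, query, payload_filter, limit):
    """Hypothetical brute-force hybrid query: drop points whose payload fails
    the filter, then rank the survivors by similarity to the query vector."""
    scored = [
        (cosine(p["vector"], query), p["id"])
        for p in points
        if payload_filter(p["payload"])
    ]
    scored.sort(reverse=True)   # highest similarity first
    return scored[:limit]


# Hypothetical sample data: 3-dimensional "embeddings" with metadata payloads.
POINTS = [
    {"id": 1, "vector": [0.9, 0.1, 0.0], "payload": {"category": "news", "year": 2023}},
    {"id": 2, "vector": [0.8, 0.2, 0.1], "payload": {"category": "blog", "year": 2024}},
    {"id": 3, "vector": [0.1, 0.9, 0.2], "payload": {"category": "news", "year": 2024}},
    {"id": 4, "vector": [0.7, 0.3, 0.0], "payload": {"category": "news", "year": 2024}},
]

if __name__ == "__main__":
    hits = hybrid_search(
        POINTS,
        query=[1.0, 0.0, 0.0],
        payload_filter=lambda pl: pl["category"] == "news" and pl["year"] >= 2024,
        limit=2,
    )
    print(hits)
```

Points 1 and 2 are closer to the query vector but fail the filter, so only points 4 and 3 come back, in that order.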

Key architectural features:

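- APIs: REST and gRPC interfaces for integration

- Payloads: schemaless JSON metadata attached to each vector

- Filtering: Boolean combinations of keyword, numeric-range, and geospatial conditions

- Real-time updates: insert, delete, and upsert without downtime

- Horizontal scaling: distributed deployments with sharding and replication across cluster nodes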
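Qdrant expresses filters as JSON that nests conditions under `must` (all must hold), `should` (at least one must hold) and `must_not` (none may hold). The sketch below is a simplified local evaluator for that shape, assuming scalar payload values; it handles only `match`, `range` and `geo_radius` conditions, and the `matches` helper is hypothetical, not part of any Qdrant client. Qdrant evaluates filters server-side, using payload indexes, and supports more condition types, such as matching against array values.

```python
import math

# Simplified, hypothetical evaluator of Qdrant-style filter JSON (not a Qdrant API).
EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius used for the haversine formula


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two lat/lon points."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dlat = p2 - p1
    dlon = math.radians(lon2 - lon1)
    h = math.sin(dlat / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def check_condition(cond, payload):
    """Evaluate one condition (or a nested filter) against a payload dict."""
    if any(key in cond for key in ("must", "should", "must_not")):
        return matches(cond, payload)        # nested filter
    value = payload.get(cond["key"])
    if value is None:
        return False                         # missing field never matches
    if "match" in cond:
        return value == cond["match"]["value"]
    if "range" in cond:
        r = cond["range"]
        return (("gt" not in r or value > r["gt"])
                and ("gte" not in r or value >= r["gte"])
                and ("lt" not in r or value < r["lt"])
                and ("lte" not in r or value <= r["lte"]))
    if "geo_radius" in cond:
        center = cond["geo_radius"]["center"]
        dist = haversine_m(value["lat"], value["lon"], center["lat"], center["lon"])
        return dist <= cond["geo_radius"]["radius"]
    raise ValueError(f"unsupported condition: {cond}")


def matches(flt, payload):
    """must: all hold; should: at least one holds (if any given); must_not: none hold."""
    must_ok = all(check_condition(c, payload) for c in flt.get("must", []))
    should = flt.get("should", [])
    should_ok = not should or any(check_condition(c, payload) for c in should)
    must_not_ok = not any(check_condition(c, payload) for c in flt.get("must_not", []))
    return must_ok and should_ok and must_not_ok


FILTER = {
    "must": [
        {"key": "color", "match": {"value": "red"}},
        {"key": "price", "range": {"gte": 10, "lt": 100}},
    ],
    "should": [
        {"key": "location",
         "geo_radius": {"center": {"lon": 13.4, "lat": 52.5}, "radius": 5000.0}},
    ],
    "must_not": [{"key": "color", "match": {"value": "blue"}}],
}

if __name__ == "__main__":
    item = {"color": "red", "price": 42, "location": {"lon": 13.405, "lat": 52.52}}
    print(matches(FILTER, item))
```

Here the sample item is red, priced inside the range, and roughly 2 km from the filter's centre point, so it passes; raising its price to 150 or moving it to another city would make `matches` return `False`.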

For high-speed workloads, pairing Qdrant with NVMe-backed block storage, such as simplyblock, helps keep performance consistent for both read- and write-heavy vector operations.

Use Cases for Qdrant

Qdrant is built to enable production-grade machine learning and data science workflows at scale. Common use cases include:

- Semantic Search: Index embeddings of documents or user queries to retrieve the most relevant results.

- Recommendation Engines: Suggest products or content based on user preferences or behavioral embeddings.

- AI Chatbots (RAG): Retrieve relevant context from embedded documents or past dialogue to ground the model’s generated responses.

- Multimodal Search: Combine text, image, and metadata for hybrid search experiences.

- Anomaly Detection: Use vector clustering to identify outliers in time-series or event-driven data.
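The anomaly-detection use case can be illustrated with a simple distance-based score in place of full clustering: a point whose k-th nearest neighbour is far away sits outside every dense region. This is a minimal brute-force sketch, assuming in-memory vectors and a hand-picked threshold; the sample events, `k`, and threshold are hypothetical. Against Qdrant, the inner neighbour loop would instead be a k-NN search per point.

```python
import math


def knn_distance_scores(vectors, k):
    """Outlier score per vector: distance to its k-th nearest other vector."""
    scores = []
    for i, v in enumerate(vectors):
        dists = sorted(math.dist(v, w) for j, w in enumerate(vectors) if j != i)
        scores.append(dists[k - 1])
    return scores


def flag_outliers(vectors, k, threshold):
    """Indices whose k-NN distance exceeds a hypothetical, hand-tuned threshold."""
    return [i for i, s in enumerate(knn_distance_scores(vectors, k)) if s > threshold]


if __name__ == "__main__":
    # Hypothetical 2-D event embeddings: a tight cluster plus one distant point.
    events = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [0.1, 0.1], [5.0, 5.0]]
    print(flag_outliers(events, k=2, threshold=1.0))
```

Using the k-th neighbour rather than the nearest one keeps a pair of anomalies that sit next to each other from masking each other.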

These applications benefit from low-latency, scalable storage infrastructure. Vector-heavy workloads are performance-sensitive, and simplyblock’s NVMe over TCP architecture is designed to provide the throughput and durability such real-time AI systems need.

Qdrant vs Other Vector Databases

Qdrant stands out with a strong focus on filtering, performance, and open-source flexibility. Below is a comparison with other vector search engines:

| Feature | Qdrant | Pinecone | Weaviate | FAISS (DIY) |
|---|---|---|---|---|
| License | Open-source (Apache 2.0) | Closed (managed) | Open-source (BSD) | Open-source (MIT) |
| ANN Algorithm | HNSW | Proprietary | HNSW | HNSW / IVF |
| Metadata Filtering | Strong (JSON-like) | Yes | Yes | Manual (via code) |
| Real-time Updates | Yes | Yes | Yes | Complex setup |
| Cloud Native Deployment | Yes (K8s-ready) | Managed only | Yes | Manual orchestration |
| Built-in REST API | Yes | Yes | Yes | No |

Qdrant is ideal for developers and AI teams seeking full control over the vector search stack with a fast, secure, and filter-rich backend.

Storage and Performance Considerations

Qdrant handles real-time vector workloads that can bottleneck on storage if not optimized. Performance drivers include:

- Insert/update throughput: Fast ingestion of new or updated vectors

- Index rebuilding: Demands sequential and parallel I/O capacity

- Query latency: Affected by ANN index parameters and, when vectors or index data are kept on disk rather than in RAM, by disk performance
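A back-of-the-envelope estimate shows why storage throughput matters: an index build, or a cold start that pages vectors in from disk, has to read at least the raw vector data. This minimal sketch assumes float32 vectors and a bottom-layer budget of up to 2*m neighbour links per node, as in common HNSW implementations; the collection size, dimension, and m=16 are hypothetical inputs, not measured Qdrant figures.

```python
def raw_vector_bytes(num_vectors, dim, bytes_per_component=4):
    """Bytes needed for the vectors alone (float32 = 4 bytes per component)."""
    return num_vectors * dim * bytes_per_component


def base_layer_link_bytes(num_vectors, m, bytes_per_link=4):
    """Rough size of HNSW's bottom-layer adjacency lists.

    Assumption: up to 2*m neighbour ids per node on layer 0, stored as 4-byte
    integers; upper layers and allocator overhead are ignored.
    """
    return num_vectors * 2 * m * bytes_per_link


if __name__ == "__main__":
    n, dim, m = 10_000_000, 768, 16   # hypothetical collection size and settings
    vec = raw_vector_bytes(n, dim)
    links = base_layer_link_bytes(n, m)
    print(f"vectors: {vec / 1e9:.2f} GB, graph links: {links / 1e9:.2f} GB")
```

With these inputs the vectors alone come to 30.72 GB and the base-layer links to roughly 1.28 GB, so the vector data dominates what has to be read and written.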

With NVMe-class block storage from simplyblock, users benefit from:

- Sub-millisecond read/write latency

- Advanced erasure coding for storage efficiency

- Thin provisioning to optimize capacity usage

- Reliable, distributed storage for AI/ML workloads
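The storage-efficiency benefit of erasure coding can be quantified as raw capacity consumed per byte of user data. This minimal sketch assumes a hypothetical k+m layout, meaning k data chunks plus m parity chunks, which with a Reed-Solomon-style code survives the loss of any m chunks; simplyblock's actual erasure-coding schemes may differ.

```python
from fractions import Fraction


def erasure_coding_overhead(k, m):
    """Raw bytes stored per user byte with k data + m parity chunks."""
    return Fraction(k + m, k)


def replication_overhead(copies):
    """Raw bytes stored per user byte when keeping full copies."""
    return Fraction(copies)


def usable_capacity(raw, overhead):
    """User-visible capacity left after redundancy overhead."""
    return raw / overhead


if __name__ == "__main__":
    raw_tb = Fraction(100)   # hypothetical raw cluster capacity in TB
    # Both layouts below tolerate the loss of any two chunks or copies.
    ec = erasure_coding_overhead(4, 2)
    rep = replication_overhead(3)
    print(f"4+2 erasure coding: {float(ec)}x overhead, "
          f"{float(usable_capacity(raw_tb, ec)):.1f} TB usable")
    print(f"3x replication:     {float(rep)}x overhead, "
          f"{float(usable_capacity(raw_tb, rep)):.1f} TB usable")
```

For the same two-failure tolerance, the 4+2 layout leaves about 66.7 TB of 100 TB usable versus about 33.3 TB with triple replication.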

Whether you’re running Qdrant locally, on bare metal, or in Kubernetes, high-performance persistent storage enables smoother indexing, recovery, and scalability.

Qdrant in Kubernetes and Edge Environments

Qdrant offers a ready-to-deploy Docker image and Helm chart for Kubernetes. In containerized deployments, storage performance becomes critical for vector retrieval SLAs and index health.

Running Qdrant with simplyblock’s Kubernetes-native CSI provides:

- Dynamic PersistentVolumes

- Fast volume cloning for testing

- Multi-tenant isolation via QoS controls

- Storage that scales across nodes, zones, or hybrid cloud
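In hand-written manifests, these PersistentVolumes are requested through PersistentVolumeClaims, and CSI volume cloning uses the standard Kubernetes `dataSource` field. This minimal sketch emits both claims as a JSON `List` that `kubectl apply -f` accepts, assuming a hypothetical StorageClass named `simplyblock-csi-sc`; the real class name and its parameters come from your simplyblock CSI installation. When deploying via the Helm chart, the chart's values would normally control storage instead.

```python
import json

# Hypothetical StorageClass name; replace with the one your CSI driver installs.
STORAGE_CLASS = "simplyblock-csi-sc"


def pvc(name, size, storage_class=STORAGE_CLASS, clone_from=None):
    """Build a PersistentVolumeClaim manifest as a plain dict.

    If clone_from is given, the claim is provisioned as a CSI clone of that
    existing PVC (same namespace and StorageClass, size at least as large).
    """
    spec = {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": storage_class,
        "resources": {"requests": {"storage": size}},
    }
    if clone_from is not None:
        spec["dataSource"] = {"kind": "PersistentVolumeClaim", "name": clone_from}
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            pvc("qdrant-storage", "100Gi"),   # to be mounted at /qdrant/storage
            pvc("qdrant-storage-test", "100Gi", clone_from="qdrant-storage"),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

Piping the output into `kubectl apply -f -` would create a primary claim and a cloned copy that a test instance can mount without touching production data.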

At the edge, Qdrant’s lightweight binary and efficient disk I/O make it a strong candidate for real-time inference and low-latency retrieval applications.

Related Terms

Teams often review these glossary pages alongside Qdrant when they’re tuning index build and compaction behavior, keeping similarity search response times consistent, and validating the storage engine characteristics behind vector-heavy workloads.

- RocksDB

- Log-Structured Merge Tree (LSM Tree)

- Read Amplification

- Zero-Copy I/O


Questions and Answers

Qdrant is an open-source vector database optimized for high-speed similarity search across embeddings produced by models such as those from OpenAI or Hugging Face. It’s ideal for semantic search, recommendation engines, and AI-native apps that need real-time vector indexing and retrieval.

Yes, Qdrant supports containerized and Kubernetes-native deployments. For stateful workloads, combining Qdrant with NVMe-powered Kubernetes storage ensures high IOPS, low latency, and persistent volume resilience across restarts.

Vector workloads demand fast, consistent I/O. NVMe over TCP is a strong match for Qdrant, especially in high-throughput use cases like multi-modal search or real-time inference across large datasets.

When you self-host Qdrant, you manage data security yourself. For production environments, encryption at rest at the storage layer helps meet compliance and isolation requirements, which is critical for multi-tenant AI applications and regulated industries.

Qdrant offers more flexibility through open-source, self-hosted deployment, making it ideal for on-prem or private cloud use. While Pinecone abstracts infrastructure, Qdrant with software-defined storage gives you full control over performance, cost, and compliance.