Bare-Metal Storage for Kubernetes


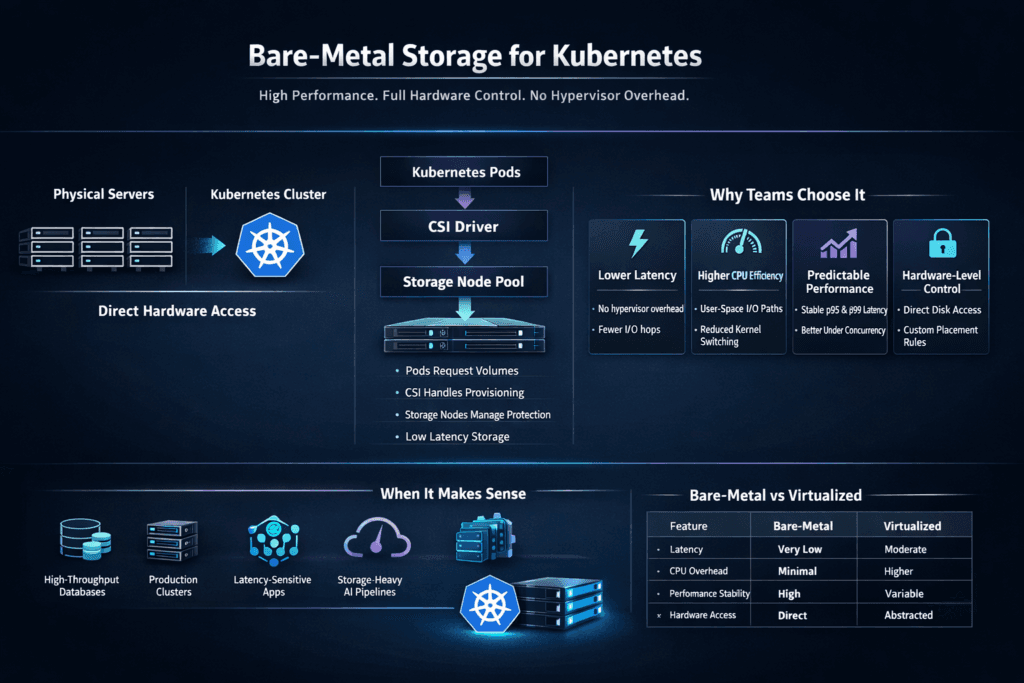

Bare-Metal Storage for Kubernetes means you run Kubernetes storage directly on physical servers, not on top of a hypervisor layer. You attach NVMe and SSD devices to nodes, then let a CSI-based storage stack present volumes to pods. This setup cuts virtualization overhead, reduces latency jitter, and gives you clear control over CPU, memory, PCIe lanes, and network paths.

Teams choose bare metal when they need steady latency for databases, message queues, search, and analytics. They also choose it when they want a SAN alternative that scales with standard servers. With Kubernetes Storage, the storage layer must keep up with scheduling, resizing, snapshots, and recovery without adding operational friction. Software-defined Block Storage fits that goal when it keeps the I/O path lean and the day-2 work simple.

Optimizing Bare-Metal Storage for Kubernetes with user-space I/O

A bare-metal design starts with the hot path. Each extra copy and context switch adds latency. Each wasted CPU cycle reduces headroom for rebuilds and spikes. A user-space datapath based on SPDK-style patterns (polled-mode drivers and lockless per-core queues instead of interrupts and kernel context switches) can reduce overhead and raise IOPS per core, which helps you hit tighter SLOs without overbuying hardware.

Hardware choices still matter. NVMe drives deliver parallelism, but only when the software stack feeds them well. NUMA layout matters because remote memory access can raise latency. PCIe topology matters because oversubscribed lanes cap throughput. A strong design checks those basics before it tunes queue depth or filesystem flags.
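To check NUMA locality before tuning anything else, you can read the NUMA node that Linux reports for each NVMe controller's PCI device in sysfs. The sketch below is a minimal example under stated assumptions: it assumes a Linux host that exposes `/sys/class/nvme/<controller>/device/numa_node`, and the function name `nvme_numa_map` is hypothetical. It does not inspect PCIe lane allocation, which still needs a separate check (for example with `lspci -vv`).

```python
from collections import defaultdict
from pathlib import Path


def nvme_numa_map(sysfs_root: str = "/sys/class/nvme") -> dict[int, list[str]]:
    """Group NVMe controllers by the NUMA node of their parent device.

    Assumes Linux sysfs: reads <sysfs_root>/<controller>/device/numa_node,
    the attribute exposed for PCI devices. A value of -1 means the kernel
    reports no NUMA affinity for that device.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}
    groups: dict[int, list[str]] = defaultdict(list)
    for ctrl in sorted(root.iterdir()):
        try:
            node = int((ctrl / "device" / "numa_node").read_text().strip())
        except (OSError, ValueError):
            # Attribute missing or unreadable, e.g. a fabrics controller
            # without a PCI parent; skip it.
            continue
        groups[node].append(ctrl.name)
    return dict(groups)


if __name__ == "__main__":
    for node, ctrls in sorted(nvme_numa_map().items()):
        print(f"NUMA node {node}: {', '.join(ctrls)}")
```

If two drives sit on node 1 while the storage process runs on node 0 cores, every I/O crosses the socket interconnect; pinning that process to node 1 cores removes the remote hop.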


Bare-Metal Storage for Kubernetes in Kubernetes Storage Operations

Kubernetes Storage adds motion. Pods move, nodes drain, and autoscaling changes placement. Bare metal gives you speed, but it also forces discipline around topology and failure domains. You want volumes to follow workload intent, not accidents of scheduling.

Most teams separate storage classes by workload type. One class may favor low latency and tight QoS for OLTP. Another may favor capacity efficiency for logs and analytics. This approach makes policy visible, and it limits risk during cluster changes. It also keeps the control plane clean because the platform makes fewer “special case” decisions.
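Workload-specific classes map naturally onto Kubernetes `StorageClass` objects. The sketch below builds two classes as plain manifests and prints them as a JSON `List`, which `kubectl apply -f -` accepts. The provisioner name `csi.example.com` and the `qosMaxIOPS` and `replicas` parameters are hypothetical placeholders; real parameter keys depend on the CSI driver you deploy.

```python
import json

# Hypothetical provisioner name: replace with the driver name your CSI plugin registers.
PROVISIONER = "csi.example.com"


def storage_class(name: str, params: dict[str, str], reclaim: str = "Delete") -> dict:
    """Build a storage.k8s.io/v1 StorageClass manifest as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        # Parameter keys and values are driver-specific; these are illustrative.
        # Kubernetes requires every parameter value to be a string.
        "parameters": params,
        "reclaimPolicy": reclaim,
        # Delay binding until a pod is scheduled so placement follows the workload.
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


CLASSES = [
    # Low-latency tier for OLTP: tighter QoS, keep the volume if the claim is deleted.
    storage_class("oltp-low-latency", {"qosMaxIOPS": "50000", "replicas": "3"}, reclaim="Retain"),
    # Capacity tier for logs and analytics.
    storage_class("logs-capacity", {"qosMaxIOPS": "5000", "replicas": "2"}),
]

if __name__ == "__main__":
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": CLASSES}, indent=2))
```

`WaitForFirstConsumer` delays volume binding until a pod is scheduled, which lets provisioning follow the pod's placement instead of binding to an arbitrary node first. `Retain` on the OLTP class keeps the underlying volume if someone deletes the claim by mistake.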

A good bare-metal plan also covers upgrades. You should be able to roll nodes without taking down stateful services. That means you need safe detach/attach flows, fast recovery paths, and clear observability for storage health.
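One way to make rolling upgrades concrete is a pre-drain check: a node is safe to drain only if every volume with a replica on it keeps enough healthy replicas elsewhere. The sketch below assumes a simple, hypothetical data model (`Volume`, `replica_nodes`, `min_healthy`); it is not any storage product's API, and a real check would query the storage control plane for live replica health.

```python
from dataclasses import dataclass


@dataclass
class Volume:
    name: str
    replica_nodes: set[str]  # nodes holding a healthy replica (hypothetical model)
    min_healthy: int         # replicas that must stay online during maintenance


def blocking_volumes(node: str, volumes: list[Volume]) -> list[str]:
    """Return the volumes that would drop below min_healthy if `node` went down."""
    blocked = []
    for vol in volumes:
        remaining = len(vol.replica_nodes - {node})
        if remaining < vol.min_healthy:
            blocked.append(vol.name)
    return blocked


def safe_to_drain(nodes: list[str], volumes: list[Volume]) -> list[str]:
    """Nodes whose loss alone keeps every volume at or above min_healthy.

    Each node is checked against the full replica set, so drain them one at
    a time and let replicas resync before moving to the next node.
    """
    return [n for n in nodes if not blocking_volumes(n, volumes)]
```

Usage: with `pg-data` replicated on nodes `a`, `b`, `c` (minimum 2) and `queue` on `a`, `b` (minimum 2), only `c` is safe to drain; draining `a` or `b` would leave `queue` with a single replica.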

NVMe/TCP networking for scale-out block storage

NVMe/TCP makes bare-metal storage easier to scale because it runs on standard Ethernet. You can disaggregate storage nodes from compute nodes, then export NVMe volumes across the network. That model can reduce blast radius, simplify storage upgrades, and keep application nodes focused on app CPU.

Network design still decides outcomes. Latency spikes often start in congestion, buffer pressure, or uneven pathing. Multipath and redundancy matter because a single link should not stall critical write paths. You also want stable queue behavior so one chatty workload does not raise tail latency for everything else.
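For redundant NVMe/TCP paths on Linux, a common pattern is to connect the same subsystem NQN through two portals on separate networks, so the kernel's native NVMe multipath presents one device with two paths. The sketch below only builds `nvme-cli` `nvme connect` commands and does not run them; the NQN, the IP addresses, and the helper name are hypothetical, port 4420 is the conventional NVMe-oF port, and it assumes native multipath is enabled in the host kernel.

```python
import shlex


def nvme_tcp_connect_cmds(nqn: str, portals: list[tuple[str, int]]) -> list[list[str]]:
    """Build one `nvme connect` command per portal for the same subsystem NQN.

    With native NVMe multipath enabled, connecting one subsystem through
    several addresses yields a single block device with several paths.
    """
    if len({addr for addr, _ in portals}) < 2:
        raise ValueError("need at least two distinct portal addresses for redundancy")
    return [
        ["nvme", "connect", "--transport=tcp", f"--traddr={addr}",
         f"--trsvcid={port}", f"--nqn={nqn}"]
        for addr, port in portals
    ]


if __name__ == "__main__":
    # Hypothetical subsystem NQN and portal addresses on two separate networks.
    for cmd in nvme_tcp_connect_cmds(
        "nqn.2023-01.io.example:vol-pg-data",
        [("10.10.1.20", 4420), ("10.10.2.20", 4420)],
    ):
        print(shlex.join(cmd))  # on a real host: subprocess.run(cmd, check=True)
```

Putting the two portals on separate switches or subnets matters more than the command itself: two paths through one congested switch still share a single point of failure.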

When you pair NVMe/TCP with Kubernetes Storage, you can choose the deployment model per tier: run hyper-converged for the tightest latency tiers, and run disaggregated for shared pools that scale out cleanly.

Measuring and benchmarking bare-metal storage behavior

Benchmarking should answer two questions: “How fast is it?” and “How stable is it under stress?” Many reports stop at peak throughput. Production issues usually show up in p99 and p999 latency, not in average IOPS.
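Tail percentiles are easy to compute from raw latency samples, and a small example shows why averages mislead. The sketch below uses the nearest-rank percentile definition; tools such as fio compute percentiles from their own latency histograms, so their numbers can differ slightly. The helper names are hypothetical.

```python
import math


def percentile(samples_us: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples <= it."""
    if not samples_us:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples_us)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def latency_report(samples_us: list[float]) -> dict[str, float]:
    """Average plus the tail percentiles that production SLOs usually track."""
    return {
        "avg": sum(samples_us) / len(samples_us),
        "p99": percentile(samples_us, 99),
        "p99.9": percentile(samples_us, 99.9),
    }
```

With 990 samples at 100 µs, 9 at 2,000 µs, and 1 at 20,000 µs, `latency_report` gives an average of 137 µs and a p99 of 100 µs, but a p99.9 of 2,000 µs: the slow I/Os only show up in the deepest tail.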

Use consistent fio profiles so you can compare options fairly. Keep block size, job count, queue depth, and read/write mix fixed across test runs. Then add stress that mirrors real life, such as rebuild activity, background compaction, or a node reboot during load. This method shows whether the platform keeps service steady when the cluster changes.
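A fixed profile is easiest to enforce when the job file is generated rather than edited by hand. The sketch below renders a fio job file with block size, job count, queue depth, and read/write mix as explicit parameters. The default values (4k random I/O, 70% reads, queue depth 32, 4 jobs, 300 s) are illustrative assumptions, not recommendations, and `fio_job` is a hypothetical helper.

```python
def fio_job(name: str, filename: str, rw: str = "randrw", rwmixread: int = 70,
            bs: str = "4k", iodepth: int = 32, numjobs: int = 4,
            runtime_s: int = 300) -> str:
    """Render a fio job file; keep every knob fixed across the runs you compare."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",  # bypass the page cache so the storage path is measured
        "time_based=1",
        f"runtime={runtime_s}",
        "group_reporting=1",
        "",
        f"[{name}]",
        f"filename={filename}",
        f"rw={rw}",
        f"rwmixread={rwmixread}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(fio_job("oltp-mix", "/dev/nvme1n1"), end="")
```

Save the output to a file and run it with `fio <file> --output-format=json` so results are machine-readable. Point `filename` at a dedicated test volume only: a write workload against a raw device destroys the data on it.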

Track CPU per I/O as well. Bare metal gives you full CPU control, so waste stands out quickly. If protocol work consumes too many cores, you lose headroom for failover and maintenance.
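CPU per I/O is simple arithmetic once you have busy-core and IOPS figures from the same interval. The sketch below assumes you already collect both (for example from host CPU metrics and fio results); the helper names are hypothetical, and `cores_needed` assumes CPU cost scales linearly with IOPS, which real systems only approximate.

```python
def cpu_us_per_io(busy_cores: float, iops: float) -> float:
    """CPU time per I/O in microseconds.

    busy_cores is utilization summed across the storage cores
    (4 cores at 100% = 4.0; 8 cores at 50% = 4.0).
    """
    if iops <= 0:
        raise ValueError("iops must be positive")
    return busy_cores * 1_000_000 / iops


def cores_needed(target_iops: float, us_per_io: float) -> float:
    """Cores the I/O path would consume at target_iops, assuming linear scaling."""
    return target_iops * us_per_io / 1_000_000
```

For example, 4 fully busy cores delivering 2,000,000 IOPS cost 2 µs of CPU per I/O, so reaching 3,000,000 IOPS would need about 6 cores before any allowance for rebuilds or failover.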

Practical ways to improve bare-metal storage performance

- Pin storage work to dedicated CPU cores so the I/O path does not fight with application bursts.

- Align storage pods or daemons to NUMA locality to reduce cross-socket memory traffic.

- Set clear QoS limits per tenant or workload class to prevent noisy neighbors.

- Keep rebuild and rebalancing rates under control, so background work cannot starve foreground I/O.

- Validate network paths for NVMe/TCP, including redundancy, congestion behavior, and failover timing.
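QoS limits and rebuild throttling are often built on the same primitive, a token bucket. The sketch below is a generic token-bucket limiter, not simplyblock's QoS implementation: a tenant or workload class gets one bucket sized to its IOPS cap, and rebuild or rebalancing traffic can get its own lower-rate bucket so background work cannot starve foreground I/O. The injectable `clock` parameter exists only to make the behavior testable.

```python
import time


class TokenBucket:
    """Token-bucket limiter: refills at `rate` tokens/s, holds at most `burst`.

    One token = one I/O for an IOPS cap, or one byte for a bandwidth cap.
    """

    def __init__(self, rate: float, burst: float, clock=time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so an idle tenant can burst immediately
        self.clock = clock
        self.last = clock()

    def try_acquire(self, cost: float = 1.0) -> bool:
        """Spend `cost` tokens if available; return False if the caller must wait."""
        now = self.clock()
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A caller that gets `False` would queue or delay the I/O rather than drop it; the burst size sets how much short-term spikiness a tenant may use before the cap applies.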

Side-by-side view of deployment choices

The table below compares common approaches teams evaluate when they plan bare-metal Kubernetes storage versus more layered options.

| Approach | What it does well | What you must manage |
|---|---|---|
| Bare metal + hyper-converged storage | Lowest hop count, strong locality | Shared failure domain with compute |
| Bare metal + disaggregated storage | Clear separation, simpler scaling | Network design becomes critical |
| Virtualized storage nodes | Fast to stand up | Added overhead and jitter |
| External SAN | Familiar workflows | Cost, scaling limits, and vendor lock-in |

Bare-Metal Storage for Kubernetes with Simplyblock™

Simplyblock™ delivers Software-defined Block Storage for Kubernetes Storage with NVMe/TCP support. It targets high IOPS with low overhead, and it fits bare-metal clusters where CPU efficiency matters. The platform supports hyper-converged, disaggregated, and hybrid layouts, so teams can choose the right failure domain model for each workload tier.

Multi-tenancy and QoS help keep service steady in shared clusters. That matters when one namespace runs bursty batch jobs while another runs a database. With clear guardrails, operators can protect critical volumes without manual throttling. Simplyblock also builds on NVMe over Fabrics (NVMe-oF), the specification family that NVMe/TCP belongs to, which helps teams plan for future transport options while keeping NVMe/TCP as the default for broad Ethernet deployments.

Future directions and advancements in platform storage

Teams now treat storage as part of the platform, not as a separate appliance. Expect more intent-driven storage classes that encode durability, performance limits, and placement rules. This keeps self-service safe and repeatable.

Offload will also grow in importance. DPUs and IPUs can take on networking and protocol work, which reduces host CPU pressure and smooths out latency during spikes. Observability will keep improving, too. Better visibility into queue depth, tail latency, and rebuild load helps teams prevent incidents instead of reacting to them.

Related Terms

These terms help teams plan bare-metal Kubernetes storage for latency, scale, and fault domains.

- Hyper-Converged Storage

- Disaggregated Storage

- Storage High Availability

- Kubernetes Storage Performance Bottlenecks

Questions and Answers

Bare-metal storage in Kubernetes refers to running storage directly on physical servers without a hypervisor layer. This reduces virtualization overhead and improves performance. Architectures based on distributed block storage allow bare-metal clusters to deliver scalable, high-availability persistent volumes.

Bare-metal deployments provide predictable latency, higher IOPS, and direct hardware access. For performance-sensitive applications, using NVMe over TCP on physical infrastructure enables near-local NVMe speeds across distributed nodes.

Bare-metal storage offers more consistent performance and hardware control, while cloud storage provides elasticity but can introduce variability. A software-defined storage platform can bring cloud-like automation to bare-metal Kubernetes clusters.

Yes. Databases, analytics engines, and messaging systems benefit from direct NVMe access and reduced overhead. Simplyblock supports stateful Kubernetes workloads with high-performance persistent volumes optimized for bare-metal deployments.

Simplyblock runs on commodity physical servers and delivers distributed NVMe-backed storage over standard Ethernet. Its Kubernetes-native storage integration ensures dynamic provisioning, replication, and encryption without relying on hypervisors.