Block Storage CSI

Terms related to simplyblock

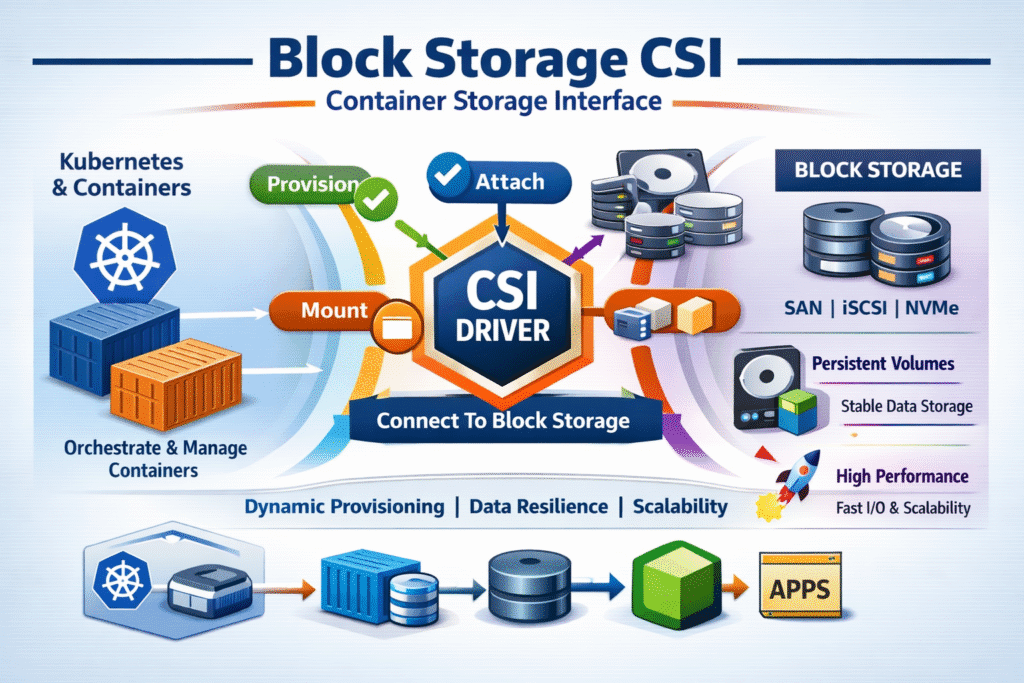

Block Storage CSI (Container Storage Interface) is the Kubernetes mechanism for provisioning, attaching, and mounting block volumes through a CSI driver instead of in-tree volume plugins. Volumes are typically exposed to the node as block devices, then formatted and mounted. CSI standardizes how storage vendors integrate with Kubernetes, so platform teams can treat storage as an API, using StorageClass, PersistentVolume (PV), and PersistentVolumeClaim (PVC) workflows across on-prem, bare-metal, and cloud environments.

A practical definition executives tend to use is: Block Storage CSI is the control plane contract that turns Kubernetes Storage requests into repeatable, auditable, policy-driven block volumes, including lifecycle operations like resize, snapshots, and topology-aware placement when supported by the driver.
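As a rough illustration of those lifecycle operations, the sketch below builds the two Kubernetes objects usually involved: a merge patch that raises a PVC's requested size, and a `VolumeSnapshot` that references the PVC. The PVC name `pg-data`, the snapshot class `csi-snapclass`, and the sizes are hypothetical. Expansion only works when the StorageClass sets `allowVolumeExpansion: true` and the driver supports it.

```python
import json


def pvc_resize_patch(new_size: str) -> dict:
    """Merge patch that raises a PVC's requested capacity."""
    return {"spec": {"resources": {"requests": {"storage": new_size}}}}


def volume_snapshot(name: str, pvc_name: str, snapshot_class: str) -> dict:
    """VolumeSnapshot manifest that snapshots an existing PVC."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


# Hypothetical names and sizes, for illustration only.
patch = pvc_resize_patch("200Gi")
snapshot = volume_snapshot("pg-data-snap-1", "pg-data", "csi-snapclass")

print(json.dumps(patch))
print(json.dumps(snapshot, indent=2))
```

The patch could be applied with `kubectl patch pvc pg-data --type merge -p '<patch JSON>'`, and the snapshot manifest with `kubectl apply -f -`, which accepts JSON as well as YAML.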

Optimizing Block Storage CSI with Modern Solutions

The difference between “CSI is installed” and “CSI is optimized” is the storage backend architecture and the driver’s behavior under load. High-performing deployments reduce kernel overhead, avoid excess data copies, keep IO paths predictable, and isolate noisy neighbors via QoS. This is where Software-defined Block Storage platforms built on SPDK-style user-space IO paths can materially change CPU efficiency, tail latency, and throughput consistency.

For organizations standardizing Kubernetes Storage across business units, the optimization goal is usually predictable p95/p99 latency, stable IOPS ceilings per workload, and fast Day-2 operations (expansion, rebalancing, failure recovery) without “storage heroics.”

Block Storage CSI in Kubernetes Storage

In Kubernetes Storage, Block Storage CSI is the bridge between the scheduler-driven pod lifecycle and persistent data lifecycles. A StorageClass expresses policy (replication/erasure coding, encryption, topology, performance intent), a PVC declares demand, and the CSI controller/node components reconcile the request into an attached device on the right node, at the right time.
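To make the policy/demand split concrete, here is a minimal sketch that builds a StorageClass and a matching PVC as plain Python dictionaries and prints them as a JSON `List` that `kubectl apply -f -` can consume. The provisioner name `csi.example.com` and the `parameters` keys are placeholders, not any real driver's settings; actual keys come from the driver's documentation.

```python
import json

# Policy: what kind of volume this class produces.
# Hypothetical provisioner name and parameter keys (assumptions).
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "fast-block"},
    "provisioner": "csi.example.com",
    "parameters": {"replicas": "2", "encryption": "true"},
    # Delay provisioning until a pod is scheduled, so the volume is
    # created where the pod will actually run.
    "volumeBindingMode": "WaitForFirstConsumer",
    "allowVolumeExpansion": True,
    "reclaimPolicy": "Delete",
}

# Demand: how much storage a workload asks for, and from which class.
pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "pg-data"},
    "spec": {
        "storageClassName": storage_class["metadata"]["name"],
        "accessModes": ["ReadWriteOnce"],
        "volumeMode": "Filesystem",  # "Block" hands the pod a raw device
        "resources": {"requests": {"storage": "100Gi"}},
    },
}

manifest = {"apiVersion": "v1", "kind": "List", "items": [storage_class, pvc]}
print(json.dumps(manifest, indent=2))
```

Keeping policy in the StorageClass and demand in the PVC means application teams only ever reference a class name, while platform teams can change replication or encryption settings in one place.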

Teams are often surprised that the “same YAML” does not mean the “same performance.” Node placement, CNI behavior, volume binding mode, filesystem choice, queue depth, and the storage protocol (iSCSI vs. NVMe-oF transports such as NVMe/TCP) can each dominate results, especially for databases and log-heavy stateful services.

Block Storage CSI and NVMe/TCP

NVMe/TCP is a transport within NVMe-oF that extends NVMe semantics over standard Ethernet and TCP/IP, enabling disaggregated or hyper-converged designs without RDMA-specific fabrics. For Block Storage CSI, that typically means a CSI-provisioned volume maps to an NVMe namespace exposed over TCP, giving a cleaner IO path than legacy SCSI-based protocols in many environments.
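With a CSI driver, the node plugin normally performs the attachment for you. The sketch below shows what that step amounts to when done by hand with `nvme-cli`: it only builds the `nvme connect` command line and does not execute it. The target address and subsystem NQN are made-up example values; 4420 is the conventional NVMe-oF port.

```python
import shlex


def nvme_tcp_connect_cmd(target_addr: str, subsystem_nqn: str, port: int = 4420) -> list:
    """Build (but do not run) an nvme-cli command that attaches an NVMe/TCP subsystem."""
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={target_addr}",
        f"--trsvcid={port}",
        f"--nqn={subsystem_nqn}",
    ]


# Hypothetical target address and NQN, for illustration only.
cmd = nvme_tcp_connect_cmd("192.0.2.10", "nqn.2024-01.com.example:vol-0001")
print(shlex.join(cmd))
```

After a successful connect, the namespace shows up on the node as a regular NVMe block device, which the CSI node component can then format and mount, or pass through as a raw device.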

In simplyblock deployments, NVMe/TCP is treated as a first-class dataplane option for Kubernetes Storage, pairing well with SPDK-based user-space processing to reduce kernel overhead and improve CPU efficiency per IO.

Measuring and Benchmarking Block Storage CSI Performance

Benchmarking Block Storage CSI should isolate three layers: workload generator behavior, Kubernetes orchestration side effects, and storage dataplane characteristics. A common approach is running fio-based tests inside pods (or on dedicated benchmark nodes) while controlling variables like block size, read/write mix, number of jobs, and iodepth.
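One way to keep those variables explicit is to generate the fio command line from named parameters, so each run records exactly what was controlled. The sketch below only builds the argument list. The job name, the target path `/data/fio-testfile` (assumed to sit on the CSI-backed mount), and the default values are illustrative assumptions, not recommended settings.

```python
def fio_command(
    target: str,
    size: str = "10G",
    block_size: str = "4k",
    read_pct: int = 70,
    num_jobs: int = 4,
    iodepth: int = 32,
    runtime_s: int = 60,
) -> list:
    """Build an fio command line for a mixed random read/write test."""
    return [
        "fio",
        "--name=csi-bench",
        f"--filename={target}",
        f"--size={size}",
        "--ioengine=libaio",
        "--direct=1",            # bypass the page cache
        "--rw=randrw",
        f"--rwmixread={read_pct}",
        f"--bs={block_size}",
        f"--numjobs={num_jobs}",
        f"--iodepth={iodepth}",
        "--time_based",
        f"--runtime={runtime_s}",
        "--group_reporting",
        "--output-format=json",  # machine-readable, includes percentiles
    ]


# Hypothetical target path on a CSI-provisioned volume.
cmd = fio_command("/data/fio-testfile", block_size="8k", iodepth=16)
print(" ".join(cmd))
```

Total outstanding IO per volume is roughly `numjobs × iodepth`, so changing either one changes the load the backend sees.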

For executive reporting, do not rely on averages alone. Require p95/p99 latency, throughput, and IOPS stability over time, plus failure-mode testing (node drain, pod reschedule, link flap). simplyblock’s published performance testing, for example, uses fio with defined concurrency and queue depth, and reports scaling characteristics across cluster sizes.
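A small sketch shows why the mean is not enough. It computes nearest-rank percentiles over a synthetic latency sample in which 3 of 100 IOs are slow. The sample values are invented for illustration and are not measurements of any system.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Synthetic data: 97 fast IOs and 3 slow ones (milliseconds).
latencies_ms = [0.4] * 97 + [9.0, 12.0, 15.0]

mean_ms = statistics.mean(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p95_ms = percentile(latencies_ms, 95)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms:.3f} ms p50={p50_ms} ms p95={p95_ms} ms p99={p99_ms} ms")
```

In this sample the mean stays below 1 ms while p99 is 12 ms, which is the kind of gap that matters to a database waiting on its slowest writes.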

Approaches for Improving Block Storage CSI Performance

- Use NVMe/TCP or NVMe/RDMA backends where feasible so the block protocol matches NVMe parallelism rather than legacy SCSI semantics.

- Enforce performance isolation with QoS-capable StorageClasses so a single workload cannot consume the entire device queue and destabilize other tenants.

- Validate topology and binding behavior so volumes are provisioned where the pod will run, reducing cross-zone or cross-rack traffic that shows up as tail latency.

- Tune fio and application queue depths to match the backend, because “more concurrency” can inflate p99 latency even when IOPS rise.

- Prefer SPDK-style user-space dataplanes for CPU-efficient IO paths when your bottleneck is per-IO overhead rather than raw media bandwidth.
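The queue-depth point in the list above can be sketched with Little's Law (outstanding IOs = IOPS × latency). The model below is deliberately simplified and assumes a backend with a fixed unloaded latency and a hard IOPS ceiling; both numbers are hypothetical. It models mean latency only, whereas real tail latency usually degrades earlier and more sharply.

```python
def achieved_iops(outstanding: int, base_latency_us: int, iops_ceiling: int) -> float:
    """IOPS grow with concurrency until the backend ceiling is reached."""
    unconstrained = outstanding * 1_000_000 / base_latency_us
    return min(unconstrained, iops_ceiling)


def mean_latency_us(outstanding: int, base_latency_us: int, iops_ceiling: int) -> float:
    """Little's Law rearranged: latency = outstanding IOs / throughput."""
    iops = achieved_iops(outstanding, base_latency_us, iops_ceiling)
    return outstanding * 1_000_000 / iops


# Hypothetical backend: 100 us unloaded latency, 100,000 IOPS ceiling.
BASE_LATENCY_US = 100
IOPS_CEILING = 100_000

results = {
    qd: (
        achieved_iops(qd, BASE_LATENCY_US, IOPS_CEILING),
        mean_latency_us(qd, BASE_LATENCY_US, IOPS_CEILING),
    )
    for qd in (4, 16, 128)
}

for qd, (iops, lat) in results.items():
    print(f"outstanding={qd:4d} iops={iops:9.0f} mean_latency_us={lat:7.1f}")
```

Under these assumptions, going from 4 to 16 outstanding IOs raises IOPS up to the ceiling, while going from 16 to 128 adds no throughput and only lengthens the time each IO spends queued.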

Block Storage CSI Architecture Options and Trade-Offs

The table below frames common Block Storage CSI approaches by the operational and performance trade-offs that matter in Kubernetes Storage programs.

| Approach | Dataplane characteristics | Typical strengths | Typical constraints |
|---|---|---|---|
| CSI + legacy iSCSI/SCSI backend | Kernel-heavy, SCSI semantics | Broad compatibility | Higher CPU per IO, tail latency sensitivity |
| CSI + NVMe/TCP backend | NVMe semantics over Ethernet | Strong performance on standard networks, simpler ops | Needs solid network design for consistency |
| CSI + NVMe/RDMA backend | RDMA bypasses the kernel network stack | Lowest latency potential | Fabric/NIC complexity, operational rigor |
| Local-only (hostPath / ephemeral) | Node-local IO | Very low latency on one node | Weak portability, fragile during reschedule |
| Software-defined Block Storage + CSI (SPDK-based) | User-space, zero-copy oriented | Predictable scaling, CPU efficiency, policy control | Dedicates CPU cores to polling, needs capacity planning |

Achieving Predictable Block Storage CSI Performance with Simplyblock™

Simplyblock™ targets predictable performance by coupling Kubernetes-native CSI control with an SPDK-based, user-space dataplane, then adding multi-tenancy and QoS so teams can set enforceable performance intent per volume. The result is a Software-defined Block Storage platform that supports flexible Kubernetes Storage deployment models, including hyper-converged and disaggregated layouts, so infrastructure teams can scale compute and storage independently when business units diverge in demand patterns.
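To illustrate what an enforceable per-volume IOPS limit means in principle, the sketch below uses a generic token bucket. This is a textbook rate-limiting technique chosen for illustration, not a description of how simplyblock implements QoS, and the limit, burst, and request rate are invented values.

```python
class IopsLimiter:
    """Token bucket: refills at `iops_limit` tokens per second, capped at `burst`."""

    def __init__(self, iops_limit: float, burst: float):
        self.iops_limit = iops_limit
        self.burst = burst
        self.tokens = float(burst)
        self.last = 0.0

    def try_admit(self, now: float) -> bool:
        """Admit one IO at time `now` (seconds) if a token is available."""
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.iops_limit)
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical noisy tenant: 10,000 IOs submitted evenly over one second
# against a volume limited to 1,000 IOPS with a burst allowance of 100.
limiter = IopsLimiter(iops_limit=1000, burst=100)
admitted = sum(limiter.try_admit(i * 0.0001) for i in range(10_000))

print(f"submitted=10000 admitted={admitted}")
```

The tenant gets roughly its burst allowance plus one second of its limit, and the remaining requests have to wait or be rejected instead of occupying queue slots that other volumes need.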

If you need a practical starting point, simplyblock provides CSI installation and operational guidance in its documentation, including Helm-based deployment patterns and troubleshooting references.

Future Directions and Advancements in Block Storage CSI

Block Storage CSI is trending toward deeper policy and automation: richer volume health signals, standardized snapshot/clone workflows, tighter topology integration for multi-zone scheduling, and better support for disaggregated NVMe-oF designs (including NVMe/TCP) that keep latency predictable while scaling out.

On the hardware side, DPUs/IPUs and in-network offload patterns are increasingly relevant because they reduce host CPU overhead and help stabilize tail latency when clusters run at high consolidation ratios.

Related Terms

Teams often review these glossary pages alongside Block Storage CSI when they standardize Kubernetes Storage and Software-defined Block Storage.

Container Storage Interface (CSI)

Dynamic Provisioning in Kubernetes

Kubernetes Block Storage

NVMe over TCP

NetApp Trident

Questions and Answers

What is Block Storage CSI in Kubernetes?

Block Storage CSI (Container Storage Interface) allows Kubernetes to provision and manage block-level storage volumes dynamically. It provides persistent storage for stateful apps like databases, making it essential for Kubernetes-native storage deployments.

How does Block Storage CSI differ from file or object storage?

A Block Storage CSI driver provides block volumes that are formatted and mounted for applications, typically offering better performance and lower latency than file or object storage. It’s ideal for workloads like databases on Kubernetes and analytics engines.

Can NVMe/TCP be used with Block Storage CSI?

Yes, NVMe/TCP can be integrated with a CSI-compatible block storage backend. This enables persistent volumes with high IOPS and low latency, especially in cloud-native environments using CSI-based provisioning.

How does simplyblock support Block Storage CSI?

Simplyblock offers a CSI driver that provisions NVMe-based block storage with data-at-rest encryption and snapshot support. It integrates with standard Kubernetes StorageClass and PVC workflows.

Which workloads benefit most from Block Storage CSI?

Block Storage CSI is ideal for workloads that require consistent, low-latency I/O, such as PostgreSQL, MongoDB, and time-series databases. It supports stateful containers and keeps volume access available when pods are rescheduled across nodes.