Ceph vs Software-Defined Block Storage

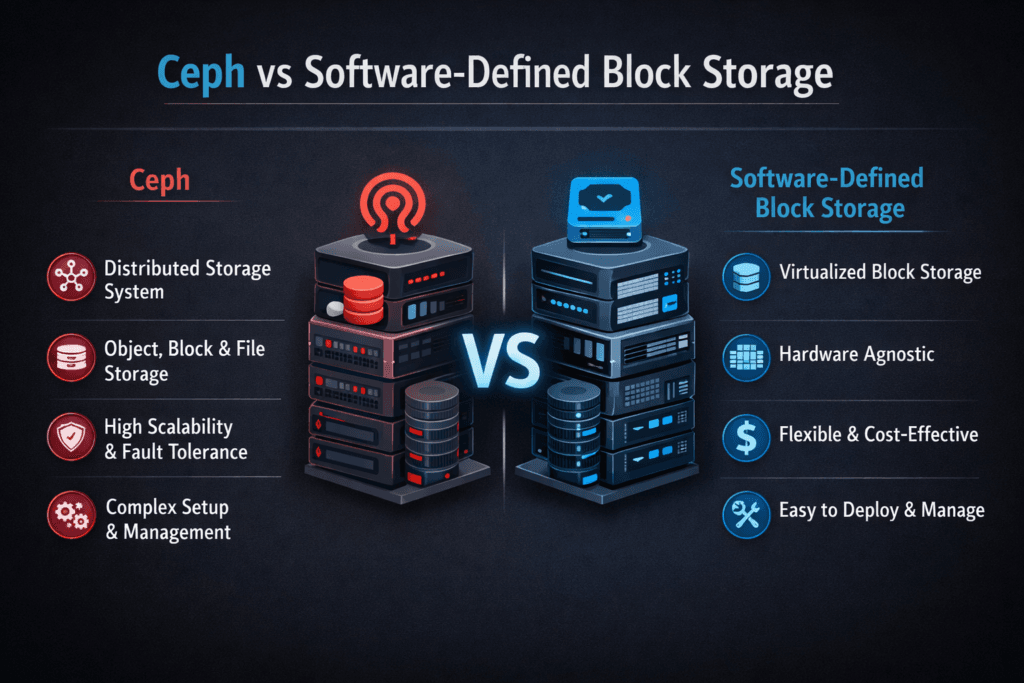

Ceph and software-defined block platforms both pool disks across servers, but they aim at different goals. Ceph delivers object, file, and block services from one cluster.

Software-defined block platforms focus on block volumes first, then add controls for latency, fairness, and day-2 operations. Executives usually care about the trade: how much operational effort the team spends versus how steady performance stays under load and failure.

How Architecture Choices Shape Ops, Cost, and Risk

Leadership teams feel storage risk when upgrades drag on, incidents repeat, or performance varies by cluster. Ceph offers broad capability, yet that breadth adds more choices to manage. Teams often tune placement rules, pool layouts, and recovery settings to match business targets. Those choices can work well, but they also increase variance across environments.

A block-first model keeps the scope tighter. That focus often makes it easier to map policy to outcomes, such as volume behavior, noisy-neighbor limits, and predictable scaling. When most critical apps depend on block volumes, a block-first approach can reduce surprises and improve repeatability.

Running Stateful Workloads Reliably in Kubernetes Storage

Kubernetes Storage succeeds when volumes behave the same way across clusters and upgrades. Operators need clean outcomes during reschedules, node drains, and rolling updates. Multi-tenant clusters also need guardrails, so one workload cannot dominate queues and push others into timeouts.

Block-first platforms often center on volume controls, QoS, and simpler operational patterns for stateful sets. Ceph can support Kubernetes well, but teams may spend more time aligning cluster tuning with the scheduling reality of mixed workloads.

Ceph vs Software-Defined Block Storage and NVMe/TCP Data Paths

NVMe/TCP brings NVMe semantics over standard Ethernet and TCP, which supports disaggregated designs on common network gear. In this setup, tail latency matters more than peak IOPS. Stable p99 latency protects databases, queues, and analytics jobs from ripple effects.

Block-first designs often prioritize a tight I/O path that matches NVMe/TCP behavior. Ceph can use NVMe media, yet overall results still depend on tuning, contention control, and how recovery interacts with application SLOs.

Practical Ways to Validate Real-World Performance

Benchmarking should match production behavior, not lab defaults. Teams get clearer answers when they test steady-state load, then repeat the same job under contention. They also need failure tests, because rebuild and rebalance often define the real user experience.

Use one workload definition across candidates and keep configs consistent. Measure IOPS, throughput, and latency spread, including p95 and p99. Track CPU use per I/O and network headroom, because those limits often cap scale before disks do.
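As a minimal sketch of the measurements above, the following Python summarizes one benchmark run from per-I/O latency samples. The function names, the input shape, and the sample numbers are assumptions made for illustration; they do not come from any benchmark tool or from a real cluster.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def summarize(latencies_ms, duration_s, cpu_core_seconds):
    """Summarize one run. All inputs are hypothetical measurements."""
    ops = len(latencies_ms)
    return {
        "iops": ops / duration_s,
        "mean_ms": sum(latencies_ms) / ops,
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        # CPU time spent per I/O, in microseconds of one core
        "cpu_us_per_io": cpu_core_seconds * 1_000_000 / ops,
    }

# Made-up sample: 97 fast I/Os and 3 slow ones within one second.
sample = [0.2] * 97 + [5.0] * 3
report = summarize(sample, duration_s=1.0, cpu_core_seconds=0.002)
print(report)
```

In this made-up sample the mean stays near 0.34 ms while p99 lands at 5 ms, which is why the latency spread belongs next to IOPS and throughput in every report.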

Ceph vs Software-Defined Block Storage Tuning Levers That Matter

Strong performance comes from three habits: reduce overhead, control contention, and keep recovery from crushing tail latency. Start with architecture, then tune. A clean design saves more time than any single setting.

Test one change at a time and verify p99, not only averages. Avoid large retunes that hide cause and effect. Include rebuild behavior in every test cycle, because that phase exposes weak isolation quickly.
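One way to keep that discipline is to compare p99 per test phase before and after each single change. The sketch below assumes three hypothetical phases (steady, contention, rebuild), a 10% tolerance, and invented p99 values in milliseconds; none of these are prescribed by Ceph or by any block platform.

```python
def p99_regressions(baseline, candidate, tolerance=0.10):
    """Return phases where candidate p99 exceeds baseline p99 by more than `tolerance`."""
    missing = set(baseline) - set(candidate)
    if missing:
        # A run that skips a phase (for example rebuild) cannot be compared.
        raise ValueError(f"candidate run skipped phases: {sorted(missing)}")
    return [
        phase
        for phase, base_p99 in baseline.items()
        if candidate[phase] > base_p99 * (1 + tolerance)
    ]

# Invented p99 latencies in milliseconds, before and after one setting change.
baseline = {"steady": 1.0, "contention": 2.0, "rebuild": 4.0}
candidate = {"steady": 0.9, "contention": 2.1, "rebuild": 6.0}
flagged = p99_regressions(baseline, candidate)
print(flagged)
```

Here the change looks fine in steady state and under contention, yet the rebuild phase is flagged, which an average-only or steady-state-only comparison would miss.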

Side-by-Side Criteria for Platform Selection

The table below highlights the decision points that most often drive outcomes for Kubernetes Storage teams that also plan for NVMe/TCP adoption.

| Decision Factor | Ceph (block via RBD) | Block-first software-defined approach |
|---|---|---|
| Primary scope | Unified object, file, and block | Block focus with policy controls |
| Operational surface area | More moving parts and tuning | Fewer knobs, clearer guardrails |
| Tail latency stability | Can vary with tuning and recovery | Often targets steady QoS |
| Multi-tenant isolation | Possible, depends on design | Usually a first-class goal |
| Fit for disaggregated designs | Works, needs careful planning | Often aligns well with NVMe/TCP |
| Best fit | Broad consolidation needs | Database-grade block at scale |

Making Performance Predictable with simplyblock™

Simplyblock targets Software-defined Block Storage for Kubernetes Storage and supports NVMe/TCP for networked, NVMe-class access. The platform uses an SPDK-based, user-space data path that reduces kernel overhead and helps improve CPU efficiency per I/O. That design matters when clusters run mixed tenants, when apps share CPU with storage services, and when p99 latency drives revenue impact.

Teams also need an operating model that stays stable during change. A storage layer should enforce QoS, keep isolation consistent, and support flexible deployments, including hyper-converged and disaggregated layouts. Those traits help keep performance steady during scaling, upgrades, and node events, not only during clean benchmark runs.

- Align topology and failure domains to your physical layout, then keep placement consistent across clusters.

- Enforce QoS so one workload cannot monopolize device queues or network paths.

- Validate performance during rebuild and rebalance, because that phase often breaks latency targets.

- Measure CPU-per-IO and queue behavior, then fix the hot spots first.

- Standardize benchmark profiles, then rerun them after every major change.
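To make the QoS item in the list above concrete, here is a minimal token-bucket sketch in Python. It illustrates the general rate-limiting technique only; it is not simplyblock's or Ceph's implementation, and the class name, the 200 IOPS rate, and the burst size are assumptions chosen for the example.

```python
class TokenBucket:
    """Per-volume I/O limiter: refills `rate` tokens per second, stores at most `burst`."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = now

    def allow(self, now, cost=1):
        """Admit one I/O at time `now` (seconds) if enough tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

# Hypothetical tenant capped at 200 IOPS with a burst allowance of 100 I/Os.
bucket = TokenBucket(rate=200, burst=100)
admitted_burst = sum(bucket.allow(now=0.0) for _ in range(200))   # 200 requests at once
admitted_later = sum(bucket.allow(now=0.25) for _ in range(200))  # 200 more, 250 ms later
print(admitted_burst, admitted_later)
```

The first wave is cut off at the burst allowance of 100, and the second wave gets only the 50 tokens refilled in 250 ms, so a noisy tenant cannot keep device queues full at the expense of others. A real storage layer would queue or delay the rejected I/O rather than drop it.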

Ceph vs Software-Defined Block Storage Roadmap: Themes to Watch

Storage teams increasingly standardize on NVMe media, Ethernet transports, and automation-first operations. That shift pushes designs toward clearer isolation and simpler policy mapping to Kubernetes primitives.

User-space acceleration continues to gain traction because it lowers CPU cost per I/O. Hardware offload via DPUs and IPUs also matters more as clusters pack more stateful workloads per node.

Related Terms

Teams review these pages when evaluating Ceph against Software-defined Block Storage.

- Ceph Replacement Architecture

- Performance Isolation in Multi-Tenant Storage

- Storage Rebalancing Impact

- NVMe over TCP SAN Alternative

Questions and Answers

Ceph can slow down when cluster membership, placement, and recovery workflows spike during failures or expansions. A software-defined block storage platform with a dedicated storage control plane and clear control plane vs data plane separation can keep provisioning and attach workflows stable while the hot I/O path stays predictable.

Ceph’s background recovery and rebalancing can contend with foreground I/O and inflate p99 even when average throughput looks fine. SDS platforms that treat storage rebalancing impact as a throttled workflow can reduce tail-latency spikes during scale events and node loss.

Ceph commonly exposes block via RADOS Block Device (RBD), while SDS platforms often optimize CSI workflows to reduce control-plane bottlenecks at scale. If attach/mount time is your pain point, compare how each handles CSI control plane vs data plane operations during node churn.

Ceph can restore redundancy automatically, but recovery can trigger large data movement that disrupts latency. SDS block stacks that budget background work can protect application SLOs while still meeting durability targets. The key is whether the system makes recovery “elastic” or forces aggressive movement that amplifies storage latency’s impact on databases.

A Ceph exit plan should redesign the I/O path and operational workflows, not just swap drivers. Use a target-state blueprint like Ceph replacement architecture and validate how the new software-defined block storage design handles placement, rebalancing, and upgrades under load.