Ceph vs SPDK


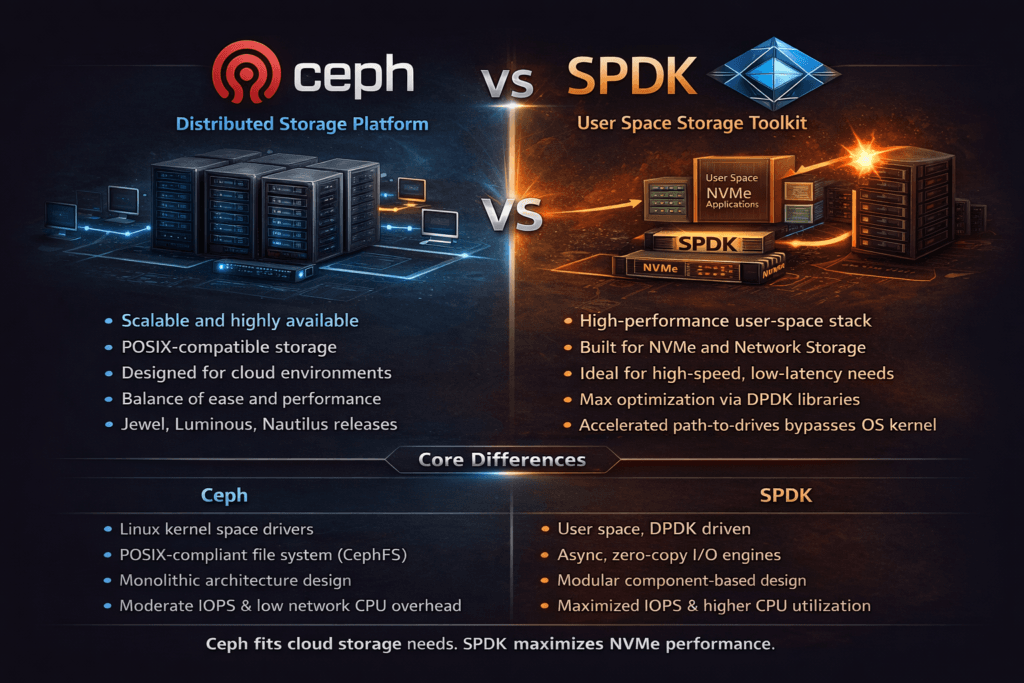

Ceph is a distributed storage platform that delivers object, block, and file services from a shared cluster. SPDK (Storage Performance Development Kit) is a user-space toolkit for building high-performance storage data paths that avoid much of the kernel I/O overhead. In practice, this comparison matters when leaders need to balance feature scope, operational effort, and performance consistency for Kubernetes Storage and Software-defined Block Storage.

Ceph often fits organizations that want a mature, general-purpose storage system with broad ecosystem support. SPDK fits organizations that prioritize low latency, high IOPS, and CPU efficiency, especially for NVMe-based designs and high-density clusters. The real decision is not “which is better,” but “which architecture matches our workload targets, our operating model, and our scaling path.”

Designing a Storage Stack for Latency, Scale, and Operational Control

A storage platform succeeds in production when it meets predictable service levels while staying manageable for platform teams. Ceph delivers a full storage system, including data distribution, replication, failure handling, and multiple front ends. That breadth can reduce vendor sprawl, but it also adds layers in the I/O path and increases tuning surface area.

SPDK-based systems start from a different premise. They optimize the data path first, then add control-plane features like multi-tenancy, QoS, snapshots, replication, and automation. By running I/O in user space and using polling, SPDK-based designs often reduce context switches and copy overhead. That difference can show up as more stable tail latency under load, which is what application owners notice.

Executives should evaluate both options against the cost of latency variance, not only average or peak numbers. A system that delivers slightly lower peak throughput but tighter p99 latency often produces higher business value for databases, streaming pipelines, and AI ingestion.
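One way to see why tail latency carries so much weight: application requests often issue many storage I/Os, and the request is only as fast as its slowest I/O. The sketch below is a back-of-the-envelope model, not a measurement. It assumes every I/O is independent (real systems only approximate this), and the function name `prob_slow_request` is hypothetical.

```python
def prob_slow_request(ios_per_request: int, percentile: float = 0.99) -> float:
    """Chance that at least one I/O in a request lands beyond the given percentile.

    Assumption: I/O latencies are independent of each other.
    """
    if ios_per_request < 0:
        raise ValueError("ios_per_request must be non-negative")
    if not 0.0 < percentile < 1.0:
        raise ValueError("percentile must be a fraction between 0 and 1")
    # P(all I/Os are fast) = percentile ** n, so P(at least one slow) is the complement.
    return 1.0 - percentile ** ios_per_request


for n in (1, 10, 100):
    share = prob_slow_request(n)
    print(f"{n:>3} I/Os per request: {share:.1%} of requests hit a p99-or-worse I/O")
```

Under this simplified model, roughly one in ten requests is affected at 10 I/Os per request, and well over half at 100 I/Os per request, which is why a tighter p99 can matter more than a higher peak.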


Ceph vs SPDK for Kubernetes Storage Workloads

Kubernetes Storage increases pressure on the storage plane because clusters run mixed workloads, change frequently, and often oversubscribe CPU. When pods reschedule, nodes drain, or autoscaling triggers sudden bursts, storage needs to hold latency steady.

Ceph commonly backs persistent volumes through CSI integrations, and many teams run it as a shared cluster service. That model works well when the organization already has Ceph expertise and can dedicate capacity and operational attention.

SPDK-based Software-defined Block Storage aligns with Kubernetes patterns when teams want consistent performance per volume, tenant isolation, and policy-driven QoS. The lower CPU cost per I/O can also improve node density, which matters when storage shares hosts with application compute. For many platform groups, the deciding factor becomes how well the system limits noisy neighbors while keeping operational effort predictable.

Networking Implications – Ceph, SPDK, and NVMe/TCP Fabrics

NVMe/TCP extends NVMe-oF over standard Ethernet and IP networking, which makes it easier to adopt in typical data center environments. For platform teams, the benefit is practical: NVMe/TCP can deliver strong performance without requiring a full RDMA fabric rollout.

Ceph benefits from fast networks and SSDs, but its end-to-end path typically includes more components than an SPDK-focused data path. SPDK-based designs frequently pair NVMe/TCP with user-space I/O to keep overhead low and reduce latency jitter under concurrency. This pairing matters when Kubernetes nodes run many tenants, and every extra microsecond at p99 becomes visible to applications.

If the organization expects rapid cluster growth, the fabric choice should align with operating reality. Simpler networks reduce integration risk, and data-path efficiency reduces CPU waste.

How to Benchmark and Validate Performance Claims

Benchmarking should mirror production behavior, not only synthetic peak tests. Storage teams should measure both throughput and latency distribution while tracking CPU and network utilization across clients and storage nodes. Single-run tests mislead, so repeatability matters more than a headline number.
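A simple repeatability check is to run the same test several times and look at how far the runs disagree. The sketch below uses the coefficient of variation (standard deviation divided by mean). The sample values and the 10% threshold are made up for illustration and are not a standard.

```python
from statistics import mean, stdev


def run_to_run_cv(results: list[float]) -> float:
    """Coefficient of variation (stdev / mean) across repeated benchmark runs."""
    if len(results) < 2:
        raise ValueError("need at least two runs to judge repeatability")
    return stdev(results) / mean(results)


# Hypothetical p99 latencies in microseconds from five identical runs.
p99_runs_us = [410.0, 395.0, 402.0, 640.0, 398.0]

cv = run_to_run_cv(p99_runs_us)
print(f"p99 run-to-run CV: {cv:.1%}")

# 10% is an arbitrary example threshold; pick one that fits your SLOs.
if cv > 0.10:
    print("Runs disagree too much; investigate before quoting a number.")
```

In this made-up data set a single outlier run dominates the spread, which is exactly the kind of result a single-run test would either hide or overstate.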

Use one consistent methodology across platforms, and keep the environment stable. Run tests long enough to reach steady state, then validate behavior during stress and recovery. Tail latency, rebuild impact, and volume-level fairness reveal more about a platform’s production fitness than maximum sequential bandwidth.

- Measure p50, p95, and p99 latency for reads and writes under realistic queue depths.

- Track CPU cost per IOPS on client nodes and storage nodes.

- Test mixed workloads, including random read/write blends and small-block patterns.

- Validate performance during failure and recovery events, not only in healthy states.
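The first two checks above can be sketched as a small post-processing step over raw results. Benchmark tools such as fio already report latency percentiles, so this is only an illustration of what the numbers mean. The helper names, the nearest-rank method, and the sample values are assumptions made for this example.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def cpu_us_per_io(cpu_core_seconds, io_count):
    """CPU time spent per I/O in microseconds (core-seconds consumed / I/Os completed)."""
    if io_count <= 0:
        raise ValueError("io_count must be positive")
    return cpu_core_seconds * 1_000_000 / io_count


# Hypothetical latency samples in microseconds (1, 2, ..., 100).
latencies_us = list(range(1, 101))
for pct in (50, 95, 99):
    print(f"p{pct}: {percentile(latencies_us, pct)} us")

# Hypothetical run: 120 core-seconds of CPU consumed while completing 6,000,000 I/Os.
print(f"CPU cost: {cpu_us_per_io(120, 6_000_000):.1f} us per I/O")
```

Computing the same two figures for client nodes and storage nodes, and for reads and writes separately, gives a like-for-like basis for comparing platforms.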

Tuning Paths That Reduce Tail Latency and CPU Waste

Ceph improvements usually come from disciplined cluster design and careful control of background activity. Teams tune placement, device classes, recovery limits, and network segmentation to protect foreground workloads. That work can pay off, but it requires operational maturity and ongoing attention.

SPDK-oriented tuning usually targets deterministic resource usage. Teams pin CPU cores, allocate hugepages, tune queue depths, and keep the I/O path in user space. When done well, the result is a tighter latency envelope and more predictable scaling. This approach also aligns with infrastructure trends like DPUs and IPUs, where offload can reduce host CPU pressure while preserving high I/O rates.
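Two of these steps lend themselves to a quick pre-flight check. The sketch below builds a hexadecimal CPU mask from a list of core IDs (SPDK applications generally accept a core mask at startup; check the documentation for your version) and reads the hugepage counters from `/proc/meminfo`-style text on Linux. The helper names and the sample values are hypothetical.

```python
def core_mask(cores):
    """Build a hex CPU mask where bit i is set when core i is reserved."""
    mask = 0
    for core in cores:
        if core < 0:
            raise ValueError("core IDs must be non-negative")
        mask |= 1 << core
    return hex(mask)


def parse_hugepages(meminfo_text):
    """Extract hugepage counters from /proc/meminfo-style text (Hugepagesize is in kB)."""
    wanted = {"HugePages_Total", "HugePages_Free", "Hugepagesize"}
    found = {}
    for line in meminfo_text.splitlines():
        key, sep, rest = line.partition(":")
        if sep and key.strip() in wanted:
            found[key.strip()] = int(rest.split()[0])
    return found


# Sample text standing in for the contents of /proc/meminfo on a Linux host.
SAMPLE_MEMINFO = """\
MemTotal:       65843212 kB
HugePages_Total:    1024
HugePages_Free:     1000
Hugepagesize:       2048 kB
"""

info = parse_hugepages(SAMPLE_MEMINFO)
reserved_mib = info["HugePages_Total"] * info["Hugepagesize"] // 1024

print("core mask for cores 2 and 3:", core_mask([2, 3]))
print("hugepage memory reserved (MiB):", reserved_mib)
```

On a real host, the same parser can be fed the output of `open("/proc/meminfo").read()` to confirm that the reservation matches what the storage process expects before it starts.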

Across both models, predictable performance comes from controlling contention. Reserve CPU, standardize networking, and enforce QoS so one workload cannot silently degrade another.
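To make the QoS point concrete, here is a minimal token-bucket sketch of a per-volume IOPS cap. It is a generic illustration of the technique, not a description of how Ceph, SPDK, or simplyblock implement QoS. The class name and parameters are hypothetical, and time is passed in explicitly so the behavior is deterministic.

```python
class TokenBucket:
    """Per-volume IOPS limiter: each I/O spends one token; tokens refill at a fixed rate."""

    def __init__(self, iops_limit: float, burst: float, now: float = 0.0):
        self.rate = iops_limit   # tokens added per second
        self.capacity = burst    # maximum tokens that can accumulate
        self.tokens = burst      # start with a full bucket
        self.last = now          # timestamp of the last refill, in seconds

    def allow(self, now: float) -> bool:
        """Return True if an I/O arriving at time `now` may proceed."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# A noisy tenant capped at 100 IOPS with a burst allowance of 10 I/Os.
noisy = TokenBucket(iops_limit=100, burst=10)

admitted_at_start = sum(noisy.allow(now=0.0) for _ in range(50))
admitted_one_second_later = sum(noisy.allow(now=1.0) for _ in range(50))

print("admitted from first burst of 50:", admitted_at_start)
print("admitted from second burst of 50:", admitted_one_second_later)
```

In a real data path the caller would pass a monotonic clock reading such as `time.monotonic()` and queue rejected I/Os rather than drop them. The point of the sketch is that the cap applies per volume, so a burst from one tenant cannot consume capacity budgeted for another.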

Side-by-Side Comparison

The fastest way to align stakeholders is to compare the platforms on the attributes that affect production outcomes. The table below summarizes common differences that show up in real deployments.

| Category | Ceph (distributed storage platform) | SPDK-based Software-defined Block Storage |
|---|---|---|
| Core intent | Broad storage services, shared cluster model | High-performance user-space data path with storage services layered on |
| Typical strength | Mature ecosystem, multi-service scope | Low latency, CPU efficiency, predictable tail behavior |
| Operational focus | Cluster tuning, recovery controls, capacity planning | CPU pinning, user-space I/O tuning, QoS policy design |
| Kubernetes fit | Strong when teams already run and operate Ceph at scale | Strong when teams prioritize per-volume fairness, latency SLOs, and density |
| NVMe/TCP impact | Gains from faster networks, but the path includes more layers | Pairs NVMe/TCP with user-space I/O to keep overhead low and reduce latency jitter under concurrency |

Predictable Ceph vs SPDK Results with Simplyblock™

Simplyblock™ targets predictable performance for Kubernetes Storage by combining SPDK-based, user-space design with enterprise controls that matter in multi-tenant environments. This approach aims to reduce copy overhead, lower CPU consumption per I/O, and keep latency stable as concurrency grows. It also supports NVMe/TCP and fits both hyper-converged and disaggregated deployment models, which helps teams standardize a single storage strategy across clusters.

For organizations comparing Ceph and SPDK, simplyblock™ aims to provide a practical path to SPDK-class performance without forcing teams to build a storage system themselves. Platform teams typically care about consistent outcomes: performance isolation, policy-driven QoS, automation hooks, and operational clarity that supports predictable scaling.

Where the Architecture Is Heading Next

Storage architectures are moving toward greater efficiency per core and better alignment with cloud-native operations. As DPUs and IPUs become more common, user-space and offload-friendly designs gain relevance because they shift work away from the kernel and reduce host CPU pressure. At the same time, Kubernetes Storage continues to demand automation, fast provisioning, and consistent behavior during churn.

Ceph will keep evolving as a broad, feature-rich distributed platform. SPDK-driven designs will keep pushing the performance envelope, especially for NVMe-oF, NVMe/TCP, and accelerator-assisted data paths. The organizations that win with either approach will set clear latency and availability targets, then select the architecture that hits those targets with the least operational friction.

Related Terms

Teams often review these pages alongside Ceph vs SPDK.

- SPDK (Storage Performance Development Kit)

- NVMe over TCP (NVMe/TCP)

- NVMe over Fabrics (NVMe-oF)

- Software-defined Storage (SDS)

Questions and Answers

Ceph is a full distributed storage system with its own control plane, placement, and recovery behaviors, while SPDK is a user-space toolkit used to build ultra-fast data paths. The practical “Ceph vs SPDK” comparison is whether you want Ceph’s cluster features at the cost of a heavier I/O stack, or an SPDK-based design that minimizes context switches and copies for steadier p99 latency.

Ceph performance often becomes CPU-bound under mixed load because replication/EC, networking, and background tasks contend with foreground I/O. SPDK’s user-space, poll-mode approach can push higher IOPS per core by shrinking syscall overhead and kernel scheduling effects, which is why teams evaluate SPDK vs kernel storage stack when chasing predictable tail latency.

Ceph is typically consumed via RBD/CephFS/S3 and its internal messaging stack, while SPDK commonly fronts NVMe devices as an SPDK Target over NVMe-oF transports (including NVMe/TCP). If your design goal is a thin, high-throughput data plane over Ethernet, SPDK-based NVMe-oF targets can be a better fit than routing block I/O through a broader Ceph cluster pipeline.

In Ceph, rebalancing and recovery are core cluster behaviors that can reshape performance during OSD churn, expansion, or failures; you often spend time tuning data movement and observing its impact. With SPDK, data movement behavior depends on the storage system built on top of it, so the key question becomes how the system budgets background work versus foreground I/O. If you’re diagnosing pain points, compare against storage rebalancing and its known side effects.

A Ceph exit plan isn’t just “new data path”; it changes placement logic, failure-domain assumptions, and day-2 ops like upgrades and rebalancing. An SPDK-based engine usually emphasizes a lean, explicit data plane plus a separate policy/control layer, so you’ll want a target-state blueprint before moving production volumes. Map this using the Ceph replacement architecture and validate control/data responsibilities with the storage control plane concept.