Cross-Cluster Replication

Terms related to simplyblock

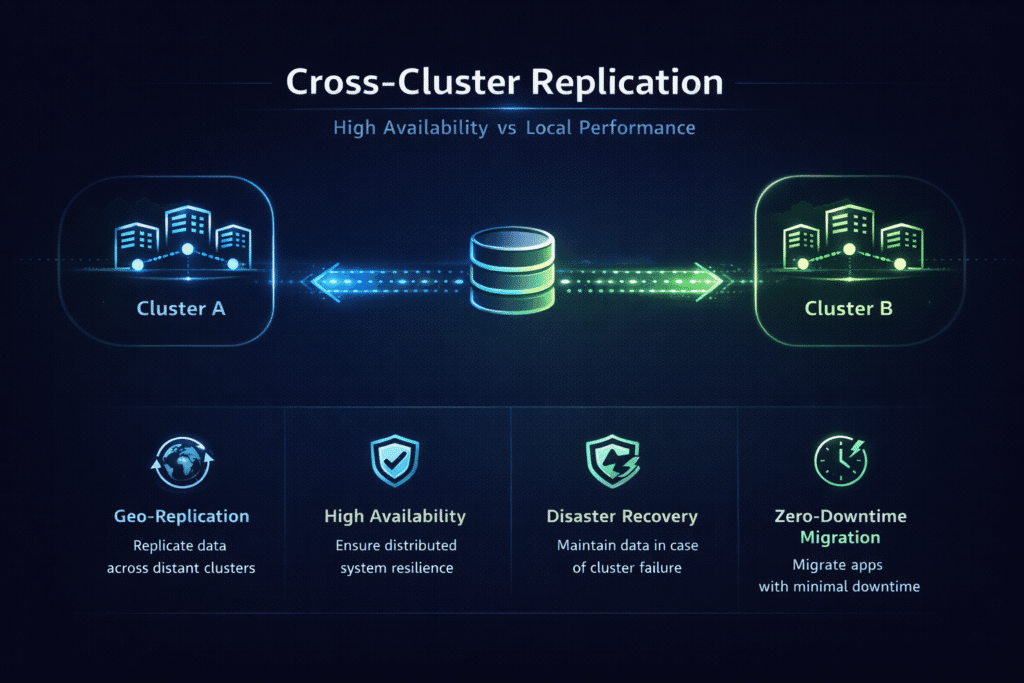

Cross-cluster replication copies data from one cluster to another cluster, often across zones, data centers, or clouds. Teams use it to survive full-cluster outages, reduce downtime, and make recovery steps repeatable instead of stressful.

The real question isn’t whether to replicate. It’s where replication lives: in the app, in the database, or in the storage layer.

Cross-Cluster Replication vs In-Cluster Replicas

In-cluster replicas protect you from node failures and some local issues. They usually won’t protect you when the whole cluster fails due to a control-plane outage, a zone loss, or a bad change that takes everything down.

Cross-cluster replication targets a bigger failure domain. It keeps a second copy of data in a separate cluster so you can fail over when you lose the primary cluster.

🚀 Replicate Data Across Clusters, Natively in Kubernetes

Use Simplyblock to protect stateful workloads with fast snapshots and cross-cluster recovery workflows, so you can hit RPO/RTO targets without slowing production writes.

Designing Multi-Cluster DR for Kubernetes Stateful Apps

Kubernetes adds two realities: pods move, and storage has placement rules. A good plan keeps data consistent and keeps failover steps simple.

Many teams combine replication with snapshots. Snapshots give you a clean rollback point after corruption, ransomware, or a bad deployment. Database-native replication can also work well when you accept database-specific tooling and runbooks.
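Replication copies corruption, deletions and ransomware-encrypted blocks to the other cluster just as faithfully as good data, which is why the snapshot half of the plan matters: recovery means picking the last snapshot taken before the damage began. The sketch below is a minimal, hypothetical helper (the `Snapshot` type and `pick_rollback_point` function are illustrative, not a simplyblock or Kubernetes API) and assumes you can estimate when the corruption started.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional, Sequence


@dataclass(frozen=True)
class Snapshot:
    # Hypothetical snapshot record: a name plus the time it was taken.
    name: str
    taken_at: datetime


def pick_rollback_point(
    snapshots: Sequence[Snapshot],
    corruption_started_at: datetime,
    safety_margin: timedelta = timedelta(0),
) -> Optional[Snapshot]:
    """Return the newest snapshot taken strictly before the estimated corruption time.

    safety_margin moves the cutoff earlier to cover uncertainty about when the
    corruption, encryption, or bad deployment actually began.
    """
    cutoff = corruption_started_at - safety_margin
    candidates = [s for s in snapshots if s.taken_at < cutoff]
    if not candidates:
        return None  # no clean snapshot in range; fall back to an older backup
    return max(candidates, key=lambda s: s.taken_at)


if __name__ == "__main__":
    snaps = [
        Snapshot("snap-0100", datetime(2024, 5, 1, 1, 0)),
        Snapshot("snap-0200", datetime(2024, 5, 1, 2, 0)),
        Snapshot("snap-0300", datetime(2024, 5, 1, 3, 0)),
    ]
    bad_deploy = datetime(2024, 5, 1, 2, 30)
    print(pick_rollback_point(snaps, bad_deploy).name)
    print(pick_rollback_point(snaps, bad_deploy, timedelta(minutes=45)).name)
```

The safety margin trades a little extra data loss for confidence that the chosen snapshot is actually clean.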

Latency, Bandwidth, and Distance – The Real Constraints

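Replication always spends network and write budget. Synchronous replication can reduce data loss, but it often adds write latency because a write completes only after the remote copy acknowledges it, so every write pays at least one network round trip. Asynchronous replication acknowledges writes locally and ships them afterward, which protects performance over distance but means the replica lags behind the primary.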
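A quick back-of-the-envelope model shows why distance dominates synchronous latency. This sketch assumes light travels roughly 200 km per millisecond in optical fiber and that local and remote writes are issued in parallel; it counts propagation delay only, so real paths (switches, routers, indirect fiber routes) will be slower. The function names are illustrative.

```python
FIBER_KM_PER_MS = 200.0  # assumption: light in optical fiber covers roughly 200 km per ms


def round_trip_ms(distance_km: float) -> float:
    """Propagation-only round trip; real network paths add switching and routing delay."""
    return 2 * distance_km / FIBER_KM_PER_MS


def sync_write_ms(local_ms: float, remote_ms: float, distance_km: float) -> float:
    # Assumes local and remote writes run in parallel and the write is acknowledged
    # once both finish; the remote side costs a round trip plus its own write time.
    return max(local_ms, round_trip_ms(distance_km) + remote_ms)


def async_write_ms(local_ms: float) -> float:
    # Acknowledged after the local write; shipping to the replica happens later (lag).
    return local_ms


if __name__ == "__main__":
    for km in (10, 100, 1000):
        print(f"{km:>5} km: sync {sync_write_ms(0.1, 0.1, km):.2f} ms, "
              f"async {async_write_ms(0.1):.2f} ms")
```

With 0.1 ms local and remote writes, a 1,000 km link turns a sub-millisecond write into roughly 10 ms, while the asynchronous write stays at the local cost.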

If your link saturates or your write rate spikes, lag grows. Your design must handle that reality with caps, schedules, and clear recovery targets.
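The lag behavior can be modeled as a queue: whenever writes outpace the replication link (or its configured rate cap), unshipped data piles up, and it only drains once writes drop below the link rate. This is a simplified, hypothetical model in one-second steps; the "lag" it reports is the time needed to drain the backlog if writes stopped, which approximates rather than equals the age of the oldest unshipped write.

```python
from typing import List, Sequence, Tuple


def simulate_backlog(
    write_mb_per_s: Sequence[float], link_mb_per_s: float
) -> List[Tuple[float, float]]:
    """Per-second (backlog_mb, approx_lag_s) for an asynchronous replica.

    link_mb_per_s is the replication throughput actually available: the link
    capacity or any configured rate cap, whichever is lower.
    """
    if link_mb_per_s <= 0:
        raise ValueError("link_mb_per_s must be positive")
    backlog = 0.0
    timeline = []
    for written in write_mb_per_s:
        # Backlog grows when writes exceed the link rate and drains otherwise.
        backlog = max(0.0, backlog + written - link_mb_per_s)
        # Approximate lag: time to drain the backlog at full link rate.
        timeline.append((backlog, backlog / link_mb_per_s))
    return timeline


if __name__ == "__main__":
    # Hypothetical workload: steady 80 MB/s with a three-second spike to 150 MB/s.
    writes = [80, 80, 150, 150, 150, 80, 80]
    for second, (backlog, lag) in enumerate(simulate_backlog(writes, 100)):
        print(f"t={second}s backlog={backlog:.0f} MB lag~{lag:.1f}s")
```

Note that lag keeps growing for the whole spike and then shrinks only slowly, because the link has just 20 MB/s of headroom once writes return to normal.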

Cross-Cluster Replication SLAs – Lag, RPO, and RTO

Track three numbers and keep them visible: replication lag, RPO, and RTO. Lag shows how far the replica trails the primary. RPO is the maximum data loss you can accept, expressed as time (for example, the last five minutes of writes). RTO is the maximum time allowed to restore service.

Test these under load, not only on a quiet system. A plan that looks great at low traffic can fall behind during peak writes.
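One way to make a drill pass or fail is to record lag during a load test, time the restore, and compare both against the targets. With asynchronous replication, the data lost at failover is roughly the lag at that moment, so the worst observed lag is the number that must stay inside the RPO. The sketch below is hypothetical (`evaluate_drill` and the sample values are illustrative) and uses a simple nearest-rank percentile.

```python
import math
from typing import Dict, Sequence


def nearest_rank_percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sample list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def evaluate_drill(
    lag_samples_s: Sequence[float],
    restore_duration_s: float,
    rpo_s: float,
    rto_s: float,
) -> Dict[str, object]:
    """Compare lag seen under load and a measured restore time against RPO/RTO."""
    if not lag_samples_s:
        raise ValueError("need at least one lag sample")
    worst_lag = max(lag_samples_s)
    return {
        "p99_lag_s": nearest_rank_percentile(lag_samples_s, 99),
        "max_lag_s": worst_lag,
        # Failing over at the worst moment would lose about worst_lag seconds of writes.
        "meets_rpo": worst_lag <= rpo_s,
        "meets_rto": restore_duration_s <= rto_s,
    }


if __name__ == "__main__":
    quiet = [1, 1, 2, 1, 2]          # hypothetical lag samples at low traffic
    peak = [1, 2, 8, 20, 35, 12]     # hypothetical lag samples during peak writes
    print(evaluate_drill(quiet, restore_duration_s=600, rpo_s=30, rto_s=900))
    print(evaluate_drill(peak, restore_duration_s=600, rpo_s=30, rto_s=900))
```

The same plan passes on the quiet samples and misses a 30-second RPO at peak, which is exactly the gap a low-traffic test would hide.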

A Simple Decision Framework for Replication

- Choose application-level replication when the app already supports it and you can automate failover cleanly across clusters.

- Choose database-native replication when you need a hot standby and you accept database-specific limits and operations.

- Choose storage-level replication when you want one protection method that covers many workloads without rewriting each app.

- Prefer asynchronous replication for long distances and stable performance under heavy writes.

- Use synchronous replication only when you can tolerate added write latency and your network path stays tight and reliable.

- Prove the plan with drills, then tune until p95/p99 write latency stays stable during replication and recovery.
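The framework above can be encoded as a small helper that returns candidate layers and a replication mode. This is a hypothetical sketch, not a product feature: the `Requirements` fields are illustrative, and the synchronous check assumes the added write latency is roughly the propagation round trip (about 200 km of fiber per millisecond), ignoring switching delay.

```python
from dataclasses import dataclass
from typing import List, Tuple

FIBER_KM_PER_MS = 200.0  # assumption: rough propagation speed of light in optical fiber


@dataclass(frozen=True)
class Requirements:
    app_supports_replication: bool
    app_failover_automated: bool
    needs_hot_db_standby: bool
    many_workloads: bool
    distance_km: float
    max_added_write_ms: float  # extra write latency you can afford
    near_zero_rpo: bool


def recommend(req: Requirements) -> Tuple[List[str], str]:
    """Map the decision framework to candidate layers and a replication mode."""
    layers = []
    if req.app_supports_replication and req.app_failover_automated:
        layers.append("application-level")
    if req.needs_hot_db_standby:
        layers.append("database-native")
    # Storage-level covers many workloads at once, and is the fallback otherwise.
    if req.many_workloads or not layers:
        layers.append("storage-level")

    # Synchronous only when a strict loss target needs it AND the round trip fits
    # the latency budget; asynchronous is the default.
    round_trip_ms = 2 * req.distance_km / FIBER_KM_PER_MS
    sync_ok = req.near_zero_rpo and round_trip_ms <= req.max_added_write_ms
    return layers, ("synchronous" if sync_ok else "asynchronous")


if __name__ == "__main__":
    metro = Requirements(False, False, False, True, 20, 1.0, True)
    cross_region = Requirements(False, False, False, True, 800, 1.0, True)
    print(recommend(metro))
    print(recommend(cross_region))
```

The output is only a starting point; the drills in the last bullet decide whether the chosen mode actually holds p95/p99 latency under load.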

Comparing Replication Options Side by Side

Compare the options by what you must guarantee during an outage: how much data you can lose (RPO) and how quickly you must restore service (RTO). Also account for the hidden cost: some approaches add write latency, while others add ongoing operational work.

| Option | Typical RPO | Typical RTO | Performance impact | Ops complexity | Best fit |
|---|---|---|---|---|---|
| App-level replication | App-dependent | Often fast | App-specific | High (per app) | One critical app |
| Database-native replication | Low to medium | Fast failover possible | Write/replica overhead | Medium | DB-led HA/DR |
| Storage-level async replication | Low to medium | Fast cutover/restore | Lower write penalty | Lower (covers many apps) | Multi-workload DR |
| Snapshot + restore across clusters | Medium | Medium | Low during steady state | Medium | Rollback and ransomware recovery |
| Synchronous storage replication | Near-zero possible | Fast | Higher write latency risk | Medium | Short distance, strict loss targets |

Cross-Cluster Replication with Simplyblock for Fast Recovery

Simplyblock aligns well with cross-cluster recovery goals because it focuses on fast snapshots plus replication-driven recovery workflows for stateful Kubernetes environments. That approach helps teams protect many workloads with one model, instead of building a separate replication story per app.

If your priority is “recover fast without slowing the hot path,” this is where snapshot speed, replication efficiency, and predictable cutover steps matter most.

What’s Next – Policy-Driven Replication and Faster Drills

Policy-driven controls are becoming more common: set an RPO/RTO target, then let the platform manage snapshot cadence, replication rate, and retention. Consistent snapshots and replication across multiple volumes also matter more each year, since most real apps span more than one disk.
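To see how an RPO target can drive the other settings, here is a minimal sketch for snapshot-based asynchronous replication. It assumes that if the primary fails just before a new snapshot finishes shipping, you fall back to the previous one, so the worst-case loss is about one snapshot interval plus the shipping time. `plan_from_rpo` and its inputs are hypothetical, and it treats every written MB as changed data, which overestimates bandwidth when writes hit the same blocks repeatedly.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationPlan:
    snapshot_interval_s: float
    min_bandwidth_mb_s: float
    snapshots_to_keep: int


def plan_from_rpo(
    rpo_s: float,
    change_rate_mb_s: float,
    retention_s: float,
    interval_fraction: float = 0.5,
) -> ReplicationPlan:
    """Derive snapshot cadence, replication bandwidth, and retention from an RPO.

    Worst-case loss is roughly interval + ship time, so keeping it within the RPO
    leaves (rpo - interval) seconds to ship each snapshot's changes.
    """
    if not 0 < interval_fraction < 1:
        raise ValueError("interval_fraction must be strictly between 0 and 1")
    interval = rpo_s * interval_fraction
    ship_window = rpo_s - interval
    # Upper bound on changed data per interval: repeated writes to a block ship once.
    changed_mb = change_rate_mb_s * interval
    return ReplicationPlan(
        snapshot_interval_s=interval,
        min_bandwidth_mb_s=changed_mb / ship_window,
        snapshots_to_keep=math.ceil(retention_s / interval),
    )


if __name__ == "__main__":
    # Hypothetical inputs: 5-minute RPO, 20 MB/s of changed data, 24-hour retention.
    print(plan_from_rpo(rpo_s=300, change_rate_mb_s=20, retention_s=86_400))
```

Splitting the RPO evenly between interval and ship window means the link must sustain at least the average change rate; taking snapshots more often shortens the interval but demands more bandwidth per snapshot window. Peaks above the average still need headroom, and RTO is a separate constraint this sketch does not model.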

Safer automation for failover testing is rising in priority, because frequent drills catch gaps early without risking production data. Expect more app-aware consistency groups and orchestration hooks that restore services in the right order and keep recovery predictable.
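Restoring "in the right order" is essentially a dependency-ordering problem. The sketch below groups services into restore waves with Python's standard `graphlib.TopologicalSorter` (Python 3.9+), so each service comes back only after everything it depends on, and services in the same wave can be restored in parallel. The service names and dependency map are hypothetical, and the sketch covers ordering only, not the multi-volume consistency groups mentioned above.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Dict, Iterable, List


def restore_waves(depends_on: Dict[str, Iterable[str]]) -> List[List[str]]:
    """Group services into restore waves.

    Each service lands in a wave after every service it depends on; services in
    one wave can be restored in parallel. Raises graphlib.CycleError on cycles.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all dependencies already restored
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    # Hypothetical service map: each key lists the services it needs first.
    deps = {
        "postgres": [],
        "redis": [],
        "api": ["postgres", "redis"],
        "worker": ["postgres"],
        "frontend": ["api"],
    }
    for n, wave in enumerate(restore_waves(deps), start=1):
        print(f"wave {n}: {', '.join(wave)}")
```

Failing on a dependency cycle before the drill starts is deliberate: a cycle means no restore order can be correct.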

Related Terms

These glossary pages pair well with Cross-Cluster Replication when you compare replication modes, snapshot-based workflows, and recovery behavior.

- Asynchronous Storage Replication

- Synchronous Storage Replication

- Replication

- Snapshot vs Clone in Storage

Questions and Answers

Cross-cluster replication keeps data written in one Kubernetes cluster mirrored to one or more other clusters, either synchronously or with a short asynchronous lag. This keeps multi-region stateful workloads available and gives disaster recovery setups a current copy to fail over to.

Cross-cluster replication relies on the underlying storage system or on replication middleware to sync volume data between clusters. Combined with software-defined storage, this supports repeatable failover and scalable multi-cluster environments.
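Storage-level sync usually avoids copying whole volumes by shipping only the blocks that changed since the last replicated state. The sketch below illustrates that idea generically and is not how any particular product implements it: it hashes fixed-size blocks of two same-sized volume images to find the changed ones, then copies just those onto the replica. Production systems typically track dirty blocks as writes happen or read them from snapshot metadata instead of rehashing entire volumes; the hashing here only keeps the example self-contained. The 4 KiB block size and all function names are illustrative.

```python
import hashlib
from typing import List

BLOCK_SIZE = 4096  # bytes; illustrative block size


def block_digests(volume: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    """SHA-256 digest of every fixed-size block in a volume image."""
    return [
        hashlib.sha256(volume[i:i + block_size]).digest()
        for i in range(0, len(volume), block_size)
    ]


def changed_blocks(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> List[int]:
    """Indices of blocks that differ between two same-sized volume images."""
    if len(old) != len(new):
        raise ValueError("sketch assumes fixed-size volumes")
    pairs = zip(block_digests(old, block_size), block_digests(new, block_size))
    return [i for i, (a, b) in enumerate(pairs) if a != b]


def apply_delta(replica: bytearray, new: bytes, indices: List[int],
                block_size: int = BLOCK_SIZE) -> None:
    """Copy only the changed blocks onto the replica image, in place."""
    for i in indices:
        start = i * block_size
        replica[start:start + block_size] = new[start:start + block_size]


if __name__ == "__main__":
    base = bytes(4 * BLOCK_SIZE)   # last replicated state (all zeros)
    current = bytearray(base)
    current[5000] = 1              # a write inside block 1
    current[3 * BLOCK_SIZE] = 2    # a write inside block 3
    delta = changed_blocks(base, bytes(current))
    replica = bytearray(base)
    apply_delta(replica, bytes(current), delta)
    print(delta, replica == current)
```

Only two of the four blocks cross the network here, which is why the write pattern, not the volume size, drives replication bandwidth.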

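NVMe over TCP can serve as a high-speed transport in replicated storage setups. It delivers low latency and high throughput over standard Ethernet and TCP/IP networks, although the physical distance between geographically distributed clusters still adds round-trip latency that no transport can remove.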

Cross-cluster replication minimizes data loss and downtime by keeping an up-to-date copy at another site. This is key for meeting SLA targets and supports cloud cost optimization by reducing reliance on idle failover capacity.

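Challenges include network overhead, consistency across zones, and storage provisioning complexity. CSI support and topology-aware Kubernetes orchestration help with provisioning and placement, while network overhead and cross-zone consistency still call for capacity planning and regular failover tests.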