CSI Control Plane vs Data Plane


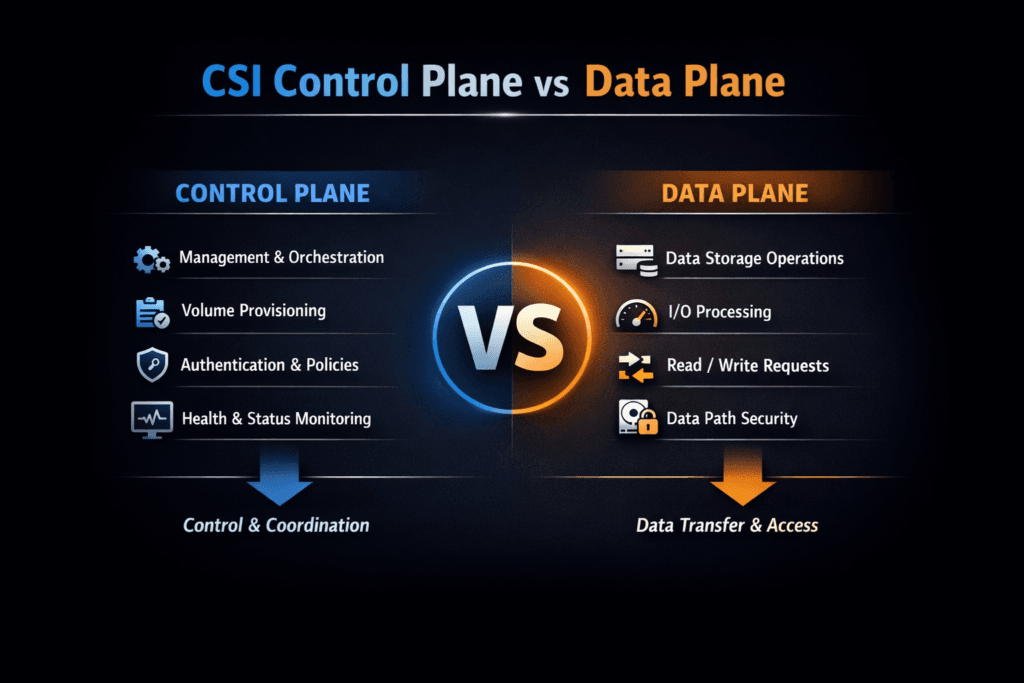

In the Container Storage Interface (CSI), the control plane decides what should happen to a volume, and the data plane moves the actual I/O for reads and writes. In Kubernetes Storage, the control plane covers provisioning, attach/detach, topology decisions, snapshots, and resize workflows. The data plane covers the hot I/O path from your pod to the block device and back, including CPU cost per I/O, queue behavior, and p95/p99 latency.

This split matters because Kubernetes can look “healthy” while applications still see jitter. When lifecycle operations collide with runtime I/O, you often get slow rollouts, long drains, or latency spikes during reschedules, even when raw media is fast. The CSI spec defines the interfaces, but the backend design determines whether lifecycle work stays off the I/O path.
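One way to check whether lifecycle work leaks into the I/O path is to tag each latency sample by whether it falls inside a lifecycle event window (a drain, attach, or snapshot) and compare tail latency on both sides. The Python sketch below assumes you already have `(timestamp, latency)` samples from a benchmark and start/end times for each lifecycle event. The function names and the trace are hypothetical and for illustration only.

```python
import math

def nearest_rank(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("no samples in this bucket")
    ordered = sorted(values)
    return ordered[max(math.ceil(pct * len(ordered) / 100), 1) - 1]

def split_by_windows(samples, windows):
    """Partition (timestamp_s, latency_ms) samples into inside/outside any lifecycle window."""
    inside, outside = [], []
    for ts, latency in samples:
        if any(start <= ts <= end for start, end in windows):
            inside.append(latency)
        else:
            outside.append(latency)
    return inside, outside

def tail_during_lifecycle(samples, windows, pct=99):
    """Return (tail outside windows, tail inside windows) for side-by-side comparison."""
    inside, outside = split_by_windows(samples, windows)
    return nearest_rank(outside, pct), nearest_rank(inside, pct)

# Hypothetical trace: steady 0.2 ms I/O, plus a node drain from t=10 s to t=12 s.
samples = [(t, 0.2) for t in range(10)] + [(10, 1.5), (11, 2.4), (12, 0.9)] + [(t, 0.2) for t in range(13, 20)]
print(tail_during_lifecycle(samples, windows=[(10, 12)]))  # (0.2, 2.4)
```

If the p99 inside the windows is far above the p99 outside them, lifecycle work is touching the hot path, even if both medians look the same.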

Control and I/O Path Design for Cloud-Native Storage

Teams get better outcomes when they treat the control plane and the data plane as separate budgets. The control plane should finish volume operations quickly under churn, and the data plane should stay stable under load. Software-defined Block Storage platforms that keep metadata and orchestration off the hot path reduce tail latency risk, especially for databases and streaming systems.

For high-performance designs, user-space stacks often help because they cut context switching and reduce copies on the critical path. SPDK is a common foundation for that approach, particularly for NVMe and NVMe-oF transports.

CSI Control Plane vs Data Plane in Kubernetes Storage

In Kubernetes Storage, the control plane begins when a PVC gets created and ends when the system reports the volume as provisioned, attached, and ready. The data plane begins when the application issues I/O and stays active for the full pod lifetime.

You can usually spot control plane pressure through symptoms like slow PVC binding, delayed attach after node drain, or snapshot operations that block deploy pipelines. You can spot data plane pressure through p99 latency jumps, CPU saturation on storage nodes, or noisy-neighbor effects when multiple tenants compete for the same backend resources. CSI plugins separate controller responsibilities from node responsibilities, but the best implementations also keep runtime I/O independent of lifecycle “bookkeeping.”
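The controller/node split maps directly onto the RPCs defined in the CSI specification. The sketch below lists the main calls a typical block volume goes through, in order, from PVC creation to a running pod, and the reverse calls during a drain. Each call is tagged with the CSI service that implements it. The RPC names come from the CSI spec. The ordering assumes a driver that advertises the `PUBLISH_UNPUBLISH_VOLUME` controller capability and the `STAGE_UNSTAGE_VOLUME` node capability. The `rpcs_for` helper is hypothetical.

```python
from enum import Enum

class Service(Enum):
    CONTROLLER = "Controller"  # usually a Deployment or StatefulSet with external-* sidecars
    NODE = "Node"              # usually a DaemonSet, one instance per node

# Bring-up path for one volume, in call order. Drivers without the
# PUBLISH_UNPUBLISH_VOLUME / STAGE_UNSTAGE_VOLUME capabilities skip those calls.
BRING_UP = [
    ("CreateVolume", Service.CONTROLLER),             # sent by external-provisioner
    ("ControllerPublishVolume", Service.CONTROLLER),  # sent by external-attacher
    ("NodeStageVolume", Service.NODE),                # sent by kubelet, e.g. connect and mount a staging path
    ("NodePublishVolume", Service.NODE),              # sent by kubelet, bind-mount into the pod
]

# Reverse path during a drain. DeleteVolume only runs if the PV itself is deleted.
TEAR_DOWN = [
    ("NodeUnpublishVolume", Service.NODE),
    ("NodeUnstageVolume", Service.NODE),
    ("ControllerUnpublishVolume", Service.CONTROLLER),
]

def rpcs_for(path, service):
    """Return the RPC names one CSI service handles on a given path, in call order."""
    return [name for name, svc in path if svc is service]

print(rpcs_for(BRING_UP, Service.NODE))  # ['NodeStageVolume', 'NodePublishVolume']
```

Neither list contains a read or write call. CSI only prepares and releases the volume. Once `NodePublishVolume` returns, application I/O flows through the block device path and never through the CSI driver.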

CSI Control Plane vs Data Plane and NVMe/TCP

NVMe/TCP helps because it carries the native NVMe command set over standard TCP/IP Ethernet. That fits Kubernetes scaling patterns and, in many environments, avoids specialized fabric requirements such as RDMA-capable networks. When the data plane uses NVMe/TCP efficiently, it can reduce protocol overhead compared with older network block protocols such as iSCSI. It can also improve cost-per-IOPS by lowering CPU burn per I/O.
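The link between CPU burn per I/O and cost-per-IOPS is simple multiplication: cores consumed equals IOPS times CPU time per I/O. The per-I/O costs below are hypothetical and only illustrate the arithmetic. They are not measured figures for any protocol or product.

```python
def cores_needed(target_iops, cpu_us_per_io):
    """CPU cores consumed by the storage path: IOPS x CPU-seconds per I/O."""
    return target_iops * cpu_us_per_io / 1_000_000

# Hypothetical per-I/O CPU costs for two stacks serving the same 1M IOPS.
for label, cost_us in [("leaner stack", 4.0), ("heavier stack", 10.0)]:
    print(f"{label}: {cores_needed(1_000_000, cost_us):.1f} cores")
# leaner stack: 4.0 cores
# heavier stack: 10.0 cores
```

Each microsecond saved per I/O frees one core per million IOPS. In dense clusters, those cores go back to applications.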

The control plane still decides placement and access, but the transport and its implementation decide tail behavior. With NVMe/TCP, tuning typically centers on queue depth, CPU headroom, and predictable network paths. It does not require fabric-specific configuration such as the lossless Ethernet settings that RDMA transports like RoCE often depend on.
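Queue depth, latency, and IOPS are tied together by Little's law: average outstanding I/Os equals IOPS times latency. The sketch below sizes the queue depth a workload needs to reach a target IOPS at a given per-I/O latency. The targets are hypothetical, and the calculation assumes latency stays constant as queue depth grows.

```python
import math

def required_queue_depth(target_iops, latency_us):
    """Little's law: average outstanding I/Os = arrival rate x time in system."""
    return math.ceil(target_iops * latency_us / 1_000_000)

def max_iops(queue_depth, latency_us):
    """Upper bound on IOPS for a fixed queue depth if latency stays constant."""
    return queue_depth * 1_000_000 / latency_us

# Hypothetical: 200k IOPS at 150 us per I/O needs about 30 I/Os in flight.
print(required_queue_depth(200_000, 150))  # 30
print(max_iops(32, 200))                   # 160000.0
```

In practice, raising queue depth helps only until latency starts rising with it. That knee marks a device, CPU, or network limit, and it is the point worth finding during tuning.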

Measuring and Benchmarking CSI Control Plane vs Data Plane Performance

To measure the control plane, track time-based lifecycle metrics across real cluster events: PVC-to-Bound time, time-to-attach after scheduling, drain and reschedule duration for stateful pods, and snapshot create/restore time. These numbers directly affect release velocity and recovery time objectives.
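Lifecycle timestamps can be turned into these numbers with a short script. The sketch assumes your own tooling records, for each PVC, when it was created and when it reached `Bound`, for example from a watch on PVC status or from cluster events. The record format is hypothetical and is not a Kubernetes API object. The timestamps use the RFC 3339 UTC form Kubernetes uses for fields like `metadata.creationTimestamp`.

```python
import math
import statistics
from datetime import datetime

K8S_TIME = "%Y-%m-%dT%H:%M:%SZ"  # RFC 3339 UTC, second precision

# Hypothetical records collected by your own tooling: PVC name -> (created, bound).
records = {
    "pg-data-0": ("2024-05-01T10:00:00Z", "2024-05-01T10:00:03Z"),
    "pg-data-1": ("2024-05-01T10:00:00Z", "2024-05-01T10:00:04Z"),
    "kafka-0": ("2024-05-01T10:05:00Z", "2024-05-01T10:05:41Z"),
}

def seconds_between(start, end):
    return (datetime.strptime(end, K8S_TIME) - datetime.strptime(start, K8S_TIME)).total_seconds()

def summarize(durations):
    """Median, nearest-rank p95, and max of lifecycle durations in seconds."""
    ordered = sorted(durations)
    p95 = ordered[math.ceil(95 * len(ordered) / 100) - 1]
    return {"median": statistics.median(ordered), "p95": p95, "max": ordered[-1]}

durations = [seconds_between(created, bound) for created, bound in records.values()]
print(summarize(durations))  # {'median': 4.0, 'p95': 41.0, 'max': 41.0}
```

The same pattern works for attach time, drain duration, and snapshot restore time. Only the pair of timestamps changes. A single slow bind like `kafka-0` is exactly the outlier an average would hide.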

To measure the data plane, track sustained IOPS, throughput, p95/p99 latency, and CPU cost per I/O while the cluster performs normal operations. Use fio for block patterns, then validate with application benchmarks for your real workload shape. Most failures hide in the tails, so report p99 and jitter, not only averages.
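fio can report completion-latency percentiles on its own. If you collect raw per-I/O latencies instead, for example from fio latency logs or an application trace, a short script makes the tail and jitter explicit. This sketch uses nearest-rank percentiles and population standard deviation as the jitter measure. That is one common choice, not the only one, and the sample values are made up.

```python
import math
import statistics

def percentile(samples, pct):
    """Nearest-rank percentile over raw latency samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    return ordered[max(math.ceil(pct * len(ordered) / 100), 1) - 1]

def latency_report(samples_us):
    """Summarize a run: the mean alone hides the tail, so report p95/p99 and jitter too."""
    return {
        "mean": statistics.fmean(samples_us),
        "p95": percentile(samples_us, 95),
        "p99": percentile(samples_us, 99),
        "jitter_stdev": statistics.pstdev(samples_us),  # population std dev as a jitter proxy
    }

# Hypothetical run: 98 I/Os at 100 us and two slow outliers.
report = latency_report([100] * 98 + [900, 1500])
print(report["mean"], report["p95"], report["p99"])  # 122.0 100 900
```

Here the mean (122 µs) and even p95 (100 µs) look healthy. Only p99 (900 µs) shows the outliers a database would actually feel.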

Approaches for Improving CSI Control Plane vs Data Plane Performance

Use one principle: keep lifecycle work out of the hot path, and keep the hot path short. The following actions usually produce immediate wins when teams tune Kubernetes Storage with NVMe/TCP and Software-defined Block Storage:

- Reduce per-I/O overhead by favoring efficient I/O stacks, and avoid kernel-heavy work on the critical path when user-space acceleration fits your model.

- Isolate tenants with QoS so one namespace cannot flatten p99 latency for everyone else.

- Validate node drain, reschedule, and rolling updates under load so you can see whether lifecycle work triggers latency spikes.

- Align queue depth, CPU sizing, and network design with your application’s access pattern instead of using generic defaults.

- Keep topology and placement rules explicit, so attach and failover behavior stays predictable across zones.
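Per-tenant QoS is often built on a rate limiter such as a token bucket. The sketch below is a generic token bucket that caps IOPS per namespace. It is not simplyblock's QoS implementation, and the namespaces and limits are hypothetical. Time is passed in explicitly so the behavior is deterministic.

```python
from dataclasses import dataclass, field

@dataclass
class TokenBucket:
    """Admit at most `rate_iops` I/Os per second on average, with bursts up to `burst`."""
    rate_iops: float
    burst: float
    tokens: float = field(init=False)
    last: float = 0.0

    def __post_init__(self):
        self.tokens = self.burst  # start full so a tenant can burst immediately

    def try_admit(self, now, cost=1.0):
        # Refill in proportion to elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate_iops)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # caller queues or delays this I/O instead of starving other tenants

# One bucket per tenant namespace (hypothetical names and limits).
buckets = {"team-a": TokenBucket(rate_iops=1000, burst=100),
           "team-b": TokenBucket(rate_iops=1000, burst=100)}

# team-a tries to issue 500 I/Os at t=0; only its burst allowance gets through.
admitted = sum(buckets["team-a"].try_admit(now=0.0) for _ in range(500))
print(admitted)                              # 100
print(buckets["team-b"].try_admit(now=0.0))  # True: team-b is unaffected
```

The key design choice is that each tenant draws from its own bucket. When one namespace exhausts its budget, its own I/Os wait, and other tenants' p99 latency is left alone.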

For teams that operate at scale, “fast median latency” is not enough. Stable p99 latency and CPU efficiency usually decide whether storage becomes a cost center or a platform advantage.

Side-by-Side View of Control and I/O Paths

The split becomes clearer when you compare what teams optimize during cluster churn versus what they optimize during steady-state runtime I/O.

| Dimension | Control plane focus | Data plane focus |
|---|---|---|
| Primary goal | Correct, fast lifecycle actions | Stable, low-jitter I/O |
| Typical bottleneck | Provisioning delays, attach lag, snapshot workflow time | CPU overhead, transport overhead, queue contention |
| What breaks first | Slow deploys and reschedules | p99 latency spikes, jitter, noisy-neighbor impact |
| What to measure | PVC bind time, attach time, restore time | IOPS, throughput, p95/p99 latency, CPU per I/O |
| Common improvement | Better topology rules, fewer retries, faster controller operations | NVMe/TCP efficiency, lean I/O stack, QoS |

Higher CPU Efficiency for NVMe/TCP with Simplyblock™

Simplyblock™ targets Kubernetes Storage deployments that need predictable I/O under churn. It integrates through CSI for lifecycle operations while focusing engineering effort on the data plane, so runtime I/O stays consistent. Its architecture emphasizes NVMe-centric performance, NVMe/TCP support, and Software-defined Block Storage behavior that works in hyper-converged or disaggregated layouts.

Because simplyblock uses SPDK-oriented design patterns, it can reduce I/O path overhead and improve CPU efficiency, which matters when you run dense clusters or multi-tenant platforms. That lets platform teams scale stateful workloads without turning storage into the limiting factor for reliability targets and rollout timelines.

What’s Next for CSI Operational Patterns

Expect more automation and stricter SLO demands. Kubernetes will keep expanding lifecycle workflows (snapshots, clones, resize, topology controls), so the control plane will do more. At the same time, stateful platforms will measure storage by p99 latency and jitter because databases and streaming systems expose tail risk quickly.

You will also see more interest in offload paths (DPUs and IPUs) to protect CPU cycles for applications while storage handles I/O more efficiently. That trend makes the control/data split even more important because offload only helps if the runtime path stays clean.

Related Terms

Short references that help pinpoint and quantify the CSI Control Plane vs Data Plane impact in Kubernetes Storage and Software-defined Block Storage.

SPDK Architecture

IO Path Optimization

Storage Composability

CSI Topology Awareness

Questions and Answers

In CSI architecture, the control plane manages volume lifecycle operations like provisioning, attaching, and snapshotting via sidecar containers. The data plane handles direct I/O between workloads and the storage backend. Simplyblock ensures zero-copy, direct-path data flows to reduce latency on the data plane.

No. While the CSI control plane orchestrates setup tasks, actual I/O bypasses it entirely. Platforms like Simplyblock maintain a direct data path using NVMe over TCP, ensuring minimal latency and high throughput once volumes are mounted.

CSI control plane overhead can arise from Kubernetes API latency, sidecar container delays, or inefficient volume mount logic. These don’t affect I/O directly but can slow provisioning. Simplyblock optimizes this layer to ensure fast and reliable persistent volume operations.

The CSI data plane allows pods to access storage directly without routing I/O through the CSI plugin. Combined with NVMe over TCP, this architecture enables Simplyblock to deliver high IOPS and low latency equivalent to local NVMe storage.

Simplyblock decouples control operations from data access, using CSI only for lifecycle orchestration. Once provisioned, storage volumes operate on a direct NVMe/TCP path. This hybrid approach delivers full Kubernetes automation with bare-metal performance for block storage.