CSI Driver

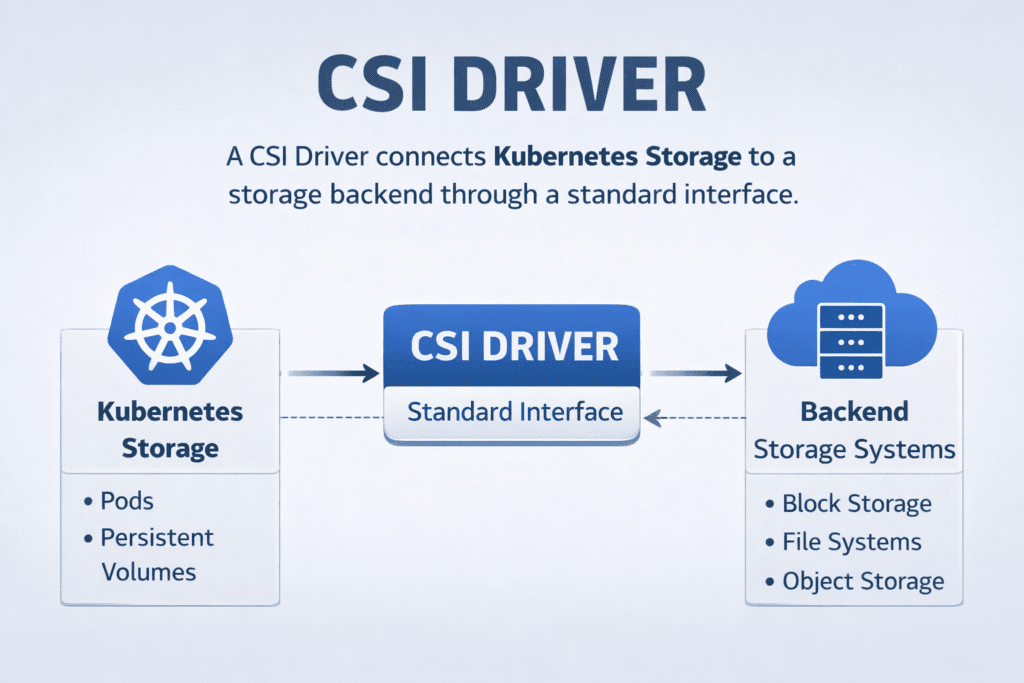

A CSI Driver connects Kubernetes Storage to a storage backend through a standard interface. It turns storage requests into actions such as create volume, attach, mount, expand, snapshot, and delete. That integration lets platform teams change storage systems without changing application code.

Leaders care because the driver shapes delivery speed, risk, and cost. A slow or fragile driver turns routine events into incidents. A solid driver keeps upgrades smooth and keeps stateful apps stable during node drains and reschedules.

DevOps teams feel this behavior every day. When a pod starts late, a mount step often sits on the critical path. When latency jumps, the I/O path or neighbor contention often explains the spike.

Building a Reliable Storage Stack for Kubernetes

A clean setup starts with clear intent and repeatable operations. Most clusters rely on three building blocks. The platform team defines performance and protection goals in a StorageClass. The app team requests capacity with a PersistentVolumeClaim. The system then binds the claim to a PersistentVolume and wires it to a pod.
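As a rough illustration of these three building blocks, the sketch below builds a StorageClass and a PersistentVolumeClaim as plain Python dictionaries and prints them as JSON, a format `kubectl apply -f` also accepts. The provisioner `csi.example.com`, the class name `fast-tier`, and the claim name `orders-db-data` are placeholders chosen for this example, not values from any real driver.

```python
import json

# Hypothetical provisioner name: use the one your CSI driver actually registers.
PROVISIONER = "csi.example.com"


def storage_class(name: str, provisioner: str) -> dict:
    """Platform team: describe a storage tier once."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "allowVolumeExpansion": True,
        # Delay binding until a pod is scheduled so placement can follow topology.
        "volumeBindingMode": "WaitForFirstConsumer",
        "reclaimPolicy": "Delete",
    }


def volume_claim(name: str, storage_class_name: str, size: str) -> dict:
    """App team: request capacity from a tier by name."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


sc = storage_class("fast-tier", PROVISIONER)
pvc = volume_claim("orders-db-data", sc["metadata"]["name"], "50Gi")

if __name__ == "__main__":
    manifest = {"apiVersion": "v1", "kind": "List", "items": [sc, pvc]}
    print(json.dumps(manifest, indent=2))
```

The claim refers to the tier only by name, so the platform team can change the backend behind `fast-tier` without touching the application manifest.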

At scale, the failure modes change. Provisioning may still work, but attach operations can queue up during bursts. Mount delays can stack up during rolling updates. Snapshot workflows can lag behind backup windows. These issues matter more than peak IOPS because they disrupt release plans.

A Software-defined Block Storage layer can reduce these risks when it offers consistent policy, strong isolation, and a low-overhead data path. That design also fits both hyper-converged and disaggregated clusters, so teams keep one operating model across environments.

CSI Driver Responsibilities Inside Kubernetes Storage

Kubernetes uses a CSI Driver for both control-plane and node-level steps. The controller side handles provisioning, attach and detach, snapshots, clones, and expansion. The node side handles staging and publishing, which is where the volume gets mounted on each worker. Kubernetes calls these steps during events like a new claim, a pod start, a reschedule, or a node drain.
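The split between controller and node steps can be sketched as a small lookup table. The RPC names below come from the CSI specification, but the event names and the event-to-RPC mapping are a simplification made for this example. Real drivers advertise capabilities, and some skip the controller publish or node stage steps entirely.

```python
# RPC names follow the CSI specification; the grouping by event is a simplified assumption.
CONTROLLER_RPCS = frozenset({
    "CreateVolume", "DeleteVolume",
    "ControllerPublishVolume", "ControllerUnpublishVolume",
    "CreateSnapshot", "ControllerExpandVolume",
})
NODE_RPCS = frozenset({
    "NodeStageVolume", "NodeUnstageVolume",
    "NodePublishVolume", "NodeUnpublishVolume",
})

# Hypothetical event names used only for this illustration.
EVENT_RPCS = {
    "new_claim": ["CreateVolume"],
    "pod_start": ["ControllerPublishVolume", "NodeStageVolume", "NodePublishVolume"],
    "node_drain": ["NodeUnpublishVolume", "NodeUnstageVolume", "ControllerUnpublishVolume"],
    "claim_deleted": ["DeleteVolume"],
}


def side_of(rpc: str) -> str:
    """Return which half of the driver serves the given RPC."""
    if rpc in CONTROLLER_RPCS:
        return "controller"
    if rpc in NODE_RPCS:
        return "node"
    raise ValueError(f"unknown CSI RPC: {rpc}")


def plan(event: str) -> list:
    """List (rpc, side) pairs in the order they would run for an event."""
    return [(rpc, side_of(rpc)) for rpc in EVENT_RPCS[event]]


# A reschedule is modeled as teardown on the old node followed by setup on the new one.
reschedule = plan("node_drain") + plan("pod_start")

if __name__ == "__main__":
    for rpc, side in reschedule:
        print(f"{side:10s} {rpc}")
```

Seen this way, a reschedule touches six calls across both halves of the driver, which is why churn stresses the lifecycle path more than steady-state I/O does.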

Most incidents trace back to a small set of lifecycle edges. Attach storms can hit when many pods restart at once. Orphaned mounts can block new pods on busy nodes. Stale device paths can break upgrades when teams change kernel versions or drivers. Good runbooks focus on these edges and keep them measurable.

Topology makes this harder. If the scheduler places compute in one zone and the volume lives in another, the driver may block the attach or force remote access. Either way, the workload pays with delay or jitter. Teams get better results when they align topology labels, placement rules, and storage constraints from day one.
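A minimal sketch of that alignment check follows. It assumes volumes are reachable only from a known set of zones and that nodes carry the well-known `topology.kubernetes.io/zone` label. The node names and zone values are made up for the example.

```python
ZONE_LABEL = "topology.kubernetes.io/zone"  # well-known Kubernetes node label


def volume_reachable(node_labels: dict, volume_zones: set) -> bool:
    """True when the node's zone is one of the zones the volume is accessible from."""
    zone = node_labels.get(ZONE_LABEL)
    return zone is not None and zone in volume_zones


def schedulable_nodes(nodes: dict, volume_zones: set) -> list:
    """Names of nodes that can use the volume without crossing zones."""
    return sorted(
        name for name, labels in nodes.items()
        if volume_reachable(labels, volume_zones)
    )


# Hypothetical cluster: one node is missing its zone label entirely.
nodes = {
    "worker-a": {ZONE_LABEL: "zone-1"},
    "worker-b": {ZONE_LABEL: "zone-2"},
    "worker-c": {},
}

if __name__ == "__main__":
    print(schedulable_nodes(nodes, {"zone-1"}))
```

The unlabeled node is rejected rather than treated as a wildcard, which mirrors the advice to validate labels early instead of discovering gaps during an attach.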

CSI Driver Data Paths on NVMe/TCP Networks

NVMe/TCP delivers NVMe-oF semantics over standard Ethernet. Many teams adopt it as a practical SAN alternative because it scales without RDMA-only hardware in every rack. It also supports disaggregated designs that keep compute and storage independent.

The driver still controls the lifecycle, but the backend and data path decide performance. Two factors matter most. First, the stack must protect tail latency during bursts, not only average latency. Second, the platform must preserve CPU headroom, so app cores do not burn cycles on storage overhead.

A user-space, zero-copy I/O path can help here. It reduces context switching and lowers per-I/O CPU cost. That efficiency matters in multi-tenant clusters where contention often shows up as jitter rather than outright failure.

Observability and Benchmarks for CSI Driver Behavior

Measure outcomes that map to platform reliability. Track the time from claim creation to a bound volume. Track the time from pod scheduling to a mounted volume. Track failure rates for attach and publish operations. Those metrics show whether your storage control plane keeps up with cluster churn.
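One way to compute two of these lifecycle metrics from collected events is sketched below. The record layout (`created`, `bound`, `op`, `ok`) is an assumption made for this example. In practice the timestamps would come from whatever event or metrics pipeline the cluster already uses.

```python
from datetime import datetime
from statistics import median


def seconds_between(start: str, end: str) -> float:
    """Elapsed seconds between two ISO 8601 timestamps."""
    return (datetime.fromisoformat(end) - datetime.fromisoformat(start)).total_seconds()


def bind_times(claims: list) -> list:
    """Claim-creation-to-bound durations, skipping claims that never bound."""
    return [seconds_between(c["created"], c["bound"]) for c in claims if c["bound"]]


def failure_rate(ops: list, kind: str) -> float:
    """Fraction of operations of the given kind that failed."""
    matching = [o for o in ops if o["op"] == kind]
    if not matching:
        return 0.0
    return sum(1 for o in matching if not o["ok"]) / len(matching)


# Hypothetical sample data.
claims = [
    {"created": "2024-05-01T10:00:00+00:00", "bound": "2024-05-01T10:00:04+00:00"},
    {"created": "2024-05-01T10:00:01+00:00", "bound": "2024-05-01T10:00:13+00:00"},
    {"created": "2024-05-01T10:00:02+00:00", "bound": None},  # still pending
]
ops = [
    {"op": "attach", "ok": True},
    {"op": "attach", "ok": False},
    {"op": "attach", "ok": True},
    {"op": "attach", "ok": True},
    {"op": "publish", "ok": True},
]

if __name__ == "__main__":
    print("median bind seconds:", median(bind_times(claims)))
    print("attach failure rate:", failure_rate(ops, "attach"))
```

Pending claims are excluded from the duration list here, so a separate count of unbound claims is worth tracking alongside it.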

Pair lifecycle metrics with workload metrics. Watch p95 and p99 latency, not only throughput. Compare CPU per I/O across nodes to spot inefficient paths. Add network counters, since retransmits and congestion often amplify remote I/O penalties.
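To show why tail percentiles tell a different story than the typical case, here is a small nearest-rank percentile sketch. The latency samples are synthetic and exist only to illustrate the calculation.

```python
import math


def percentile(samples: list, pct: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Synthetic data: 95 fast I/Os, 4 slower ones, and a single outlier (milliseconds).
latencies_ms = [0.4] * 95 + [2.0] * 4 + [25.0]

if __name__ == "__main__":
    for p in (50, 95, 99, 100):
        print(f"p{p}: {percentile(latencies_ms, p)} ms")
```

In this sample the median and p95 both sit at 0.4 ms while p99 is five times higher, which is the kind of gap a throughput-only dashboard would hide.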

Benchmarks should match real access patterns. Use small-block random tests for databases. Use mixed read and write tests for log-heavy services. Run tests during normal activity, such as rolling updates or reschedules, to expose lifecycle bottlenecks.

Field-Proven Ways to Improve Stability

The practices below target the lifecycle edges that cause most incidents.

- Define a small set of StorageClass tiers, and keep them consistent across clusters.

- Test attach and mount behavior during rolling updates, not only at steady state.

- Align topology labels with failure domains, and validate them during provisioning.

- Put limits on bursty volume operations, and stage large migrations in waves.

- Standardize filesystem and mount options to reduce drift and troubleshooting time.

- Enforce QoS and tenant isolation so one workload cannot disrupt the rest.
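The advice to stage large migrations in waves can be sketched as a simple batching helper. The `migrate` and `pause` callbacks are hypothetical hooks supplied by the caller. This sketch does not call any real storage or Kubernetes API.

```python
from typing import Callable, Iterator, List, Sequence


def waves(items: Sequence[str], wave_size: int) -> Iterator[List[str]]:
    """Split items into consecutive batches of at most wave_size."""
    if wave_size < 1:
        raise ValueError("wave_size must be at least 1")
    for start in range(0, len(items), wave_size):
        yield list(items[start:start + wave_size])


def migrate_in_waves(
    volumes: Sequence[str],
    wave_size: int,
    migrate: Callable[[str], None],
    pause: Callable[[], None],
) -> List[str]:
    """Run migrate() per volume, calling pause() after each wave; returns volumes in processed order."""
    done: List[str] = []
    for batch in waves(volumes, wave_size):
        for volume in batch:
            migrate(volume)
            done.append(volume)
        pause()  # e.g. wait for attach queues to settle before the next wave
    return done


if __name__ == "__main__":
    names = [f"vol-{i}" for i in range(1, 6)]
    migrate_in_waves(
        names,
        wave_size=2,
        migrate=lambda v: print("migrating", v),
        pause=lambda: print("-- wave complete --"),
    )
```

Keeping the wave size as an explicit parameter makes the burst limit something a team can tune and review, rather than a side effect of how fast a script happens to run.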

Integration Approaches Compared

The table below compares common integration patterns and the trade-offs that matter in production.

| Approach | What it does well | What to watch | Best fit |
|---|---|---|---|
| Basic CSI deployment with defaults | Quick rollout | Upgrade drift and noisy mounts | Small clusters and pilots |
| Operator-managed CSI deployment | Repeatable upgrades and config control | Operator lifecycle overhead | Regulated or large teams |
| Topology-first setup | Predictable placement and recovery | Label hygiene and policy testing | Multi-zone production |
| NVMe/TCP-backed block volumes | Strong performance on Ethernet | QoS and fabric discipline | Databases and analytics |

Consistent Volume Operations with simplyblock™

Simplyblock™ targets the parts of storage operations that impact business outcomes: fast provisioning, steady tail latency, and predictable behavior during churn. It supports Kubernetes Storage patterns across hyper-converged, disaggregated, and hybrid layouts, so teams keep one playbook as infrastructure evolves.

For performance-sensitive tiers, simplyblock pairs NVMe/TCP with an efficient I/O path built on SPDK principles. That design reduces CPU overhead and helps protect latency under load. Multi-tenancy and QoS controls help isolate workloads, which keeps performance more predictable when many teams share the same cluster.

For executives, this matters because it lowers operational drag. Teams spend less time chasing storage edge cases, and they run fewer disruptive migrations.

What’s Next for CSI Ecosystems

CSI Driver deployments will move toward safer upgrades, clearer health signals, and tighter links between scheduling and storage intent. Expect stronger defaults for topology awareness, better lifecycle timing metrics for attach and mount steps, and guardrails that prevent common misconfigurations.

DPUs and IPUs will also shape CSI outcomes. Offload can reduce CPU cost in the storage path and keep volume operations steady during churn. Faster Ethernet and better congestion control will lower the remote I/O penalty, but placement rules will still drive tail latency for stateful apps.

Related Terms

Teams often review these glossary pages alongside CSI Driver when they set standards for Kubernetes Storage and Software-defined Block Storage.

- Container Storage Interface (CSI)

- PersistentVolumeClaim (PVC)

- StorageClass

- Volume Snapshots

- Kubernetes Volume Plugin (in-tree vs CSI)

Questions and Answers

A CSI (Container Storage Interface) driver enables Kubernetes to provision and manage storage volumes dynamically. It abstracts the storage backend, allowing workloads to request volumes through PVCs. Simplyblock provides a robust CSI-compatible storage layer designed for performance and flexibility.

CSI drivers register with Kubernetes via sidecar containers and respond to volume operations like create, attach, and delete. This integration supports stateful workloads and enables dynamic storage handling across zones, regions, or nodes.

Compared with in-tree volume plugins, CSI drivers are decoupled from the Kubernetes core, making them more modular and easier to update. They also support advanced features like volume expansion, snapshots, and encryption. Simplyblock’s CSI driver is production-ready, supporting NVMe-over-TCP and multi-tenant isolation.

A CSI driver is required for automated or dynamic storage provisioning. Without one, volumes must be created and mounted manually. CSI drivers make it possible to provision storage on demand, which is essential for modern DevOps workflows.

When choosing a CSI driver, look for features like topology awareness, encryption, scalability, and performance tuning. Compatibility with your workload types and Kubernetes distribution is key. Explore Simplyblock’s supported CSI integrations to meet your workload and storage SLA requirements.