CSI Performance Overhead


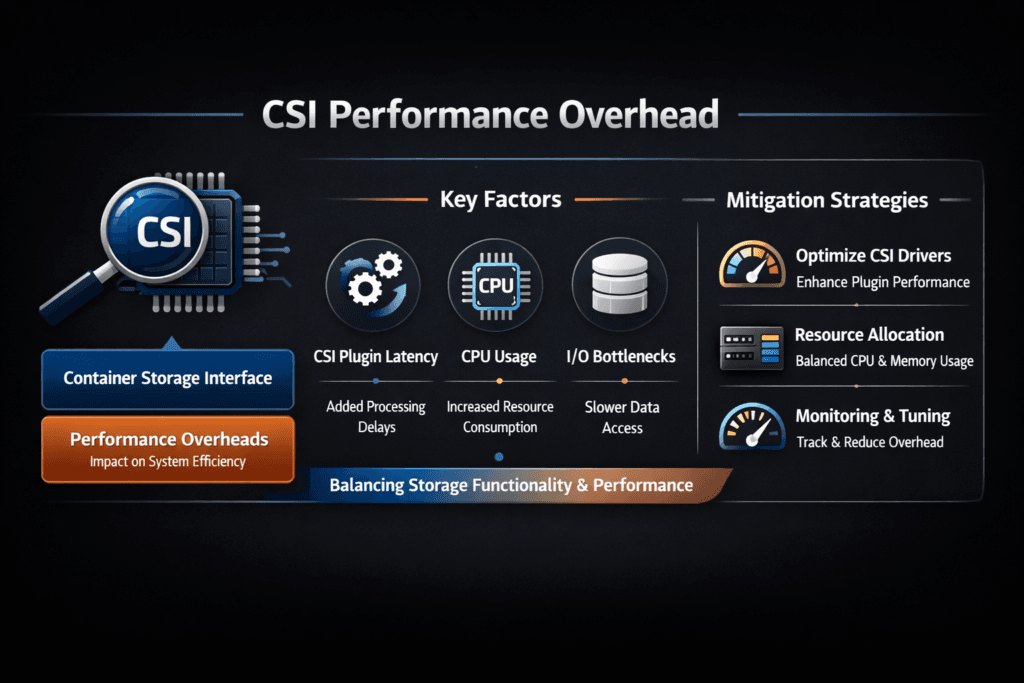

CSI Performance Overhead is the additional latency, CPU load, and scheduling overhead introduced when Kubernetes provisions, attaches, mounts, and manages persistent volumes via the Container Storage Interface (CSI). You notice it when p99 latency climbs during rollouts, node CPU headroom shrinks under bursty write traffic, or storage operations slow down during scale events.

Most clusters pay overhead in two places. The control plane pays it through CSI sidecars, API calls, retries, and reconciliation. The data plane pays it through protocol processing, network hops, and avoidable kernel transitions or copies. A fast storage backend can still feel slow if the control plane thrashes, or if the data path burns CPU per I/O.

Reducing Control-Plane Drag with Storage Architecture Choices

You can lower overhead by keeping the I/O path short and keeping CSI components predictable under load. Start with resource isolation for the CSI controller, node plugin, and sidecars, so they don’t compete with app workloads during bursts. Next, align storage topology with scheduling, so pods reach their volumes with fewer network hops.
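As a minimal sketch of the resource-isolation step, the Python below builds a strategic-merge patch body that sets CPU and memory requests on CSI containers so the scheduler reserves capacity for them. The container names (`csi-provisioner`, `csi-attacher`) and the sizes are assumptions for illustration only; real names and sensible values depend on the driver's own manifests and on measured usage.

```python
import json

# Hypothetical container names and sizes; check your CSI driver's manifests
# and observed usage before choosing real values.
CSI_CONTAINER_REQUESTS = {
    "csi-provisioner": {"cpu": "100m", "memory": "128Mi"},
    "csi-attacher": {"cpu": "100m", "memory": "128Mi"},
}


def build_resource_patch(container_requests):
    """Return a patch body that sets resource requests per container.

    Only `requests` are set here. Whether to add `limits` as well is a
    separate decision and is left out of this sketch.
    """
    containers = []
    for name in sorted(container_requests):
        containers.append({
            "name": name,
            "resources": {"requests": dict(container_requests[name])},
        })
    # Shape of a Deployment/DaemonSet pod-template patch.
    return {"spec": {"template": {"spec": {"containers": containers}}}}


if __name__ == "__main__":
    print(json.dumps(build_resource_patch(CSI_CONTAINER_REQUESTS), indent=2))
```

The printed JSON is a patch body for the workload that runs the CSI controller or node plugin. Strategic-merge patches match containers by `name`, so containers not listed stay untouched.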

On the data path, user-space designs often improve CPU efficiency because they reduce context switching and copy work. SPDK is a common foundation for this approach, especially for NVMe and NVMe-oF stacks.

🚀 Reduce CSI Performance Overhead for Stateful Workloads on NVMe/TCP in Kubernetes

Use Simplyblock to simplify persistent storage and keep latency steady during churn at scale.


CSI Performance Overhead in Kubernetes Storage

In Kubernetes Storage, overhead spikes during events, not just during steady I/O. A deployment rollout can trigger parallel volume stage and publish calls (NodeStageVolume and NodePublishVolume in CSI terms), mount and format work, plus a burst of activity in sidecars like the external-provisioner. If those components hit CPU throttling or API backpressure, you get slow pod readiness even when raw storage bandwidth looks healthy.

You get clearer visibility when you track both lifecycle timing and workload latency. Measure PVC-to-ready time, attach latency, and mount completion during reschedules. Then compare those timelines to the p95 and p99 app latency. This approach helps you separate “Kubernetes overhead” from “storage path overhead” in a way that executives can tie to uptime and SLO risk.
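One way to turn lifecycle timing into numbers is sketched below. It assumes you have already collected pairs of ISO 8601 timestamps (PVC creation time, pod Ready time) from your own tooling. The function names and the input shape are hypothetical, and the percentile uses the simple nearest-rank method.

```python
import math
from datetime import datetime


def percentile(values, pct):
    """Nearest-rank percentile; pct is in the range (0, 100]."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    # Integer-friendly form avoids float surprises such as 0.99 * 100.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def pvc_to_ready_seconds(events):
    """Convert (pvc_created_iso, pod_ready_iso) pairs to durations in seconds."""
    durations = []
    for created, ready in events:
        delta = datetime.fromisoformat(ready) - datetime.fromisoformat(created)
        durations.append(delta.total_seconds())
    return durations


if __name__ == "__main__":
    # Illustrative timestamps, not measurements.
    samples = [
        ("2024-01-01T10:00:00+00:00", "2024-01-01T10:00:12+00:00"),
        ("2024-01-01T10:00:00+00:00", "2024-01-01T10:00:45+00:00"),
        ("2024-01-01T10:00:05+00:00", "2024-01-01T10:00:20+00:00"),
    ]
    durations = pvc_to_ready_seconds(samples)
    print("p50:", percentile(durations, 50), "p99:", percentile(durations, 99))
```

Applying the same `percentile` helper to application latency samples from the same time window puts both timelines on one scale.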

CSI Performance Overhead and NVMe/TCP

NVMe/TCP reduces protocol drag compared to older network block approaches by running NVMe semantics over standard Ethernet and TCP/IP. It fits disaggregated Kubernetes Storage designs because you can scale storage pools independently from compute, and you can deploy across common network gear.

TCP still consumes CPU, so you get the best results when the storage target processes I/O efficiently. A user-space, SPDK-based NVMe/TCP target often improves IOPS-per-core and steadies tail latency by avoiding extra kernel transitions and copy work.

Measuring and Benchmarking CSI Performance Overhead

Benchmark CSI Performance Overhead by splitting control-plane timing from data-plane timing.

Control-plane signals include CreateVolume time, attach time, stage and publish latency, and PVC-to-ready time during rollouts. Data-plane signals include p50, p95, and p99 latency, plus throughput and IOPS under realistic queue depth. Use fio to generate repeatable workloads, and run tests that match your real block sizes and read/write mix.
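The sketch below shows one way to script the data-plane side with fio. It builds a command line for a mixed random workload and pulls IOPS and completion-latency percentiles out of fio's JSON output. The job name, defaults, and helper names are assumptions, and the parser assumes the fio 3.x JSON layout (`jobs[].read.clat_ns.percentile`), so adjust it if your fio version reports differently.

```python
import json


def fio_command(target, block_size="4k", read_pct=70, iodepth=32, runtime_s=60):
    """Build an fio argv list for a mixed random read/write workload.

    WARNING: pointing `target` at a raw device overwrites its contents.
    """
    return [
        "fio",
        "--name=csi-overhead",          # hypothetical job name
        f"--filename={target}",
        "--ioengine=libaio",
        "--direct=1",                   # bypass the page cache
        "--rw=randrw",
        f"--rwmixread={read_pct}",
        f"--bs={block_size}",
        f"--iodepth={iodepth}",
        "--time_based",
        f"--runtime={runtime_s}",
        "--output-format=json",
    ]


def summarize(fio_json_text):
    """Extract IOPS and latency percentiles (microseconds) per direction."""
    job = json.loads(fio_json_text)["jobs"][0]
    summary = {}
    for direction in ("read", "write"):
        stats = job[direction]
        pct = stats["clat_ns"]["percentile"]
        summary[direction] = {
            "iops": stats["iops"],
            "p50_us": pct["50.000000"] / 1000,
            "p95_us": pct["95.000000"] / 1000,
            "p99_us": pct["99.000000"] / 1000,
        }
    return summary
```

Set `block_size`, `read_pct`, and `iodepth` to match the real workload. The defaults here are placeholders, not recommendations.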

Also test “cluster reality,” not lab purity. Run with cgroup limits, real pod density, and normal background jobs. Then repeat the same test while draining nodes or scaling deployments. If tail latency jumps only during churn, you’ve found a lifecycle bottleneck rather than a media bottleneck.
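The steady-versus-churn comparison can be written down as a small decision rule. The sketch below is only an illustration: the 1.5x jump threshold, the labels, and the function name are assumptions, and a real analysis would look at full latency distributions rather than two numbers.

```python
def classify_bottleneck(steady_p99_ms, churn_p99_ms, slo_ms, jump_threshold=1.5):
    """Label where tail latency most likely comes from.

    - "data-path": p99 already misses the SLO without any churn.
    - "lifecycle": p99 is fine when steady but jumps during churn.
    - "none": no meaningful jump and the SLO is met.
    The threshold is a hypothetical default, not a recommended value.
    """
    if steady_p99_ms <= 0 or churn_p99_ms <= 0:
        raise ValueError("latencies must be positive")
    if steady_p99_ms > slo_ms:
        return "data-path"
    if churn_p99_ms >= steady_p99_ms * jump_threshold:
        return "lifecycle"
    return "none"


if __name__ == "__main__":
    # Illustrative numbers only.
    print(classify_bottleneck(steady_p99_ms=2.0, churn_p99_ms=9.0, slo_ms=5.0))
```

Run the same fio profile once on a quiet cluster and once during a node drain or scale-out, then feed both p99 values to the function.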

Approaches for Reducing CSI Performance Overhead

Most teams get fast wins by reducing contention, limiting mount churn, and enforcing fairness in the storage layer.

- Right-size CSI pods and sidecars, and reserve CPU for them on nodes that host many stateful workloads.

- Reduce rescheduling for StatefulSets during peak traffic, and avoid mount storms from aggressive rollouts.

- Use topology-aware placement so pods and storage targets share the shortest network path, especially across zones.

- Prefer raw block volumes for latency-sensitive engines when the workload supports it, and keep filesystem choices consistent.

- Enforce tenant-aware QoS in your Software-defined Block Storage layer to prevent noisy neighbors from inflating p99 latency.
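To make the tenant-aware QoS item concrete, here is a minimal token-bucket sketch that caps IOPS per tenant. It illustrates the general idea only and is not how any particular storage product implements QoS. The class name, parameters, and the choice to pass time in explicitly are assumptions made to keep the example deterministic.

```python
class TenantIopsLimiter:
    """Per-tenant token bucket: each I/O costs one token."""

    def __init__(self, iops_limit, burst):
        self.rate = float(iops_limit)    # tokens refilled per second
        self.capacity = float(burst)     # maximum tokens a tenant can hold
        self._state = {}                 # tenant -> (tokens, last_timestamp)

    def allow(self, tenant, now):
        """Return True if the tenant may issue one I/O at time `now` (seconds)."""
        tokens, last = self._state.get(tenant, (self.capacity, now))
        # Refill based on elapsed time, never above the burst capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._state[tenant] = (tokens - 1.0, now)
            return True
        self._state[tenant] = (tokens, now)
        return False


if __name__ == "__main__":
    limiter = TenantIopsLimiter(iops_limit=2, burst=2)
    # Tenant "a" exhausts its burst; tenant "b" is unaffected.
    print([limiter.allow("a", 0.0) for _ in range(3)], limiter.allow("b", 0.0))
```

Because each tenant has its own bucket, a burst from one tenant drains only that tenant's tokens, which is the isolation property the noisy-neighbor item describes.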

Storage Stack Trade-offs That Influence Latency and CPU Cost

The table below summarizes how common approaches change overhead, tail latency behavior, and operational burden in Kubernetes.

| Approach | Where overhead accumulates | Tail latency behavior | Ops impact |
|---|---|---|---|
| Legacy SAN-style integration | Complex integration, slower semantics | Jitter during churn | Higher change friction |
| Generic CSI block storage | Sidecars, mounts, driver CPU spikes | Moderate jitter | Medium |
| CSI + NVMe/TCP + SPDK user space | Fewer copies, better CPU efficiency | Lower, steadier p99 | Medium, scalable |
| CSI + RDMA lane for select pools | Fabric tuning, specialized NICs | Lowest p99 for targeted apps | Higher complexity |

Latency Consistency with Simplyblock™ in Production Clusters

Simplyblock™ reduces performance volatility by focusing on the data path and operational fit for Kubernetes Storage. Simplyblock delivers Software-defined Block Storage with NVMe/TCP as a first-class transport, and it uses an SPDK-based user-space design to keep I/O efficient and CPU use predictable under load.

This model supports hyper-converged, disaggregated, and mixed deployments, so teams can place storage where it best matches workload needs. That flexibility helps platform teams control the blast radius of churn while keeping high-IO workloads on a stable NVMe/TCP path.

What’s Next for CSI Data Paths, Sidecars, and Offload Acceleration

CSI ecosystems continue to improve around concurrency, backpressure handling, and lifecycle efficiency, especially during large-scale provisioning and rescheduling. On the transport side, NVMe/TCP remains a strong default because it scales over standard Ethernet while keeping operations familiar.

Offload acceleration also matters more each year. DPUs and IPUs move parts of networking and storage processing away from host CPUs, which pairs well with user-space NVMe stacks. That trend can reduce the CPU penalty of high-throughput storage while keeping latency steadier across busy nodes.

Related Terms

Short references that help pinpoint and quantify CSI Performance Overhead in Kubernetes Storage.

- Block Storage CSI

- Storage Metrics in Kubernetes

- Storage Performance Benchmarking

- Five Nines Availability

Questions and Answers

Yes, CSI introduces a small performance overhead due to its gRPC-based control plane and sidecar containers. However, platforms like Simplyblock minimize this impact by optimizing the data path with NVMe over TCP, ensuring near-native storage performance.

CSI drivers may introduce slightly more latency than legacy in-tree plugins due to external sidecars and abstraction layers. That said, CSI is the standard going forward and can be tuned for high-performance workloads using efficient storage backends like Simplyblock’s NVMe-based volumes.

Performance overhead in CSI drivers can come from containerized sidecars, Kubernetes API calls, and volume mount operations. However, the data plane remains direct, especially when paired with NVMe/TCP, which ensures fast I/O despite the control plane complexity.

Yes. Tuning CSI drivers involves optimizing StorageClass parameters, volume mount options, and underlying network/storage layers. Simplyblock’s architecture supports fine-tuning of block size, I/O queues, and performance isolation for latency-sensitive workloads.

Simplyblock mitigates CSI overhead by maintaining a direct data path with no proxying through the CSI control layer. It integrates NVMe over TCP directly into Kubernetes via its CSI driver, ensuring maximum throughput and minimal latency across persistent volumes.