Data center bridging (DCB)

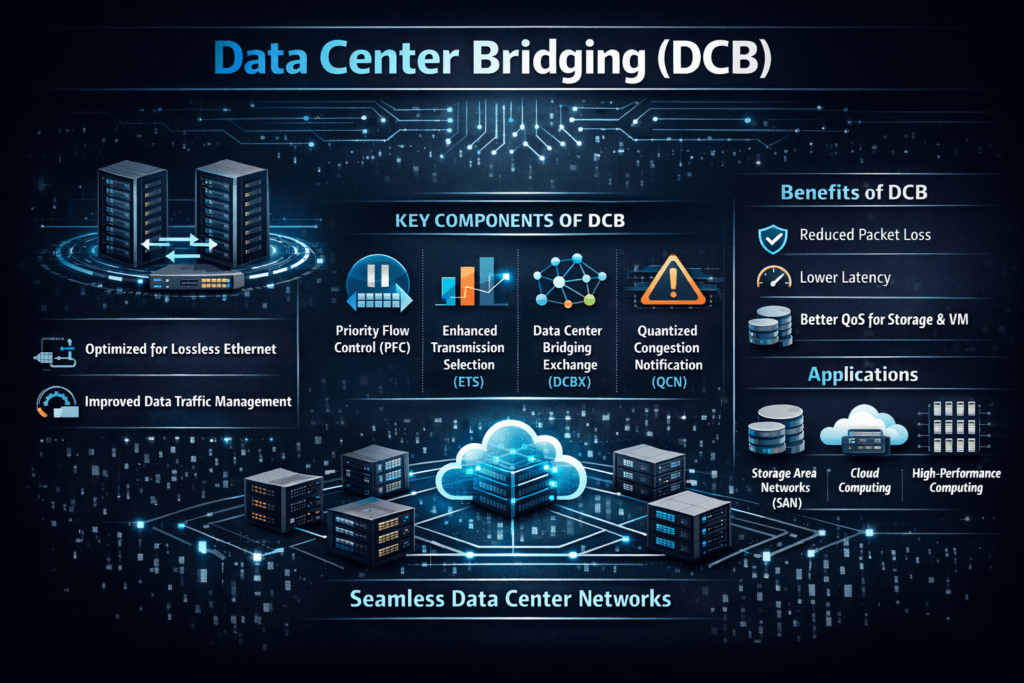

Data center bridging (DCB) adds Ethernet features that make selected traffic more reliable and more predictable inside data centers. Teams use DCB when they want “near-lossless” behavior for traffic that reacts badly to drops, such as storage and cluster fabrics.

DCB does not replace IP, TCP, or routing. Instead, it changes how Ethernet handles congestion and how switches share bandwidth across traffic classes. Its main building blocks are Priority Flow Control (PFC, IEEE 802.1Qbb), Enhanced Transmission Selection (ETS, IEEE 802.1Qaz), and the DCBX capability exchange protocol. Together they let the network protect specific queues instead of dropping frames under pressure.

Why Data center bridging (DCB) matters in converged Ethernet

Converged data centers carry storage, east-west app traffic, and management flows on the same links. In that mix, bursty workloads can fill buffers fast. Standard Ethernet drops frames when queues overflow, and some protocols take a heavy performance hit when loss shows up.

DCB helps by separating traffic into priorities and applying control per priority. That approach supports converged storage designs, including Fibre Channel over Ethernet (FCoE) and RDMA-based fabrics, where teams aim to reduce loss and keep latency spikes under control.
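As a rough illustration of per-priority separation, the sketch below models a traffic plan for one converged link and computes the bandwidth each class is guaranteed under contention. The class names, priority numbers, and percentages are hypothetical values chosen for the example, not recommendations, and the code only models the arithmetic; it does not configure any switch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrafficClass:
    name: str
    priority: int      # 802.1p priority value, 0-7
    ets_percent: int   # share of link bandwidth guaranteed under contention
    no_drop: bool      # True if PFC protects this priority


def guaranteed_gbps(classes, link_gbps):
    """Return the minimum bandwidth each class receives when the link is congested."""
    total = sum(c.ets_percent for c in classes)
    if total != 100:
        raise ValueError(f"bandwidth shares must sum to 100, got {total}")
    return {c.name: link_gbps * c.ets_percent / 100 for c in classes}


# Hypothetical plan for a 100 Gb/s converged link (assumed values).
PLAN = [
    TrafficClass("storage-rdma", priority=3, ets_percent=50, no_drop=True),
    TrafficClass("app-east-west", priority=0, ets_percent=40, no_drop=False),
    TrafficClass("management", priority=1, ets_percent=10, no_drop=False),
]

if __name__ == "__main__":
    for name, gbps in guaranteed_gbps(PLAN, link_gbps=100).items():
        print(f"{name}: at least {gbps} Gb/s under contention")
```

Only the storage class is marked no-drop here, which reflects the idea of protecting selected queues while the remaining traffic keeps normal drop behavior.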

Where DCB fits in Kubernetes Storage networks

Kubernetes Storage adds a scheduling layer on top of the network. Pods move, nodes drain, and StatefulSets scale. Each event can shift I/O paths and raise bursts on the fabric. A stable storage network reduces risk during these shifts, especially when multiple tenants share the same cluster.

That said, many Kubernetes environments run well on standard Ethernet when they use NVMe/TCP and strong QoS at the storage layer. DCB becomes more relevant when teams adopt RDMA over Ethernet at scale, or when they chase strict tail-latency targets for latency-sensitive stateful services.

Data center bridging (DCB) and NVMe/TCP tradeoffs

NVMe/TCP typically works on standard routed Ethernet without requiring a lossless fabric. It can simplify rollout because teams avoid the tight coupling that PFC-based designs can create across a large Layer 2 domain.

RoCEv2 often pushes teams toward DCB because RDMA traffic reacts poorly to drops. Simplyblock’s NVMe over RoCE guidance notes that deployments often use DCB and PFC to build a lossless fabric for that path.

A practical enterprise pattern mixes both approaches. Teams use NVMe/TCP for broad coverage, then add RoCEv2 where the business justifies more network work. That blend supports Software-defined Block Storage goals while keeping the operations burden in check.

How to measure DCB impact on storage latency

Measure what users feel, not only what the link can push. Track average latency, plus p95 and p99 latency under steady load and during burst events. Add packet drops, ECN marks, and pause frame counters so you can tell whether the fabric controls congestion or hides it.
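A minimal sketch of that comparison, assuming you already collect per-I/O latency samples in microseconds from your benchmark tool. The sample lists are made-up values, and the nearest-rank percentile method is one common choice among several.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_us):
    """Average, p95, and p99 for one test run."""
    return {
        "avg": sum(latencies_us) / len(latencies_us),
        "p95": percentile(latencies_us, 95),
        "p99": percentile(latencies_us, 99),
    }


# Hypothetical latency samples in microseconds (assumed, not measured).
steady = [100] * 98 + [120, 150]
burst = [100] * 90 + [400] * 8 + [2000, 3000]

if __name__ == "__main__":
    print("steady:", summarize(steady))
    print("burst: ", summarize(burst))
```

In this made-up data the average moves far less than the p99 between the two runs, which is why tail percentiles belong next to the average in any report.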

PFC can stop loss for a priority, but it can also spread congestion when priorities or thresholds are misconfigured: a paused port backs traffic up into its upstream neighbor, which may then pause its own senders. Simplyblock’s RoCEv2 glossary warns that PFC can protect RDMA traffic while also amplifying outages when teams deploy it poorly.
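One way to watch for spreading congestion is to compare pause-frame counters between two snapshots and flag ports whose pause rate exceeds a limit. The sketch below assumes you have already exported counters per port into dictionaries. The port names, counter values, and threshold are hypothetical, and how you collect the counters depends on your switch or NIC tooling.

```python
def pause_rates(before, after, interval_s):
    """Pause frames per second for each port between two counter snapshots."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    rates = {}
    for port, count in after.items():
        delta = count - before.get(port, 0)
        # A negative delta usually means the counter was reset; treat it as zero.
        rates[port] = max(delta, 0) / interval_s
    return rates


def ports_over_limit(rates, limit_per_s):
    """Sorted list of ports whose pause rate exceeds the chosen limit."""
    return sorted(port for port, rate in rates.items() if rate > limit_per_s)


# Hypothetical snapshots taken 10 seconds apart (assumed values).
before = {"leaf1/eth1": 1000, "leaf1/eth2": 50, "spine1/eth7": 0}
after = {"leaf1/eth1": 6000, "leaf1/eth2": 60, "spine1/eth7": 2500}

if __name__ == "__main__":
    rates = pause_rates(before, after, interval_s=10)
    print(ports_over_limit(rates, limit_per_s=100))
```

If flagged ports show up on several tiers at once, as in this invented data, that pattern is worth investigating as possible pause propagation rather than a single hot port.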

Practical steps to improve DCB results

Use one consistent playbook, and keep changes small so you can see cause and effect.

- Separate storage classes by priority, and map only the traffic that needs protection to the “no-drop” queue.

- Set clear buffer and pause thresholds, and validate them under burst tests, not only during calm runs.

- Pair PFC with congestion signaling like ECN where your design supports it, so senders slow down before queues explode.

- Limit the blast radius by avoiding oversized Layer 2 domains for lossless queues, and keep routing boundaries clear where possible.

- Re-test during Kubernetes events such as rolling node drains, because reschedules change traffic patterns fast.
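Several of the steps above can be expressed as a pre-change check. The sketch below validates a hypothetical queue policy before anyone pushes it to a switch: it limits how many priorities are no-drop, checks that the pause thresholds are ordered sensibly, and checks that ECN marking starts before PFC pauses. The field names, the default limit of one no-drop priority, and the threshold values are assumptions for illustration; real thresholds depend on your hardware and vendor guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueuePolicy:
    name: str
    priority: int        # 802.1p priority value, 0-7
    no_drop: bool        # True if PFC is enabled for this priority
    xoff_kb: int = 0     # buffer level that triggers a pause
    xon_kb: int = 0      # buffer level that resumes traffic
    ecn_min_kb: int = 0  # buffer level where ECN marking starts (0 = ECN off)


def check_policies(policies, max_no_drop=1):
    """Return a list of human-readable problems; an empty list means no issue found."""
    problems = []
    seen = set()
    for p in policies:
        if not 0 <= p.priority <= 7:
            problems.append(f"{p.name}: priority {p.priority} outside 0-7")
        if p.priority in seen:
            problems.append(f"{p.name}: priority {p.priority} already in use")
        seen.add(p.priority)
        if p.no_drop:
            if p.xon_kb >= p.xoff_kb:
                problems.append(f"{p.name}: resume threshold must be below pause threshold")
            if p.ecn_min_kb and p.ecn_min_kb >= p.xoff_kb:
                problems.append(f"{p.name}: ECN should start marking before PFC pauses")
    no_drop = [p.name for p in policies if p.no_drop]
    if len(no_drop) > max_no_drop:
        problems.append(f"too many no-drop queues: {', '.join(no_drop)}")
    return problems


# Hypothetical policies (assumed values).
GOOD = [
    QueuePolicy("storage-rdma", 3, True, xoff_kb=400, xon_kb=200, ecn_min_kb=150),
    QueuePolicy("default", 0, False),
]
BAD = [
    QueuePolicy("storage-rdma", 3, True, xoff_kb=200, xon_kb=300, ecn_min_kb=250),
    QueuePolicy("backup", 4, True, xoff_kb=400, xon_kb=200),
]

if __name__ == "__main__":
    print(check_policies(GOOD))
    for problem in check_policies(BAD):
        print(problem)
```

A check like this does not replace burst testing. It only catches obvious mistakes early, which keeps each change small enough to reason about.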

Lossless Ethernet choices for storage fabrics

This comparison helps teams decide when DCB fits, and when a simpler Ethernet approach works better.

| Topic | DCB-style lossless Ethernet (often with RoCEv2) | Standard Ethernet with NVMe/TCP |
|---|---|---|
| Primary goal | Prevent drops for selected traffic classes | Keep deployment simple on routed networks |
| Congestion behavior | Uses PFC and related controls per priority | Relies on TCP congestion control, plus good network hygiene |
| Operational risk | Misconfiguration can spread congestion or create wide impact | Easier change control, fewer fabric-wide dependencies |
| Typical fit | Strict latency targets, RDMA-heavy clusters | Broad Kubernetes Storage adoption, general stateful fleets |

Simplify DCB-heavy fabrics with Simplyblock™ Software-defined Block Storage

Simplyblock™ supports NVMe/TCP for Kubernetes Storage so teams can run high-performance block volumes on standard Ethernet while keeping operations straightforward. That approach helps when you want predictable storage without turning every switch change into a storage incident.

When workloads justify RDMA, simplyblock also supports NVMe over RoCE, so you can adopt a DCB-backed fabric where it adds real value. This flexibility lets platform leaders standardize on one Software-defined Block Storage layer, then choose the transport per cluster tier instead of per application.

Data center bridging (DCB) trends for AI, storage, and DPUs

DCB remains important in environments that push RDMA for tight latency, especially in AI and high-throughput storage clusters. At the same time, more teams combine PFC with ECN-style congestion signals, because ECN can reduce the need for aggressive pausing when queues build.

Hardware offload also shapes the roadmap. DPUs and IPUs can enforce policy closer to the wire, and they can help keep host CPU cycles focused on applications. That direction aligns with high-density Kubernetes fleets where network behavior and storage behavior must stay consistent.

Questions and Answers

What does Data Center Bridging (DCB) do for storage networks?

Data Center Bridging (DCB) enhances Ethernet by reducing packet loss and jitter for selected traffic classes, which matters for storage protocols like iSCSI and NVMe over Fabrics. It helps keep latency consistent and is especially useful in converged infrastructure setups where storage and network traffic share the same fabric.

Does NVMe over TCP require DCB?

No, NVMe over TCP is designed to run over standard Ethernet without requiring DCB or RDMA. This reduces complexity and cost, unlike NVMe over RoCE, which typically depends on DCB-enabled lossless networks for stable performance.

How does DCB improve storage performance?

By enabling lossless Ethernet and traffic prioritization, DCB reduces retransmissions and buffer overruns. This lowers storage latency and improves throughput consistency, especially in systems where p99 latency matters for application performance.

Which protocols benefit most from DCB?

DCB is most critical for loss-sensitive protocols like Fibre Channel over Ethernet (FCoE) and RoCE. While less important for TCP-based storage like NVMe/TCP, it is essential for setups that rely on deterministic, low-latency transport.

Do software-defined storage environments need DCB?

In most modern software-defined storage environments using NVMe/TCP, DCB is not required. However, if you run mixed workloads with RDMA or legacy FCoE components, DCB can help ensure fairness and reliability across shared Ethernet infrastructure.