DPU vs GPU

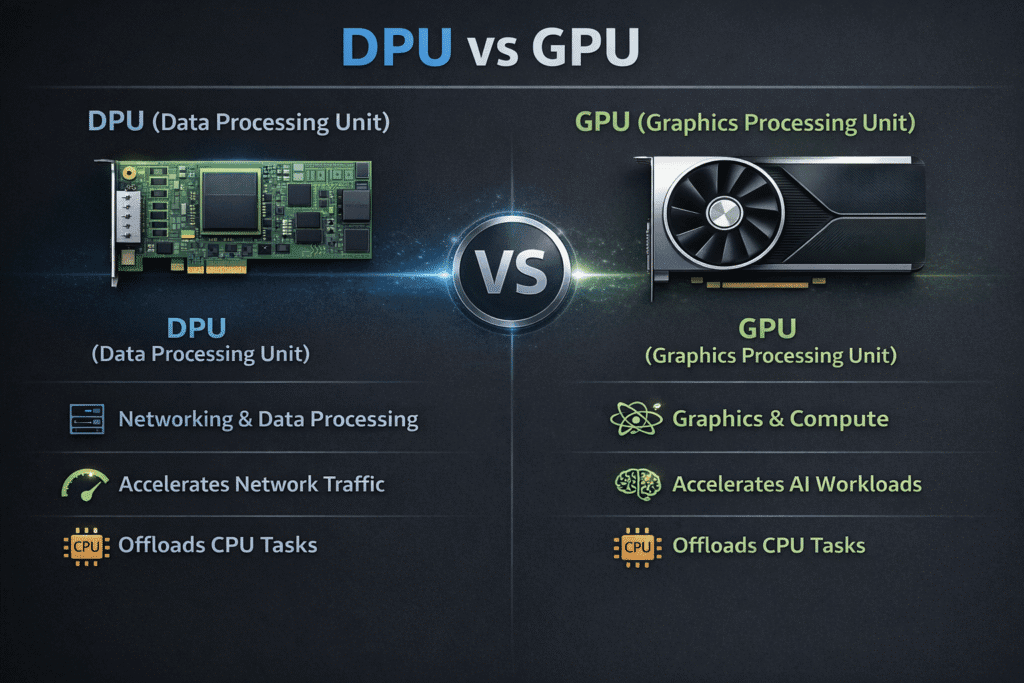

A DPU (Data Processing Unit) and a GPU (Graphics Processing Unit) solve different problems in the stack. A DPU offloads infrastructure data-plane work such as networking, storage protocol handling, encryption, and virtualization so host CPUs spend fewer cycles on platform overhead.

A GPU accelerates massively parallel compute for graphics, AI training, inference, and HPC kernels. In data centers running NVMe/TCP, Kubernetes Storage, and Software-defined Block Storage, DPUs and GPUs are often deployed together because each removes a different bottleneck.

What Is a DPU and How It Works

A DPU is optimized for moving, transforming, and securing data as it traverses the system. Typical DPU workloads include virtual switching, firewalling, telemetry, crypto, and storage networking acceleration.

In practice, the DPU sits close to the NIC and can run data-plane services in isolation from application processes, which helps multi-tenant environments enforce policy and QoS without consuming as many host CPU cycles.

What Is a GPU and How It Works

A GPU is a parallel processor built to run thousands of lightweight threads at once, which is why it dominates AI and HPC workloads. GPU performance is measured less by single-thread latency and more by throughput across matrix math, tensor operations, and vectorized workloads.

In storage terms, GPUs are often “fed” by high-throughput data pipelines, so storage and networking stalls translate directly into wasted accelerator time.
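The cost of an I/O stall can be made concrete with a back-of-envelope model. The sketch below is illustrative only: the function name `gpu_utilization` and the per-step timings are hypothetical, and it assumes each training step has one data-load phase and one compute phase that either run back to back or overlap perfectly through prefetching.

```python
def gpu_utilization(compute_ms: float, load_ms: float, overlapped: bool) -> float:
    """Return the fraction of each step's wall time the GPU spends computing.

    compute_ms: time the GPU needs to process one batch (assumed constant).
    load_ms:    time storage and network need to deliver one batch.
    overlapped: True if the next batch is prefetched while the GPU computes.
    """
    if compute_ms <= 0 or load_ms < 0:
        raise ValueError("compute_ms must be > 0 and load_ms must be >= 0")
    if overlapped:
        # With prefetching, the slower of the two phases sets the step time.
        step_ms = max(compute_ms, load_ms)
    else:
        # Without prefetching, the GPU idles for the whole load phase.
        step_ms = compute_ms + load_ms
    return compute_ms / step_ms


if __name__ == "__main__":
    for load in (50, 100, 200):
        serial = gpu_utilization(100, load, overlapped=False)
        prefetch = gpu_utilization(100, load, overlapped=True)
        print(f"load={load} ms  serial={serial:.2f}  prefetch={prefetch:.2f}")
```

In this simplified model, prefetching hides the load time only while it stays below the compute time. Once storage takes longer than the GPU does per batch, utilization falls in proportion, no matter how the pipeline is arranged.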

DPU vs GPU impact on Kubernetes Storage performance

In Kubernetes clusters, the CPU typically pays the platform tax for networking, overlay encapsulation, service routing, policy enforcement, and storage I/O. When nodes also run GPU workloads, CPU contention becomes visible fast: the same cores orchestrating pods and networking are also responsible for delivering training batches and writing checkpoints.

DPUs reduce that contention by moving parts of the network and storage data path off the host. GPUs, by contrast, increase the need for a stable data path because they amplify the cost of I/O stalls. This is why many teams evaluate DPUs as a complement to GPU nodes, especially when storage is provided over NVMe/TCP and consumed as Kubernetes Storage.
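A rough way to reason about this contention is to estimate how many host cores the data plane consumes at a given throughput. The sketch below is an assumption-laden estimate, not a measurement: `host_cores_for_data_plane` is a hypothetical helper, and the cycles-per-byte figure is a placeholder that must be profiled on real hardware because it varies widely with NIC offloads, encryption, and overlay networking.

```python
def host_cores_for_data_plane(
    throughput_gbps: float,
    cycles_per_byte: float,
    core_ghz: float,
    offload_fraction: float = 0.0,
) -> float:
    """Estimate the host cores spent on network and storage data-plane work.

    throughput_gbps:  sustained traffic in gigabits per second.
    cycles_per_byte:  host CPU cycles spent per byte moved (placeholder value,
                      to be measured on the target system).
    core_ghz:         clock speed of one host core in GHz.
    offload_fraction: share of that work moved to a DPU, between 0.0 and 1.0.
    """
    if not 0.0 <= offload_fraction <= 1.0:
        raise ValueError("offload_fraction must be between 0.0 and 1.0")
    if core_ghz <= 0:
        raise ValueError("core_ghz must be > 0")
    bytes_per_s = throughput_gbps * 1e9 / 8
    host_cycles_per_s = bytes_per_s * cycles_per_byte * (1.0 - offload_fraction)
    return host_cycles_per_s / (core_ghz * 1e9)


if __name__ == "__main__":
    # Hypothetical inputs: 100 Gb/s, 2 cycles per byte, 3 GHz cores.
    print(host_cores_for_data_plane(100, 2.0, 3.0))
    print(host_cores_for_data_plane(100, 2.0, 3.0, offload_fraction=0.5))
```

With these made-up inputs the host spends roughly 8.3 cores on the data plane, and about 4.2 if half of that work moves to a DPU. The absolute numbers matter less than the shape: every core reclaimed is one that can deliver training batches instead.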

DPU vs GPU – Capabilities Compared

The key question is not “which is better” but “which bottleneck are you removing.” This table summarizes how DPUs and GPUs differ in infrastructure design decisions.

| Component | Primary role | What it accelerates | Typical bottleneck it fixes | Common tie-in to storage |
|---|---|---|---|---|
| DPU | Infrastructure data plane | Networking, vSwitch offload, security, storage protocol processing | Host CPU overhead, noisy-neighbor contention, data-path jitter | Improves predictability for NVMe/TCP fabrics and shared block storage |
| GPU | Parallel compute engine | AI/HPC kernels, tensor and vector math, graphics | Compute throughput, training/inference speed | Demands sustained throughput for datasets, checkpoints, and shuffle I/O |

DPU vs GPU in NVMe/TCP environments

With NVMe/TCP, storage traffic rides standard Ethernet and competes with application networking. If the host handles packet processing, crypto, and virtual switching while also running GPU workloads, you can see tail-latency spikes, retransmits, and CPU saturation during bursts. DPU offload can reduce host-side packet processing and stabilize throughput, which helps keep the storage data path predictable when GPUs are pulling data at high rates.

At the same time, GPUs benefit from storage architectures that scale out without pinning data to local disks. That’s where disaggregated storage designs become attractive: compute nodes with GPUs scale independently, while storage nodes provide shared capacity and performance over Ethernet.

Simplyblock™ for GPU pipelines and DPU-aware data paths

In architectures that pair DPUs with GPUs, the GPUs depend on a sustained data feed and fast checkpointing, while the DPUs offload infrastructure processing and keep host CPU cycles available. simplyblock provides Software-defined Block Storage with an SPDK-based, user-space, zero-copy data path, which reduces kernel overhead when GPU nodes pull large training datasets and push frequent checkpoints under parallel load.

In DPU-enabled designs, that same CPU-efficient storage path pairs well with the goal of minimizing host-side networking and storage overhead. With CSI-based Kubernetes Storage integration and NVMe/TCP over standard Ethernet, simplyblock supports hyper-converged and disaggregated layouts so GPU pools can scale independently from storage capacity, while DPUs are introduced where they deliver measurable efficiency and isolation gains.

What to standardize first

- If the main constraint is GPU utilization due to slow data ingest or checkpointing, prioritize storage throughput, locality strategy, and NVMe/TCP fabric design first, then evaluate DPU offload where host CPU becomes the limiter.

- If host CPUs are saturated by networking, security, or virtual switching at high packet rates, a DPU can claw back cores for applications and storage services.

- If you need stricter multi-tenancy and data-path isolation, DPU separation can simplify policy enforcement and reduce interference between teams.

- If your workload is compute-bound (kernels dominate wall time), GPUs deliver the primary acceleration, and DPUs are a secondary lever.
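The checklist above can be written down as a small decision helper. This is only a sketch of the reasoning in the list: the `NodeProfile` fields and the `first_priorities` function are hypothetical names, and the boolean inputs stand in for whatever measurements (GPU idle time, host CPU profiles, tenancy requirements) a team actually collects.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class NodeProfile:
    """Hypothetical summary of what limits a GPU node today."""
    gpu_stalled_on_io: bool        # slow data ingest or checkpointing
    host_cpu_saturated: bool       # networking, security, or vSwitch load
    needs_strict_isolation: bool   # multi-tenancy or data-path isolation
    compute_bound: bool            # kernels dominate wall time


def first_priorities(profile: NodeProfile) -> list[str]:
    """Return the areas to standardize first, in the order the checklist gives."""
    actions: list[str] = []
    if profile.gpu_stalled_on_io:
        actions.append("storage throughput, locality strategy, NVMe/TCP fabric design")
    if profile.host_cpu_saturated:
        actions.append("DPU offload to reclaim host cores")
    if profile.needs_strict_isolation:
        actions.append("DPU separation for policy enforcement")
    if profile.compute_bound:
        actions.append("GPU capacity first, DPU as a secondary lever")
    return actions


if __name__ == "__main__":
    profile = NodeProfile(
        gpu_stalled_on_io=True,
        host_cpu_saturated=True,
        needs_strict_isolation=False,
        compute_bound=False,
    )
    for action in first_priorities(profile):
        print("-", action)
```

Returning a list rather than a single answer reflects that the conditions are not mutually exclusive: a node can be both I/O-starved and CPU-saturated, in which case the storage path is addressed before DPU offload.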

Related Technologies

These glossary terms are commonly reviewed alongside DPU vs GPU decisions in performance-sensitive Kubernetes Storage environments.

- PCI Express (PCIe)

- Storage Latency

- IOPS (Input/Output Operations Per Second)

- NVMe Latency

Questions and Answers

DPUs offload networking, storage, and security tasks to free up CPUs, while GPUs handle compute-heavy operations like AI, ML, and rendering. Together, they enable scalable, high-performance architectures by separating infrastructure acceleration from application-level compute.

For infrastructure workloads like network virtualization, storage offload, and security, a DPU is far more efficient than a GPU. GPUs shine in AI/ML and rendering, while DPUs optimize data movement and isolation in cloud-native environments.

DPUs and GPUs can be deployed together. DPUs handle infrastructure-level tasks such as NVMe-oF target offload and vSwitch acceleration, while GPUs are used for model training or inference. Separating roles improves efficiency, security, and workload scalability in modern cloud or edge deployments.

For Kubernetes infrastructure, DPUs are more impactful than GPUs. Offloading networking, encryption, and storage tasks to DPUs frees up CPU for workloads. GPUs are only useful if the app specifically requires heavy compute (like AI or image processing).

Unlike GPUs, DPUs bring performance and isolation to the network and storage plane. They reduce noisy-neighbor effects, improve multi-tenancy security, and accelerate I/O paths, all of which are key for scalable cloud-native infrastructure and composable architectures.