Ephemeral Storage in Kubernetes

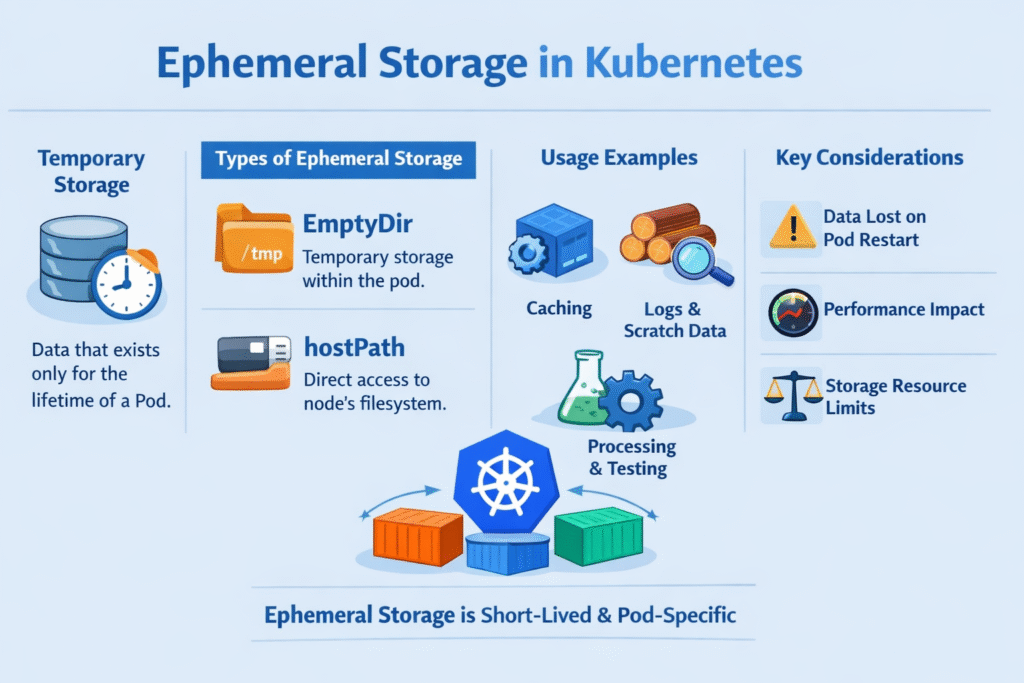

Ephemeral storage in Kubernetes is node-local storage a pod uses for temporary data, such as caches, build artifacts, scratch space, and spill files, and Kubernetes removes it when the pod terminates or reschedules. In practice, the two most common sources are emptyDir on the node filesystem and emptyDir backed by memory (tmpfs). Kubernetes also supports CSI ephemeral volumes, which let CSI drivers provision pod-scoped volumes for the pod lifecycle.
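As a minimal sketch of the two emptyDir variants described above, the Python snippet below builds a pod manifest as a plain dictionary and prints it as JSON, which kubectl accepts alongside YAML. The pod name, image, mount paths, and size limits are illustrative assumptions, not values recommended by this page.

```python
import json


def scratch_pod(name: str, image: str) -> dict:
    """Build a pod manifest with one disk-backed and one memory-backed emptyDir."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "worker",
                    "image": image,
                    "volumeMounts": [
                        {"name": "scratch", "mountPath": "/scratch"},
                        {"name": "hot-cache", "mountPath": "/cache"},
                    ],
                }
            ],
            "volumes": [
                # Disk-backed emptyDir: lives on the node filesystem.
                {"name": "scratch", "emptyDir": {"sizeLimit": "10Gi"}},
                # Memory-backed emptyDir: tmpfs, so usage counts against memory.
                {
                    "name": "hot-cache",
                    "emptyDir": {"medium": "Memory", "sizeLimit": "256Mi"},
                },
            ],
        },
    }


# Hypothetical name and image, used only for illustration.
manifest = scratch_pod("scratch-demo", "busybox:1.36")
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it with kubectl apply -f should create the pod. Both volumes disappear when the pod is removed from the node.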

For platform owners, ephemeral storage becomes a reliability problem when nodes come under disk pressure, pods get evicted, or tail latency spikes during I/O bursts. For DevOps teams, it becomes a performance problem when fast CPUs and NVMe devices still deliver inconsistent p95 and p99 latency because multiple pods contend for the same node disk queue.

Modern Tactics for Making Ephemeral Storage in Kubernetes More Reliable

Treat ephemeral capacity like a first-class resource, not “whatever is left on the root disk.” Start by isolating scratch I/O away from OS partitions, then enforce guardrails so one namespace cannot consume the node. Kubernetes supports eviction signals and resource management patterns that help prevent node-level instability when ephemeral usage spikes.
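One way to express these guardrails, sketched here under assumptions, is a per-container ephemeral-storage request and limit combined with a namespace ResourceQuota. The snippet builds both as Python dictionaries. The namespace name and all sizes are hypothetical and should be sized from measured usage.

```python
import json


def container_resources(request: str, limit: str) -> dict:
    """Resources stanza that requests and caps ephemeral storage for one container."""
    return {
        "requests": {"ephemeral-storage": request},
        "limits": {"ephemeral-storage": limit},
    }


def ephemeral_quota(namespace: str, requests: str, limits: str) -> dict:
    """ResourceQuota capping total ephemeral-storage requests and limits in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "ephemeral-storage-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.ephemeral-storage": requests,
                "limits.ephemeral-storage": limits,
            }
        },
    }


# Hypothetical namespace and sizes, for illustration only.
resources = container_resources(request="2Gi", limit="4Gi")
quota = ephemeral_quota("team-a", requests="50Gi", limits="100Gi")
print(json.dumps(quota, indent=2))
```

With a quota like this in place, pods in the namespace generally need to declare ephemeral-storage requests and limits to be admitted, which is what stops a single namespace from consuming the node.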

When teams standardize the storage layer, they often pair node-local scratch with Kubernetes Storage built on Software-defined Block Storage. That design keeps short-lived data local while routing durable writes to a backend that can apply QoS and isolate tenants.

How Ephemeral Storage in Kubernetes Fits into Kubernetes Storage Architecture

Ephemeral storage does not replace persistent volumes. It complements them. Stateful systems, such as databases and queues, still need persistent volumes so data survives restarts, node drains, and upgrades. Scratch paths should remain rebuildable by design, because a reschedule moves the pod to a new node and drops node-local temp state.

The key architecture decision is placement. If you allow any pod to land anywhere, the scheduler may place I/O-heavy scratch workloads on nodes with slow disks or limited free space. When you rely on local NVMe, scheduling rules such as storage affinity and topology awareness become part of the storage design, not an afterthought.
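A placement rule of this kind can be sketched as a required node affinity on a node label. The example below assumes operators have labeled nodes that carry dedicated scratch NVMe. The label key and value are hypothetical and are not a Kubernetes or simplyblock convention.

```python
import json


def require_node_label(pod_spec: dict, key: str, value: str) -> dict:
    """Return a copy of pod_spec that only schedules onto nodes labeled key=value."""
    affinity = {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {"key": key, "operator": "In", "values": [value]}
                        ]
                    }
                ]
            }
        }
    }
    # Build a new dict so the caller's spec is left untouched.
    return {**pod_spec, "affinity": affinity}


base_spec = {"containers": [{"name": "builder", "image": "busybox:1.36"}]}
# Hypothetical label that an operator would apply to NVMe-equipped nodes.
pinned_spec = require_node_label(base_spec, "example.com/scratch-disk", "nvme")
print(json.dumps(pinned_spec, indent=2))
```

A required rule keeps I/O-heavy scratch workloads off slow nodes, at the cost of leaving pods pending when no labeled node has room. A preferred rule trades that guarantee for schedulability.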

Ephemeral Storage in Kubernetes When Your Backend Uses NVMe/TCP

NVMe/TCP matters even for “ephemeral-heavy” stacks because many apps mix scratch I/O with durable writes in the same pipeline. A clean pattern is hybrid: keep true scratch on node NVMe, and keep state on a backend that delivers NVMe semantics over standard Ethernet using NVMe/TCP. The NVMe/TCP transport specification targets broad deployability across TCP networks, including software and hardware-accelerated implementations.

When you run multi-tenant Kubernetes Storage, NVMe/TCP-based Software-defined Block Storage also gives you a place to enforce predictable service levels for persistent data, while ephemeral storage stays focused on short-lived work.

How to Measure Ephemeral Storage in Kubernetes Performance Without Fooling Yourself

Benchmark ephemeral storage where the application experiences it: inside the pod, under realistic concurrency, and at the same block sizes your workloads use. fio remains a common tool for this because it can simulate mixed random and sequential I/O patterns and report latency distributions, not just throughput.
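As a starting point, the sketch below generates a fio job file for a mixed random read/write test against a scratch mount. The mount path, block size, read/write mix, queue depth, and runtime are assumptions and should be replaced with values that match the real workload.

```python
def fio_job(directory: str, block_size: str = "4k", read_pct: int = 70,
            iodepth: int = 16, numjobs: int = 4, runtime_s: int = 60) -> str:
    """Render a fio job file for a mixed random read/write test in `directory`."""
    options = {
        "ioengine": "libaio",
        # Bypass the page cache; a memory-backed emptyDir may need direct=0.
        "direct": 1,
        "rw": "randrw",
        "rwmixread": read_pct,
        "bs": block_size,
        "iodepth": iodepth,
        "numjobs": numjobs,
        "size": "1g",
        "runtime": runtime_s,
        "time_based": 1,
        "group_reporting": 1,
        "directory": directory,
    }
    lines = ["[scratch-mixed]"] + [f"{key}={value}" for key, value in options.items()]
    return "\n".join(lines) + "\n"


# /scratch is an assumed emptyDir mount path inside the pod.
job_text = fio_job("/scratch")
print(job_text)
```

Writing the output to a file such as scratch.fio and running fio scratch.fio --output-format=json inside the pod produces a latency distribution that can be stored and compared across runs.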

Also measure contention. Two pods with separate emptyDir mounts can still compete for the same underlying node device. Capture p50, p95, and p99 latency during parallel load, and correlate spikes with node disk pressure and eviction events.
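To capture those percentiles from a run, fio's JSON output can be parsed directly. The sketch below assumes completion-latency percentiles are reported under each job's clat_ns.percentile map, which matches fio's default settings in recent versions. The sample data is synthetic and exists only to show the shape of the input.

```python
def latency_percentiles_us(fio_result: dict, direction: str = "read",
                           wanted=(50, 95, 99)) -> dict:
    """Extract completion-latency percentiles (microseconds) per fio job."""
    summary = {}
    for job in fio_result["jobs"]:
        percentiles = job[direction]["clat_ns"]["percentile"]
        # fio keys percentiles as strings such as "99.000000"; values are nanoseconds.
        summary[job["jobname"]] = {
            f"p{p}": percentiles[f"{p:.6f}"] / 1000 for p in wanted
        }
    return summary


# Synthetic sample shaped like `fio --output-format=json` output (not real results).
SAMPLE_RESULT = {
    "jobs": [
        {
            "jobname": "scratch-mixed",
            "read": {
                "clat_ns": {
                    "percentile": {
                        "50.000000": 120000,
                        "95.000000": 850000,
                        "99.000000": 2400000,
                    }
                }
            },
        }
    ]
}

read_latency = latency_percentiles_us(SAMPLE_RESULT)
print(read_latency)
```

Running the same extraction once with a single pod and again with several pods hitting the same node device makes the contention penalty visible as a gap between the two p99 values.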

Practical Ways to Improve Ephemeral Storage in Kubernetes Performance

- Put emptyDir on dedicated node NVMe devices rather than the root disk, and reserve headroom for bursts.

- Use memory-backed emptyDir only for small, high-churn paths, and cap it to avoid node memory pressure.

- Separate scratch from state by routing durable writes to persistent volumes, and keep scratch rebuildable across reschedules.

- Control noisy neighbors with storage QoS and topology-aware placement, so one workload cannot dominate shared queues.

- Reuse the same fio job files in CI to detect p95 and p99 regression before rollout.
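The CI check in the last item can be as small as a comparison of current tail latency against a stored baseline. The sketch below is one possible gate. The 10% tolerance and the latency figures are assumptions chosen for illustration, not measured results.

```python
def find_regressions(baseline: dict, current: dict, tolerance: float = 0.10) -> list:
    """Return the metrics whose current value exceeds baseline by more than tolerance."""
    regressed = []
    for metric, base_value in baseline.items():
        allowed = base_value * (1 + tolerance)
        if current[metric] > allowed:
            regressed.append(metric)
    return regressed


# Hypothetical latencies in microseconds from a baseline run and a candidate run.
baseline_us = {"p95": 800, "p99": 1500}
candidate_us = {"p95": 850, "p99": 1800}

regressions = find_regressions(baseline_us, candidate_us)
print(regressions)
```

A pipeline step could fail the build when the returned list is non-empty. Averaging several runs before comparing helps keep normal run-to-run noise from tripping the gate.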

Decision Matrix – Comparing Ephemeral Storage Options

The table below helps teams choose an option based on lifecycle, scheduling impact, and performance behavior. Use it to align ephemeral needs with Kubernetes Storage standards and your Software-defined Block Storage backend strategy.

| Option | Data location | Lifecycle | Performance behavior | Scheduling impact | Best fit |
|---|---|---|---|---|---|
| emptyDir (disk) | Node filesystem | Pod-bound | Varies by node disk and contention | Low | General scratch and caches |
| emptyDir (memory) | RAM (tmpfs) | Pod-bound | Very fast, size-limited | Medium (memory pressure) | Small temp files, hot caches |
| CSI ephemeral volume | CSI-provisioned | Pod-bound | Depends on driver/backend | Low–Medium | Pod-scoped volumes with CSI workflows |
| Local volume | Node-attached device | Claim-bound | High when backed by NVMe | High (node affinity) | Predictable local NVMe I/O |
| Persistent volume over NVMe/TCP | Shared storage pool | Claim-bound | Consistent NVMe semantics over TCP | Low | Durable data with QoS and scale |

Predictable Ephemeral Storage in Kubernetes with simplyblock™

Simplyblock™ supports Kubernetes Storage with NVMe/TCP and Software-defined Block Storage controls that target predictable latency under multi-tenant load. That helps when applications mix scratch paths with durable writes, and teams want one storage platform across hyper-converged and disaggregated clusters.

Simplyblock’s SPDK-based, user-space data path reduces kernel overhead and avoids extra copies, which lowers CPU cost per I/O as concurrency rises. That architecture pairs well with NVMe-oF goals, especially at scale, where efficiency and tail latency matter more than peak throughput in a single test run.

Where Ephemeral Storage in Kubernetes Is Heading Next

Expect tighter integration between scheduling and storage signals, including more topology-aware provisioning for pod-scoped volumes and clearer operational patterns for managing node disk pressure. CSI continues to standardize how storage integrates with Kubernetes, and that reduces friction when teams adopt advanced backends and consistent policy across clusters.

On the transport side, NVMe standards keep evolving, and NVMe/TCP remains a key option because it aligns with common Ethernet and TCP/IP deployments.

Related Terms

Teams often review these glossary pages alongside Ephemeral Storage in Kubernetes when they set targets for Kubernetes Storage and Software-defined Block Storage.

- Storage Affinity in Kubernetes

- Storage Quality of Service

- CSI Topology Awareness

- HostPath Storage in Kubernetes

- CSI Ephemeral Volumes

Questions and Answers

What is ephemeral storage in Kubernetes?

Ephemeral storage is temporary storage tied to the pod lifecycle. It suits cache files, logs, and scratch space. Once a pod is deleted, its ephemeral data is lost. Workloads that must retain data across pod restarts should use persistent volumes instead.

How does ephemeral storage differ from persistent storage?

Ephemeral storage is transient and managed locally by the kubelet, while persistent storage is typically provisioned through CSI and survives pod rescheduling. Applications that need data durability, such as databases, should use CSI-backed block storage to avoid data loss.

Can ephemeral storage usage affect pod stability?

Yes. If a pod exceeds its ephemeral storage limit, the kubelet may evict it, which affects workload stability. In performance-sensitive environments, monitor disk usage and use storage-aware scheduling to avoid node pressure conditions.

Is ephemeral storage suitable for databases?

No. Databases require data persistence, backups, and recovery support. Using ephemeral storage for stateful workloads carries a high risk of data loss. Instead, use high-performance logical volumes with proper encryption and redundancy.

How can you monitor ephemeral storage usage?

You can monitor ephemeral storage through Kubernetes metrics, kubectl describe pod (for requests, limits, and eviction events), or a Prometheus/Grafana setup. For dynamic observability and volume management, platforms like Simplyblock integrate with Kubernetes to deliver insights into volume usage and performance bottlenecks.
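For a quick look at per-pod usage without a full metrics stack, the kubelet Summary API can be queried with kubectl get --raw "/api/v1/nodes/<node-name>/proxy/stats/summary". The sketch below ranks pods by ephemeral storage used. It assumes each pod entry carries a podRef and an ephemeral-storage.usedBytes field, and the sample payload is synthetic rather than captured from a real node.

```python
def top_ephemeral_users(summary: dict, limit: int = 5) -> list:
    """Rank pods in a kubelet stats summary by ephemeral storage used (bytes)."""
    usage = []
    for pod in summary.get("pods", []):
        used = pod.get("ephemeral-storage", {}).get("usedBytes", 0)
        ref = pod["podRef"]
        usage.append((f'{ref["namespace"]}/{ref["name"]}', used))
    # Largest consumers first.
    return sorted(usage, key=lambda item: item[1], reverse=True)[:limit]


# Synthetic payload shaped like the Summary API response (not real data).
SAMPLE_SUMMARY = {
    "pods": [
        {"podRef": {"name": "api-0", "namespace": "prod"},
         "ephemeral-storage": {"usedBytes": 52428800}},
        {"podRef": {"name": "builder-7", "namespace": "ci"},
         "ephemeral-storage": {"usedBytes": 8589934592}},
        {"podRef": {"name": "idle", "namespace": "dev"}},
    ]
}

ranking = top_ephemeral_users(SAMPLE_SUMMARY, limit=2)
print(ranking)
```

Pods near the top of the ranking are the first candidates for an ephemeral-storage limit or for a move to a dedicated scratch device.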