High Availability


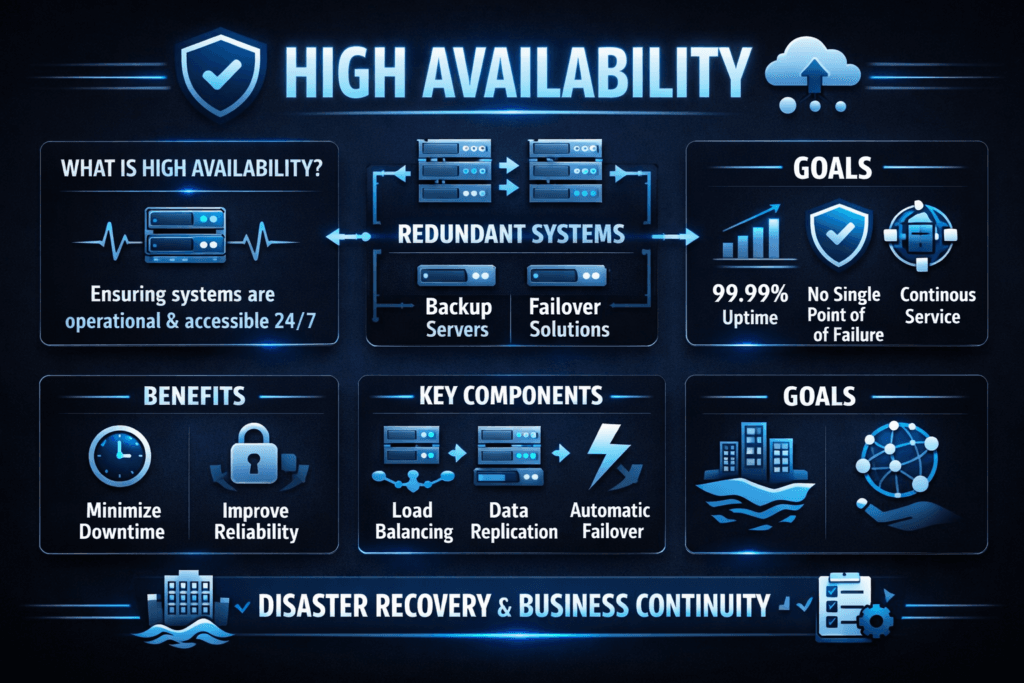

High Availability (HA) describes a system’s ability to meet an agreed-upon uptime level for long periods, even when parts fail. Teams build HA with redundancy, fast detection, and clean failover, so users keep access to apps and data.

Leaders usually tie HA to an SLA and an error budget. Those targets turn downtime into a business number that the org can plan, staff, and fund.
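To make the SLA arithmetic concrete, the sketch below converts an availability percentage into an allowed-downtime budget. It is a minimal Python illustration; the 365-day year, the 30-day month, and the function name are simplifying assumptions rather than part of any SLA standard.

```python
# Minimal sketch: convert an availability target into an allowed-downtime budget.
# Assumes a 365-day year and a 30-day month; names are hypothetical.

SECONDS_PER_YEAR = 365 * 24 * 3600
SECONDS_PER_MONTH = 30 * 24 * 3600


def downtime_budget_seconds(availability_pct: float, period_seconds: float) -> float:
    """Downtime (in seconds) that an availability target allows over a period."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability_pct must be between 0 and 100")
    return period_seconds * (1.0 - availability_pct / 100.0)


if __name__ == "__main__":
    for target in (99.9, 99.95, 99.99, 99.999):
        per_year = downtime_budget_seconds(target, SECONDS_PER_YEAR) / 60
        per_month = downtime_budget_seconds(target, SECONDS_PER_MONTH) / 60
        print(f"{target}%: {per_year:.1f} min/year, {per_month:.1f} min/month")
```

For example, a 99.99% target leaves about 52.6 minutes of downtime per year (roughly 4.3 minutes per 30-day month). Depending on how the SLA counts maintenance, that budget may have to cover both planned work and unplanned failovers.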

For Kubernetes Storage, HA keeps volumes online through node loss, drive loss, and maintenance events, and even through zone outages when you design for that failure domain.

Building Resilient Uptime with Cloud-Native Design

HA works best when architecture matches real failure domains. A “big box” SAN can hide faults, but it also concentrates risk. Scale-out designs spread risk across nodes and let automation handle recovery steps without paging humans for every incident.

Three design choices drive most HA outcomes. First, you pick the failure domain you want to survive (node, rack, or zone). Next, you choose a write policy (sync or async) to balance latency and data loss risk. Finally, you set quorum and fencing rules so the system avoids split-brain. Quorum voting gives distributed systems a clear rule for safe decisions under faults.
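The quorum rule fits in a few lines. The following is a minimal sketch of majority voting with self-fencing, not simplyblock's or any other product's implementation; the function names and node labels are hypothetical, and real systems usually add external fencing (for example, cutting an isolated node's storage or network access) instead of relying only on that node to stop itself.

```python
# Minimal sketch of majority-quorum and self-fencing logic.
# Function names and node labels are hypothetical.


def quorum_size(cluster_size: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    if cluster_size < 1:
        raise ValueError("cluster_size must be >= 1")
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """How many members can fail while a majority still remains."""
    return cluster_size - quorum_size(cluster_size)


def may_serve_writes(cluster_size: int, reachable_members: int) -> bool:
    """A partition may accept writes only if it sees a strict majority
    (reachable_members counts the local node). Two disjoint partitions can
    never both hold a strict majority, which is what rules out split-brain."""
    return reachable_members >= quorum_size(cluster_size)


def on_partition(node: str, cluster_size: int, reachable_members: int) -> str:
    # The minority side stops serving writes instead of guessing that the
    # other side is dead.
    if may_serve_writes(cluster_size, reachable_members):
        return f"{node}: quorum held, continue serving writes"
    return f"{node}: quorum lost, fence (stop accepting writes)"


if __name__ == "__main__":
    # A 5-member cluster split 3 / 2 by a network partition.
    print(on_partition("node-a", cluster_size=5, reachable_members=3))
    print(on_partition("node-d", cluster_size=5, reachable_members=2))
```

Note that a 4-member cluster tolerates only one failure, the same as a 3-member cluster, which is why quorum-based systems usually run an odd number of voters.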

When you adopt Software-defined Block Storage, you can encode these rules as policies, instead of relying on a single storage controller pair.


High Availability in Kubernetes Storage

Kubernetes reschedules pods quickly, but stateful apps still depend on storage that survives the reschedule. HA becomes a platform concern, not just an app feature.

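Control plane design also matters. Kubernetes documents two common HA layouts with kubeadm: stacked control plane nodes and external etcd. Both aim to keep cluster management available when you lose a node. The stacked layout runs etcd on the control plane nodes themselves, which needs fewer machines but couples the loss of a control plane node with the loss of an etcd member; the external layout runs etcd on separate hosts, which decouples those failures at the cost of more infrastructure.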

For day-to-day operations, HA for Kubernetes Storage often comes down to stable attach behavior, predictable rebuild pacing, and low tail latency during recovery. If rebuild traffic floods the network, the cluster may look “healthy” while the database stalls.
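Rebuild pacing is a trade-off between foreground latency and exposure time. The sketch below estimates both for a given rebuild bandwidth cap. It assumes the network link is the only bottleneck and ignores protocol overhead; the function name, volume size, link speed, and cap values are illustrative, not measurements.

```python
# Minimal sketch: estimate rebuild duration and leftover foreground bandwidth
# under a rebuild bandwidth cap. Assumes the network link is the only
# bottleneck and ignores protocol overhead; all inputs are illustrative.

from __future__ import annotations

GBIT_PER_GIB = 8 * 1.073741824  # 1 GiB = 1.073741824 GB = 8.589934592 Gbit


def rebuild_estimate(resync_gib: float, link_gbit_s: float, cap_fraction: float) -> tuple[float, float]:
    """Return (rebuild_seconds, foreground_gbit_s) for a cap in (0, 1]."""
    if not 0.0 < cap_fraction <= 1.0:
        raise ValueError("cap_fraction must be in (0, 1]")
    rebuild_gbit_s = link_gbit_s * cap_fraction
    rebuild_seconds = resync_gib * GBIT_PER_GIB / rebuild_gbit_s
    foreground_gbit_s = link_gbit_s - rebuild_gbit_s
    return rebuild_seconds, foreground_gbit_s


if __name__ == "__main__":
    for cap in (0.8, 0.2):
        secs, left = rebuild_estimate(resync_gib=2000, link_gbit_s=25, cap_fraction=cap)
        print(f"cap {cap:.0%}: rebuild ~{secs / 60:.0f} min, {left:.1f} Gbit/s left for apps")
```

Lowering the cap leaves more bandwidth for the database but keeps the volume in a degraded, reduced-redundancy state for longer, so the cap should be chosen with both numbers in view.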

High Availability and NVMe/TCP

NVMe/TCP is the NVMe-oF transport that carries NVMe commands over standard TCP/IP networks, which lets teams disaggregate compute and storage without specialized fabrics.

That split helps HA in two ways. You can scale storage nodes independently, and you can keep data replicas away from the same failure domain as compute. NVMe/TCP also simplifies operations because it rides on familiar Ethernet and routing patterns, which reduces the number of unique failure modes teams need to troubleshoot.
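Keeping replicas out of each other's failure domain, and optionally out of the compute node's domain, is mostly a placement rule. Here is a minimal sketch assuming each storage node carries a single failure-domain label such as a zone; the inventory, labels, and `place_replicas` function are hypothetical, and a real scheduler would also weigh capacity and load.

```python
# Minimal sketch of failure-domain-aware replica placement. The inventory,
# zone labels, and spreading rule are hypothetical; a real scheduler would also
# weigh capacity, load, and device health.

from __future__ import annotations

from collections import defaultdict


def place_replicas(nodes: dict[str, str], replicas: int, avoid_domain: str | None = None) -> list[str]:
    """Pick `replicas` storage nodes so that no two share a failure domain.

    nodes maps node name -> failure domain (for example, a zone label).
    avoid_domain optionally excludes the domain that hosts the compute workload.
    """
    by_domain: dict[str, list[str]] = defaultdict(list)
    for node, domain in sorted(nodes.items()):
        if domain != avoid_domain:
            by_domain[domain].append(node)
    if len(by_domain) < replicas:
        raise ValueError(f"need {replicas} distinct failure domains, found {len(by_domain)}")
    # One node per domain, so losing any single domain costs at most one replica.
    return [by_domain[domain][0] for domain in sorted(by_domain)[:replicas]]


if __name__ == "__main__":
    inventory = {"s1": "zone-a", "s2": "zone-a", "s3": "zone-b", "s4": "zone-c", "s5": "zone-d"}
    print(place_replicas(inventory, replicas=3, avoid_domain="zone-a"))
```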

In practice, many orgs treat NVMe/TCP as a SAN alternative for high-performance shared block storage that still fits cloud-native change rates.

Measuring and Benchmarking High Availability Performance

HA claims only matter when you measure what users feel during faults.

Track RTO (how fast service returns) and RPO (how much data you can lose), then add application-facing latency. Many systems stay “up,” yet miss their SLO because p99 latency spikes during resync. High-availability software often focuses on behavior during subsystem failure and on minimizing downtime during upgrades.
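Averages hide the problem described above. This minimal sketch computes a nearest-rank percentile over latency samples captured during a fault test and checks it against a p99 target; the function names, synthetic samples, and the 5 ms target are illustrative assumptions.

```python
# Minimal sketch: compare mean and p99 latency from samples captured during a
# failure test. Nearest-rank percentile; sample data and the 5 ms p99 target
# are illustrative assumptions.

from __future__ import annotations

import math
from statistics import mean


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_slo(samples_ms: list[float], p99_target_ms: float) -> bool:
    return percentile(samples_ms, 99) <= p99_target_ms


if __name__ == "__main__":
    steady = [1.0] * 1000
    during_resync = [1.0] * 950 + [40.0] * 50  # synthetic: 5% of requests stall
    for name, samples in (("steady", steady), ("resync", during_resync)):
        print(f"{name}: mean={mean(samples):.2f} ms, p99={percentile(samples, 99):.1f} ms, "
              f"SLO met={meets_slo(samples, p99_target_ms=5.0)}")
```

In the synthetic resync case the mean stays under 3 ms while p99 reaches 40 ms, so a system can be "up" and still miss a 5 ms p99 SLO.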

Run failure tests with production-like load. Pull a node, pause a network path, or simulate a drive drop. Keep the test repeatable, and record the time to detect, fence, rebuild, and return to steady state.
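Recording each phase separately shows where recovery time actually goes. The sketch below turns timestamps from one failure-injection run into per-phase durations; the event names, the `phase_durations` function, and the example timestamps are hypothetical and would come from monitoring or test-harness logs in practice.

```python
# Minimal sketch: convert timestamps from one failure-injection run into
# per-phase durations. Event names and timestamps are hypothetical; in practice
# they would come from monitoring or test-harness logs.

from __future__ import annotations

PHASES = ["fault_injected", "detected", "fenced", "rebuild_done", "steady_state"]


def phase_durations(events: dict[str, float]) -> dict[str, float]:
    """Seconds between consecutive phases, plus the end-to-end total."""
    missing = [p for p in PHASES if p not in events]
    if missing:
        raise ValueError(f"missing events: {missing}")
    durations: dict[str, float] = {}
    for start, end in zip(PHASES, PHASES[1:]):
        if events[end] < events[start]:
            raise ValueError(f"{end} recorded before {start}")
        durations[f"{start}->{end}"] = events[end] - events[start]
    durations["total"] = events[PHASES[-1]] - events[PHASES[0]]
    return durations


if __name__ == "__main__":
    run = {"fault_injected": 0.0, "detected": 4.5, "fenced": 6.0,
           "rebuild_done": 1206.0, "steady_state": 1230.0}  # seconds since fault (hypothetical)
    for phase, seconds in phase_durations(run).items():
        print(f"{phase:28s} {seconds:8.1f} s")
```

Comparing these per-phase numbers across releases makes regressions visible, for example a detection step that slowly creeps from seconds to minutes.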

Practical Steps to Reduce Outage Risk

- Define the failure domain you must survive, then place replicas across that domain boundary.

- Use quorum rules and fencing so only one side serves writes after a partition.

- Cap rebuild bandwidth to protect foreground I/O and avoid tail-latency blowups.

- Separate tenants with QoS, so one workload cannot starve others during recovery.

- Test failover and rollback as part of every release, not only during incidents.
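A rebuild-bandwidth cap like the one in the list above is commonly implemented with a token bucket. This is a minimal Python sketch under stated assumptions: the `TokenBucket` class, the rate, burst size, chunk sizes, and the `copy_chunk` callback are hypothetical, and a production storage system would enforce the limit inside its data path rather than in a polling loop.

```python
# Minimal sketch of a token bucket that caps rebuild bandwidth. Rate, burst,
# chunk sizes, and the copy_chunk callback are hypothetical; real storage
# systems enforce such limits inside the data path.

from __future__ import annotations

import time
from typing import Callable, Iterable


class TokenBucket:
    """Admits on average `rate` bytes/s, with bursts up to `burst` bytes."""

    def __init__(self, rate: float, burst: float, clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.burst = burst
        self.tokens = burst
        self.clock = clock
        self.last = clock()

    def try_consume(self, nbytes: float) -> bool:
        if nbytes > self.burst:
            raise ValueError("chunk larger than burst size can never be admitted")
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if nbytes <= self.tokens:
            self.tokens -= nbytes
            return True
        return False


def throttled_rebuild(chunk_sizes: Iterable[int], bucket: TokenBucket,
                      copy_chunk: Callable[[int], None],
                      sleep: Callable[[float], None] = time.sleep, poll_s: float = 0.001) -> None:
    """Copy rebuild chunks no faster than the bucket allows."""
    for size in chunk_sizes:
        while not bucket.try_consume(size):
            sleep(poll_s)  # back off so the rebuild stays under its cap
        copy_chunk(size)


if __name__ == "__main__":
    copied: list[int] = []
    bucket = TokenBucket(rate=64 * 2**20, burst=4 * 2**20)  # ~64 MiB/s, 4 MiB burst
    start = time.monotonic()
    throttled_rebuild([1 * 2**20] * 32, bucket, copied.append)  # 32 x 1 MiB chunks
    print(f"copied {len(copied)} MiB in {time.monotonic() - start:.2f} s")
```

The burst size lets a short rebuild finish quickly, while the rate bounds how much sustained bandwidth recovery can take from foreground I/O.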

High Availability Design Patterns Compared

The table below compares common availability approaches for stateful systems, with an emphasis on how they behave in Kubernetes Storage and on Ethernet fabrics.

| Approach | What you get | What you give up | Typical fit |
|---|---|---|---|
| Active/Passive failover | Clear roles, simpler ops | Idle standby capacity, slower warm-up | Smaller clusters, steady workloads |
| Active/Active with quorum | Fast failover, better utilization | Stricter design, careful fencing | Multi-tenant platforms |
| Synchronous replication | Very low data-loss risk | Adds write latency | Short-distance domains |
| Asynchronous replication | Lower write latency over distance | Non-zero RPO | Cross-site DR plans |
| Disaggregated NVMe/TCP pools | Independent scaling of compute and storage | Needs strong network hygiene | SAN alternative designs |
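The two replication rows come down to when a write is acknowledged. This minimal model, with hypothetical function names and illustrative latencies, shows why synchronous replication adds write latency (the slowest replica sets the acknowledgement time when every replica must confirm) while asynchronous replication leaves data at risk roughly equal to the write rate times the replication lag.

```python
# Minimal model of write acknowledgement under synchronous vs asynchronous
# replication. Names and numbers are illustrative; the model assumes replica
# writes are issued in parallel and ignores retries and queueing.

from __future__ import annotations


def ack_latency_ms(local_commit_ms: float, replica_ack_ms: list[float], synchronous: bool) -> float:
    """Time until the client sees the write acknowledged."""
    if synchronous:
        # Wait for the local commit and every replica; the slowest one sets the pace.
        # (Quorum-based variants would wait for a majority instead.)
        return max([local_commit_ms, *replica_ack_ms])
    # Async: acknowledge after the local commit; replicas catch up later.
    return local_commit_ms


def data_at_risk_mb(write_rate_mb_s: float, replication_lag_s: float, synchronous: bool) -> float:
    """Rough estimate of acknowledged data lost if the primary fails now (the RPO)."""
    if synchronous:
        return 0.0  # every acknowledged write already exists on the replicas
    return write_rate_mb_s * replication_lag_s


if __name__ == "__main__":
    same_zone = [0.3, 0.4]  # replica ack times in ms (illustrative)
    cross_site = [12.0]
    print("sync, same zone :", ack_latency_ms(0.1, same_zone, synchronous=True), "ms")
    print("sync, cross site:", ack_latency_ms(0.1, cross_site, synchronous=True), "ms")
    print("async           :", ack_latency_ms(0.1, cross_site, synchronous=False), "ms,",
          data_at_risk_mb(200, 5, synchronous=False), "MB at risk with 5 s lag")
```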

Consistent Storage Behavior at Scale With Simplyblock™

Simplyblock™ targets HA at the storage layer for cloud-native stacks. The simplyblock architecture includes HA and fault-tolerance goals for enterprise and Kubernetes environments.

For performance under failure, simplyblock leans on SPDK-style user-space data paths and a zero-copy approach, which can reduce CPU overhead and keep throughput steadier during rebuild work. Those traits matter when you push NVMe/TCP hard and still want predictable latency for Kubernetes Storage governed by Software-defined Block Storage policies.

This approach also fits mixed deployments. Teams can run hyper-converged, disaggregated, or hybrid layouts while keeping the same operational model and policy controls.

From Manual Runbooks to Self-Healing Operations

HA design keeps shifting toward automation and a smaller blast radius. Teams want systems that detect partial failure early, fence cleanly, and recover without manual runbooks.

Hardware offload will also shape HA economics. DPUs and IPUs can take over data-path work from host CPUs, which helps maintain service during rebuild and upgrade windows. Recent vendor and community work keeps pushing NVMe-oF adoption across TCP, RDMA, and Fibre Channel, so architects can match the transport to their cost and latency goals.


Questions and Answers

High availability (HA) refers to a system’s ability to remain operational with minimal downtime. In modern cloud and software-defined storage, HA is achieved through redundancy, failover mechanisms, and data replication across zones or nodes.

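High availability and disaster recovery address different problems. HA minimizes downtime by using redundant systems that take over automatically when a component fails, while disaster recovery focuses on restoring services after an outage that HA could not absorb, such as the loss of a site. For Kubernetes and NVMe/TCP storage, HA keeps workloads running through node and drive failures without waiting on a restore.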

Kubernetes workloads often run mission-critical apps that need constant uptime. When Kubernetes-native storage is highly available, rescheduled pods can reattach their volumes quickly, and volumes stay accessible during node or zone failures.

Features like synchronous replication, incremental snapshots, multi-zone volume provisioning, and failover support are key. Simplyblock’s distributed architecture enables HA by default, ensuring fast access and resilience even during hardware failures.

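High availability mainly shortens the Recovery Time Objective (RTO) by keeping systems running or failing over automatically. Its effect on the Recovery Point Objective (RPO) depends on the replication mode: synchronous replication can bring RPO to zero for acknowledged writes, while asynchronous replication leaves a non-zero RPO. For organizations focused on RTO and RPO reduction, HA is a foundational strategy.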