Infrastructure Processing Unit (IPU)

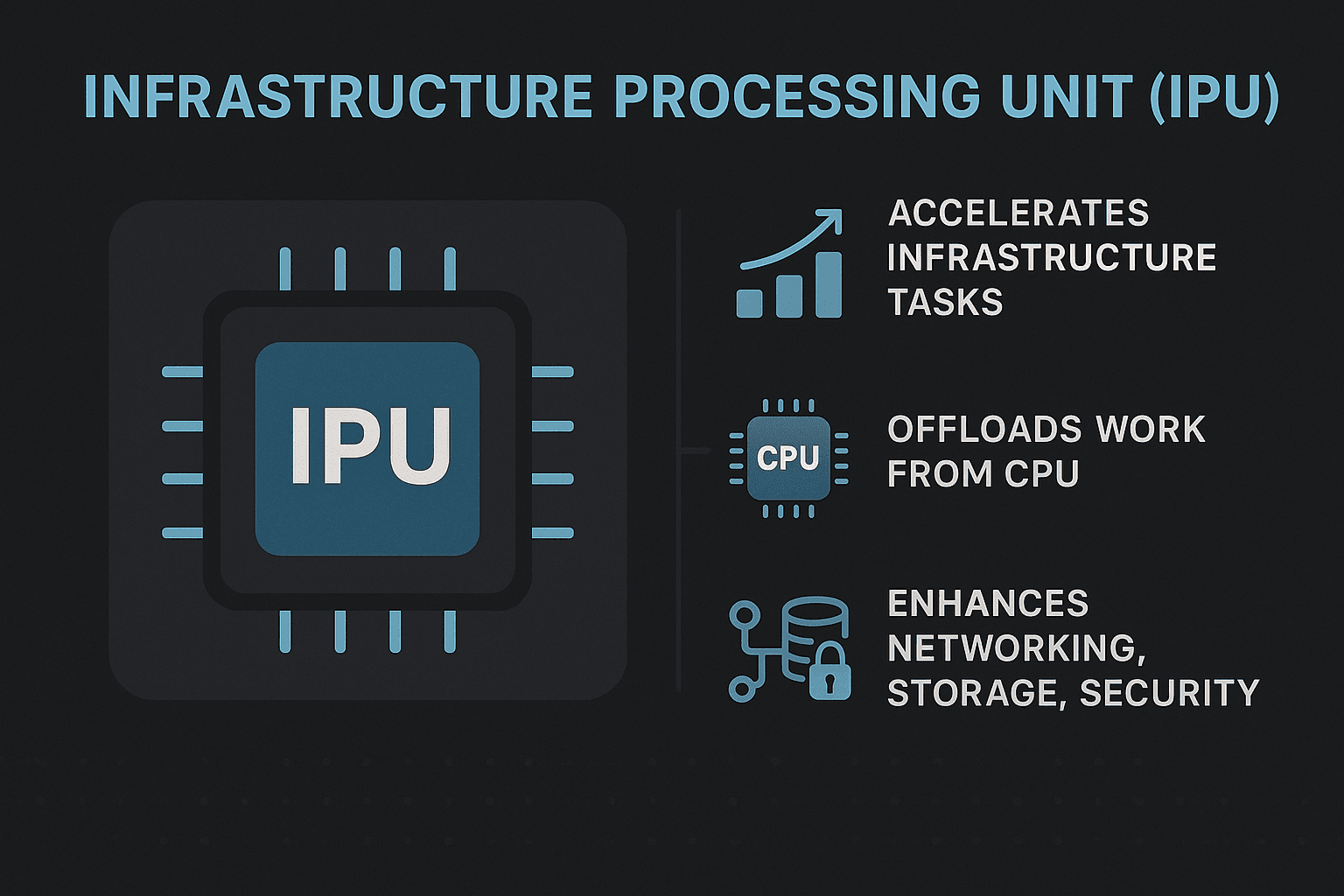

An Infrastructure Processing Unit (IPU) is a specialized processor designed to handle infrastructure-level tasks that normally burden the CPU. Instead of letting the main CPU manage networking, storage, security, and virtualization overhead, an IPU takes over these duties and frees compute resources for applications.

This shift leaves more CPU cycles for applications, which helps data centers scale and supports workloads that need predictable performance. IPUs are becoming an important part of modern cloud and enterprise architectures as operators push for higher efficiency.

How an IPU Handles Infrastructure Workloads

An IPU offloads tasks that were traditionally handled by the server’s CPU. These include storage virtualization, packet processing, encryption, traffic routing, and tenant isolation. By moving this work onto a dedicated processor, the system can run more efficiently and maintain more stable performance under heavy load.

IPUs are built with high-speed network interfaces, dedicated accelerators, and isolated processing environments. This setup allows them to manage data flows with low latency while giving the CPU more room to run application logic.
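The split described above can be sketched as a small placement model. This is an illustrative sketch only: the task kinds mirror the list in this section, while the `Task` type, the `place` and `host_cpu_share` functions, and the CPU-share numbers are hypothetical and do not come from any IPU vendor's API or from measurements.

```python
from dataclasses import dataclass

# Infrastructure task kinds named in this section.
INFRA_KINDS = {
    "storage_virtualization",
    "packet_processing",
    "encryption",
    "traffic_routing",
    "tenant_isolation",
}


@dataclass(frozen=True)
class Task:
    name: str
    kind: str         # "application" or one of INFRA_KINDS
    cpu_share: float  # hypothetical share of host CPU if run on the host


def place(tasks, ipu_present):
    """Split tasks into (host_tasks, ipu_tasks)."""
    host, ipu = [], []
    for task in tasks:
        if ipu_present and task.kind in INFRA_KINDS:
            ipu.append(task)   # infrastructure work moves to the IPU
        else:
            host.append(task)  # everything else stays on the host CPU
    return host, ipu


def host_cpu_share(tasks, ipu_present):
    """Total host CPU share consumed by the tasks left on the host."""
    host, _ = place(tasks, ipu_present)
    return sum(task.cpu_share for task in host)


# Made-up example workload; the shares are assumptions, not benchmarks.
tasks = [
    Task("web-api", "application", 0.60),
    Task("vswitch", "packet_processing", 0.15),
    Task("tls", "encryption", 0.10),
    Task("block-volumes", "storage_virtualization", 0.05),
]

for ipu_present in (False, True):
    share = host_cpu_share(tasks, ipu_present)
    print(f"IPU present: {ipu_present}, host CPU share in use: {share:.2f}")
```

In this toy model the application keeps the same share in both cases; what changes is how much of the host CPU remains free once the infrastructure tasks are placed on the IPU.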

Why IPUs Matter for Large-Scale Systems

As workloads grow, standard CPU-based architectures face bottlenecks. Tasks like encryption, packet routing, and storage management pull resources away from applications. IPUs address this by separating infrastructure work from compute work.

This separation helps keep latency low, reduces contention under heavy traffic, and keeps application performance steadier as the environment becomes more complex.
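One way to see why latency climbs when infrastructure work shares the CPU is a textbook M/M/1 queue, where the mean response time is 1 / (μ − λ) for service rate μ and arrival rate λ. The sketch below is a simplified model under stated assumptions: infrastructure work is assumed to remove a fixed fraction of the CPU's service capacity, and every number is made up for illustration rather than measured on real hardware.

```python
def mm1_mean_response_time(service_rate, arrival_rate):
    """Mean time in system for an M/M/1 queue: 1 / (mu - lambda)."""
    if arrival_rate >= service_rate:
        raise ValueError("arrival rate must be below service rate")
    return 1.0 / (service_rate - arrival_rate)


def app_service_rate(raw_rate, infra_fraction):
    """Service rate left for application requests.

    Assumption: infrastructure work consumes a fixed fraction of capacity.
    """
    if not 0.0 <= infra_fraction < 1.0:
        raise ValueError("infra_fraction must be in [0, 1)")
    return raw_rate * (1.0 - infra_fraction)


RAW_RATE = 1000.0     # hypothetical requests/s the CPU could serve alone
ARRIVAL_RATE = 700.0  # hypothetical incoming requests/s

scenarios = (
    ("CPU also handles infrastructure", 0.25),
    ("infrastructure offloaded", 0.0),
)
for label, infra_fraction in scenarios:
    rate = app_service_rate(RAW_RATE, infra_fraction)
    latency_ms = 1000.0 * mm1_mean_response_time(rate, ARRIVAL_RATE)
    print(f"{label}: {latency_ms:.2f} ms mean response time")
```

The model is deliberately crude, but it shows the non-linear effect: as the effective service rate approaches the arrival rate, small losses of CPU capacity produce large increases in response time.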

Key Advantages of Using an IPU

An IPU offers several benefits for modern data centers:

- More CPU Headroom: Offloading infrastructure tasks frees CPU cycles for applications that need direct processing power.

- Lower Latency for Network and Storage: IPUs manage data paths directly, reducing delays during communication and I/O operations.

- Better Isolation for Multi-Tenant Environments: Infrastructure functions run separately from application logic, improving security and reducing cross-tenant impact.

- Improved Scalability: Systems can add more workloads without overwhelming the CPU, making growth smoother and more predictable.

These benefits are especially important for cloud providers, large enterprises, and high-performance systems.

Where IPUs Are Used Today

IPUs are used in environments where predictable, high-performance infrastructure is essential:

- Cloud platforms that need efficient virtualization and strong tenant isolation.

- High-traffic networking systems that require fast packet processing.

- Storage-heavy environments handling encryption, replication, and data routing.

- AI and analytics clusters where CPU usage must stay focused on computation.

- Kubernetes deployments that benefit from more efficient network and storage processing.

These use cases highlight the growing role of dedicated infrastructure processors.

IPU vs Traditional Server Architectures

Traditional servers rely on the main CPU to manage both application logic and infrastructure tasks, which can create bottlenecks as workloads grow. An IPU changes this model by offloading infrastructure duties, allowing the CPU to stay focused on application performance. This separation leads to more predictable behavior under load and smoother scaling in complex environments.

Here’s how IPUs compare with conventional designs where the CPU handles both compute and infrastructure:

| Feature | Traditional Server CPU | Infrastructure Processing Unit (IPU) |
| --- | --- | --- |
| Workload Handling | Application + infrastructure | Infrastructure tasks only |
| CPU Overhead | High | Lower |
| Latency | Higher under load | Lower, more predictable |
| Security Isolation | Shared environment | Separate isolated domains |
| Scalability | Limited by CPU saturation | Scales better with added workloads |

How IPUs Improve Distributed Storage and Networking

In distributed systems, performance often drops when the CPU is overloaded with infrastructure-level tasks. IPUs counter this by taking over the storage and networking pipelines, which makes I/O behavior more predictable and shortens response times during operations such as replication, routing, and data movement.

By handling these core functions directly, IPUs also help systems scale horizontally without adding significant CPU overhead. This is critical in environments where thousands of nodes must work together without performance dips.
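The scaling argument can be made concrete with some back-of-the-envelope arithmetic. The sketch below assumes a hypothetical cluster in which each node reserves a fixed number of cores for infrastructure work, and it idealizes the offload as complete (no infrastructure cores left on the host). The node count, core counts, and the `app_cores` helper are illustrative assumptions, not figures from any deployment.

```python
def app_cores(nodes, cores_per_node, infra_cores_per_node):
    """Cores available to applications across the whole cluster."""
    if not 0 <= infra_cores_per_node <= cores_per_node:
        raise ValueError("infra cores must be between 0 and cores_per_node")
    return nodes * (cores_per_node - infra_cores_per_node)


NODES = 1000          # hypothetical cluster size
CORES_PER_NODE = 64   # hypothetical host CPU core count
INFRA_CORES = 8       # hypothetical cores spent on infrastructure per node

without_offload = app_cores(NODES, CORES_PER_NODE, INFRA_CORES)
with_offload = app_cores(NODES, CORES_PER_NODE, 0)  # idealized full offload
reclaimed = with_offload - without_offload

# Express the reclaimed cores as the number of non-offloaded nodes
# that would be needed to supply the same application capacity.
equivalent_nodes = reclaimed / (CORES_PER_NODE - INFRA_CORES)

print(f"Application cores without offload: {without_offload}")
print(f"Application cores with offload:    {with_offload}")
print(f"Reclaimed cores:                   {reclaimed}")
print(f"Equivalent extra nodes:            {equivalent_nodes:.1f}")
```

Because the per-node overhead is multiplied by the node count, even a modest per-node saving adds up to a significant amount of application capacity at cluster scale.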

How Simplyblock Strengthens IPU-Based Environments

Simplyblock supports IPU-driven architectures by providing data paths and storage capabilities that work efficiently with offloaded infrastructure tasks. With Simplyblock, organizations can:

- Improve Throughput: High-speed I/O paths help take advantage of IPU-managed traffic and storage acceleration.

- Keep Latency Steady: Optimized processing ensures predictable performance even when workloads grow.

- Reduce Resource Pressure: Less strain on the CPU makes it easier to support mixed workloads without bottlenecks.

- Support Modern Deployments: Works smoothly with Kubernetes, hybrid setups, and multi-tenant environments that benefit from IPU offloading.

What’s Next for Infrastructure Processing Units

As data centers evolve, the need for separating compute and infrastructure functions will continue to grow. IPUs offer a direct path toward more efficient architectures, helping organizations manage larger workloads with lower overhead.

Their role will expand as systems demand better performance, stronger isolation, and smarter scaling.

Related Terms

Teams often review these glossary pages alongside the Infrastructure Processing Unit (IPU) when they define offload boundaries, data-plane isolation, and control-plane responsibilities in high-throughput systems.

- Intel E2200 IPU

- In-network computing

- Network offload on DPUs

- Storage virtualization on DPU

Questions and Answers

What does an IPU do?

An IPU offloads infrastructure tasks such as networking, security, storage virtualization, and traffic management from the host CPU. By handling these workloads independently, it frees CPU resources for applications and improves overall system efficiency.

What problems do IPUs solve?

IPUs address bottlenecks caused by software-defined networking, storage, and security services running on the CPU. They make performance more predictable, increase isolation between tenants, and improve scalability for multi-tenant and high-throughput workloads.

How does an IPU differ from a GPU or a DPU?

GPUs accelerate compute-heavy tasks, while DPUs focus on networking and data movement. An IPU is purpose-built to manage infrastructure functions, such as virtualization, packet processing, and storage services, providing deeper system-level control for cloud-native environments. In practice the DPU and IPU categories overlap, and IPU is the name Intel uses for its products in this class.

Which workloads benefit most from an IPU?

Workloads with heavy infrastructure overhead, such as distributed storage, Kubernetes platforms, NFV environments, and high-density virtualization, benefit the most. IPUs improve performance consistency by limiting noisy-neighbor effects and offloading background processing.

What challenges come with adopting IPUs?

IPU adoption may require updates to orchestration tools, network configurations, and driver support. Integrating IPUs also introduces new management layers, so teams need expertise in hardware offload architectures and in monitoring IPU-specific workloads.