Intel E2200 IPU


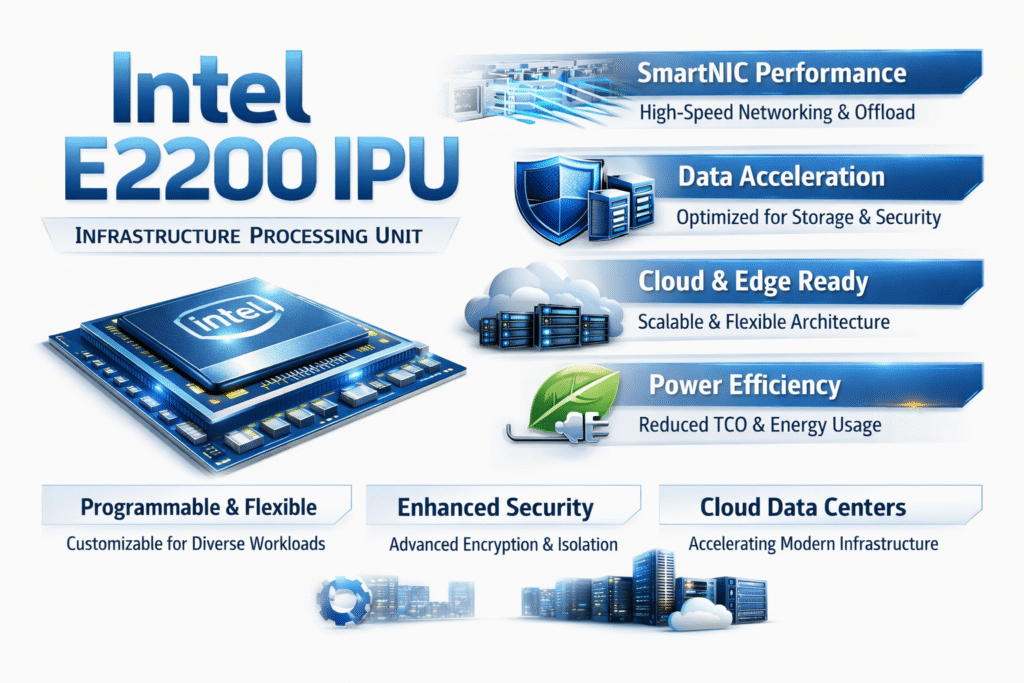

The Intel E2200 IPU (often referenced as “Mount Morgan”) is an Infrastructure Processing Unit intended to move infrastructure services (networking, security, telemetry, and policy enforcement) off the host CPU and onto dedicated hardware next to the server's network interface.

The objective is stronger isolation between applications and platform services, plus more predictable CPU headroom when east-west traffic and storage I/O spike together.

What an IPU takes off the host in storage-heavy environments

In storage-heavy clusters, the host CPU is often doing more than running applications. It is also handling packet steering, encryption, node-level policy checks, observability agents, and sometimes virtual switching. When those functions compete with I/O-intensive workloads, tail latency becomes unstable, and noisy-neighbor events become more frequent in multi-tenant setups.

An IPU changes that boundary by giving the platform a dedicated place to execute infrastructure work. That boundary is why E2200-class devices are evaluated in the same architecture cycle as disaggregated storage, bare-metal Kubernetes, and SAN-alternative designs.


Intel E2200 IPU capabilities that matter for throughput and isolation

Public technical coverage frames the E2200 class as a 400G-era infrastructure device that combines a programmable networking subsystem with on-device compute intended to host infrastructure services.

For platform owners, the key point is not a single throughput number; it is that network and security work can be handled next to the wire, reducing contention with application threads and storage services under load.

NVMe/TCP behavior on Ethernet storage fabrics

NVMe/TCP carries NVMe commands over standard TCP/IP networks, which makes it practical for routable Ethernet, disaggregated storage, and SAN-alternative architectures without requiring specialized RDMA fabrics. It is one of the transports defined under NVMe over Fabrics (NVMe-oF), alongside RDMA and Fibre Channel, within the broader NVM Express specification family.
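To make "NVMe commands over TCP" concrete, the sketch below packs and parses the 8-byte common header that begins every NVMe/TCP protocol data unit (PDU), and builds the fixed-size connection-initialization request a host sends first. The field layout and type codes follow the NVMe/TCP transport specification as commonly implemented; the helper names (`build_icreq`, `parse_common_header`) are illustrative, and this is not a working initiator.

```python
import struct

# NVMe/TCP PDU common header: 8 bytes, little-endian.
#   type (u8), flags (u8), hlen (u8), pdo (u8), plen (u32)
COMMON_HDR = struct.Struct("<BBBBI")

# PDU type codes from the NVMe/TCP transport binding (subset).
PDU_ICREQ = 0x00        # initialize connection request (host -> controller)
PDU_CAPSULE_CMD = 0x04  # command capsule carrying an NVMe submission queue entry

ICREQ_LEN = 128         # an ICReq PDU has a fixed length of 128 bytes


def build_icreq(maxr2t: int = 0) -> bytes:
    """Build an ICReq PDU with no header/data digests requested (illustrative)."""
    hdr = COMMON_HDR.pack(PDU_ICREQ, 0, ICREQ_LEN, 0, ICREQ_LEN)
    # Body: pfv (u16) = 0, hpda (u8) = 0, digest flags (u8) = 0, maxr2t (u32).
    body = struct.pack("<HBBI", 0, 0, 0, maxr2t)
    # The remaining bytes of the PDU are reserved and sent as zeros.
    return (hdr + body).ljust(ICREQ_LEN, b"\x00")


def parse_common_header(pdu: bytes) -> dict:
    """Decode the first 8 bytes of any NVMe/TCP PDU."""
    pdu_type, flags, hlen, pdo, plen = COMMON_HDR.unpack_from(pdu)
    return {"type": pdu_type, "flags": flags, "hlen": hlen, "pdo": pdo, "plen": plen}


if __name__ == "__main__":
    print(parse_common_header(build_icreq()))
```

Every PDU, including each command capsule, starts with this header, so the per-PDU framing work is part of what the TCP path has to handle at high IOPS.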

At high IOPS, hosts still pay for TCP stack work, encryption, policy checks, and telemetry. In E2200-style designs, more of that infrastructure overhead can be removed from the application CPU budget, which helps protect p99 latency and improves consolidation, especially when multiple tenants share a Kubernetes cluster.
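Since p99 latency is the metric this page keeps returning to, here is a minimal sketch of how it is computed from raw samples using the nearest-rank method. The sample values are made up purely to show why a mean can look healthy while the tail does not; they are not measurements of any device.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Synthetic latencies in microseconds: mostly fast, with a few slow outliers.
    latencies_us = [200] * 98 + [5000] * 2
    print("mean:", sum(latencies_us) / len(latencies_us))
    print("p50: ", percentile(latencies_us, 50))
    print("p99: ", percentile(latencies_us, 99))
```

In this synthetic set, only two slow requests out of a hundred are enough to set the p99, which is why contention that appears only during spikes shows up in tail latency long before it moves the average.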

Kubernetes Storage architectures where offload matters

In Kubernetes Storage, infrastructure offload does not replace the storage platform. You still need Software-defined Block Storage to deliver block semantics, resiliency, snapshots, multi-tenancy, and QoS, and you still rely on Kubernetes primitives such as PersistentVolumes and CSI-driven provisioning.
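As a rough illustration of CSI-driven provisioning, the sketch below builds a StorageClass and a PersistentVolumeClaim as plain dictionaries and prints them as JSON, which `kubectl apply -f -` accepts. The provisioner name `csi.example.com` and the object names are placeholders, not the identifiers of any real driver; substitute the values documented by your storage platform.

```python
import json


def storage_class(name, provisioner, parameters=None):
    """Return a StorageClass manifest; parameters are driver-specific strings."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,          # CSI driver that creates the volume
        "parameters": parameters or {},
        "volumeBindingMode": "WaitForFirstConsumer",
        "allowVolumeExpansion": True,
    }


def pvc(name, storage_class_name, size):
    """Return a PersistentVolumeClaim that requests a volume from the class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # "csi.example.com" is a hypothetical provisioner used only for illustration.
    sc = storage_class("fast-block", "csi.example.com")
    claim = pvc("db-data", "fast-block", "100Gi")
    for manifest in (sc, claim):
        print(json.dumps(manifest, indent=2))
```

Nothing in these objects refers to an IPU: offload changes where infrastructure work executes, while the claim-and-provision workflow stays the same.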

Hyper-converged deployments run compute and storage on the same nodes, so CPU contention shows up quickly during spikes. Disaggregated deployments separate storage nodes from application nodes, so the network path becomes the performance focus. Offload helps in both models because it reduces host-side platform tax that otherwise competes with application scheduling and storage I/O.

Comparison of infrastructure offload options for Kubernetes Storage

Platform teams evaluating the Intel E2200 IPU are usually choosing among four options: staying CPU-only, adding selective NIC offloads, adopting a DPU, or moving to an E2200-class IPU. The decision typically comes up when storage traffic and security controls push host CPUs into contention.

The table below summarizes where infrastructure work typically runs and what that means for clusters standardizing on NVMe/TCP, Kubernetes Storage, and Software-defined Block Storage.

| Option | Where infrastructure work runs | Typical effect in storage-heavy clusters |
| --- | --- | --- |
| CPU-only | Host CPU and kernel | Higher contention during spikes, higher p99 risk |
| SmartNIC | Select NIC offloads | Helps specific functions, but much work stays on-host |
| IPU (E2200 class) | On-card compute plus programmable networking | More stable host CPU headroom, steadier p99 under load |
| DPU | On-card compute tightly coupled to NIC | Similar objective, with differences in software and operations |

What the storage layer must still provide

Even with infrastructure offload, storage teams still need a platform that can deliver predictable block behavior at scale.

That typically includes volume lifecycle control, snapshots, replication or erasure coding, multi-tenant isolation, and enforceable QoS so one tenant cannot starve others. This is where NVMe-oF transport choices and user-space I/O design matter.
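One common way to make QoS enforceable is a per-tenant token bucket that caps IOPS while allowing short bursts. The sketch below shows that generic technique. It is not a description of how simplyblock or any particular product implements QoS, and the class names and limits are hypothetical.

```python
class TokenBucket:
    """Allows up to `burst` I/Os at once and refills at `rate_iops` per second."""

    def __init__(self, rate_iops, burst, now=0.0):
        self.rate = float(rate_iops)
        self.capacity = float(burst)
        self.tokens = float(burst)   # start full so an idle tenant can burst
        self.last = now

    def allow(self, now, cost=1.0):
        # Refill according to elapsed time, never exceeding the burst capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False                 # caller should queue or delay the I/O


class TenantLimiter:
    """Keeps one independent bucket per tenant so limits do not interact."""

    def __init__(self, rate_iops, burst):
        self.rate_iops = rate_iops
        self.burst = burst
        self.buckets = {}

    def allow(self, tenant, now, cost=1.0):
        bucket = self.buckets.get(tenant)
        if bucket is None:
            bucket = self.buckets[tenant] = TokenBucket(self.rate_iops, self.burst, now)
        return bucket.allow(now, cost)


if __name__ == "__main__":
    limiter = TenantLimiter(rate_iops=100, burst=10)
    noisy = [limiter.allow("tenant-a", now=0.0) for _ in range(15)]
    print("tenant-a admitted:", noisy.count(True), "of", len(noisy))
    print("tenant-b admitted:", limiter.allow("tenant-b", now=0.0))
```

Because each tenant draws from its own bucket, a tenant that exhausts its allowance is throttled without consuming capacity reserved for others, which is the starvation guarantee described above.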

Simplyblock™ with Intel E2200 IPU in an IPU-aligned stack

Simplyblock™ fits an Intel E2200 IPU deployment because the IPU and the storage layer solve different parts of the same problem: the IPU reduces host-side infrastructure contention during heavy NVMe/TCP traffic, while simplyblock delivers Software-defined Block Storage for Kubernetes Storage with multi-tenancy and QoS.

Simplyblock’s SPDK-based, user-space data path supports low overhead and predictable CPU usage, which complements an IPU-first approach focused on stable p99 under load.

Related Technologies

These glossary terms are commonly reviewed alongside Intel E2200 IPU when planning offload-heavy networking and storage data paths in Kubernetes Storage.

SmartNIC vs DPU vs IPU

PCI Express (PCIe)

Understanding Disaggregated Storage

Container Storage Interface (CSI)

Questions and Answers

The Intel E2200 IPU goes beyond SmartNICs by offering a fully programmable infrastructure processing unit that offloads storage, networking, and security workloads. It enables more secure and efficient software-defined infrastructure by isolating control and data planes.

The E2200 IPU is designed so that NVMe-oF targets can be offloaded directly to the IPU, freeing up host CPU and reducing latency. This makes it well suited to scalable, disaggregated storage in cloud-native and edge environments.

The E2200 IPU enhances Kubernetes storage performance by offloading packet processing, encryption, and I/O tasks. It improves pod density, reduces CPU overhead, and enables secure multi-tenancy at the hardware level.

By handling networking, storage, and security tasks on the IPU itself, the Intel E2200 reduces CPU load and increases performance per watt. It also improves observability and control, which is essential for cloud cost optimization.

High-performance workloads like storage backends, distributed databases, and network-intensive microservices benefit most. The E2200 IPU ensures lower latency and better IOPS, especially when paired with NVMe over TCP and containerized deployments.