IO Path Optimization

Terms related to simplyblock

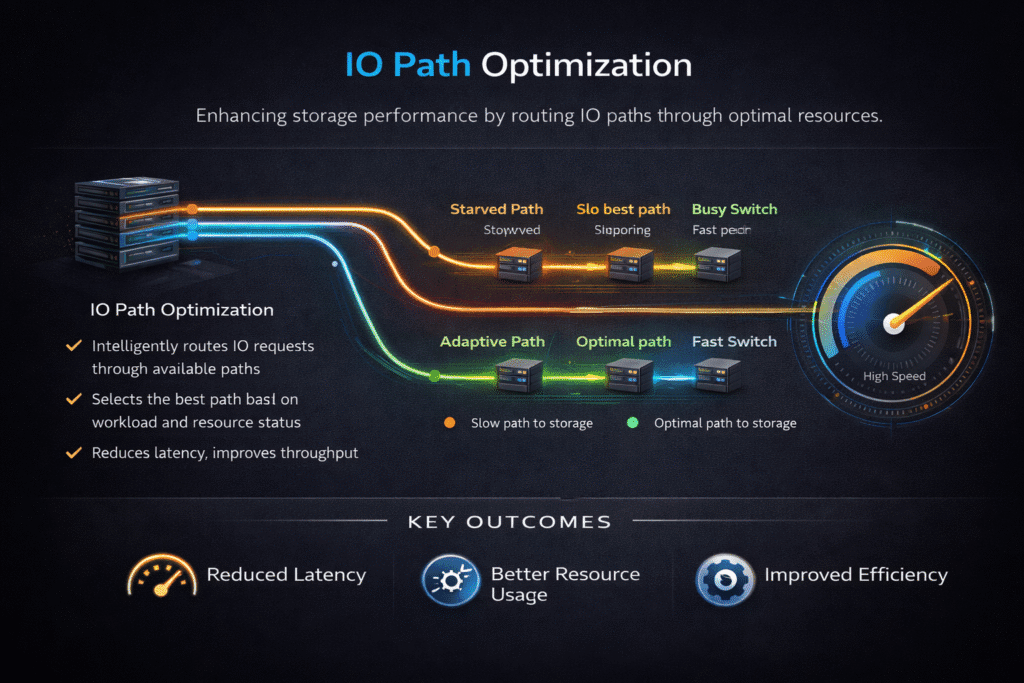

IO Path Optimization reduces work in the end-to-end path of a read or write, from an application call to NVMe media and back. Teams aim for fewer CPU cycles per I/O, fewer context switches, fewer memory copies, and tighter tail latency under load. For executives, that often translates into higher workload density per node and fewer surprise capacity adds. For platform teams, it means steadier SLOs for stateful services that depend on Kubernetes Storage and Software-defined Block Storage.

An I/O path can include application threads, filesystem or raw block access, kernel queues, drivers, the network (for remote storage), the storage target, and NVMe completions. Small inefficiencies stack up fast when many pods share the same nodes and the same fabric.

Building Low-Overhead Data Planes with High-Performance Storage Engines

A lean data plane wins because it keeps the “hot path” short and repeatable. User-space I/O stacks can cut interrupt pressure and reduce extra copies, which helps keep latency stable as queue depth rises. SPDK focuses on user-space drivers and polled-mode processing to reduce overhead and improve CPU efficiency for NVMe-heavy systems.

Storage platforms also need to manage background work. Rebuild, rebalance, and snapshot tasks should not steal queue time from latency-sensitive volumes. Strong QoS controls and tenant isolation keep those activities from turning into p99 spikes.

🚀 Improve IO Path Optimization for NVMe/TCP Kubernetes Storage

Use simplyblock to reduce I/O overhead with SPDK acceleration and keep Software-defined Block Storage consistent at scale.

👉 Use Simplyblock for NVMe over Fabrics & SPDK →

Kubernetes Storage – Scheduling, CSI, and Placement for Fast I/O

Kubernetes can either protect performance or create extra overhead, depending on how you wire the stack. CSI components, kubelet mount flows, and node-side attach logic influence both time-to-ready and steady-state latency. A clean design keeps volume operations predictable during reschedules, drains, and rolling upgrades.

Topology matters just as much as raw device speed. If a pod lands far from its volume, hop count grows, and tail latency follows. Many teams reduce these gaps by pairing storage-aware placement with strict controls in Software-defined Block Storage.

IO Path Optimization for NVMe/TCP Fabrics

NVMe/TCP keeps NVMe semantics while running over standard TCP/IP networks, which makes it useful for disaggregated storage and SAN alternative designs. NVM Express maintains the NVMe over TCP transport specification, which defines how NVMe queues and data move over TCP.

Teams tune NVMe/TCP paths for consistency, not just peak IOPS. Start with clean MTU alignment and stable congestion behavior. Then, validate multipath behavior so a single link issue does not turn into a latency spike. NVMe multipathing supports multiple paths to the same namespace,e so I/O can continue when a link or target node drops.

Measuring and Benchmarking IO Path Optimization Results

Benchmark the path that production hits. Use small random I/O for OLTP, larger blocks for scans and backups, and mixed read/write for real pressure. Fio is widely used for this because it can model block sizes, job counts, queue depth, and latency distributions.

Track p95 and p99 latency, not only averages. Measure CPU per I/O as well, because an inefficient path can burn cores and lower real throughput even when the media stays underutilized. In Kubernetes, include “day-two” events in your plan, such as node drain, reschedule, and upgrade windows, because those events often expose hidden contention.

Practical Levers for Sustained Performance Under Load

Most gains come from tightening the hot path first, then controlling shared resources so neighbors cannot steal latency headroom.

- Reduce copies and kernel crossings with user-space data paths where they fit your operational model.

- Align CPU and NIC queues to avoid cross-core packet churn under NVMe/TCP load.

- Use NVMe multipathing and test failover timing during real congestion.

- Enforce per-volume QoS so background work does not starve critical flows.

- Keep Kubernetes Storage placement aligned with zones, racks, and fabric segments, so pods avoid long paths.

I/O Path Models and Their Trade-Offs

Different designs remove different bottlenecks. This comparison helps teams choose a model that fits their latency targets and ops constraints.

| I/O path model | Typical data path | Strengths | Common trade-off |

|---|---|---|---|

| Kernel-centric block stack | App → kernel → driver → device/transport | Familiar, broad compatibility | More interrupts and context switches |

| User-space accelerated stack | App/daemon → user-space pollers → device/transport | Lower overhead, stronger tail control | Needs careful CPU planning |

| NVMe-oF over NVMe/TCP | Host → TCP/IP fabric → target → NVMe | Standards-based, works on Ethernet | Needs fabric tuning and QoS discipline |

Predictable Storage Latency with Simplyblock™

Simplyblock™ targets efficient data paths for stateful platforms by combining SPDK-style acceleration with NVMe/TCP transport options. The simplyblock NVMe-over-Fabrics and SPDK design centers on user-space, zero-copy behavior to reduce overhead on standard hardware.

On the platform side, simplyblock aligns with Kubernetes Storage workflows so teams can provision and operate persistent volumes through CSI patterns while keeping the data plane lean.

Where IO Path Optimization Is Heading Next

The path keeps moving toward policy-driven control and hardware offload. DPUs and IPUs can take parts of the network and storage work off the host CPU, which helps protect application cores in dense clusters.

NVM Express also continues to evolve NVMe specs, including transport-related updates, as the ecosystem pushes for better reliability, power control, and recovery behavior.

Related Terms

Teams often review these glossary pages alongside IO Path Optimization when they tune Kubernetes Storage and Software-defined Block Storage paths.

Zero-Copy I/O

NVMe Multipathing

NVMe-oF (NVMe over Fabrics)

NVMe-oF Target on DPU

Questions and Answers

Optimizing the IO path reduces latency and increases throughput by eliminating unnecessary layers between the application and storage. In latency-sensitive environments like databases or Kubernetes workloads, streamlined IO paths ensure faster response times and better resource efficiency.

With protocols like NVMe over TCP, IO path optimization enables near-local performance by cutting down protocol overhead and bypassing legacy stacks like SCSI. This leads to significant improvements in IOPS, latency, and CPU usage for remote storage solutions.

Yes, Software-Defined Storage platforms often implement IO path optimization techniques to match or exceed the performance of traditional SANs. By integrating with kernel-level drivers or using RDMA/TCP transport layers, SDS can minimize data hops and increase efficiency.

In Kubernetes, using an optimized CSI driver that supports fast storage backends like NVMe helps shorten the IO path. Aligning workloads with local or high-speed network storage reduces context switches and ensures consistent performance under high IO load.

IO path optimization involves tuning layers such as the file system, block layer, and protocol stack. Techniques include using DPDK, SPDK, or zero-copy IO, as well as removing intermediate software bottlenecks. Solutions like Simplyblock leverage protocol-native features to deliver fast, streamlined storage access.