Kubernetes PodDisruptionBudget for Storage


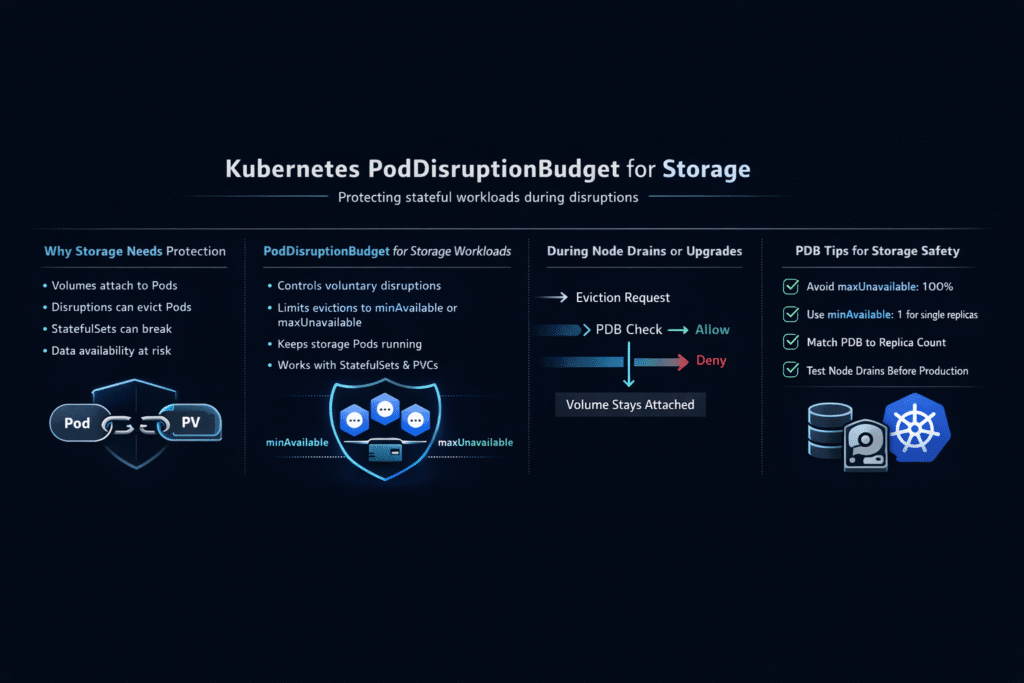

A Kubernetes PodDisruptionBudget (PDB) for storage sets guardrails for voluntary disruptions such as kubectl drain, node pool upgrades, and autoscaler-driven consolidation. Storage teams use a PDB to keep enough storage-critical pods running while Kubernetes evicts and reschedules workloads. That policy helps you avoid quorum loss, remount storms, and cascading retries during maintenance.

PDBs do not cover involuntary failures such as node crashes or power loss. You still need replication, failure-domain design, and consistent recovery behavior. Treat the PDB as an operations control that reduces self-inflicted downtime, not as a durability feature.

In Kubernetes Storage environments, PDB decisions affect two layers. The first layer includes storage system pods, CSI components, and data-plane services that must stay online to serve volumes. The second layer includes the stateful apps that depend on those volumes and must keep enough replicas alive to meet SLO targets. A strong Software-defined Block Storage platform makes PDB outcomes more predictable because it sustains I/O while pods move.

Policy Tuning That Matches Storage Quorum and Recovery

Good PDB values follow the storage availability model, not the replica count alone. A replicated data path, a quorum-based control plane, and a gateway-style design behave differently under eviction pressure. If a three-member storage cluster needs two healthy members to accept writes, a PDB that allows two simultaneous evictions can leave a single member running and stop writes.
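You can sketch the arithmetic behind that example in a few lines of Python. This is a minimal sketch that assumes a simple majority quorum. The function names and the write_quorum parameter are hypothetical. The sketch also ignores members that are already unhealthy before the drain starts, and a real budget has to absorb those as well.

```python
def majority_quorum(members: int) -> int:
    """Smallest member count that forms a strict majority (majority-quorum assumption)."""
    return members // 2 + 1


def safe_max_unavailable(members: int, write_quorum: int) -> int:
    """Largest number of members that may be down at once while the rest still meet write_quorum."""
    if not 1 <= write_quorum <= members:
        raise ValueError("write_quorum must be between 1 and members")
    return members - write_quorum


def budget_keeps_quorum(members: int, write_quorum: int, max_unavailable: int) -> bool:
    """True if evicting max_unavailable members at once still leaves a write quorum."""
    return members - max_unavailable >= write_quorum


# Three members that need two healthy peers to accept writes.
print(safe_max_unavailable(3, majority_quorum(3)))   # 1
print(budget_keeps_quorum(3, 2, max_unavailable=2))  # False
```

With five members and a majority quorum of three, the same function allows two concurrent evictions. That is why the budget should come from the quorum size rather than a fixed number.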

Topology rules help the PDB do its job. Spread storage pods across zones, racks, and node pools so one drain cannot evict the same role everywhere. Pair that placement with clear maintenance runbooks so that upgrade controllers, autoscalers, and operators do not disrupt the same failure domain at the same time.
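One quick way to check placement against the quorum is to find the zone that holds the most members, because a zone-wide drain or outage takes all of them at once. The sketch below is illustrative only. The pod names, zone names, and write_quorum are hypothetical inputs. In a real cluster, the spread itself would come from topologySpreadConstraints or pod anti-affinity keyed on the topology.kubernetes.io/zone label.

```python
from __future__ import annotations

from collections import Counter


def largest_zone_share(pod_zones: dict[str, str]) -> tuple[str, int]:
    """Return the zone holding the most storage pods and how many it holds."""
    counts = Counter(pod_zones.values())
    zone, count = counts.most_common(1)[0]
    return zone, count


def survives_zone_drain(pod_zones: dict[str, str], write_quorum: int) -> bool:
    """True if losing every pod in the most crowded zone still leaves write_quorum members."""
    _, lost = largest_zone_share(pod_zones)
    return len(pod_zones) - lost >= write_quorum


# Hypothetical placements for a three-member storage quorum.
packed = {"storage-0": "zone-a", "storage-1": "zone-a", "storage-2": "zone-b"}
spread = {"storage-0": "zone-a", "storage-1": "zone-b", "storage-2": "zone-c"}
print(survives_zone_drain(packed, write_quorum=2))  # False
print(survives_zone_drain(spread, write_quorum=2))  # True
```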


Kubernetes PodDisruptionBudget for Storage in Kubernetes Storage Topologies

Hyper-converged Kubernetes Storage often places storage pods and application pods on the same nodes. That layout can deliver low-latency local access, but it also raises the risk that a drain removes storage capacity and workloads together. Disaggregated layouts keep storage nodes stable and let compute nodes churn, which can simplify maintenance planning.

Either model needs careful control-plane protection. CSI controller pods handle provisioning, attach, and detach operations, and CSI node pods support the mount path on each worker. When those components restart too freely during a drain, volume operations can stall even if the backend keeps running. A PDB can limit that blast radius, but you must also keep replicas spread and ready.
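For the CSI layer, the budget usually targets the controller Deployment rather than the per-node plugin, because kubectl drain skips DaemonSet-managed pods when run with --ignore-daemonsets. The following is a minimal sketch that prints a policy/v1 PodDisruptionBudget as JSON, which kubectl apply -f accepts. The namespace storage-system and the label app=csi-controller are hypothetical placeholders for whatever your CSI driver's manifests actually use. The budget only keeps a controller available if the Deployment runs more than one replica.

```python
import json


def csi_controller_pdb(namespace: str, app_label: str, max_unavailable: int = 1) -> dict:
    """Build a policy/v1 PodDisruptionBudget that lets at most max_unavailable
    controller pods (matched by the label app=<app_label>) be evicted at once."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app_label}-pdb", "namespace": namespace},
        "spec": {
            "maxUnavailable": max_unavailable,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


if __name__ == "__main__":
    # Hypothetical namespace and label; replace them with your driver's real values.
    manifest = csi_controller_pdb("storage-system", "csi-controller")
    print(json.dumps(manifest, indent=2))
```

You could redirect the output to a file such as csi-controller-pdb.json and apply it with kubectl apply -f csi-controller-pdb.json.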

Kubernetes PodDisruptionBudget for Storage and NVMe/TCP Networked Volumes

NVMe/TCP brings NVMe semantics over standard Ethernet and fits well with disaggregated Kubernetes Storage designs. That combination helps when nodes rotate because the storage endpoints can remain stable while compute reschedules. Multipath support also helps the stack ride through link maintenance without forcing a full I/O reset.

Performance matters during disruption windows. Even when an application stays “up,” it can suffer timeouts if p99 latency spikes during resync, rebuild, or remount. NVMe/TCP-backed Software-defined Block Storage can reduce that variance by keeping the data path efficient and by shortening recovery work.

Validation Drills and Benchmarks for Disruption Readiness

Measure behavior under the same conditions that cause incidents: high load, active rollouts, and node drains. Start with a steady baseline, then run controlled drains on busy nodes, and record the full recovery curve. Capture time to evict, time to reschedule, time to reattach, and time to return to steady p95 and p99 latency.
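Once a drill records timestamps, the recovery curve reduces to a few subtractions plus a percentile comparison. This minimal sketch assumes your test harness already exports drain milestones and (timestamp, latency) samples. The milestone names, the 10-second window, and the 1.2x tolerance over baseline p99 are illustrative choices, not fixed rules. The percentile uses the simple nearest-rank method.

```python
from __future__ import annotations

import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("percentile of an empty sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def phase_durations(events: dict[str, float]) -> dict[str, float]:
    """Seconds spent between consecutive drain milestones (hypothetical milestone names)."""
    order = ["drain_start", "evicted", "rescheduled", "reattached"]
    return {f"{a}->{b}": events[b] - events[a] for a, b in zip(order, order[1:])}


def time_to_steady(samples, drain_start, baseline_p99, window_s=10.0, tolerance=1.2):
    """Seconds from drain_start until the end of the last window whose p99
    exceeded tolerance * baseline_p99 (0.0 if no window breached)."""
    after = [(t, lat) for t, lat in samples if t >= drain_start]
    if not after:
        return 0.0
    end = max(t for t, _ in after)
    last_breach_end = drain_start
    start = drain_start
    while start <= end:
        window = [lat for t, lat in after if start <= t < start + window_s]
        if window and percentile(window, 99) > tolerance * baseline_p99:
            last_breach_end = start + window_s
        start += window_s
    return last_breach_end - drain_start
```

Treating "steady" as the end of the last breaching window, rather than the first good window, keeps a brief dip followed by another spike from counting as recovery.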

A repeatable workload generator such as fio helps you compare storage stacks and settings. Use realistic queue depth, block size, and read/write mix for your databases, analytics jobs, or streaming services. Track volume operation latency alongside IOPS and throughput so you can see whether the system slows on control-plane calls or on the data path.
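Keeping the fio job in code makes it easy to run the same profile before, during, and after a drain. The generator below renders a standard fio job file using regular fio job options: ioengine, direct, time_based, runtime, group_reporting, percentile_list, filename, size, rw, rwmixread, bs, iodepth, and numjobs. The profile values, job name, and target path are hypothetical, so replace them with numbers that match your workload. ioengine=libaio assumes a Linux host.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str        # fio job section name
    rw: str          # fio I/O pattern, e.g. "randrw"
    rwmixread: int   # percentage of reads in a mixed workload
    bs: str          # block size, e.g. "8k"
    iodepth: int     # outstanding I/Os per job
    numjobs: int = 1


def fio_job_file(profile: Profile, filename: str, size: str, runtime_s: int = 300) -> str:
    """Render a fio job file that runs `profile` against `filename` for runtime_s seconds."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",
        "time_based",
        f"runtime={runtime_s}",
        "group_reporting",
        "percentile_list=95:99:99.9",  # report p95, p99, and p99.9 completion latency
        "",
        f"[{profile.name}]",
        f"filename={filename}",
        f"size={size}",
        f"rw={profile.rw}",
        f"rwmixread={profile.rwmixread}",
        f"bs={profile.bs}",
        f"iodepth={profile.iodepth}",
        f"numjobs={profile.numjobs}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical OLTP-like mix against a test file on the volume under test.
    oltp = Profile(name="oltp-like", rw="randrw", rwmixread=70, bs="8k", iodepth=32, numjobs=4)
    print(fio_job_file(oltp, filename="/mnt/testvol/fio.dat", size="10G"), end="")
```

You can save the output as a job file and run it with fio --output-format=json to get machine-readable latency percentiles for each phase of the drill. Point filename at a test file rather than a raw device unless you intend to overwrite that device.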

Operational Changes That Reduce Risk During Node Drains

Apply one set of changes at a time, test it, and keep what moves the numbers. These levers usually deliver the most value:

- Set PDB values from quorum and rebuild needs, then run drain drills under load to confirm behavior.

- Spread replicas across failure domains with topology constraints, and avoid placing siblings on the same node pool.

- Coordinate rollout speed for stateful apps with storage maintenance so you do not stack recoveries.

- Enforce QoS and tenant isolation so background resync work does not starve latency-sensitive services.

- Alert on p99 latency and error rates during drains, and gate upgrades when those signals breach targets.
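The last item can be encoded as a simple gate that an upgrade pipeline checks before it drains the next node. This is a minimal sketch. It does not cover how you collect p99 latency and error rate during the drain, such as from your metrics system. The type names and the target values in the example are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DrainSignals:
    p99_latency_ms: float  # observed p99 latency during the drain window
    error_rate: float      # failed operations / total operations in the same window


@dataclass(frozen=True)
class Targets:
    max_p99_latency_ms: float
    max_error_rate: float


def gate_next_drain(signals: DrainSignals, targets: Targets) -> tuple[bool, list[str]]:
    """Return (proceed, reasons); proceed is False if any signal breaches its target."""
    reasons = []
    if signals.p99_latency_ms > targets.max_p99_latency_ms:
        reasons.append(f"p99 {signals.p99_latency_ms:.1f} ms > {targets.max_p99_latency_ms:.1f} ms")
    if signals.error_rate > targets.max_error_rate:
        reasons.append(f"error rate {signals.error_rate:.4f} > {targets.max_error_rate:.4f}")
    return (not reasons, reasons)


if __name__ == "__main__":
    targets = Targets(max_p99_latency_ms=5.0, max_error_rate=0.001)  # hypothetical SLO
    proceed, why = gate_next_drain(DrainSignals(p99_latency_ms=7.3, error_rate=0.0), targets)
    print("proceed" if proceed else "hold upgrade: " + "; ".join(why))
```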

Storage Disruption Policy Options – What Changes in Practice

The table below shows how common PDB approaches affect storage behavior during planned disruption.

| Option | What it limits | Operational upside | Typical pitfall for stateful storage |
|---|---|---|---|
| No PDB | Nothing | Fast drains | Parallel evictions can break quorum and trigger broad retries |
| PDB with minAvailable | Minimum pods that must stay up | Strong guardrail for quorum roles | Overly strict values can block maintenance |
| PDB with maxUnavailable | Maximum pods that may go down | Predictable drain planning | Mis-sized values can still drop below quorum |
| PDB + NVMe/TCP + Software-defined Block Storage | Eviction rate plus fast I/O path | Better latency stability during churn | Bad sizing still hurts, but recovery finishes sooner |

Simplyblock™ Maintenance Stability for Stateful Clusters

Simplyblock centers on predictable maintenance by keeping the data path lean under pressure. It builds on SPDK to run storage I/O in user space and reduce CPU overhead, which helps protect latency when resync and rebuild work runs in the background. That approach also aligns with DPU offload strategies where teams want more I/O per core.

In Kubernetes Storage deployments, simplyblock supports hyper-converged, disaggregated, and mixed models, so platform teams can match architecture to workload needs. NVMe/TCP connectivity supports high-performance remote volumes on standard networks, and multi-tenancy controls help isolate tenants during disruption events. Those traits make PDB outcomes easier to validate because the platform sustains predictable I/O while the cluster drains and upgrades.

What to Expect Next for Disruption Controls in Stateful Platforms

Kubernetes continues to improve eviction, scheduling, and disruption handling, and the ecosystem now treats safe consolidation as a first-class goal. Storage platforms also add deeper topology awareness, which helps operators align disruption policy with where data lives and how replicas recover.

Teams will likely automate more of this work. Smarter controllers can coordinate autoscaling, upgrades, and storage-aware placement so drains stop colliding with recovery tasks. With NVMe/TCP, Kubernetes Storage can keep high performance during churn, especially when Software-defined Block Storage limits background work and protects tail latency.


Questions and Answers

A PDB limits how many pods can be disrupted at the same time during voluntary events like node maintenance or upgrades. For stateful apps with persistent volumes, it helps keep storage-backed workloads available and consistent during disruption.

Without a proper PDB, multiple pods with attached volumes could be evicted at once, risking downtime or data unavailability. Defining a conservative PDB helps protect stateful applications on block storage from simultaneous eviction in high-availability environments.

A well-defined PDB can slow node upgrades so that Kubernetes evicts critical pods only while enough replicas remain healthy, which reduces the risk of races during volume unmount and reattach. This aligns with Simplyblock’s approach to reliable volume lifecycle handling via CSI.

Set minAvailable or maxUnavailable based on your app’s replication strategy. For a single-replica StatefulSet with an attached volume, minAvailable: 1 blocks voluntary eviction entirely. That protects data consistency during maintenance, but it also stalls node drains until an operator intervenes.

Simplyblock integrates natively with Kubernetes and respects pod scheduling and disruption policies. Combined with its block storage resilience features, it supports zero-downtime maintenance and controlled failovers.