Kubernetes StorageClass Parameters


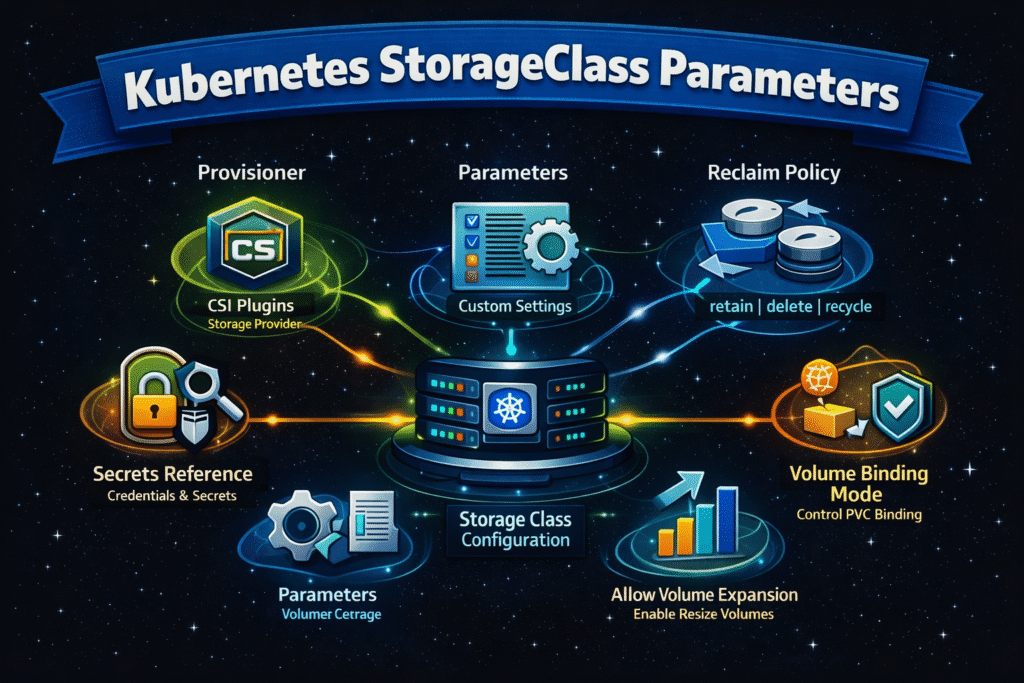

Kubernetes StorageClass Parameters define how Kubernetes requests storage, and how a CSI driver creates volumes to satisfy those requests. They sit at the center of tiering, guardrails, and day-2 operations in Kubernetes Storage, because they turn platform policy into repeatable volume behavior.

A StorageClass combines standard fields (such as `reclaimPolicy` and `volumeBindingMode`) with driver-specific entries under `parameters` (such as pool selection, encryption flags, or fabric choice). When teams tune these values with discipline, they reduce ticket load, shrink drift across clusters, and keep Software-defined Block Storage predictable even under mixed workloads.
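As a rough sketch of how those two layers look in a manifest, the example below pairs the standard `storage.k8s.io/v1` fields with a hypothetical driver. The provisioner name `csi.example.com` and the `pool` and `encryption` keys are illustrative assumptions, not any real driver's API; check your CSI driver's documentation for the keys it accepts.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: general
provisioner: csi.example.com            # hypothetical CSI driver name
# Standard fields, defined by the Kubernetes StorageClass API
reclaimPolicy: Delete                   # Delete or Retain
volumeBindingMode: WaitForFirstConsumer # or Immediate
allowVolumeExpansion: true
# Driver-specific parameters, passed to the provisioner as string key/value pairs
parameters:
  csi.storage.k8s.io/fstype: ext4       # filesystem key read by the CSI external-provisioner
  pool: general-pool                    # hypothetical key
  encryption: "false"                   # hypothetical key; values must be strings
```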

Kubernetes StorageClass Parameters as a Policy Contract Between Platform and Apps

Treat Kubernetes StorageClass Parameters as a contract: app teams declare intent through PVCs, while the platform team encodes the storage promise in StorageClasses. That contract matters most in three areas.

Lifecycle control starts with reclaim behavior. The `reclaimPolicy` field (`Delete` or `Retain`) decides whether the backend volume and its data are removed or kept after the claim is deleted. Scheduling control shows up through the binding mode. With `volumeBindingMode`, `Immediate` binds as soon as the claim exists, while `WaitForFirstConsumer` delays binding until a pod using the claim is scheduled, so the volume lands in a matching topology. Operational control expands through growth rules and mount options. The `allowVolumeExpansion` and `mountOptions` fields shape resize workflows, filesystem behavior, and node-side I/O patterns.
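On the application side of that contract, a claim only names a class and a size. The sketch below assumes a class called `low-latency` already exists; the claim name and capacity are made up for illustration.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: orders-db-data          # hypothetical claim name
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: low-latency # the only storage decision the app team makes
  resources:
    requests:
      storage: 100Gi
```

Everything else (reclaim behavior, binding mode, driver parameters) comes from the class, which is what keeps the policy in the platform team's hands.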

Use this short checklist to keep StorageClasses clean without turning YAML into a bespoke art project:

- Define one default class that matches the most common workload tier, and force exceptions to name a specific class.

- Set reclaim behavior per data type, and keep it consistent across environments.

- Use binding mode to align provisioning with scheduling, especially in multi-zone clusters.

- Keep mount options minimal, and document every option you keep.

- Limit driver-specific parameters to a small, approved set, then version them like any other platform API.
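The first and fourth checklist items could look roughly like this in a manifest. The `storageclass.kubernetes.io/is-default-class` annotation is the standard way to mark a default class; the provisioner name is a placeholder, and `noatime` is only an example of a mount option that carries its reason next to it.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: general
  annotations:
    # PVCs that omit storageClassName are assigned this class
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: csi.example.com    # hypothetical CSI driver name
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
mountOptions:
  - noatime                     # reason: avoid access-time writes on read-heavy volumes
```

Kubernetes does not validate mount options when the class is created, so an invalid option tends to surface later as a failed mount. That is one more reason to keep the list short.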


Kubernetes StorageClass Parameters in Kubernetes Storage Tier Design

Platform teams usually map StorageClasses to tiers such as “general,” “low-latency,” and “protected.” Each tier should change only a few things. Too many toggles create hidden tiers, and hidden tiers create on-call pain.

In Kubernetes Storage, tier design works best when it aligns with workload classes, not teams. Databases often need predictable tail latency and stable throughput. Analytics pipelines often want bandwidth and fast scale-out. Logging systems often need cost control plus safe reclaim defaults. A clean tier model gives executives clearer cost and risk boundaries, and it gives operators fewer moving parts to debug.
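A minimal sketch of such a tier model is shown below, assuming a hypothetical driver `csi.example.com` with a hypothetical `pool` parameter. Each tier changes at most one or two lines relative to the "general" tier, which makes the differences easy to audit.

```yaml
# Tier: general (baseline)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: general
provisioner: csi.example.com    # hypothetical CSI driver name
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
parameters:
  pool: general-pool            # hypothetical key
---
# Tier: low-latency (differs only in the backing pool)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: low-latency
provisioner: csi.example.com
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
parameters:
  pool: nvme-fast-pool          # hypothetical key and value
---
# Tier: protected (differs only in reclaim behavior)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: protected
provisioner: csi.example.com
reclaimPolicy: Retain           # keep the volume after the claim is deleted
volumeBindingMode: WaitForFirstConsumer
parameters:
  pool: general-pool
```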

Kubernetes StorageClass Parameters for NVMe/TCP and Disaggregated Backends

Kubernetes StorageClass Parameters become even more critical when you run NVMe/TCP, disaggregated storage pools, or a SAN alternative architecture. In that model, the StorageClass often selects the fabric type, the pool, and the QoS posture that protects latency during tenant spikes.
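For illustration only, a class for a disaggregated NVMe/TCP backend might carry fabric, pool, and QoS settings along these lines. Every key under `parameters` here (`fabric`, `pool`, `qosMaxIops`, `qosMaxThroughputMBps`) and the provisioner name are invented for the sketch; real drivers, including simplyblock's, define their own names, so treat this as a shape rather than a reference.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nvme-tcp-low-latency
provisioner: csi.example.com    # hypothetical CSI driver name
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
parameters:
  fabric: nvme-tcp              # hypothetical: selects the transport
  pool: tenant-a-fast           # hypothetical: selects the storage pool
  qosMaxIops: "20000"           # hypothetical: per-volume IOPS ceiling
  qosMaxThroughputMBps: "400"   # hypothetical: per-volume bandwidth ceiling
```

Parameter values are always strings, so numeric limits need quotes.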

NVMe/TCP also raises the bar on consistency. Network path, CPU overhead, and queue behavior can move p99 latency more than raw media speed. Teams get stronger results when they keep parameters stable and shift “performance shaping” into the storage layer, where multi-tenant controls can enforce fairness. That approach matches how modern Software-defined Block Storage platforms operate, especially those built on SPDK-style user-space I/O paths.

What to Measure When StorageClass Changes Affect Performance

StorageClass edits rarely fail fast. They tend to fail later, under load, during node drains, or during a resize. Strong measurement focuses on signals that expose those failure modes.

Track volume provisioning time, attach time, and mount time to catch control-plane and node-side friction. Track steady-state IOPS, throughput, and p95/p99 latency to catch data-path drift. Add contention tests to expose noisy-neighbor behavior, because shared pools can hide problems until a busy window arrives.

Tuning Storage Behavior Without Adding YAML Sprawl

Start by reducing variation. Many clusters accumulate near-duplicate StorageClasses that differ by one flag, and that pattern makes audits and incident response harder. Consolidate classes, publish a small tier matrix, and pin key behaviors such as reclaim rules and binding mode.

Next, align parameter choices with the failure model you want. If a workload must move during maintenance, avoid designs that hard-pin storage to a narrow node set. If a workload must keep data after release, set reclaim behavior up front and enforce it through policy.
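Both choices can be written into a class, sketched here under a few assumptions: the provisioner name is a placeholder, the zone names are made up, and the topology key your driver reports may differ from the well-known `topology.kubernetes.io/zone` label.

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: protected-multizone
provisioner: csi.example.com            # hypothetical CSI driver name
reclaimPolicy: Retain                   # data survives deletion of the claim
volumeBindingMode: WaitForFirstConsumer # provision where the pod is scheduled
allowedTopologies:
  - matchLabelExpressions:
      - key: topology.kubernetes.io/zone
        values:                         # hypothetical zone names
          - zone-a
          - zone-b
```

Listing more than one zone keeps provisioning from being pinned to a single failure domain. With `Retain`, released volumes need a documented manual cleanup step, because Kubernetes will not delete them.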

StorageClass Options Side-by-Side – Operational and Performance Trade-offs

The table below compares common StorageClass choices across storage models, since many organizations mix cloud disks, shared filesystems, and NVMe/TCP-backed block storage in one fleet.

| Storage model | Typical StorageClass goal | Parameter focus | Operational trade-off |
|---|---|---|---|
| Cloud block (CSI disks) | Safe defaults for broad use | Reclaim rules, binding mode, expansion | Provider limits and zonal behavior can restrict placement |
| Shared filesystem (NFS) | Simple shared access | Mount options and access patterns | Throughput and latency vary with shared server load |
| Local PV / node-attached | Lowest local latency | Binding mode and topology alignment | Drains and failures turn into storage events |
| NVMe/TCP disaggregated block | SAN alternative with scale | Pool selection, fabric choice, QoS | Needs disciplined tiering to keep p99 stable |

Predictable Storage Policies with Simplyblock™

Simplyblock™ focuses on Kubernetes Storage that behaves like a platform service instead of a set of one-off volume recipes. Simplyblock supports NVMe/TCP and designs for Software-defined Block Storage patterns where you scale storage independently from compute. Teams can keep StorageClass parameters compact while still expressing the outcomes that matter: tier, pool, and performance guardrails.

That approach fits executive goals around utilization and risk, because the platform team can standardize classes across clusters and reduce drift. It also fits operator goals, because a smaller set of classes reduces misprovisioning, shrinks troubleshooting time, and keeps upgrades less fragile.

How StorageClass Parameter Strategy Is Evolving

Enterprises now treat StorageClasses as part of the platform API surface. That shift drives three clear trends: fewer classes with stricter governance, more topology-aware provisioning in multi-zone fleets, and tighter coupling between observability and policy.

As offload technology matures, teams will likely push more of the I/O path into efficient user-space designs and hardware assist, which helps preserve CPU headroom as NVMe/TCP traffic grows.

Related Terms

Teams often review these glossary pages alongside Kubernetes StorageClass Parameters when they standardize Kubernetes Storage and Software-defined Block Storage tiers.

- StorageClass

- Local Node Affinity

- Kubernetes Block Storage

- Zero-Copy I/O

- Retain vs Recycle vs Delete Policy

Questions and Answers

StorageClass parameters define how storage is provisioned by the CSI driver—such as volume type, filesystem, IOPS, or encryption. These settings determine the behavior of dynamically created volumes and are key to automating Kubernetes storage provisioning.

They control backend-specific features like throughput limits, replication, or disk type (e.g., SSD vs HDD). Choosing the right parameters enables cost-optimized storage provisioning without compromising on workload performance or reliability.

Yes. You can create multiple StorageClasses to serve different application needs—like high-performance volumes for databases and lower-tier volumes for logs. This supports efficient multi-workload infrastructure and granular control over resources.

Absolutely. Parameters can include encryption settings, zone awareness, or volume binding modes. These options are especially valuable for securing multi-tenant Kubernetes deployments and aligning storage with cluster topology.

It depends on workload needs, performance goals, and compliance requirements. Refer to your CSI driver’s documentation and test across environments. Simplyblock provides flexible CSI configurations that expose tunable parameters for NVMe, encryption, and replication.