KubeVirt and Kubernetes Virtualization

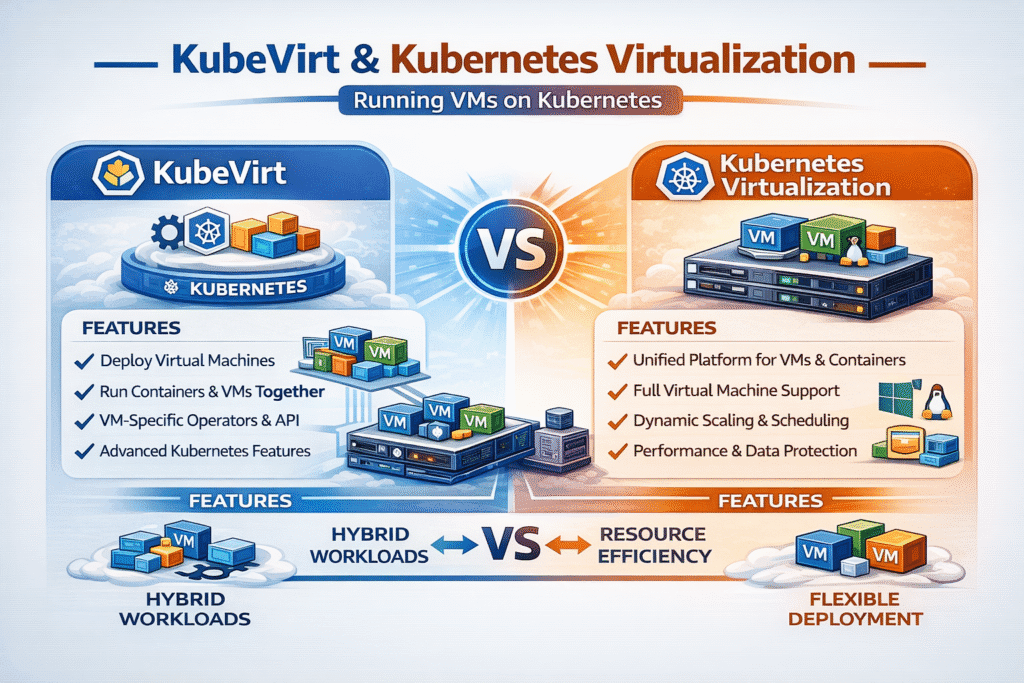

KubeVirt and Kubernetes Virtualization let teams run and manage virtual machines (VMs) using Kubernetes-native APIs, so VMs and containers share the same control plane, RBAC, scheduling, and automation workflows. KubeVirt introduces VM-focused custom resources and runs VM workloads inside pods, which simplifies day-2 operations for mixed estates that still require VM boundaries.

A practical mental model is that Kubernetes becomes the platform layer for legacy and cloud-native workloads, while the virtualization runtime becomes another Kubernetes-managed service rather than a separate infrastructure silo.

Optimizing KubeVirt and Kubernetes Virtualization with Cloud-Native Platform Patterns

Optimization starts by aligning virtualization operations with Kubernetes primitives. That means treating VM lifecycle management as declarative, enforcing namespace-based tenancy, and standardizing policy and automation through existing cluster tooling.

Storage, however, is typically where KubeVirt platforms succeed or fail at scale. VM boot storms, image imports, cloning, snapshots, and steady-state database I/O tend to happen concurrently. If the storage backend cannot maintain consistent tail latency, the impact shows up as guest timeouts, slow application response, and unpredictable density.

Storage Paths for Virtual Machines in Kubernetes Storage

In KubeVirt, VM disks are usually backed by PersistentVolumeClaims (PVCs), so VM I/O behavior is tightly coupled to Kubernetes Storage choices and the CSI implementation that provisions and attaches volumes.
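The PVC-to-VM relationship can be sketched as two manifests. The snippet below is a minimal illustration, not a production template: the names `demo-vm`, `demo-disk`, and the StorageClass `fast-block` are hypothetical, and the sizes are placeholders. It emits JSON, which `kubectl apply -f` accepts in the same way as YAML.

```python
import json


def pvc_manifest(name: str, size: str, storage_class: str) -> dict:
    """Build a PersistentVolumeClaim that requests a raw block volume."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "volumeMode": "Block",
            "storageClassName": storage_class,  # hypothetical class name
            "resources": {"requests": {"storage": size}},
        },
    }


def vm_manifest(name: str, pvc_name: str, memory: str = "2Gi") -> dict:
    """Build a KubeVirt VirtualMachine whose root disk is the given PVC."""
    disk_name = "rootdisk"
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",
            "template": {
                "spec": {
                    "domain": {
                        "devices": {
                            "disks": [{"name": disk_name, "disk": {"bus": "virtio"}}]
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    # The volume name must match the disk name above.
                    "volumes": [
                        {
                            "name": disk_name,
                            "persistentVolumeClaim": {"claimName": pvc_name},
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    for doc in (pvc_manifest("demo-disk", "20Gi", "fast-block"),
                vm_manifest("demo-vm", "demo-disk")):
        print(json.dumps(doc, indent=2))
```

Because the VM only references the claim by name, whatever the StorageClass and its CSI driver deliver is what the guest sees as its disk.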

KubeVirt’s Containerized Data Importer (CDI) adds additional storage pressure because importing images, cloning, and uploading VM templates often generate bursty reads and writes, especially during scale events or when rolling out new golden images across clusters.
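As a rough illustration of what a CDI import looks like, the sketch below builds a `DataVolume` manifest that pulls an image over HTTP. The image URL, resource name, and StorageClass are hypothetical placeholders, and the field layout should be checked against the CDI version in use.

```python
import json


def datavolume_manifest(name: str, image_url: str, size: str,
                        storage_class: str) -> dict:
    """Build a CDI DataVolume that imports a disk image from an HTTP source."""
    return {
        "apiVersion": "cdi.kubevirt.io/v1beta1",
        "kind": "DataVolume",
        "metadata": {"name": name},
        "spec": {
            "source": {"http": {"url": image_url}},  # hypothetical URL
            "storage": {
                "accessModes": ["ReadWriteOnce"],
                "storageClassName": storage_class,  # hypothetical class name
                "resources": {"requests": {"storage": size}},
            },
        },
    }


if __name__ == "__main__":
    dv = datavolume_manifest(
        name="golden-image",
        image_url="https://example.com/images/golden.qcow2",
        size="20Gi",
        storage_class="fast-block",
    )
    print(json.dumps(dv, indent=2))
```

Each such object triggers a download and a write of the full image into a new volume, which is why many imports started at once show up as a burst on the storage backend.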

To keep virtualization predictable, the most important storage characteristics are low latency variance, stable performance under contention, and clear separation between tenants or workload classes. This is why Kubernetes-native Software-defined Block Storage is commonly preferred for VM-backed persistent disks: the control plane can orchestrate block volumes consistently with the rest of the cluster, rather than relying on external SAN workflows.

Why NVMe/TCP Fits Kubernetes-Native Virtualization

NVMe/TCP extends NVMe semantics over standard TCP/IP networks, which makes it a strong match for disaggregated Kubernetes architectures that need high performance without RDMA-specific operational overhead. The NVMe/TCP transport is standardized by NVM Express and is designed for scalable NVMe-oF deployments.

For KubeVirt and Kubernetes Virtualization, NVMe/TCP enables a storage pattern that maps well to platform engineering goals: compute nodes run VM workloads, storage nodes serve remote NVMe namespaces, and Kubernetes automates provisioning and placement while keeping the storage datapath efficient. In practice, that can behave like a SAN alternative while retaining Kubernetes-native deployment and automation benefits.

Simplyblock specifically positions NVMe/TCP as a Kubernetes Storage building block for I/O-intensive workloads and highlights disaggregated and hyper-converged deployment flexibility.

Performance Metrics That Matter for KubeVirt Workloads

A useful benchmark plan measures performance from both the guest and the cluster. Inside the VM, tools like fio can generate repeatable read/write patterns. At the cluster level, you correlate guest results with CSI attach/mount timings, storage latency histograms, network utilization, and node CPU saturation.
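One way to make the guest-side measurement repeatable is to script it. The sketch below assembles an fio command line for a 4 KiB random-read job and extracts p95/p99 completion latency from fio's JSON report. The device path `/dev/vdb`, the job name, and the chosen queue depth are assumptions for illustration, and the JSON field layout reflects fio 3.x output, so verify it against the installed version.

```python
import shlex


def fio_command(device: str, runtime_s: int = 60) -> list[str]:
    """Return an fio argument list for a time-based 4 KiB random-read job."""
    return [
        "fio",
        "--name=randread-4k",       # hypothetical job name
        f"--filename={device}",
        "--rw=randread",
        "--bs=4k",
        "--iodepth=32",
        "--ioengine=libaio",
        "--direct=1",               # bypass the guest page cache
        "--time_based",
        f"--runtime={runtime_s}",
        "--output-format=json",
    ]


def read_tail_latency_us(fio_json: dict) -> dict[str, float]:
    """Extract p95/p99 read completion latency (microseconds) from fio JSON."""
    percentiles = fio_json["jobs"][0]["read"]["clat_ns"]["percentile"]
    return {
        "p95": percentiles["95.000000"] / 1000,
        "p99": percentiles["99.000000"] / 1000,
    }


if __name__ == "__main__":
    # Print the command to run inside the VM; /dev/vdb is an assumed test disk.
    print(shlex.join(fio_command("/dev/vdb")))
```

Feeding the parsed JSON report to `read_tail_latency_us` gives two numbers per run that can be lined up against cluster-side latency histograms for the same time window.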

For virtualization platforms, tail latency (p95 and p99) is usually more actionable than peak throughput because VM fleets amplify spikes into application-visible stalls. Also track boot time for image-based workloads, clone/import duration (CDI), and recovery behavior under node drain, rescheduling, and link interruption.
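A small worked example shows why the tail matters more than the average. The latency samples below are invented for illustration, and the percentile uses the simple nearest-rank definition rather than any particular monitoring tool's method.

```python
import math


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Invented data: 97 fast I/Os at 1 ms and 3 stalls at 40 ms.
latencies_ms = [1.0] * 97 + [40.0] * 3

mean_ms = sum(latencies_ms) / len(latencies_ms)
p95_ms = percentile(latencies_ms, 95)
p99_ms = percentile(latencies_ms, 99)

print(f"mean={mean_ms:.2f} ms  p95={p95_ms:.1f} ms  p99={p99_ms:.1f} ms")
# prints: mean=2.17 ms  p95=1.0 ms  p99=40.0 ms
```

The mean and p95 both look healthy here, while p99 exposes the 40 ms stalls that a guest would experience as a timeout risk.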

Tuning Methods to Reduce Latency Variance

- Use Software-defined Block Storage with a CSI integration so VM disks map cleanly to PVC lifecycle operations, including snapshots, cloning, and automated provisioning.

- Standardize NVMe/TCP networking with clear separation from east-west service traffic, and validate queue depth and MTU settings across the storage path to reduce jitter.

- Control noisy-neighbor behavior with per-tenant policies and QoS so one VM’s burst does not distort p99 latency for other workloads. Simplyblock documents QoS capabilities in its CSI workflow.

- Optimize image lifecycle operations by staging imports and limiting parallel CDI-heavy workflows during peak hours, since import and clone actions can create unexpected storage bursts.

- Keep the datapath CPU-efficient by preferring user-space storage stacks where appropriate, especially when chasing higher VM density per node. SPDK’s model is designed around user-space, poll-mode efficiency.
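The import-staging idea from the list above can be sketched as a simple batching helper. This is an illustrative assumption about how a pipeline might cap parallel CDI work, not a KubeVirt or CDI feature; the image names and the limit of two are hypothetical.

```python
from typing import Iterator, Sequence


def import_waves(names: Sequence[str], max_parallel: int) -> Iterator[list[str]]:
    """Yield import names in consecutive waves of at most max_parallel items."""
    if max_parallel < 1:
        raise ValueError("max_parallel must be at least 1")
    for start in range(0, len(names), max_parallel):
        yield list(names[start:start + max_parallel])


if __name__ == "__main__":
    pending = ["golden-a", "golden-b", "golden-c", "golden-d", "golden-e"]
    for number, wave in enumerate(import_waves(pending, max_parallel=2), start=1):
        # A real pipeline would create these imports and wait for completion
        # before starting the next wave.
        print(f"wave {number}: {wave}")
```

Waiting for each wave to finish before creating the next one bounds the number of concurrent image writes, at the cost of a longer total rollout.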

Comparing VM Storage Designs for Kubernetes Virtualization

The table below summarizes common ways teams back VM disks for KubeVirt and Kubernetes Virtualization, focusing on Kubernetes Storage operational fit and performance predictability.

| VM disk backend option | Typical latency behavior | Kubernetes operational fit | Networking dependency | Multi-tenancy and QoS |
|---|---|---|---|---|
| Legacy SAN / iSCSI-style block | Often stable average, variable tail under contention | Often relies on external SAN workflows | Dedicated SAN patterns | Vendor-dependent, often coarse |
| Distributed block via general SDS | Can be strong, but tuning-sensitive | Kubernetes integration varies | East-west traffic can contend | Strong, but may require tuning discipline |
| NVMe/TCP-based Kubernetes-native block | Lower variance potential with an efficient datapath | Strong fit via CSI | Commodity Ethernet TCP/IP | Designed for policy-driven isolation |

Predictable Virtual Machine I/O with Simplyblock™

Simplyblock™ targets predictable VM disk behavior by combining NVMe/TCP with Kubernetes Storage integration and a user-space performance approach aligned with SPDK principles, which can reduce kernel overhead and improve CPU efficiency.

For platform teams running KubeVirt, the practical benefits are operational: storage provisioned through Kubernetes workflows, the ability to support disaggregated or hyper-converged designs, and mechanisms for multi-tenancy and performance controls. Simplyblock’s Kubernetes CSI installation and QoS documentation outline the integration points used for provisioning and policy enforcement.

What’s Next for KubeVirt and Kubernetes Virtualization

The direction is toward tighter standardization of VM lifecycle automation in Kubernetes: repeatable templates, policy-as-code, and more predictable image supply chains. Infrastructure advances will likely come from faster storage fabrics, more efficient user-space datapaths, and hardware offload opportunities (DPUs/IPUs) that reduce CPU per I/O and raise VM density without compromising tail latency.

As adoption grows, the differentiator will remain the storage layer’s ability to deliver consistent outcomes for mixed workloads, including VM boot storms, steady-state databases, and multi-tenant environments.

Related Terms

Teams often review these glossary pages alongside KubeVirt and Kubernetes Virtualization when they standardize VM disks on Kubernetes Storage and reduce latency variance.

Persistent Volume Claim (PVC)

Storage Quality of Service (QoS)

Disaggregated Storage

Storage Virtualization

OpenShift Virtualization

Questions and Answers

What is KubeVirt, and what is it used for?

KubeVirt brings virtualization capabilities to Kubernetes by allowing virtual machines to run alongside containers. It is well suited to running legacy workloads within Kubernetes-native environments, bridging the gap between VMs and containers.

How does KubeVirt differ from a traditional virtualization platform?

Unlike standalone virtualization platforms such as VMware vSphere, KubeVirt integrates VM workloads into the Kubernetes control plane. Guests still run on KVM on each node, but containers and VMs are managed through the same API, which simplifies infrastructure and allows shared storage orchestration.

Can VMs and containers run on the same Kubernetes cluster?

Yes. KubeVirt allows you to run VMs and containers on the same Kubernetes cluster. This is useful for gradually migrating legacy VM workloads to cloud-native architectures while still using Kubernetes-native storage.

Does KubeVirt support persistent storage for VMs?

Yes. KubeVirt VM disks can use persistent volumes provisioned through Kubernetes CSI drivers. Backends built on NVMe over TCP provide fast, scalable storage for VMs running inside Kubernetes clusters.

What are the operational benefits of KubeVirt?

KubeVirt enables unified management, automated scaling, and infrastructure consolidation. It reduces complexity by letting teams manage containers and VMs with the same tools and processes.