KVM


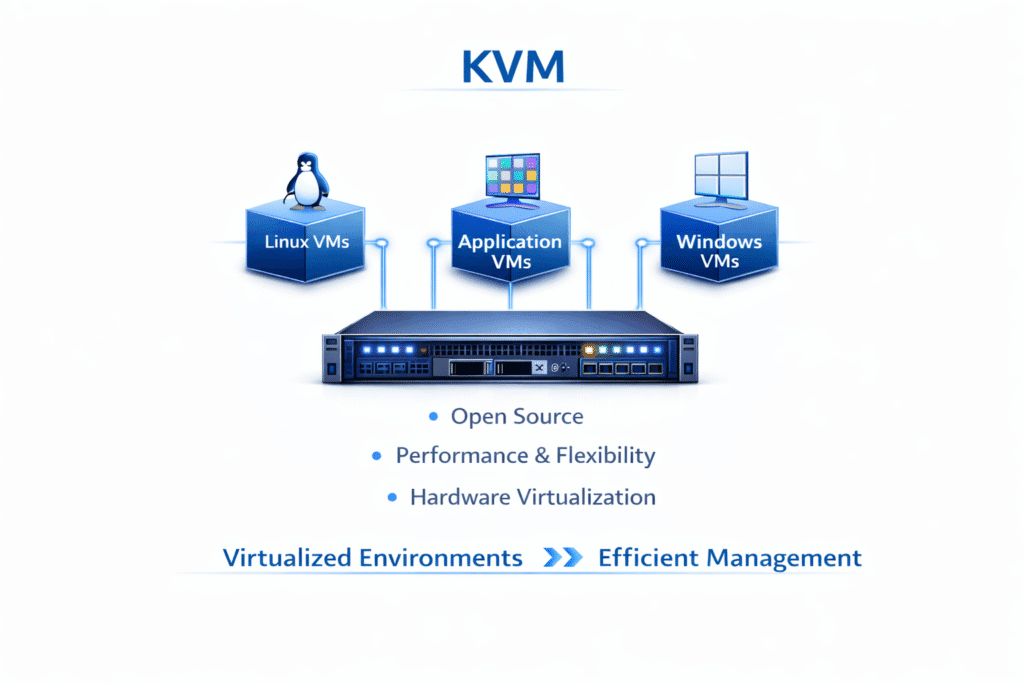

KVM (Kernel-based Virtual Machine) lets Linux run as a hypervisor, so one server whose CPUs support Intel VT-x or AMD-V can run many virtual machines (VMs). Most stacks pair KVM with QEMU for device emulation and with libvirt for VM lifecycle control.
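On an x86 Linux host, you can check the hardware prerequisite by looking for the `vmx` (Intel VT-x) or `svm` (AMD-V) CPU flag. You also need the `/dev/kvm` device node, which appears once the `kvm_intel` or `kvm_amd` module is loaded. The sketch below assumes an x86 host, and its function names are illustrative. Inside a VM without nested virtualization, the flags are usually not exposed.

```python
import os
from typing import Optional


def virt_extension(cpuinfo_text: str) -> Optional[str]:
    """Return "vmx" (Intel VT-x), "svm" (AMD-V), or None, given /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        # On x86, each CPU block lists its feature flags on a line starting with "flags".
        if line.startswith("flags") and ":" in line:
            flags = set(line.split(":", 1)[1].split())
            if "vmx" in flags:
                return "vmx"
            if "svm" in flags:
                return "svm"
    return None


def kvm_ready(cpuinfo_path: str = "/proc/cpuinfo", kvm_dev: str = "/dev/kvm") -> bool:
    """True if the CPU advertises VT-x/AMD-V and the KVM device node exists."""
    try:
        with open(cpuinfo_path) as f:
            text = f.read()
    except OSError:
        return False
    return virt_extension(text) is not None and os.path.exists(kvm_dev)


if __name__ == "__main__":
    print("KVM ready:", kvm_ready())
```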

KVM helps IT teams keep strong isolation, keep VM workflows familiar, and support many guest OS choices, while Linux stays in control.

KVM – The Linux Hypervisor Stack in Practice

KVM sits in the Linux kernel, but operators mostly work with the layers around it. QEMU handles most device behavior, and libvirt manages storage, networking, snapshots, and automation hooks. Large private clouds often run OpenStack on KVM, while Kubernetes-first teams commonly run KubeVirt for VM workflows in clusters.

Virtio drivers usually deliver better VM I/O than fully emulated devices. Many production teams standardize on virtio for disk and network to cut overhead and reduce jitter.
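In libvirt, you choose virtio in the domain XML. Disks use `bus='virtio'` on the `<target>` element, and NICs use `<model type='virtio'/>`. The sketch below builds a minimal `<devices>` fragment with Python's standard library. The device path `/dev/nvme1n1` and bridge `br0` are hypothetical placeholders, and the `cache='none' io='native'` driver settings are one common choice, not a requirement.

```python
import xml.etree.ElementTree as ET


def virtio_devices_xml(disk_path: str, bridge: str) -> str:
    """Build a libvirt <devices> fragment with a virtio-blk disk and a virtio-net NIC."""
    devices = ET.Element("devices")

    disk = ET.SubElement(devices, "disk", type="block", device="disk")
    # Raw block device with the host page cache bypassed.
    ET.SubElement(disk, "driver", name="qemu", type="raw", cache="none", io="native")
    ET.SubElement(disk, "source", dev=disk_path)
    # bus='virtio' exposes a paravirtualized virtio-blk device instead of emulated IDE/SATA.
    ET.SubElement(disk, "target", dev="vda", bus="virtio")

    nic = ET.SubElement(devices, "interface", type="bridge")
    ET.SubElement(nic, "source", bridge=bridge)
    # model type='virtio' selects virtio-net instead of an emulated NIC such as e1000.
    ET.SubElement(nic, "model", type="virtio")

    return ET.tostring(devices, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical host block device and bridge names.
    print(virtio_devices_xml("/dev/nvme1n1", "br0"))
```

The `<disk>` and `<interface>` elements can be merged into an existing domain definition. You can also save them as separate files and attach them with `virsh attach-device <domain> <file> --persistent`.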


Storage I/O Paths That Shape VM Latency

VM disk I/O crosses several queues, not just “the disk.” The path includes guest queues, host scheduling, backend queues, and the storage fabric. Under load, latency often spikes when the host CPU competes with storage threads, the backend pool gets busy, or the network adds jitter.
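Little's Law, a general queueing result rather than something from this article, makes the link between queues and latency concrete. It states that outstanding I/Os equal throughput times mean latency. Once a backend's throughput is capped, every additional queued I/O adds waiting time. The 200,000 IOPS ceiling below is a hypothetical number.

```python
def mean_latency_us(outstanding_ios: float, iops: float) -> float:
    """Little's Law (L = lambda * W) solved for W, returned in microseconds."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return outstanding_ios * 1_000_000 / iops


# Hypothetical backend that saturates at 200,000 IOPS.
print(mean_latency_us(32, 200_000))   # 160.0
print(mean_latency_us(128, 200_000))  # 640.0: 4x the queue, 4x the mean latency
```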

Teams reduce that variance when they treat VM disks as core infrastructure. They track p95 and p99 latency, set limits for noisy neighbors, and match the storage backend to the workload. Mixed VM-and-container platforms benefit the most from that discipline.
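Tail percentiles matter because a mean can look healthy while a few slow I/Os dominate what users feel. The sketch below uses the nearest-rank percentile method, one of several common definitions. The latency samples are made up for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical latencies in microseconds: 98 fast I/Os and 2 slow outliers.
latencies = [200] * 98 + [5000] * 2
print(sum(latencies) / len(latencies))  # 296.0 (the mean hides the outliers)
print(percentile(latencies, 95))        # 200
print(percentile(latencies, 99))        # 5000
```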

KVM Performance in Kubernetes with KubeVirt

KubeVirt runs VMs on Kubernetes, and it typically backs VM disks with PersistentVolumeClaims (PVCs) provisioned through a CSI driver. That pattern unifies policy, identity, and automation across VMs and containers. It also turns VM management into an API: a team defines VM configurations once, then manages VMs with consistent behavior across clusters.
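As a sketch of that PVC-to-VM wiring, the code below emits a raw-block PVC and a KubeVirt `VirtualMachine` that mounts it as a virtio disk. The names `db-root`, `db-vm`, and the StorageClass `nvme-tcp-rwx` are hypothetical. `ReadWriteMany` is what KubeVirt live migration expects for shared disks, but only some CSI drivers support RWX in `Block` mode. A real root disk would also need an OS image, for example imported through CDI. This sketch shows only the wiring.

```python
import json


def pvc_manifest(name: str, storage_class: str, size: str = "50Gi") -> dict:
    """Raw block PVC for a VM disk; RWX allows KubeVirt to live-migrate the VM."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "volumeMode": "Block",
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


def vm_manifest(name: str, pvc_name: str, memory: str = "4Gi") -> dict:
    """KubeVirt VirtualMachine whose disk is backed by the given PVC on the virtio bus."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",
            "template": {
                "spec": {
                    "domain": {
                        "resources": {"requests": {"memory": memory}},
                        # The disk name must match a volume name below.
                        "devices": {"disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]},
                    },
                    "volumes": [
                        {"name": "rootdisk", "persistentVolumeClaim": {"claimName": pvc_name}}
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    # "nvme-tcp-rwx" is a hypothetical StorageClass; use one your CSI driver provides.
    items = [pvc_manifest("db-root", "nvme-tcp-rwx"), vm_manifest("db-vm", "db-root")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The printed `List` can be saved to a file and applied with `kubectl apply -f`.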

Because KubeVirt runs each VM on KVM inside a Kubernetes pod, platform teams can manage virtual machines and containers in the same cluster with the same scheduling, networking, and storage primitives.

Why NVMe/TCP Fits Virtualized Workloads

NVMe/TCP carries NVMe commands over standard Ethernet, so teams can scale shared block storage without adding a specialized fabric on day one. Many VM fleets pick NVMe/TCP because it balances latency, throughput, and day-two operations. Routing and troubleshooting also rely on familiar IP networking tools, which helps during outages and changes.
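Because NVMe/TCP uses ordinary IP addressing, attaching a remote volume on a Linux host is an nvme-cli `connect` call with a transport, an address, a port, and a subsystem NQN. The sketch below only builds and prints that command line; it does not run it. The target address (from the 192.0.2.0/24 documentation range) and the NQN are hypothetical. 4420 is the conventional NVMe-oF I/O port.

```python
import ipaddress
import shlex
from typing import List

# Conventional NVMe-oF I/O port; discovery controllers conventionally listen on 8009.
NVME_TCP_PORT = 4420


def nvme_tcp_connect_argv(traddr: str, subsys_nqn: str, port: int = NVME_TCP_PORT) -> List[str]:
    """Build an nvme-cli command line that attaches an NVMe/TCP subsystem over plain IP."""
    ipaddress.ip_address(traddr)  # raises ValueError for a malformed IPv4/IPv6 address
    if not subsys_nqn.startswith("nqn."):
        raise ValueError("subsystem NQN must start with 'nqn.'")
    return [
        "nvme", "connect",
        "--transport=tcp",
        f"--traddr={traddr}",
        f"--trsvcid={port}",
        f"--nqn={subsys_nqn}",
    ]


if __name__ == "__main__":
    # Hypothetical target address and subsystem NQN.
    argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2024-01.io.example:vm-disks")
    print(shlex.join(argv))
```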

Standardization matters for virtualization. When the storage team adopts NVMe/TCP across clusters, they simplify host onboarding, maintenance windows, and migration plans. Some teams add RDMA tiers for a small set of workloads later, while keeping NVMe/TCP as the default for the broader fleet.

Measuring VM Storage the Way Production Uses It

Benchmark inside the VM, then line up results with host signals. Start with guest-visible latency, including fsync when the app depends on it. Next, review host CPU pressure and backend tail latency.

Most teams use fio with stable job files to compare releases and hardware changes. Add disruption tests too. Run the same profile during node drain, storage failover, and network contention, because those events often expose queue buildup that averages hide.
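One way to keep job files stable is to render them from a single function, so baseline runs and disruption runs share an identical profile. The sketch below uses standard fio options (`ioengine`, `direct`, `percentile_list`, `fsync`, and others). The job name, the default values, and the target `/dev/vdb` are hypothetical. Random writes to a raw device destroy its contents, so point it only at a scratch disk.

```python
import configparser


def fio_job(name: str, filename: str, rw: str = "randwrite", bs: str = "4k",
            iodepth: int = 32, numjobs: int = 4, runtime_s: int = 300,
            fsync_every: int = 0) -> str:
    """Render a fixed fio job file for repeatable runs inside the guest."""
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",                          # bypass the guest page cache
        "time_based",                        # run for the full runtime
        f"runtime={runtime_s}",
        "group_reporting",                   # aggregate results across numjobs
        "percentile_list=50:95:99:99.9",     # report the tail, not just the mean
        "",
        f"[{name}]",
        f"filename={filename}",              # WARNING: writes destroy data on this device
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"numjobs={numjobs}",
    ]
    if fsync_every:
        lines.append(f"fsync={fsync_every}")  # fsync after every N writes (fsync-bound apps)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(fio_job("randwrite-4k", "/dev/vdb", fsync_every=1))
```

Running the saved file with `fio <file> --output-format=json` before, during, and after a node drain or failover makes the p99 shift easy to compare.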

One Practical List for Reducing I/O Variance

- Pin vCPUs for latency-sensitive VMs, and avoid heavy oversubscription on storage-dense nodes.

- Enable virtio multiqueue where supported, and match queue count to workload parallelism.

- Separate storage traffic classes from general east-west traffic, and keep MTU consistent across the path.

- Enforce volume or tenant QoS so one workload cannot dominate shared queues in Kubernetes Storage.

- Standardize shared VM disks on NVMe/TCP-backed volumes when you need mobility and predictable p99.
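Several items in this list map to libvirt domain XML. `<cputune>/<vcpupin>` pins vCPUs, the `queues` attribute on a virtio disk `<driver>` enables virtio-blk multiqueue, and `<iotune>` caps a disk's IOPS on the host side. The sketch below builds those fragments. It assumes four pinned host CPUs and a 20,000 IOPS cap, both hypothetical, and sets one queue per vCPU as a simple starting point. `<iotune>` throttles in QEMU on the host and complements backend QoS rather than replacing it.

```python
import xml.etree.ElementTree as ET
from typing import List, Sequence


def cputune(host_cpus: Sequence[int]) -> ET.Element:
    """<cputune> that pins guest vCPU i to host CPU host_cpus[i]."""
    tune = ET.Element("cputune")
    for vcpu, host_cpu in enumerate(host_cpus):
        ET.SubElement(tune, "vcpupin", vcpu=str(vcpu), cpuset=str(host_cpu))
    return tune


def disk_driver_and_iotune(queues: int, total_iops_sec: int) -> List[ET.Element]:
    """<driver> with virtio-blk multiqueue plus an <iotune> IOPS cap, both for one <disk>."""
    driver = ET.Element("driver", name="qemu", type="raw", cache="none", io="native",
                        queues=str(queues))
    iotune = ET.Element("iotune")
    ET.SubElement(iotune, "total_iops_sec").text = str(total_iops_sec)
    return [driver, iotune]


if __name__ == "__main__":
    pinned = [2, 3, 4, 5]  # hypothetical host CPUs reserved for this VM
    print(ET.tostring(cputune(pinned), encoding="unicode"))
    for el in disk_driver_and_iotune(queues=len(pinned), total_iops_sec=20_000):
        print(ET.tostring(el, encoding="unicode"))
```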

VM Disk Backends for KVM Environments Compared

The table below helps teams compare common VM disk backends when they evaluate Software-defined Block Storage for Kubernetes Storage and VM fleets.

| Backend option | Typical latency behavior | Network needs | Ops complexity | Fit for Kubernetes Storage | Notes |
|---|---|---|---|---|---|
| Local PCIe NVMe | Lowest, host-bound | None | Low | Medium | Best raw latency for KVM, but ties VM disks to the host |
| iSCSI SAN | Higher, variable tail | Ethernet | Medium | Medium | Works with KVM everywhere, but adds protocol overhead |
| Scale-out SDS (Ceph/RBD-style) | Strong when tuned, tail varies | Ethernet or RDMA | High | Medium–High | Common KVM backend at scale; tuning and rebuild behavior matter |
| NVMe/TCP-based software-defined block | Low with controlled tail | Ethernet | Medium | High | Strong fit for KVM VM mobility and stable p99 when QoS is enforced |

Predictable VM Storage with Simplyblock™

Simplyblock supports Software-defined Block Storage with an SPDK-aligned, user-space dataplane and Kubernetes-native operations. That design helps teams lower CPU cost per I/O and protect tail latency as environments scale.

KVM teams can standardize shared VM disks on NVMe/TCP, keep Kubernetes Storage workflows consistent, and apply QoS to reduce noisy-neighbor impact in multi-tenant platforms.
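Per-volume QoS is often built on a rate limiter such as a token bucket. Each I/O spends a token, and tokens refill at a fixed rate up to a burst size, so one tenant cannot drain a shared queue. The class below is a generic sketch of that technique with hypothetical names and numbers. It is not simplyblock's implementation. Time is passed in explicitly to keep the example deterministic.

```python
class TokenBucket:
    """Generic IOPS limiter: refills `rate` tokens per second up to `burst`; one I/O = one token."""

    def __init__(self, rate: float, burst: float) -> None:
        self.rate = rate
        self.burst = burst
        self.tokens = burst  # start full so short bursts are admitted immediately
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill for the elapsed time, capped at the burst size.
        self.tokens = min(self.burst, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should queue or delay this I/O


# Hypothetical volume limited to 1,000 IOPS with a burst of 10.
bucket = TokenBucket(rate=1000, burst=10)
print(sum(bucket.allow(0.0) for _ in range(11)))   # 10 admitted, the 11th is throttled
print(sum(bucket.allow(0.005) for _ in range(6)))  # 5 ms later, 5 tokens have refilled
```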

What Changes Next for KVM Operations and Storage

VM teams keep pushing toward automation that feels closer to Kubernetes: policy-as-code, repeatable templates, and tighter links to scheduling. Hardware offload will also matter more over time. SmartNICs and DPUs can take on more network and storage work, which frees host CPU for tenants and improves consolidation.

On the fabric side, NVMe-oF tooling keeps improving around discovery, connection lifecycle, and fleet operations. That maturity supports NVMe/TCP as a default transport for shared VM storage, while RDMA fits best in the tightest latency tiers.

Related Terms

Teams often review these glossary pages alongside KVM when they align VM operations with Kubernetes Storage and Software-defined Block Storage.

KubeVirt and Kubernetes Virtualization

Kubernetes vs Virtual Machines

Block Storage CSI

Storage Quality of Service (QoS)

KVM Storage

Kernel Virtual Machine

Linux VM

Questions and Answers

Yes, KVM is a widely used hypervisor for running virtualized Kubernetes clusters. With hardware virtualization it delivers near-native CPU performance, and the performance of persistent volumes then depends mostly on the storage backend, which is where high-speed, software-defined storage helps.

KVM runs full virtual machines, each with its own guest kernel, while containers share the host kernel. Containers have lower overhead, but KVM provides stronger isolation. That makes KVM useful for legacy apps and for pairing with Kubernetes-native virtualization tools like KubeVirt.

Yes, both KubeVirt and OpenShift Virtualization use KVM under the hood to run virtual machines inside Kubernetes. This lets you run VMs and containers side-by-side in a unified cloud-native environment.

KVM can be integrated with high-speed storage backends such as NVMe over TCP, improving IOPS and latency for VM workloads. This setup is ideal for databases, analytics, and storage-heavy applications.