Multi-Tenant Kubernetes Storage


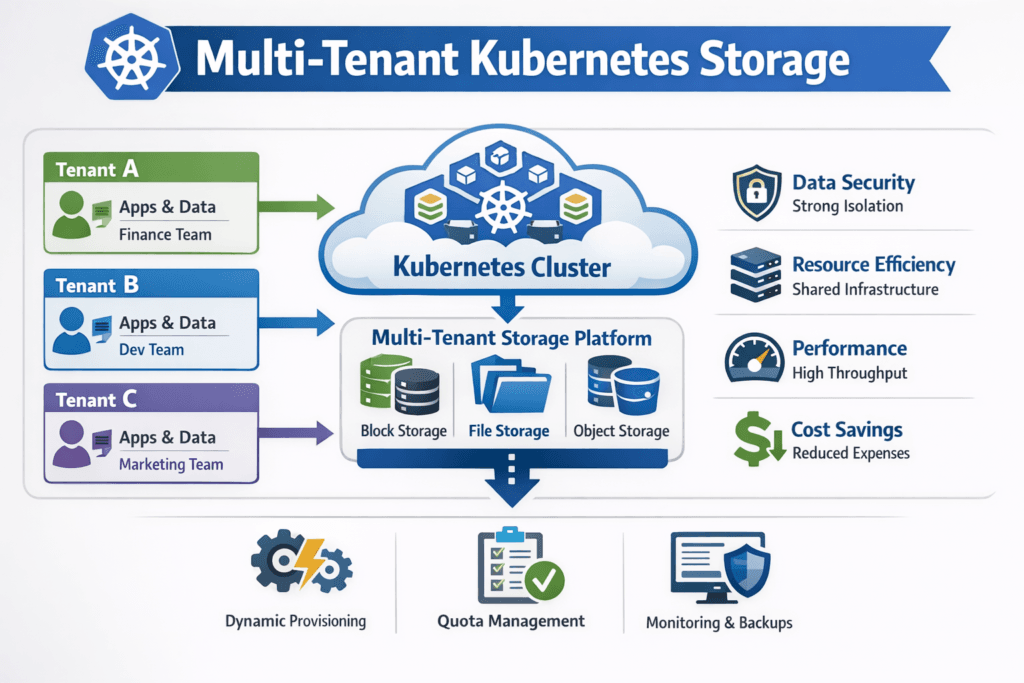

Multi-Tenant Kubernetes Storage describes a setup where several teams or apps share the same Kubernetes cluster and storage backend, while each tenant keeps clear limits, stable performance, and clean separation. Tenants often map to namespaces, projects, or internal business units. They expect the storage layer to enforce guardrails for capacity, IOPS, throughput, and latency.

Shared clusters reduce cost and speed delivery, but storage is often the fastest path to tenant conflict. One noisy workload can drive high queue depth, raise tail latency, and trigger retries across other services. Strong per-tenant isolation in the storage layer prevents that chain reaction and keeps shared platforms usable.

Isolation and Fairness in Shared Kubernetes Storage

Two outcomes matter most: data separation and performance separation. Kubernetes helps with namespaces, quotas, and policy, but the scheduler cannot stop IO contention inside the storage path. Storage needs its own controls to keep tenants from draining capacity or soaking up performance.
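To make the capacity side of this concrete, the sketch below builds a Kubernetes `ResourceQuota` manifest for one tenant namespace. The namespace name, class name, and sizes are illustrative assumptions. A quota like this caps requested capacity and claim counts only; it does nothing about IO contention, which is why the storage layer still needs its own controls.

```python
import json

def tenant_storage_quota(namespace, total_gi, pvc_count, per_class_gi=None):
    """Build a ResourceQuota manifest (as a dict) that caps storage for one namespace."""
    hard = {
        # Total capacity that all PVCs in the namespace may request.
        "requests.storage": f"{total_gi}Gi",
        # Maximum number of PVCs in the namespace.
        "persistentvolumeclaims": str(pvc_count),
    }
    # Optional per-StorageClass capacity caps.
    for class_name, gi in (per_class_gi or {}).items():
        hard[f"{class_name}.storageclass.storage.k8s.io/requests.storage"] = f"{gi}Gi"
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "storage-quota", "namespace": namespace},
        "spec": {"hard": hard},
    }

# "team-a" and the "gold" class are hypothetical names used for illustration.
quota = tenant_storage_quota("team-a", total_gi=500, pvc_count=20, per_class_gi={"gold": 100})
print(json.dumps(quota, indent=2))
```

The printed JSON can be applied with `kubectl apply -f -` after review.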

A practical model starts with Software-defined Block Storage that supports policy per tenant. The platform should track usage by tenant so you can show who used what, when, and how it affected others. That visibility supports chargeback, budgets, and SLO reporting.
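As a rough illustration of per-tenant tracking, the sketch below rolls per-volume samples up into a per-tenant report. The `VolumeSample` record and its fields are hypothetical; a real platform would read these values from its own metrics pipeline. It assumes one sample per volume per reporting interval.

```python
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class VolumeSample:
    """One hypothetical usage record for a volume in a reporting interval."""
    tenant: str
    volume: str
    gib_used: float
    read_ops: int
    write_ops: int

def usage_by_tenant(samples):
    """Sum capacity and IO operations per tenant and count distinct volumes."""
    totals = defaultdict(lambda: {"gib_used": 0.0, "ops": 0, "volumes": set()})
    for s in samples:
        entry = totals[s.tenant]
        entry["gib_used"] += s.gib_used
        entry["ops"] += s.read_ops + s.write_ops
        entry["volumes"].add(s.volume)
    return {
        tenant: {
            "gib_used": entry["gib_used"],
            "ops": entry["ops"],
            "volumes": len(entry["volumes"]),
        }
        for tenant, entry in totals.items()
    }

samples = [
    VolumeSample("team-a", "vol-1", 10.0, 100, 50),
    VolumeSample("team-a", "vol-2", 5.5, 10, 0),
    VolumeSample("team-b", "vol-3", 20.0, 1000, 4000),
]
report = usage_by_tenant(samples)
for tenant, row in sorted(report.items()):
    print(tenant, row)
```

A report in this shape is enough to feed a simple chargeback or budget view per tenant.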


Multi-Tenant Kubernetes Storage Design Patterns for Enterprises

Teams usually pick from three patterns: separate clusters per tenant, shared clusters with strict controls, or a hybrid. Separate clusters cut contention, but they raise cost and slow standardization. Shared clusters scale better, but they demand a stronger storage policy.

A good shared design uses storage classes as tenant tiers. Each tier maps to limits and priorities, so critical services keep stable latency while dev and batch jobs accept tighter caps. Encryption, snapshots, and replication also matter because tenants often carry different risks and recovery needs.
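One way to picture tiers as storage classes is a small table of limits that gets turned into `StorageClass` manifests. In the sketch below, the provisioner name `csi.example.com` and the parameter keys (`qosMaxIops`, `qosMaxThroughputMBps`, `encryption`) are placeholders, not the parameters of any specific CSI driver, and the numbers are illustrative. Check your driver's documentation for the real keys.

```python
# Hypothetical tier table: the limits are illustrative, not recommendations.
TIERS = {
    "gold":   {"max_iops": 20000, "max_mbps": 500, "encrypted": True},
    "silver": {"max_iops": 5000,  "max_mbps": 200, "encrypted": True},
    "bronze": {"max_iops": 1000,  "max_mbps": 50,  "encrypted": False},
}

def storage_class_for(tier, limits, provisioner="csi.example.com"):
    """Build a StorageClass manifest (as a dict) for one tenant tier."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": f"tenant-{tier}"},
        "provisioner": provisioner,  # placeholder CSI driver name
        # StorageClass parameters are opaque strings passed to the driver.
        # The keys below are assumptions made for this sketch.
        "parameters": {
            "qosMaxIops": str(limits["max_iops"]),
            "qosMaxThroughputMBps": str(limits["max_mbps"]),
            "encryption": str(limits["encrypted"]).lower(),
        },
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
    }

classes = [storage_class_for(tier, limits) for tier, limits in TIERS.items()]
for sc in classes:
    print(sc["metadata"]["name"], sc["parameters"])
```

Keeping the tier table in one place makes it easier to review limits as a product catalog rather than as scattered manifests.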

Multi-Tenant Kubernetes Storage and NVMe/TCP

NVMe/TCP fits multi-tenant clusters because it scales on standard Ethernet and works well across routable IP networks. That makes it easier to spread tenants across racks or zones without building a special fabric. It also supports high parallel IO, which helps when many tenants push traffic at once.

In shared clusters, tail latency matters more than peak IOPS. NVMe/TCP works best when the datapath stays lean under load. SPDK-based user-space IO can reduce CPU overhead and avoid extra copies, which helps keep queues under control when tenant demand spikes.

Measuring Tenant-Level Performance in Kubernetes Storage

Measure per-tenant behavior, not only cluster totals. Track p95 and p99 latency per volume and per storage class. Watch queue depth, retry rates, and saturation signals at storage nodes and clients. Averages hide the tenant who suffers.
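The short sketch below shows why averages hide the tenant who suffers. It computes mean, p95, and p99 latency per tenant using the nearest-rank percentile method. The sample data is synthetic and the tenant names are made up.

```python
import math
from collections import defaultdict

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def tail_latency_by_tenant(samples):
    """Group (tenant, latency_ms) samples and report mean, p95, and p99 per tenant."""
    by_tenant = defaultdict(list)
    for tenant, latency_ms in samples:
        by_tenant[tenant].append(latency_ms)
    return {
        tenant: {
            "mean": sum(vals) / len(vals),
            "p95": percentile(vals, 95),
            "p99": percentile(vals, 99),
        }
        for tenant, vals in by_tenant.items()
    }

# Synthetic data: tenant "a" is healthy, tenant "b" has a slow 10% tail.
samples = [("a", 1.0)] * 100 + [("b", 1.0)] * 90 + [("b", 50.0)] * 10
stats = tail_latency_by_tenant(samples)
cluster_mean = sum(latency for _, latency in samples) / len(samples)
print("cluster mean:", cluster_mean)
for tenant, row in sorted(stats.items()):
    print(tenant, row)
```

In this data the cluster-wide mean stays at a few milliseconds, while tenant "b" sees 50 ms at both p95 and p99.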

Test with profiles that match real apps. Databases often use small-block random IO with mixed writes. Logging and streaming often use steady sequential writes. Analytics often stresses large-block reads. Run tests in parallel, because multi-tenant pain shows up when workloads overlap.
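As one possible starting point, the sketch below renders an fio job file with three sections that roughly mirror these profiles. Block sizes, queue depths, the read/write mix, the runtime, and the mount paths under `/mnt/tenants` are all assumptions to adjust for your workloads. Sections in a single fio job file start together unless separated by `stonewall`, which gives the overlapping load described above.

```python
# Illustrative per-profile fio options; tune these to match your real applications.
PROFILES = {
    "database":  {"rw": "randrw", "bs": "8k", "rwmixread": 70, "iodepth": 32},
    "logging":   {"rw": "write", "bs": "128k", "iodepth": 4},
    "analytics": {"rw": "read", "bs": "1m", "iodepth": 16},
}

# Options shared by every section of the job file.
GLOBAL = {"ioengine": "libaio", "direct": 1, "time_based": 1, "runtime": 120, "size": "1g"}

def render_fio_job(profiles, mount_root="/mnt/tenants"):
    """Render an fio job file with one section per workload profile."""
    lines = ["[global]"]
    lines += [f"{key}={value}" for key, value in GLOBAL.items()]
    for name, opts in profiles.items():
        lines.append("")
        lines.append(f"[{name}]")
        # Hypothetical path: each profile writes to a volume mounted for that tenant.
        lines.append(f"filename={mount_root}/{name}/fio.dat")
        lines += [f"{key}={value}" for key, value in opts.items()]
    return "\n".join(lines) + "\n"

job_file = render_fio_job(PROFILES)
print(job_file)
```

Save the output to a file and pass it to `fio` on a client that has the tenant volumes mounted.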

Practical Steps to Reduce Noisy-Neighbor Impact

Most multi-tenant issues come from a small set of causes. Fixing them takes clear rules and steady enforcement. Use this short checklist as a baseline:

- Define storage classes per tenant tier, and set hard limits for IOPS and throughput.

- Keep critical stateful pods stable with placement rules when latency matters.

- Put batch and dev workloads into capped tiers to prevent burst storms.

- Tune the network path for NVMe/TCP, and keep settings consistent across nodes.

- Validate behavior at peak load, not only during quiet test windows.
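A hard IOPS limit, as in the first checklist item, is commonly enforced with a token bucket. The sketch below is a minimal, single-threaded illustration of that idea and not how any particular storage product implements QoS. The rate and burst values are arbitrary, and the clock is injectable so the behavior can be checked without sleeping.

```python
import time

class TokenBucket:
    """Minimal token bucket: refills at rate_iops tokens per second, up to burst."""

    def __init__(self, rate_iops, burst, clock=time.monotonic):
        self.rate = rate_iops
        self.capacity = burst
        self.tokens = float(burst)  # start full so a short burst is allowed
        self.clock = clock
        self.last = clock()

    def try_acquire(self, ios=1):
        """Return True and consume tokens if `ios` operations fit under the limit."""
        now = self.clock()
        # Refill for the elapsed time, never exceeding the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= ios:
            self.tokens -= ios
            return True
        return False

# Demo with a manual clock: 15 requests arrive at once, then again one second later.
now = [0.0]
bucket = TokenBucket(rate_iops=100, burst=10, clock=lambda: now[0])
burst_ok = sum(bucket.try_acquire() for _ in range(15))
now[0] += 1.0
after_refill = sum(bucket.try_acquire() for _ in range(15))
print(burst_ok, after_refill)
```

Requests that return `False` would be queued or delayed in a real datapath. The burst size decides how much short-term spikiness a tier tolerates before the cap applies.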

Storage Options Compared for Shared Clusters

The storage model you pick shapes tenant experience. The table below compares common approaches for shared clusters, with a focus on isolation, risk, and day-to-day operations.

| Storage approach | Tenant isolation strength | Typical performance risk | Ops impact |
|---|---|---|---|
| Local disks per node | Medium, depends on scheduling | Hot nodes create uneven latency | Simple at small scale, hard at scale |
| Traditional SAN | Medium, depends on LUN design | Higher latency under mixed load | Central control, higher cost curve |
| Software-defined Block Storage with NVMe/TCP | High with strong QoS | Tail latency if QoS is weak | Fits Kubernetes, scales by adding nodes |
| NVMe/RDMA fabrics | High, strong tail latency potential | Needs strict network controls | Strong performance, higher fabric effort |

Stabilizing Multi-Tenant Kubernetes Storage with Simplyblock™ Performance QoS

Simplyblock™ targets stable shared-cluster behavior by combining an SPDK-based user-space datapath with tenant-aware controls. This approach reduces CPU waste in the IO path and helps keep latency steady when many tenants push IO at once.

Simplyblock™ supports NVMe/TCP and NVMe/RoCEv2, so teams can use standard Ethernet for broad scale, then reserve RDMA tiers for the tightest latency goals. Multi-tenancy and QoS let platform teams set clear limits per tenant, apply a fair-share policy, and cut noisy-neighbor pressure without spinning up separate clusters for every group.
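To illustrate what a fair-share policy can mean in practice, the sketch below implements max-min fair sharing of a fixed IOPS budget. This is a generic textbook technique shown for intuition. It is not a description of simplyblock's scheduler, and the tenant names and numbers are made up.

```python
def max_min_fair_share(capacity, demands):
    """Split capacity so small demands are met in full and the rest share equally."""
    allocation = {}
    remaining = float(capacity)
    pending = dict(demands)
    while pending:
        share = remaining / len(pending)
        # Tenants asking for no more than an equal share get exactly what they ask for.
        satisfied = {tenant: d for tenant, d in pending.items() if d <= share}
        if not satisfied:
            # Everyone left wants more than an equal share: split what remains evenly.
            for tenant in pending:
                allocation[tenant] = share
            return allocation
        for tenant, demand in satisfied.items():
            allocation[tenant] = float(demand)
            remaining -= demand
            del pending[tenant]
    return allocation

# A 100k IOPS budget shared by three hypothetical tenants.
alloc = max_min_fair_share(100_000, {"db": 20_000, "batch": 90_000, "web": 30_000})
print(alloc)
```

Here the two modest tenants get their full demand, and the heavy batch tenant receives only what is left over instead of crowding them out.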

Future Directions for Multi-Tenant Kubernetes Storage

Multi-tenant storage keeps moving toward tighter policy enforcement close to the datapath. User-space acceleration and zero-copy IO will matter more as NVMe devices get faster. DPUs and IPUs will also play a bigger role as teams push storage services off the host CPU.

On the Kubernetes side, more orgs treat storage classes like products. Each product has clear SLOs, chargeback signals, and runbooks tied to real limits. When the storage layer aligns QoS with those products, platform teams add tenants without constant performance drills.

Related Terms

These pages help teams set tenant limits, enforce policy, and keep Kubernetes Storage predictable.

- QoS Policy in CSI

- Storage Resource Quotas in Kubernetes

- Kubernetes StorageClass Parameters

- Storage Metrics in Kubernetes

Questions and Answers

Secure multi-tenant storage in Kubernetes requires volume-level encryption, namespace-based RBAC, and CSI drivers that support per-tenant isolation. Platforms like Simplyblock offer per-volume encryption and logical separation to prevent data leakage across workloads or teams sharing the same infrastructure.

Data isolation is enforced using tenant-specific encryption keys, separate StorageClasses, and access controls aligned with Kubernetes RBAC. Combined with persistent volume provisioning, this ensures secure, compliant data handling in shared clusters.

Shared storage environments risk cross-tenant data access, noisy neighbor effects, and limited fault isolation. Using CSI-compatible solutions that support encryption, replication, and fine-grained access control helps mitigate these issues while maintaining performance guarantees.

NVMe over TCP enables high-performance, multi-tenant storage by allowing dynamic provisioning over standard Ethernet. When combined with per-tenant encryption and IOPS control, it provides both speed and security at scale.

Simplyblock delivers fully isolated block storage with dynamic provisioning, snapshot support, and per-volume encryption. Its CSI integration allows you to assign dedicated encrypted volumes to each tenant, ensuring compliance and high performance across environments.