NVMe (Non-Volatile Memory Express)

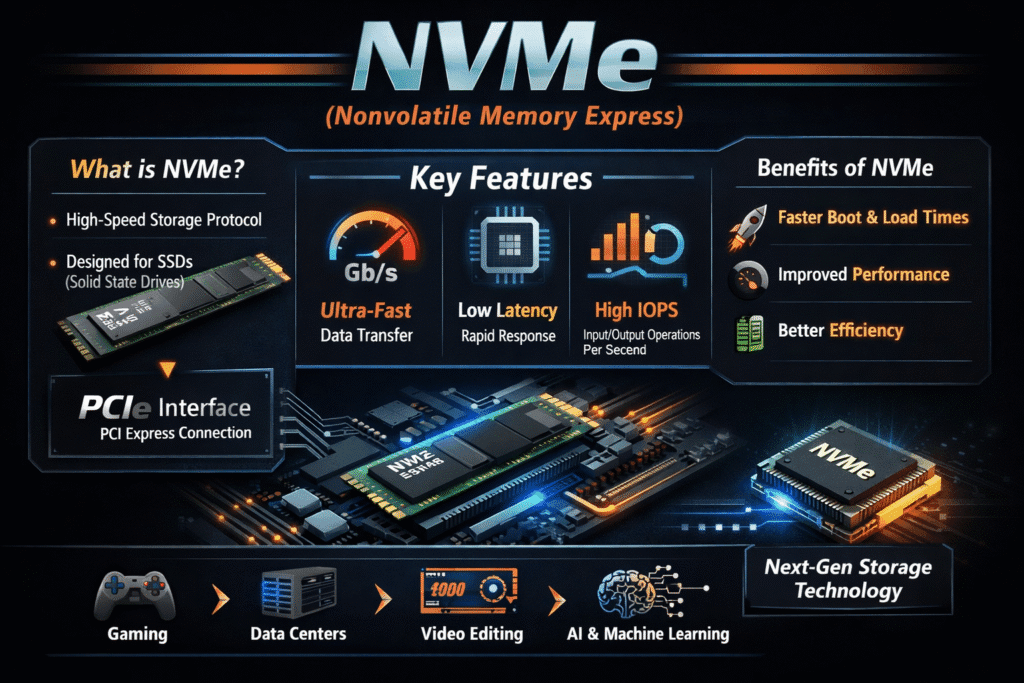

NVMe (Non-Volatile Memory Express) is a storage protocol designed specifically for flash memory. Unlike older interfaces that were built for spinning disks, NVMe takes full advantage of modern SSD hardware by reducing latency and increasing parallelism.

By communicating directly over the PCIe bus, NVMe removes many of the bottlenecks found in legacy storage stacks. This makes it a foundational technology for workloads that demand fast, predictable I/O.

What Makes NVMe Different from Legacy Storage Protocols

Traditional storage protocols such as SATA and SAS were designed around the limitations of hard drives. SATA with AHCI, for example, exposes a single command queue that holds up to 32 commands, a model that doesn’t scale well with flash memory.

NVMe was built from the ground up for solid-state storage. The specification allows up to roughly 64K I/O queues, each holding up to 64K commands, so applications can issue I/O requests in parallel instead of waiting on serialized operations. This design significantly reduces latency and improves throughput under load.

How NVMe Communicates with the CPU and Memory

NVMe connects storage devices directly to the CPU through PCI Express lanes. This direct path allows data to move between applications and storage with minimal software overhead.
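On a Linux host, one way to see this attachment is to read the controller entries the kernel exposes under `/sys/class/nvme`. The sketch below is a minimal example that assumes a Linux system with the standard NVMe driver; the function name `list_nvme_controllers` is hypothetical, and the attribute files it reads (`model`, `transport`, `address`) may be absent on some kernels, in which case the value is reported as `None`.

```python
from pathlib import Path


def list_nvme_controllers(sysfs_root="/sys/class/nvme"):
    """Return one dict per NVMe controller found under sysfs_root.

    Assumes the Linux sysfs layout; returns an empty list when the
    directory does not exist (no NVMe driver, or not a Linux host).
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return []

    controllers = []
    for ctrl in sorted(root.iterdir()):
        info = {"name": ctrl.name}
        for attr in ("model", "transport", "address"):
            try:
                info[attr] = (ctrl / attr).read_text().strip()
            except OSError:
                info[attr] = None  # attribute missing or unreadable
        controllers.append(info)
    return controllers


if __name__ == "__main__":
    for controller in list_nvme_controllers():
        print(controller)
```

For a locally attached drive, the `transport` value is typically `pcie` and `address` holds the PCI address of the device.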

Because NVMe lets the host create a dedicated submission and completion queue pair for each CPU core, cores can issue and complete I/O without contending for a shared queue, and performance scales efficiently as workloads grow. This is especially important in multi-core systems where parallel processing is critical for performance.
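The per-core queue model can be pictured with a small toy simulation. This is a simplified sketch, not driver code: the `QueuePair` class, the `build_queue_pairs` helper, and the command strings are all hypothetical, and real NVMe queues are ring buffers in memory with doorbell registers rather than Python deques.

```python
from collections import deque


class QueuePair:
    """Toy model of one submission/completion queue pair."""

    def __init__(self, depth):
        self.depth = depth
        self.submission = deque()
        self.completion = deque()

    def submit(self, command):
        """Queue a command; return False if the submission queue is full."""
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(command)
        return True

    def process(self):
        """Stand-in for the device: move every submission to completion."""
        while self.submission:
            self.completion.append((self.submission.popleft(), "ok"))

    def reap(self):
        """Collect and clear all finished commands."""
        done = list(self.completion)
        self.completion.clear()
        return done


def build_queue_pairs(num_cores, depth=4):
    """Give every core its own queue pair so cores never share a queue."""
    return {core: QueuePair(depth) for core in range(num_cores)}


pairs = build_queue_pairs(num_cores=4)
for core, qp in pairs.items():
    qp.submit(f"read lba={core * 8}")  # each core touches only its own queue
for qp in pairs.values():
    qp.process()
results = {core: qp.reap() for core, qp in pairs.items()}
```

Because each core owns its queue pair, no lock is needed around `submit` in this model, which is the property that lets the real protocol scale with core count.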

Core Capabilities Enabled by NVMe

NVMe introduces several capabilities that redefine storage behavior:

- Low-Latency Access: Direct PCIe communication reduces I/O delays.

- Massive Parallelism: Thousands of queues allow high concurrency without contention.

- Efficient CPU Utilization: Fewer interrupts and context switches reduce overhead.

- High Throughput: Optimized command processing enables faster data transfer rates.

These capabilities make NVMe suitable for demanding applications.
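Latency and parallelism combine into throughput in a way that Little's Law makes concrete: average outstanding I/Os equal throughput multiplied by average latency. The sketch below is illustrative arithmetic only; the function names and the sample figures are assumptions, not measurements of any device.

```python
def iops_from_concurrency(outstanding_ios, latency_us):
    """Little's Law: throughput = concurrency / latency."""
    if latency_us <= 0:
        raise ValueError("latency_us must be positive")
    return outstanding_ios * 1_000_000 / latency_us


def concurrency_needed(target_iops, latency_us):
    """Outstanding I/Os required to sustain target_iops at a given latency."""
    if latency_us <= 0:
        raise ValueError("latency_us must be positive")
    return target_iops * latency_us / 1_000_000


# Hypothetical figures: 32 outstanding I/Os at 100 microseconds each.
example_iops = iops_from_concurrency(outstanding_ios=32, latency_us=100)

# Hypothetical target: one million IOPS at the same latency.
example_depth = concurrency_needed(target_iops=1_000_000, latency_us=100)
```

With these sample inputs, a queue limited to 32 outstanding commands tops out at 320,000 IOPS, while reaching one million IOPS needs about 100 commands in flight, which is why deep, parallel queues matter once device latency is low.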

Where NVMe Is Commonly Used

NVMe is widely deployed in environments that require consistent, high-speed storage. Common use cases include databases, virtualization platforms, analytics systems, AI workloads, and containerized applications running in Kubernetes.

It is also a key building block for modern storage architectures such as disaggregated storage and software-defined storage platforms.

NVMe Compared to SATA and SAS Storage

SATA and SAS were designed for hard drives and early-generation SSDs. Their command models and queue handling limit how much parallel work storage devices can process at once. As flash storage became faster, these limitations became more noticeable.

NVMe was created to remove these constraints. By using PCIe and a modern command architecture, NVMe allows storage devices to process many requests in parallel with far less overhead.

| Feature | SATA / SAS | NVMe |
| --- | --- | --- |
| Latency | Higher | Much lower |
| Queue Depth | Limited | Very high |
| Parallel Processing | Minimal | Extensive |
| CPU Efficiency | Lower | Higher |
| Scalability | Limited | Scales with CPU cores |

This difference matters in environments where storage must keep up with multi-core CPUs, high-concurrency workloads, and fast application response times.
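To put the queue depth comparison in numbers, the short calculation below contrasts the commonly cited protocol ceilings: one queue of 32 commands for SATA with AHCI, and up to 65,535 I/O queues of up to 65,536 commands each for NVMe. These are specification limits, not what any particular drive exposes; real devices usually advertise far fewer queues. The constant and function names are hypothetical.

```python
# Commonly cited protocol ceilings (specification limits, not device figures).
AHCI_QUEUES = 1
AHCI_COMMANDS_PER_QUEUE = 32
NVME_MAX_IO_QUEUES = 65_535
NVME_MAX_COMMANDS_PER_QUEUE = 65_536


def max_outstanding(queues, commands_per_queue):
    """Upper bound on commands that can be queued at once."""
    return queues * commands_per_queue


ahci_limit = max_outstanding(AHCI_QUEUES, AHCI_COMMANDS_PER_QUEUE)
nvme_limit = max_outstanding(NVME_MAX_IO_QUEUES, NVME_MAX_COMMANDS_PER_QUEUE)

print(f"AHCI/SATA ceiling: {ahci_limit:,} commands")
print(f"NVMe ceiling:      {nvme_limit:,} commands")
print(f"Ratio:             {nvme_limit // ahci_limit:,}x")
```

The theoretical NVMe ceiling is rarely approached in practice; the practical point is that the protocol stops being the limiting factor, so queue counts can follow the number of CPU cores.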

NVMe in Distributed and Cloud-Native Environments

In distributed systems, storage performance often becomes a bottleneck as clusters grow. NVMe helps address this by delivering consistent I/O behavior even under heavy concurrency.

In Kubernetes and cloud-native platforms, NVMe-backed storage can shorten pod startup times and improve database responsiveness, because volumes serve I/O with lower and more consistent latency. When combined with network-based transports such as NVMe over TCP, NVMe can extend these benefits beyond a single server.

How Simplyblock Supports NVMe-Based Storage Workloads

Simplyblock is designed to work efficiently with NVMe-backed environments by aligning storage software with modern data paths.

- Optimized NVMe Data Handling: Storage operations maintain low latency and high throughput.

- Clean Integration with Modern Protocols: Supports NVMe-based architectures without added complexity.

- Stable Performance at Scale: Maintains predictable behavior as workloads grow.

- Efficient Resource Usage: Avoids unnecessary CPU overhead in NVMe-heavy deployments.

This makes it easier to run NVMe-backed workloads in production.

Why NVMe Is a Long-Term Storage Standard

As applications demand faster access to data, NVMe has become the baseline for modern storage performance. Its protocol design aligns closely with current hardware and scales well with future advancements in flash and memory technology.

NVMe allows organizations to meet performance goals today while preparing infrastructure for continued growth.

Related Terms

Teams often review these glossary pages alongside NVMe when they quantify device-level performance, model tail-latency risk, and tune flash behavior under mixed read/write pressure.

IOPS (Input/Output Operations Per Second)

Read Amplification

Write Amplification

NVMe over RoCE

NVMe Partitioning

NVMe SSD Endurance

Questions and Answers

NVMe uses the high-speed PCIe interface, enabling much higher throughput and massively parallel I/O queues. This reduces latency and increases IOPS, offering significantly faster performance than SATA-based SSDs.
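The interface gap can be estimated from link rates and line encoding alone. The sketch below assumes SATA III at 6 Gb/s with 8b/10b encoding and PCIe Gen3, Gen4, and Gen5 at 8, 16, and 32 GT/s per lane with 128b/130b encoding, on a four-lane link as is common for NVMe SSDs. It ignores protocol and packet overhead, so real drives deliver somewhat less; the function name is hypothetical.

```python
def usable_bytes_per_second(giga_transfers_per_s, payload_bits, encoded_bits, lanes=1):
    """Link bandwidth after line encoding, before protocol overhead."""
    raw_bits_per_s = giga_transfers_per_s * 1e9 * lanes
    return raw_bits_per_s * payload_bits / encoded_bits / 8


links = {
    "SATA III": usable_bytes_per_second(6, 8, 10),
    "PCIe Gen3 x4": usable_bytes_per_second(8, 128, 130, lanes=4),
    "PCIe Gen4 x4": usable_bytes_per_second(16, 128, 130, lanes=4),
    "PCIe Gen5 x4": usable_bytes_per_second(32, 128, 130, lanes=4),
}

for name, rate in links.items():
    print(f"{name:>13}: {rate / 1e9:.2f} GB/s")
```

Under these assumptions SATA III tops out at 0.60 GB/s, while a Gen3 x4 link offers roughly 3.94 GB/s and each later PCIe generation doubles that figure.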

NVMe delivers low-latency, high-bandwidth access needed for workloads like AI/ML, real-time analytics, and high-performance databases. Its ability to process parallel requests makes it ideal for environments with heavy read/write traffic.

NVMe drives can be added to existing servers as long as the server provides PCIe or NVMe-compatible slots. Many modern servers include U.2, M.2, or PCIe bays, allowing NVMe adoption without major hardware changes.

Key factors include endurance (TBW), interface type (PCIe Gen3/4/5), capacity, power consumption, and required IOPS. Workloads with constant writes, like databases, often need higher-endurance NVMe models.
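Endurance ratings are easier to compare once TBW is converted to drive writes per day (DWPD), using the usual relationship DWPD = TBW / (capacity in TB × 365 × warranty years). The sketch below applies that formula; the function names and the sample drive figures are hypothetical and do not describe any specific product.

```python
def dwpd_from_tbw(tbw_terabytes, capacity_terabytes, warranty_years):
    """Convert a TBW rating into drive writes per day over the warranty."""
    if capacity_terabytes <= 0 or warranty_years <= 0:
        raise ValueError("capacity and warranty must be positive")
    return tbw_terabytes / (capacity_terabytes * 365 * warranty_years)


def years_until_tbw(tbw_terabytes, daily_writes_terabytes):
    """Estimate how long a workload takes to consume the TBW rating."""
    if daily_writes_terabytes <= 0:
        raise ValueError("daily writes must be positive")
    return tbw_terabytes / (daily_writes_terabytes * 365)


# Hypothetical drive: 1.92 TB capacity, 3,500 TBW, 5-year warranty.
example_dwpd = dwpd_from_tbw(3500, 1.92, 5)

# Hypothetical workload: a database writing 4 TB per day to that drive.
example_lifetime = years_until_tbw(3500, 4)

print(f"DWPD: {example_dwpd:.2f}")
print(f"Years to reach TBW at 4 TB/day: {example_lifetime:.1f}")
```

In this example the drive is rated for about one full write per day, yet a workload writing 4 TB daily would exhaust the rating in roughly 2.4 years, which is the situation where a higher-endurance model is worth the cost.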

NVMe enables higher density and performance per node, allowing data centers to scale without adding excessive hardware. When combined with NVMe-oF technologies, it supports disaggregated, highly scalable storage architectures.