NVMe-Based Storage vs Cloud Block Storage


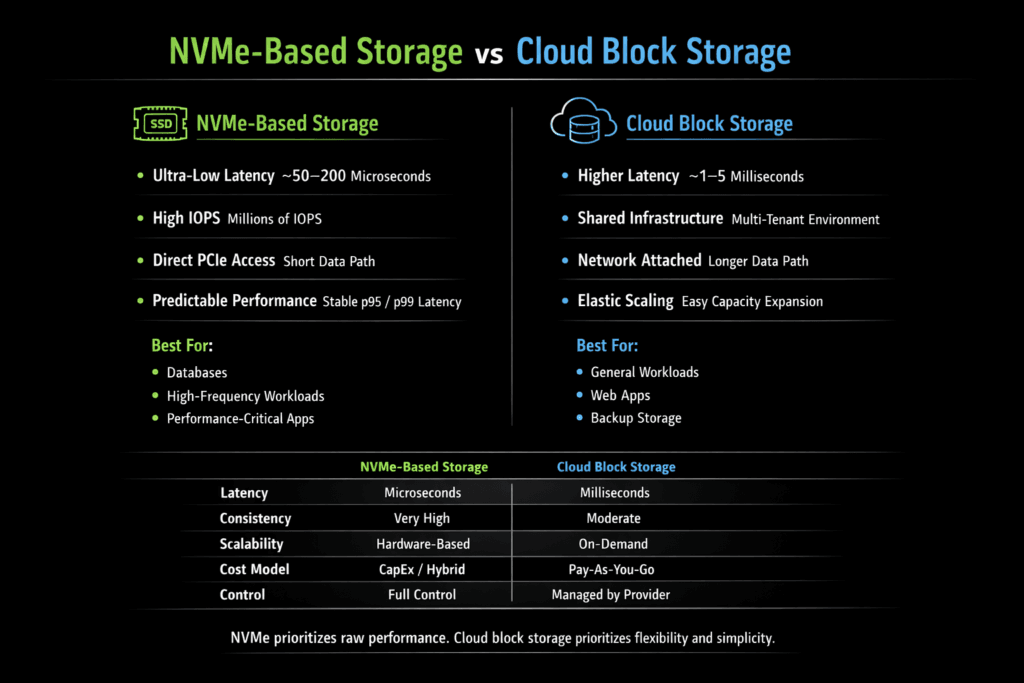

NVMe-based storage uses NVMe SSDs and an NVMe-centric I/O path to deliver low latency and high parallel I/O. Cloud block storage delivers persistent volumes as a managed service and trades raw device control for simpler operations.

This comparison usually comes down to three things: performance consistency, operational control, and cost behavior at scale. NVMe-based storage gives teams tighter control over latency, placement, and queueing. Cloud block storage speeds up provisioning and reduces hands-on work early, but it can introduce throttling, shared-tenancy variance, and tier-driven limits that show up under load.

For Kubernetes Storage, the decision affects rollout reliability, incident volume, and how often teams overprovision to protect SLOs. For leadership teams, the decision affects vendor dependency, upgrade paths, and long-term unit economics for stateful workloads.

Picking the Right Architecture for Real Workloads

A good architecture reduces variance, not just average latency. NVMe-based storage tends to win when a workload needs steady tail latency, high IOPS per node, or predictable behavior during churn. Cloud block storage tends to win when teams prioritize fast onboarding, low operational staffing, or standardized cross-region patterns.

In practice, many platforms use a hybrid mindset. They keep a default pool that supports most workloads with minimal effort. They add a higher-performance pool for databases and latency-sensitive pipelines. That structure reduces exceptions, limits escalations, and keeps the platform easy to operate.
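The two-pool structure can be expressed as a simple routing rule. The sketch below is illustrative only: the pool names (`default`, `performance`), the `Workload` fields, and both thresholds are hypothetical placeholders, and real values should come from your own measurements of each pool.

```python
from dataclasses import dataclass

# Hypothetical thresholds; derive real ones from measured pool behavior.
PERF_POOL_P99_MS = 2.0       # workloads needing a p99 below this go to the performance pool
PERF_POOL_MIN_IOPS = 20_000  # sustained demand at or above this also goes there


@dataclass
class Workload:
    name: str
    p99_target_ms: float  # required 99th-percentile latency
    sustained_iops: int   # steady-state (not burst) IOPS demand


def choose_pool(w: Workload) -> str:
    """Route a workload to the hypothetical 'performance' or 'default' pool."""
    if w.p99_target_ms < PERF_POOL_P99_MS or w.sustained_iops >= PERF_POOL_MIN_IOPS:
        return "performance"
    return "default"


if __name__ == "__main__":
    for w in (
        Workload("postgres-orders", p99_target_ms=1.0, sustained_iops=15_000),
        Workload("log-shipper", p99_target_ms=20.0, sustained_iops=2_000),
        Workload("kafka-ingest", p99_target_ms=10.0, sustained_iops=40_000),
    ):
        print(w.name, "->", choose_pool(w))
```

Keeping the rule this small is deliberate: most services fall through to the default pool, and only workloads with an explicit latency or throughput requirement become exceptions.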


NVMe-Based Storage vs Cloud Block Storage in Kubernetes Storage

Kubernetes makes storage behavior visible to every team. Provisioning time, attach and mount timing, and node drain behavior shape the experience for stateful services. NVMe-based storage can deliver fast I/O when the CSI layer integrates cleanly and the system enforces fairness across tenants. Cloud block storage can reduce setup time, but performance often varies by volume type, quota, and service-side policies.
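One common way to reason about fairness across tenants is max-min fair sharing: tenants asking for less than an equal share get everything they ask for, and the leftover capacity is split evenly among the tenants that still want more. The sketch below applies that generic technique to a pool's IOPS budget. It is not a description of how any particular CSI driver or storage product enforces QoS, and the tenant names and numbers are hypothetical.

```python
def max_min_fair_share(capacity: float, demands: dict[str, float]) -> dict[str, float]:
    """Split `capacity` (e.g. pool IOPS) across tenants using max-min fairness."""
    allocation = {t: 0.0 for t in demands}
    unsatisfied = {t for t, d in demands.items() if d > 0}
    remaining = float(capacity)
    while unsatisfied and remaining > 1e-9:
        share = remaining / len(unsatisfied)
        # Tenants whose outstanding demand fits in the equal share are fully served.
        satisfied_now = {t for t in unsatisfied if demands[t] - allocation[t] <= share}
        if not satisfied_now:
            # Every remaining tenant wants more than the equal share: cap them all.
            for t in unsatisfied:
                allocation[t] += share
            break
        for t in satisfied_now:
            remaining -= demands[t] - allocation[t]
            allocation[t] = float(demands[t])
        unsatisfied -= satisfied_now
    return allocation


if __name__ == "__main__":
    # Hypothetical pool of 100,000 IOPS shared by three tenants.
    print(max_min_fair_share(100_000, {"db": 70_000, "analytics": 60_000, "web": 10_000}))
    # -> {'db': 45000.0, 'analytics': 45000.0, 'web': 10000.0}
```

The small tenant keeps its full 10,000 IOPS even though the two heavy tenants together ask for more than the pool can deliver, which is the behavior that protects quiet workloads from noisy neighbors.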

Placement choices matter. Hyper-converged NVMe pools reduce network hops for strict latency goals. Disaggregated pools improve utilization by separating compute from capacity. Mixed layouts can work well when teams want both local speed and independent scaling.

NVMe-Based Storage vs Cloud Block Storage and NVMe/TCP

NVMe/TCP extends NVMe over standard Ethernet using TCP. It supports disaggregated NVMe performance while keeping networks familiar to operate. That matters when teams want NVMe semantics across nodes without a specialized fabric.

Cloud block storage already sits behind a network service boundary, so the attach workflow can feel similar. The difference shows up in control. NVMe/TCP systems can expose stronger knobs for QoS, placement, and latency stability. Cloud block storage often expresses performance through tiers, limits, and burst behavior.
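Burst behavior is often modeled as a credit bucket: credits refill at a baseline rate, each I/O spends a credit, and throughput drops to the baseline once the bucket is empty. The sketch below is a simplified token-bucket model for illustration; it is not any cloud provider's exact credit algorithm, and all parameter values are hypothetical.

```python
def simulate_burst(baseline_iops: float, burst_iops: float, credit_capacity: float,
                   demand_iops: float, seconds: int) -> list[float]:
    """Per-second delivered IOPS under a simplified burst-credit token bucket.

    Credits refill at `baseline_iops` per second, capped at `credit_capacity`.
    Each I/O spends one credit; delivery is capped at `burst_iops`.
    """
    credits = credit_capacity  # assume the volume starts with a full bucket
    delivered = []
    for _ in range(seconds):
        credits = min(credit_capacity, credits + baseline_iops)  # refill for this second
        served = min(demand_iops, burst_iops, credits)
        credits -= served
        delivered.append(served)
    return delivered


if __name__ == "__main__":
    # Hypothetical volume: 3,000 IOPS baseline, 16,000 IOPS burst, 50,000 credits.
    print(simulate_burst(3_000, 16_000, 50_000, demand_iops=16_000, seconds=6))
    # -> [16000, 16000, 16000, 11000, 3000, 3000]
```

A benchmark that only runs for the first few seconds would report 16,000 IOPS, while the sustained rate in this model is 3,000 IOPS.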

Benchmarking What Matters in Production

A fair comparison needs more than peak IOPS. Teams should measure percentiles, not only averages. They should test during real events such as node drains, rolling upgrades, rebuilds, and snapshot activity.
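To see why averages are not enough, the sketch below computes nearest-rank percentiles from a list of per-I/O latencies. The sample data is hypothetical (980 fast I/Os plus 20 slow ones, as might happen during a node drain); in practice the samples would come from your benchmark tool or application-level latency histograms.

```python
import math
import statistics


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of values <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(latencies_ms: list[float]) -> dict[str, float]:
    """Mean plus the tail percentiles that usually matter for SLOs."""
    return {
        "mean": statistics.fmean(latencies_ms),
        "p50": percentile(latencies_ms, 50),
        "p99": percentile(latencies_ms, 99),
        "p99.9": percentile(latencies_ms, 99.9),
    }


if __name__ == "__main__":
    # Hypothetical run: 980 I/Os at 0.2 ms and 20 I/Os at 25 ms.
    samples = [0.2] * 980 + [25.0] * 20
    print(summarize(samples))
```

Here the mean stays below 1 ms while p99 is 25 ms, so a report that shows only the average would hide exactly the tail latency that breaks SLOs.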

For cloud block storage, test each tier you plan to run and record the point where burst limits change behavior. For NVMe-based storage, test both local and network paths, especially with NVMe/TCP, and measure CPU per IOPS. Wasted CPU reduces pod density and raises spend in both cloud and bare-metal environments.
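CPU per IOPS reduces to simple arithmetic once you have the number of busy cores (from host CPU accounting during the run) and the delivered IOPS. The sketch below converts those into core-microseconds per I/O and then into the cores a target IOPS level would consume. The measurements are hypothetical.

```python
def cpu_us_per_io(busy_cores: float, iops: float) -> float:
    """Core-microseconds of CPU consumed per I/O: busy_cores * 1e6 / iops."""
    if iops <= 0:
        raise ValueError("iops must be positive")
    return busy_cores * 1_000_000 / iops


def cores_needed(target_iops: float, us_per_io: float) -> float:
    """Cores the storage path consumes to sustain target_iops at a given cost per I/O."""
    return target_iops * us_per_io / 1_000_000


if __name__ == "__main__":
    # Hypothetical measurements: two I/O paths delivering the same IOPS.
    path_a = cpu_us_per_io(busy_cores=4, iops=200_000)   # 20 core-µs per I/O
    path_b = cpu_us_per_io(busy_cores=10, iops=200_000)  # 50 core-µs per I/O
    for name, cost in (("A", path_a), ("B", path_b)):
        print(f"path {name}: {cost:.1f} core-us/IO, "
              f"{cores_needed(500_000, cost):.1f} cores for 500k IOPS")
```

At 500,000 IOPS, the less efficient path in this example consumes 15 more cores, which are cores that cannot run application pods.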

Practical Ways to Improve Outcomes

Most improvements come from better guardrails and less variance. Teams get stronger results when they align storage tiers with workload needs and enforce fairness across tenants.

- Map a small set of storage tiers to StorageClasses so app teams can choose the right behavior without manual reviews.

- Enforce QoS to prevent a single workload from pushing other tenants into tail-latency spikes.

- Size for steady-state performance, because burst behavior hides risk and drives surprises later.

- Limit the impact of rebuilds and snapshots on foreground I/O to protect SLOs during churn.
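Mapping tiers to StorageClasses can be kept in one small, reviewable definition. The sketch below generates `storage.k8s.io/v1` StorageClass manifests as JSON, which Kubernetes accepts alongside YAML. The provisioner name `csi.example.com` and the parameter keys (`qosIopsLimit`, `replicas`) are hypothetical placeholders; replace them with the names your CSI driver actually documents.

```python
import json

# Hypothetical CSI driver name and parameter keys; use the ones your driver documents.
PROVISIONER = "csi.example.com"

TIERS = {
    "standard": {"qosIopsLimit": "5000", "replicas": "2"},
    "performance": {"qosIopsLimit": "50000", "replicas": "3"},
}


def storage_class(name: str, params: dict[str, str]) -> dict:
    """Build a storage.k8s.io/v1 StorageClass manifest as a plain dict."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        "parameters": params,  # StorageClass parameter values must be strings
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        # Delay binding until a pod is scheduled so volume placement can follow the pod.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


if __name__ == "__main__":
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [storage_class(n, p) for n, p in TIERS.items()],
    }
    print(json.dumps(manifest, indent=2))
```

The output can be saved to a file and applied with `kubectl apply -f`. App teams then pick a tier by setting `storageClassName` in their PersistentVolumeClaim.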

NVMe-Based Storage vs Cloud Block Storage Comparison Table

The table below highlights trade-offs that show up in day-2 operations, performance stability, and scaling decisions.

| Decision Area | NVMe-Based Storage | Cloud Block Storage |
|---|---|---|
| Latency stability | Low and steady with the right software layer | Varies by tier, can show throttling patterns |
| Performance controls | Fine-grained tuning and strong QoS potential | Tier-driven limits with fewer low-level knobs |
| Kubernetes fit | Strong with CSI-native design and isolation controls | Simple provisioning and attach patterns |
| Scaling model | Scale out with pooled devices and policy | Scale through volume types, quotas, and limits |
| Cost behavior | Predictable when utilization stays high | Easy to start, can cost more for high I/O |
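The cost row comes down to how the bill is spread over the I/O you actually use. The sketch below compares a fixed-cost NVMe pool, whose cost per used IOPS depends on utilization, with a per-provisioned-IOPS model, whose cost depends on how much headroom you provision to protect SLOs. Every price and capacity figure is a hypothetical placeholder, not real pricing from any provider.

```python
def pool_cost_per_used_iops(monthly_cost: float, pool_iops: float, utilization: float) -> float:
    """Fixed-cost pool: the same monthly bill spread over the IOPS actually used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return monthly_cost / (pool_iops * utilization)


def provisioned_cost_per_used_iops(price_per_provisioned_iops: float, headroom: float) -> float:
    """Per-IOPS pricing: you pay for provisioned IOPS, including headroom over actual use."""
    return price_per_provisioned_iops * (1 + headroom)


if __name__ == "__main__":
    # All figures are hypothetical placeholders, not real pricing.
    for util in (0.2, 0.5, 0.8):
        c = pool_cost_per_used_iops(monthly_cost=6_000, pool_iops=1_000_000, utilization=util)
        print(f"NVMe pool at {util:.0%} utilization: ${c:.4f} per used IOPS-month")
    c = provisioned_cost_per_used_iops(price_per_provisioned_iops=0.02, headroom=0.5)
    print(f"Provisioned IOPS with 50% headroom: ${c:.4f} per used IOPS-month")
```

With these placeholder inputs, the pool's cost per used IOPS quadruples as utilization falls from 80% to 20%, while the per-IOPS model stays flat but always pays for its headroom.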

Operational Control with Simplyblock™

Simplyblock™ focuses on NVMe-first Kubernetes Storage with Software-defined Block Storage, multi-tenancy, and QoS controls. This approach targets predictable behavior during churn events such as drains, reschedules, and background maintenance. Predictable tail latency often reduces incidents more than higher peak throughput.

NVMe/TCP support helps teams scale NVMe semantics across nodes on standard Ethernet. That supports disaggregated and hybrid layouts while keeping operations consistent across environments. A platform team can standardize storage delivery, enforce isolation, and keep performance tiers clear without building a custom storage stack.

Where This Comparison Is Headed

Cloud block storage will keep improving its top tiers, but pricing and limits will keep changing, too. NVMe-based platforms will keep improving pooling, placement logic, and QoS enforcement. More teams will adopt two-pool strategies: a default pool for most services and a performance pool for strict workloads.

NVMe/TCP will remain a common choice for scale-out designs because it fits standard networks and supports repeatable operations. As Kubernetes Storage grows, the winning approach will focus on predictable day-2 behavior, not only headline benchmarks.


Questions and Answers

NVMe-based storage uses high-speed flash drives with parallel queues for ultra-low latency and high IOPS. Cloud block storage is typically virtualized and network-attached, which can introduce variability. Simplyblock delivers NVMe over TCP storage to combine performance with cloud flexibility.

NVMe-based storage is typically faster. NVMe SSDs offer microsecond-level device latency and significantly higher IOPS than most cloud block storage tiers, and platforms built on a distributed NVMe architecture can provide more consistent performance under heavy workloads.

Choose NVMe-based storage for latency-sensitive applications like databases, analytics, or real-time systems. Simplyblock supports high-performance stateful Kubernetes workloads where predictable I/O performance is critical.

Cloud block storage scales easily within public cloud limits. NVMe-based platforms with a scale-out storage architecture can scale capacity and performance together as nodes are added, without tying storage to a single cloud provider.

Simplyblock provides software-defined, NVMe-backed block storage over standard Ethernet. Its Kubernetes-native storage platform delivers higher performance, predictable latency, and enterprise features such as replication and encryption, and it often outperforms standard cloud block offerings.