NVMe Latency


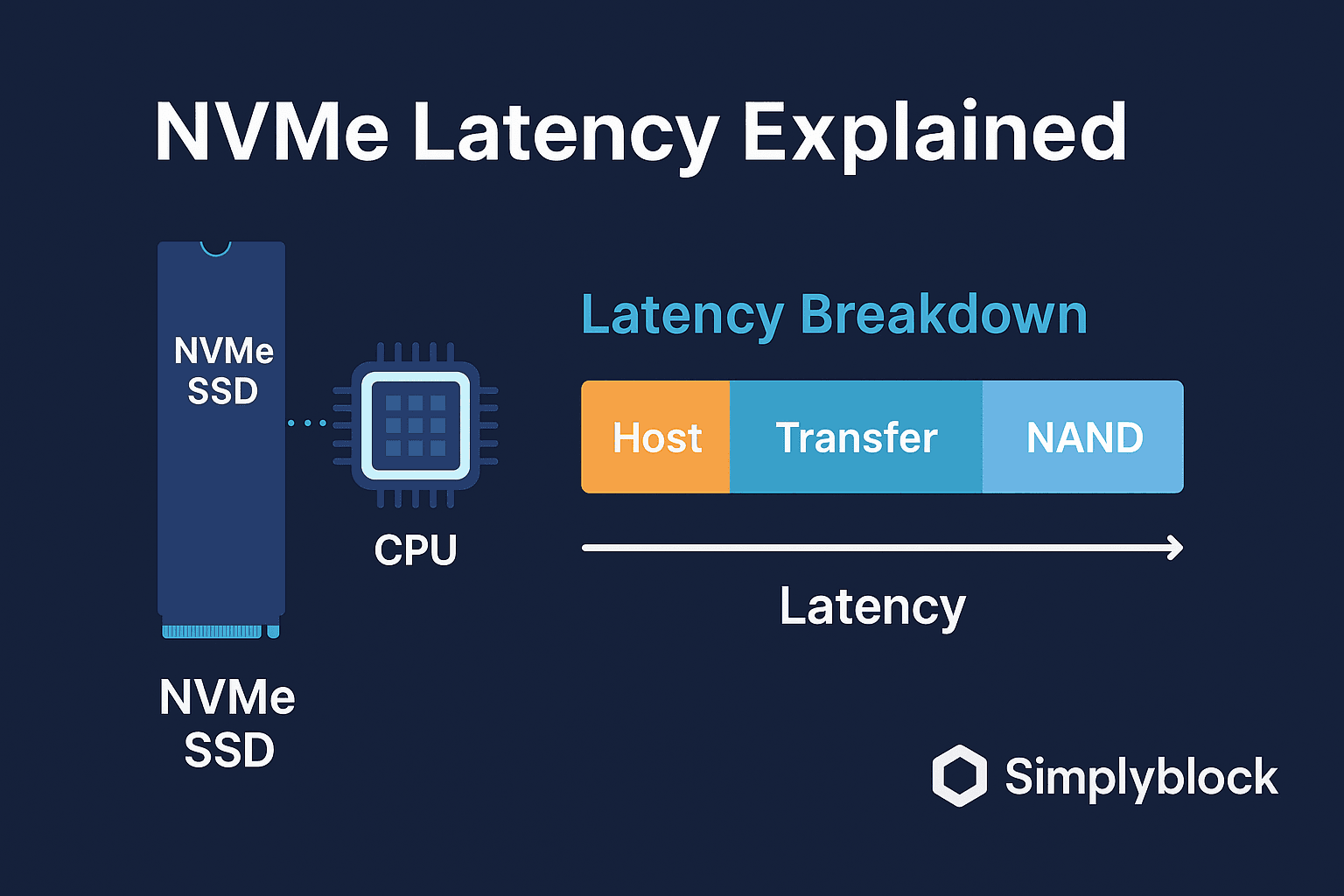

NVMe (Non-Volatile Memory Express) latency is the time between the host issuing an I/O request to an NVMe-based storage device and receiving its completion. It is measured in microseconds (µs) and is significantly lower than the latency of traditional storage interfaces like SATA and SAS. Low latency is one of the defining characteristics of NVMe, making it well suited to high-performance applications such as databases on Kubernetes, artificial intelligence, and real-time analytics.

How NVMe Achieves Low Latency

NVMe storage achieves ultra-low latency by leveraging the following:

- Parallelism and Queues: NVMe supports up to 64K parallel I/O queues, each capable of holding up to 64K commands. Each CPU core can be given its own queue pair, so cores submit and complete I/O in parallel without contending for a single shared queue, which reduces processing delays.

- PCIe Interface: Unlike SATA SSDs, which are limited to 6 Gbps (about 600 MB/s), NVMe uses PCIe, which signals at 16 GT/s per lane on PCIe 4.0 (roughly 2 GB/s per lane), with multiple lanes providing aggregate bandwidth.

- Direct CPU Communication: NVMe devices attach directly to the PCIe bus, so no intermediate host bus adapter or storage controller sits between the CPU and the drive. This reduces processing overhead and accelerates data transfers.
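The bandwidth gap between SATA and PCIe can be checked with a little arithmetic. The following is a minimal sketch, assuming the commonly published per-lane signaling rates (8, 16, and 32 GT/s for PCIe 3.0, 4.0, and 5.0) and line encodings (128b/130b for PCIe 3.0 and later, 8b/10b for SATA III). The function and constant names are illustrative, and packet-level protocol overhead is ignored, so real devices deliver somewhat less.

```python
# Approximate usable link bandwidth from the raw per-lane signaling rate.
# Assumption: only line-encoding overhead is modeled; packet headers and
# flow control are ignored, so these are upper bounds.

PCIE_GT_PER_S = {"3.0": 8.0, "4.0": 16.0, "5.0": 32.0}  # giga-transfers/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding (PCIe 3.0 and later)


def pcie_bandwidth_gb_per_s(generation: str, lanes: int) -> float:
    """Return the approximate one-direction bandwidth in GB/s."""
    raw_gbit = PCIE_GT_PER_S[generation] * lanes   # raw Gbit/s across all lanes
    return raw_gbit * ENCODING_EFFICIENCY / 8      # payload bits -> bytes


# SATA III: 6 Gbit/s on the wire with 8b/10b encoding.
SATA3_GB_PER_S = 6.0 * (8 / 10) / 8

if __name__ == "__main__":
    for gen in PCIE_GT_PER_S:
        print(f"PCIe {gen} x4: {pcie_bandwidth_gb_per_s(gen, 4):.2f} GB/s")
    print(f"SATA III:    {SATA3_GB_PER_S:.2f} GB/s")
```

Under these assumptions a four-lane PCIe 4.0 link comes out at just under 8 GB/s, more than ten times the SATA III figure.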

NVMe Latency vs Other Storage Protocols

NVMe offers significantly lower latency compared to traditional storage protocols, making it ideal for high-performance workloads.

| Feature | NVMe | SATA SSD | HDD |
|---|---|---|---|
| Read Latency | ~20 µs | ~100 µs | 2-5 ms |
| Write Latency | ~30 µs | ~200 µs | 5-10 ms |
| Queue Depth | 64K queues × 64K commands | 32 commands | 1 command |
| Bandwidth | Up to ~8 GB/s (PCIe 4.0 x4), ~16 GB/s (PCIe 4.0 x8) | 600 MB/s | ~150 MB/s |

With the figures above, NVMe read latency is about five times lower than that of SATA SSDs and roughly two orders of magnitude lower than that of HDDs, making it a preferred choice for latency-sensitive workloads.

Factors Affecting Latency

While NVMe is designed for high-speed data access, certain factors can influence its latency:

- Network Transport (NVMe over RDMA vs. NVMe/TCP): Both are NVMe over Fabrics (NVMe-oF) transports. RDMA-based transports (RoCE or InfiniBand) minimize latency by bypassing most of the host network stack, while NVMe/TCP, though cost-effective, has slightly higher latency due to TCP stack overhead.

- Queue Depth and Workload: Higher queue depths let NVMe process more requests in parallel, but once the device or transport saturates, additional requests wait in the queue and both average and tail latency rise.

- Firmware and Controller Efficiency: High-performance NVMe drives have optimized firmware to minimize internal processing delays.

- Host System Configuration: CPU power management, PCIe slot configuration, and DRAM caching can impact NVMe response times.

- Storage Network Architecture: In distributed storage systems, network topology and bandwidth can influence end-to-end latency.
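The interaction between queue depth, throughput, and latency can be illustrated with Little's law (outstanding requests = throughput × latency). The sketch below is a simplified model, not a benchmark: the 20 µs service time and the 1,000,000 IOPS saturation point describe a hypothetical device chosen only for illustration.

```python
def throughput_iops(queue_depth: int, latency_us: float) -> float:
    """Little's law: IOPS sustained with `queue_depth` requests in flight."""
    return queue_depth / (latency_us * 1e-6)


def latency_us(queue_depth: int, iops: float) -> float:
    """Average latency implied by a queue depth and a sustained IOPS rate."""
    return queue_depth / iops * 1e6


if __name__ == "__main__":
    # Hypothetical device: 20 us per read, saturating at 1,000,000 IOPS.
    device_max_iops = 1_000_000
    for qd in (1, 8, 32, 128):
        offered = throughput_iops(qd, 20.0)        # IOPS if latency stayed at 20 us
        achieved = min(offered, device_max_iops)   # the device caps throughput
        print(f"QD={qd:>3}: {achieved:>9,.0f} IOPS, "
              f"avg latency {latency_us(qd, achieved):6.1f} us")
```

In this model, latency stays at the device's service time until throughput hits the cap. Beyond that point every extra outstanding request only adds queueing delay, which is the saturation effect described in the list above.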

Optimizing NVMe for Minimal Latency

To achieve the lowest possible NVMe latency:

- Use NVMe over RDMA (RoCE or InfiniBand) to eliminate unnecessary network overhead.

- Enable Direct Storage Access (DSA) to bypass kernel-level processing.

- Optimize PCIe Slot Allocation to prevent bandwidth bottlenecks.

- Leverage Software-Defined Storage (SDS) for better scalability and latency control.
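Whether a tuning change actually helps is best judged by measuring latency percentiles before and after. The following is a rough sketch that uses only the Python standard library on a Unix-like system. The function names are illustrative, and because the reads go through the page cache, repeated runs largely measure cached reads rather than the device itself. A dedicated benchmarking tool such as fio with direct I/O is the usual choice for real measurements.

```python
import os
import random
import statistics
import time


def measure_read_latency_us(path: str, block_size: int = 4096,
                            samples: int = 1000) -> list[float]:
    """Time random block-aligned reads from a regular file, in microseconds."""
    fd = os.open(path, os.O_RDONLY)
    try:
        blocks = os.fstat(fd).st_size // block_size
        if blocks == 0:
            raise ValueError("file is smaller than one block")
        latencies = []
        for _ in range(samples):
            offset = random.randrange(blocks) * block_size
            start = time.perf_counter_ns()
            os.pread(fd, block_size, offset)   # positional read, Unix only
            latencies.append((time.perf_counter_ns() - start) / 1000)
        return latencies
    finally:
        os.close(fd)


def summarize(latencies: list[float]) -> dict[str, float]:
    """Return median, 99th percentile, and maximum latency."""
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {"p50": cuts[49], "p99": cuts[98], "max": max(latencies)}
```

Calling `summarize(measure_read_latency_us("/path/to/testfile"))` (the path is a placeholder) returns the three statistics. Comparing p99 rather than only the median is useful because queueing and power-management effects tend to show up in the tail first.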

NVMe and Simplyblock

Simplyblock utilizes NVMe technology to deliver sub-millisecond latency storage solutions optimized for modern applications. By integrating NVMe/TCP, Simplyblock ensures high-speed data access without requiring specialized RDMA hardware, making it a cost-effective and scalable solution for enterprises.

For more details on NVMe storage and its impact on modern workloads, check out Simplyblock’s NVMe Storage Overview.

Questions and Answers

Modern NVMe devices can reach latencies as low as 20 microseconds, drastically outperforming traditional spinning disks that average 10–14 milliseconds. In NVMe over TCP setups, latency stays impressively low even across the network, making it ideal for latency-sensitive applications like databases and real-time analytics.

In modern workloads like AI, financial trading, and high-performance databases, latency is often the bottleneck. NVMe minimizes protocol overhead and accelerates I/O paths, delivering higher IOPS and near-instant access compared to protocols like iSCSI or SATA.

Simplyblock uses NVMe over Fabrics to bring near-local performance to networked storage. By eliminating kernel overhead and enabling direct memory access through TCP, latency remains low even in distributed cloud-native setups.

Latency impacts app responsiveness and throughput in Kubernetes storage. NVMe volumes via Simplyblock help minimize delays for databases, CI/CD systems, and event-driven workloads requiring consistent low-latency reads and writes.

Benchmarks show 25–34% lower latency when switching from iSCSI to NVMe over TCP. Read our NVMe vs iSCSI comparison for detailed metrics on performance under various queue depths and block sizes.