NVMe Multipathing

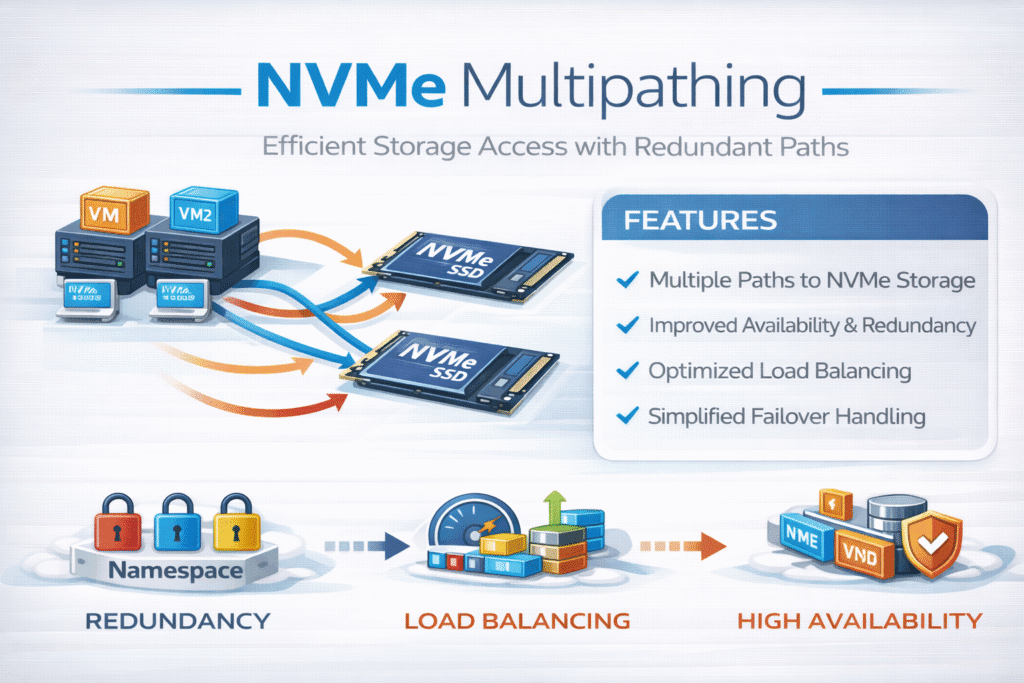

NVMe multipathing lets one host reach the same NVMe namespace through two or more paths, so I/O keeps running when a link, port, or target node drops. Linux supports native NVMe multipath and uses Asymmetric Namespace Access (ANA) to prefer the best path when multiple paths exist.

Platform teams use NVMe multipathing to reduce outage risk and to keep tail latency steady during failures. That matters most when you run Kubernetes Storage for databases and other stateful apps that depend on Software-defined Block Storage.

What NVMe Multipathing Actually Protects

A single path failure should not turn into an application incident. Multipathing gives the host more than one route to the same storage, so the host can fail over without waiting for an app restart or a manual remount.

In NVMe-oF designs, multipathing also helps with planned work. You can drain a storage node, update firmware, or roll a network change while the host keeps a live path for reads and writes.


ANA Path States – How Hosts Choose “Optimized” vs “Non-Optimized”

ANA gives the host a clear signal about path quality. The target reports which paths provide optimized access and which paths provide non-optimized access, so the host can send I/O to the right place first.

Linux then applies a path selection policy on top of the ANA states. The kernel documentation for native NVMe multipath describes the I/O policies the NVMe host driver supports: numa (the default), round-robin, and, on newer kernels, queue-depth.
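The selection logic described above can be sketched in a few lines. This is an illustrative model under stated assumptions, not the kernel's implementation: the `Path` class, the controller names, and the `RoundRobinSelector` helper are hypothetical, and real ANA handling covers more states and transitions than the two usable states modeled here.

```python
from dataclasses import dataclass
from itertools import count

# ANA state names follow the NVMe specification; the numeric ranking is an
# illustrative assumption, not the kernel's internal representation.
USABLE_RANK = {"optimized": 0, "non-optimized": 1}


@dataclass(frozen=True)
class Path:
    name: str          # hypothetical controller name, e.g. "nvme0"
    ana_state: str     # "optimized", "non-optimized", "inaccessible", ...
    live: bool = True  # False while the controller is reconnecting or gone


def usable_paths(paths):
    """Return the live paths that share the best available ANA state."""
    candidates = [p for p in paths if p.live and p.ana_state in USABLE_RANK]
    if not candidates:
        return []
    best = min(USABLE_RANK[p.ana_state] for p in candidates)
    return [p for p in candidates if USABLE_RANK[p.ana_state] == best]


class RoundRobinSelector:
    """Rotate I/O across the best-state paths, one request at a time."""

    def __init__(self):
        self._counter = count()

    def pick(self, paths):
        best = usable_paths(paths)
        if not best:
            raise RuntimeError("all paths down")
        return best[next(self._counter) % len(best)]
```

With two optimized paths and one non-optimized path, the selector alternates between the optimized pair and only falls back to the non-optimized path once no optimized path is live.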

NVMe/TCP – Where Multipathing Delivers Fast Wins

NVMe/TCP runs NVMe-oF over standard TCP/IP, so many teams adopt it for Ethernet-first data centers. Multipathing matters here because TCP fabrics still face link drops, switch maintenance, and NIC resets. A second path keeps the host online through those events.

On modern clusters, teams also use multiple TCP streams to increase parallelism. Simplyblock highlights “Parallelism with NVMe/TCP Multipathing” as a way to scale performance and enable failover for NVMe/TCP storage.

NVMe Multipathing in Kubernetes Storage Operations

Kubernetes Storage hides most storage details behind PVCs and StorageClasses, but the node still performs the I/O. Multipathing improves the node’s ability to keep volumes available during path changes, which helps protect StatefulSets and database pods from avoidable restarts.

Scheduling choices also matter. When a workload depends on host-local paths, strict placement rules can block rescheduling during node drains. Local Node Affinity often shows up in those designs, especially on bare-metal clusters.

How to Measure Multipath Behavior Without Fooling Yourself

A clean benchmark run does not tell you how the system behaves during a path event. Test steady-state performance first, then repeat the same test while you simulate failures, such as pulling a link, restarting a target service, or failing a storage node.

Use tail latency as your primary signal. p95 and p99 latency reveal failover spikes and queue buildup that average latency hides. Also track CPU per I/O because an inefficient data path can consume cores you want for workloads.
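A small sketch shows why the average can mislead. The latency samples below are made up for illustration, and the nearest-rank percentile is one of several common definitions; benchmark tools may use a different interpolation.

```python
import math
import statistics


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with pct% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms):
    """Return mean, p95, and p99 for a list of per-I/O latencies in ms."""
    return {
        "mean": statistics.fmean(latencies_ms),
        "p95": percentile(latencies_ms, 95),
        "p99": percentile(latencies_ms, 99),
    }


# Hypothetical data: 1000 I/Os at 0.2 ms, versus the same run where
# 20 I/Os stall for 50 ms during a simulated path failover.
steady = [0.2] * 1000
failover = [0.2] * 980 + [50.0] * 20

print(summarize(steady))
print(summarize(failover))
```

In the failover run, the mean rises to roughly 1.2 ms and p95 stays at 0.2 ms, while p99 jumps to 50 ms. Only the p99 figure shows what the stalled requests actually experienced.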

Distribution documentation often flags a key gotcha: some Linux distributions do not enable native NVMe multipath by default, so teams must turn it on and validate it on every node before they trust failover results.
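One way to validate the setting is to read the `multipath` parameter of the `nvme_core` kernel module, which reports `Y` or `N`. The helper below is a minimal sketch: the function name is hypothetical, and it returns `None` rather than guessing when the file is missing, for example because the module is not loaded.

```python
from pathlib import Path

# Location of the nvme_core module parameter on Linux.
MULTIPATH_PARAM = Path("/sys/module/nvme_core/parameters/multipath")


def native_multipath_enabled(param_file=MULTIPATH_PARAM):
    """Return True for Y, False for N, or None when the file is unreadable."""
    try:
        value = Path(param_file).read_text().strip()
    except OSError:
        return None  # module not loaded, or parameter not present
    return value == "Y"


if __name__ == "__main__":
    state = native_multipath_enabled()
    print({True: "enabled", False: "disabled", None: "unknown"}[state])
```

Running the same check in node certification and after every kernel upgrade keeps the fleet consistent.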

Operational Practices That Reduce Failover Spikes

Use one standard approach across the fleet, and keep it consistent during upgrades. These steps reduce surprise during incidents and simplify runbooks.

- Verify ANA reports at least one optimized path per namespace before you certify a node for production.

- Alert on path flaps, not only “all paths down,” because repeated switches can spike p99 latency.

- Separate storage traffic from general pod traffic when you can, so congestion does not mask a path problem.

- Re-test during maintenance windows, because maintenance often triggers path changes.

- Add QoS controls in multi-tenant clusters, so one noisy workload cannot crowd out latency-sensitive traffic.
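The path-flap alert from the list above can be sketched as a sliding-window counter. The `FlapDetector` class, its thresholds, and the way samples are collected are all assumptions for illustration; a real deployment would feed it from whatever path telemetry the monitoring stack already gathers.

```python
from collections import deque


class FlapDetector:
    """Count active-path changes inside a sliding time window."""

    def __init__(self, window_s=60.0, max_switches=3):
        self.window_s = window_s          # how far back to look, in seconds
        self.max_switches = max_switches  # switches in the window that count as flapping
        self._switch_times = deque()
        self._last_path = None

    def observe(self, timestamp_s, active_path):
        """Record one sample; return True when the path is flapping."""
        if self._last_path is not None and active_path != self._last_path:
            self._switch_times.append(timestamp_s)
        self._last_path = active_path
        # Forget switches that have fallen out of the window.
        while self._switch_times and timestamp_s - self._switch_times[0] > self.window_s:
            self._switch_times.popleft()
        return len(self._switch_times) >= self.max_switches
```

Three switches within a minute trip the default threshold, and the alert clears once the path stays stable long enough for those switches to age out of the window.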

Choosing a Host Multipath Model

Most teams pick between native NVMe multipath and Device Mapper Multipath (DM-Multipath). The choice depends on your OS standards and what your team already runs in production. Red Hat documents both options for NVMe devices.

| Area | Native Linux NVMe Multipath | DM-Multipath |
|---|---|---|
| Best fit | NVMe-oF-first estates | Mixed estates with long DM history |
| Path guidance | Uses ANA states from the NVMe stack | Uses DM framework; can support NVMe setups |
| Ops impact | Simple model for NVMe/TCP and other NVMe-oF transports | Familiar workflows for teams that already standardize on DM |

Predictable NVMe Multipathing with Simplyblock™

Simplyblock™ targets high-performance Software-defined Block Storage for Kubernetes Storage, with native NVMe/TCP support and a focus on efficient data paths for scale-out clusters.

Teams that run latency-sensitive workloads often pair multipathing with tenant-aware QoS. That combination reduces “all paths down” outages and limits noisy-neighbor impact during peak load. For OpenShift estates, Simplyblock™ also positions itself as a high-performance alternative to ODF or Ceph for NVMe/TCP block storage.

Where NVMe Multipathing Heads Next

More platforms now tie path health into SLO reporting, so ops teams can act on early warning signs like path flaps and rising failover time. Hardware offload also keeps gaining mindshare because DPUs and IPUs can take on more networking and storage work, which frees the host CPU for apps.

At the same time, teams are standardizing on NVMe/TCP plus active-active path designs to keep Kubernetes Storage upgrades and node drains from triggering avoidable volume stalls.

Questions and Answers

NVMe Multipathing ensures continuous access to storage by providing multiple paths between the host and NVMe device. This is critical for Kubernetes stateful workloads where downtime or single points of failure must be avoided.

NVMe/TCP supports multipathing. With ANA (Asymmetric Namespace Access), the host knows which paths are optimized and can reroute traffic if one path fails. This improves reliability and performance for NVMe over TCP deployments.

Unlike traditional SCSI multipathing, NVMe offers faster failover, native path management, and lower overhead. It’s better suited for modern software-defined storage and high-performance cloud infrastructure.

CSI drivers can support multipathing for NVMe devices, especially when combined with topology-aware provisioning. This helps keep persistent volumes available for mission-critical applications.

In addition to fault tolerance, NVMe Multipathing enables load balancing across multiple network paths. This boosts throughput and reduces latency, particularly in I/O-intensive workloads like databases or analytics platforms.